At this point, everyone has already heard that Microsoft is buying GitHub. Acquisitions of this scale take time, but most expect everything to be official by 2019. The general opinion online seems to be one of unease, and rightfully so. Even if we ignore Microsoft’s history of shady practices, there’s always an element of unease when somebody new takes over something you love. Sometimes it ends up being beneficial, the beginning of a new and better era. But sometimes…

Let’s not dwell on what might become of GitHub. While GitHub is the most popular web-based interface for Git, it’s not the only one. For example GitLab, a fully open source competitor to GitHub, is reporting record numbers of new repositories being created after word of the Microsoft buyout was confirmed. But even GitLab, while certainly worth checking out in these uncertain times, might be more than you strictly need.

Let’s be realistic. Most of the software projects hackers work on don’t need even half the features that GitHub/GitLab offer. Whether you’ve simply got a private project you want to maintain revisions of, or you’re working with a small group collaboratively in a hackerspace setting, you don’t need anything that isn’t already provided by the core Git software.

Let’s take a look at how quickly and easily you can set up a private Git server for you and your colleagues without having to worry about Microsoft (or anyone else) having their fingers around your code.

A Word on Remotes

The first thing to understand is that Git doesn’t strictly use the traditional client-server kind of arrangement. There’s no Git server in the sense that we have SSH and HTTP servers, for example. In Git, you have what’s known as the remote, which is a location where code is copied to and retrieved from. Most of us are familiar with supplying a URL as the remote (pointing to GitHub or otherwise), but it could just as easily be another directory on the local system, or a directory on another machine that’s been shared over the network. It could even be the mount point of a USB flash drive, which you could then physically move to other machines to keep them all synchronized in a modernized version of the classic “Sneakernet”.

So with that in mind, a basic “Git Server” could be as simple as mounting a directory on another machine with NFS and defining that as the remote in your local Git repo. While that would certainly work in a pinch, the commonly accepted approach is to use SSH as the protocol between your local machine and remote repository. This gives you all the advantages of SSH (compression, security, Internet routability, etc.) while still being simple to configure and widely compatible with different operating systems.
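To make the “a remote is just a directory” idea concrete, here’s a minimal sketch. It assumes a scratch directory from mktemp standing in for your USB stick or NFS mount; the `usb` remote name and every path are hypothetical. It creates a small repository, copies it to a bare repository at a plain filesystem path, and then pushes a new commit there just as you would to GitHub:

```shell
#!/bin/sh
set -e

# Scratch area standing in for your workstation and a mounted USB stick/NFS share.
BASE="$(mktemp -d)"
WORK="$BASE/project"            # normal working repository
STICK="$BASE/usb/project.git"   # hypothetical mount point of the shared medium

# A working repository with a single commit.
git init -q "$WORK"
cd "$WORK"
git config user.name "Example"
git config user.email "example@example.com"
echo "hello" > README
git add README
git commit -q -m "First commit"

# Copy the history into a bare repository (no working tree) on the shared medium.
mkdir -p "$BASE/usb"
git clone -q --bare "$WORK" "$STICK"

# The remote "URL" is just a path; push and fetch work as usual.
git remote add usb "$STICK"
echo "second line" >> README
git commit -q -am "Second commit"
git push -q usb HEAD   # push the current branch to the same name on "usb"
```

Git reads and writes the bare repository straight through the filesystem, so the same commands work whether that path is a local disk, an NFS mount, or a flash drive you carry between machines.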

Git over SSH

I’ll be doing this on a stock Raspbian install running on the Raspberry Pi 3, but the distribution of Linux you choose and even the device don’t really matter. You could just as easily do this on an old PC you have lying around in the junk pile, but the system requirements for this task are so light that it’s really an excellent application for the Pi.

The general idea here is that we are going to create a specific user for Git and make it so that user doesn’t have any shell access. We want an account that SSH can use, but we don’t want to give it the ability to actually do anything on the system, since anyone who uses the Git server will by necessity have access to this account. As it so happens, Git includes its own minimal shell, called git-shell, to do just that.

We’ll then prepare a place for our Git repositories to live, create a basic empty repository, and verify it’s working as expected from a client machine. Keep in mind this is going to be a very bare-bones setup, and I’m not suggesting you follow what I’m doing here exactly. This is simply to give you an idea of how quickly you can spool up a simple version control server for yourself or a small group using nothing but what’s included with the basic Git package.

Preparing the User Account

So let’s say you’ve already created the user “git” with a command like:

sudo adduser git

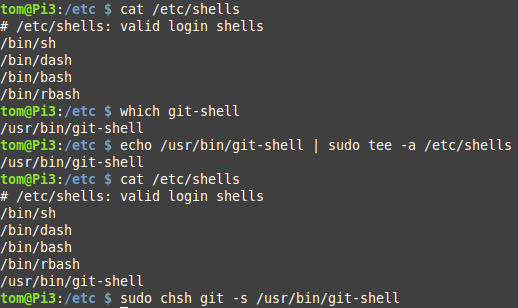

The next step is to see if git-shell is an enabled shell on your system, and if not (it probably isn’t), add it to the list of valid options.
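Here’s a sketch of how that might look on a Debian-style system such as Raspbian, assuming git-shell is on the PATH and the account is named “git”. The `add_shell` helper is hypothetical: it appends a path to /etc/shells only when an identical line isn’t already there, so the step is safe to re-run.

```shell
#!/bin/sh
# add_shell FILE SHELL_PATH (hypothetical helper): append SHELL_PATH to FILE,
# normally /etc/shells, unless an identical line is already present.
add_shell() {
    shells_file="$1"
    shell_path="$2"
    # -x matches whole lines, -F treats the path literally, -q stays silent.
    if grep -qxF "$shell_path" "$shells_file"; then
        echo "$shell_path is already listed in $shells_file"
    else
        printf '%s\n' "$shell_path" >> "$shells_file"
        echo "Added $shell_path to $shells_file"
    fi
}

# On the server, run as root (e.g. from a script started with sudo):
#   GIT_SHELL="$(command -v git-shell)"   # locate git-shell from the Git package
#   add_shell /etc/shells "$GIT_SHELL"    # make it a valid login shell
#   chsh -s "$GIT_SHELL" git              # switch the git user's default shell
```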

That last line is the one that actually changes the default shell for the user. If you try to log in as “git” now, you’ll be presented with an error. So far, so good.

Except we already have a problem. Since the “git” user can no longer log into the system, we can’t use that account for any of the next steps. To make things easier on yourself, you should add your own account to the “git” group. As you’ll see shortly, that will make the server a bit easier to maintain.

The command to add a user to an existing “git” group will look something like this:

sudo usermod -a -G git USERNAME
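If you want to confirm the change took, here’s a minimal sketch built on the standard `id` command; `in_group` is a hypothetical helper and USERNAME is still a placeholder for your own account.

```shell
#!/bin/sh
# in_group USER GROUP (hypothetical helper): succeed if USER belongs to GROUP.
in_group() {
    # id -nG prints all of the user's group names, space separated;
    # split them one per line and look for an exact match.
    id -nG "$1" | tr ' ' '\n' | grep -qxF "$2"
}

# After `sudo usermod -a -G git USERNAME`:
#   in_group USERNAME git && echo "USERNAME is in the git group"
```

Keep in mind that shells which were already running keep their old group list, so log out and back in (or use `newgrp git`) before expecting the new permissions to apply.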

A Place to Call Home

You might be inclined to just drop your repositories in the home directory of the “git” user. But for safety reasons it’s an account that can’t do anything, so it doesn’t make a whole lot of sense to put anything in its home directory.

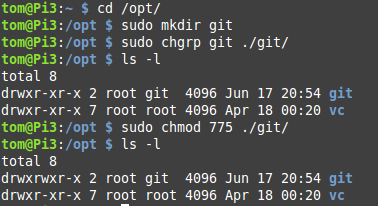

To make things easier on yourself, you should create a directory in a globally accessible location like /opt, and then change its permissions so the group “git” has full access. That way, any account on the system that is in the “git” group will be able to add new repositories to the server.

If you want to skip this step, just keep in mind you’ll need to use sudo to add more repositories to the server. If this is a one-off box that’s not really a problem, but if you want to open this up to a few other people it’s helpful to have more granular control over permissions.
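A sketch of that step, assuming /opt/git as the location and a group named “git” (`make_repo_root` is a hypothetical helper). Setting the setgid bit on the directory is an optional extra, not something the steps above require: it makes anything created inside inherit the “git” group rather than the creator’s primary group.

```shell
#!/bin/sh
# make_repo_root DIR GROUP (hypothetical helper): create DIR and give GROUP
# read, write, and traverse access to it.
make_repo_root() {
    dir="$1"
    group="$2"
    mkdir -p "$dir"
    chgrp "$group" "$dir"
    # 2775 = rwxrwxr-x plus the setgid bit, so entries created inside later
    # inherit GROUP instead of the creator's primary group.
    chmod 2775 "$dir"
}

# On the server, as root:
#   make_repo_root /opt/git git
```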

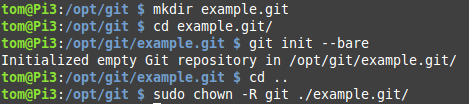

At any rate, once you have the directory created where you want to store your repositories, it’s time to create a blank repository to use. This is as simple as creating a new directory and running a single Git command in it.

Pay special attention to that last line. You need to make sure the owner of the repository you just created is the “git” user, or else you’ll get permission errors when you try to push code up to the server from your clients.
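A sketch of the repository step, assuming the /opt/git layout from above and a project called “project” (`new_repo` is a hypothetical helper). Passing --shared=group is an optional addition to the steps described here; it tells Git to keep the repository group-writable, which helps when several members of the “git” group push to it.

```shell
#!/bin/sh
# new_repo ROOT NAME (hypothetical helper): create an empty bare repository
# at ROOT/NAME.git, ready to receive pushes.
new_repo() {
    repo="$1/$2.git"
    # --bare: no working tree, only the history that clients push and pull.
    # --shared=group: keep files group-writable for everyone in the group.
    git init --bare --shared=group "$repo"
}

# On the server, then hand ownership to the git user:
#   new_repo /opt/git project
#   sudo chown -R git:git /opt/git/project.git
```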

The First Push

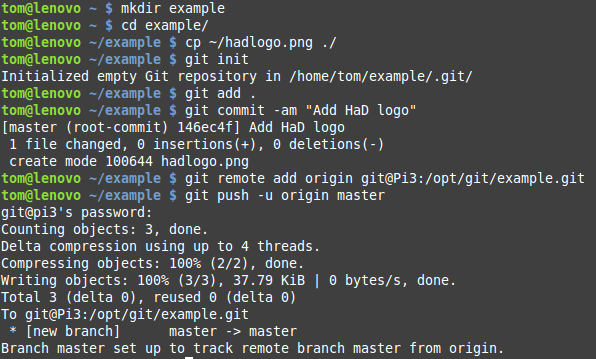

We now have a Git server that’s ready to have code pushed into it. From a client device, you would either create a new project to upload or else set this server up as a new remote for an existing Git repository. For the sake of clarity, here’s what it would look like to create a new local Git repository, add a file to it, and then push it to our new server.

Notice in the git remote add command that the username and hostname are from our server system. If you don’t have DNS set up for name resolution on your network, you could simply use the IP address here. Of course that path should look familiar, as it’s where we created the example repository previously.
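Here’s a sketch of the client side wrapped in a hypothetical `publish_new_project` helper. The hostname “gitserver” and the path in the example URL are placeholders; substitute your server’s name or IP address and the repository you created. The function body sits in parentheses so it runs in a subshell and its `cd` doesn’t leak into your shell.

```shell
#!/bin/sh
# publish_new_project DIR REMOTE_URL (hypothetical helper): create a local
# repository in DIR, commit one file, and push it to REMOTE_URL.
publish_new_project() (
    set -e
    dir="$1"
    url="$2"
    git init -q "$dir"
    cd "$dir"
    echo "Hello from the Pi server" > README
    git add README
    git commit -q -m "First commit"
    git remote add origin "$url"
    # Push the current branch and record origin as its upstream.
    git push -q -u origin HEAD
)

# From a client (hostname and path are placeholders):
#   publish_new_project test-project git@gitserver:/opt/git/project.git
```

Because the remote URL is just a parameter, you can point it at a local bare repository to try the workflow before the Pi is even on the network.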

Assuming no errors, you’re now up and running. You can git clone this repository from another computer, pushing and pulling to your heart’s content. New repositories will need to be manually added before code can be pushed to them, but beyond that, the workflow is the same as what you’re used to.

A Simple Start

That’s all it takes to create your own Git server, and in fact, we actually did a fair bit more than was strictly required. If you’re on a secure network and the server is only for one person, you could really skip the new user creation and shell replacement. Just make the bare repository in your home directory and use your normal SSH credentials. Conversely, if you wanted to open this up to a group of trusted collaborators, the next logical step would be to set up SSH public key authentication and add their keys to the system.
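For that next step, a minimal sketch follows. It assumes the “git” account’s home is /home/git and that each collaborator sends you their public key as a file (`add_key` and alice.pub are hypothetical). Each key is appended only once, and the directory and file get the tight permissions sshd expects.

```shell
#!/bin/sh
# add_key HOME_DIR KEY_FILE (hypothetical helper): authorize the public key in
# KEY_FILE for the account whose home directory is HOME_DIR.
add_key() (
    set -e
    ssh_dir="$1/.ssh"
    keys="$ssh_dir/authorized_keys"
    mkdir -p "$ssh_dir"
    touch "$keys"
    # With its default StrictModes setting, sshd ignores keys stored in
    # files or directories that other users can write to.
    chmod 700 "$ssh_dir"
    chmod 600 "$keys"
    # Append the key only if that exact line isn't there yet.
    grep -qxF "$(cat "$2")" "$keys" || cat "$2" >> "$keys"
)

# On the server, as root:
#   add_key /home/git alice.pub
#   chown -R git:git /home/git/.ssh
```

Since the account’s shell is git-shell, a key added this way lets its owner push and pull but not get an interactive login.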

But what if you want something a bit closer to the admittedly snazzy GitHub experience? Well, as it so happens there are a number of packages available that can provide a similar experience on a self-hosted server. There’s even a build of GitLab that you can run on a private box. Now that we’ve got the basics out of the way, we’ll be taking a look at some of these more advanced self-hosted Git options in the near future.

Fantastic, lose all your data when the Raspberry Pi inevitably corrupts the SD card.

I’m assuming most people who care about not losing this now boot from a hard drive. In my case an SSD with local and remote backup.

You do have your local repo, don’t you?

You make backups, don’t you?

Actually, thanks to Git’s distributed nature, a “server” out there isn’t the “single point of failure”, but just a convenient “meeting point”. If the server goes down in flames, just set up a new one and clone over your local repos, that’s all.

You should plan for catastrophic loss, whether your storage is an SD card or a pro-grade SAS.

I feel the same way, but these instructions can easily be applied to your regularly-backuped server with redundand disks, too.

-d +t

Read only SD cards are indeed a thing. log2ram is indeed a thing. My uptime on the pi is measured in months, and the sd card lifetime measured in years.

Why not just boot from the SD card and have /root and / on a USB key or a hard drive, as it should be?

It’s true that the SD cards have a long life. But that’s not the issue here. It’s the lack of a proper shutdown when the power glitches. RPI is useless for anything long term because of this. Not to mention they have no SD card latches anymore.

A read-only SD card is done in software: it’s simply not mounted rw, just ro. And if you are concerned about reliability at all, that’s what a cheap UPS is for. I have a 250VA unit that could keep my Pi up for days, if not more, including the drives.

Here’s a fun fact. SD cards will usually re-write blocks occasionally if they start to have recoverable read errors (which happens a lot). Some will preemptively rewrite blocks if they haven’t been written to in a long time. So, simply not writing to the SD card is only going to get you so far. The card can still find a way to corrupt itself. The lack of power-safety makes it a horrible standard IMHO.

You can use a power bank to feed the RaspberryPi and then add a wall wart 5V charger to charge the battery bank. Assuming you can charge faster than you can draw you just made a simple battery backup for like $40. I agree and have witnessed the SD corruption from Pi V1, Pi V3, Pi CM1 and Pi CM3.

I believe there is a project here on Hackaday that goes even further with the backup battery system with a small PCB. Here it is: https://hackaday.com/2016/03/17/battery-backup-for-the-raspberry-pi/

So mount a USB drive on the Pi and store your stuff there. Setup a read only boot on the SD card and put the majority of your system on a USB drive. I assume there are guides out there on how to do this. You can also rsync things across multiple drives/servers if you’re really paranoid.

It’s not ideal, but set up a power manager. I have a project where I have to shut down the Pi numerous times a day, so it receives power from an Adafruit PowerBoost 1000C driven off a small LiPo. An Arduino monitors an enable pin and, when it’s time to shut down, drives a pin high on the Pi that signals a script to issue the shutdown command. After a 10-second delay the Arduino turns off the PowerBoost. It ran for a couple of years with no issues whatsoever.

…and how often does that happen? I have who knows how many Raspberry Pis at home and at work, carrying out various tasks: Kodi box, digital signage, even a “panic button” to summon security. SD-card corruption has been almost a non-issue, save for one box where the card died.

If it’s a regular problem for you, perhaps you need to buy better SD cards.

Couldn’t that risk be minimized by keeping the repository data on a USB drive separate from the OS SD card? I’m assuming most repository activity consists of users downloading files, with very little community modification of files that would add wear and tear to the flash memory.

Sorry to inform you, with this kind of comment, you really deserve to lose your data. :)

As others have said, the repo also exists locally, and the local copy should be rsynced to your NAS, then the NAS synced to the cloud. The cloud is then synced between clouds.

What else…

“Even if we ignore Microsoft’s history of shady practices, there’s always an element of unease when somebody new takes over something you love. ”

Running GitLab on the NAS, so not even at the “unease” stage, which was the point of “distributed” and “open source”.

I’ve been trying Gitea for the past few weeks and I’m rather happy with it.

Much easier to install than GitLab and still has everything I need and more. I’m currently working on a script to migrate all my issues from Redmine to Gitea. (Although, to be honest, Redmine does a better job as issue tracker, but it’s kinda nice to have it all in one place.)

Gogs ( https://gogs.io/ ) is easy to set up and provides a similar web-UI as Github has. I use it myself for private projects and haven’t yet found anything to complain about.

Made me laugh that at the bottom it says ‘powered by Peach’ and then ‘peach’ links to the code on…. github

+1 for Gogs. Easy setup, available on multiple platforms, similar to github, and tons of features. Highly recommend you take a look at it!

Unfortunately it’s not that easy on Raspbian Stretch because it has an old mariadb-server (10.1.23). I personally ran into this: https://github.com/gogs/gogs/issues/4917

It should work on MariaDB 10.2, and also on 10.1 with the backported fix: https://jira.mariadb.org/browse/MDEV-14904

And of course you can use something else. Arch Linux (ARM) for example upgrades to newer packages all the time.

I’ve been using gitolite for over four years. Gitolite is a Git hosting utility with simple (that’s why I used it!) support for multiple users, access rights, machines, keys, etc. Highly recommended if you want to take the next step from the manual route. http://gitolite.com/gitolite/index.html

I’ve been using gitolite with great success for local personal projects.

I have customized a docker container https://github.com/flatlining/rpi-gitolite-docker based on existing ones so it’s even easier to deploy it to a rPi using https://blog.hypriot.com.

Keep in mind I’m far from a docker wizard, so any feedback is welcomed :D

Yupp, Gitolite is Git done right.

No enforcing of stupid cloning just for creating a pull request, but sensible user management within one repository. This way one can cherry-pick and rebase contributions, hard to do with Github. And users can simply fetch newest developments the opposite way. No need to wrestle with multiple remotes.

Why isn’t Gitolite more popular? Well, it doesn’t come with a shiny web interface. It just works.

The Pi3+ with Raspbian is now fully capable of running entirely from a USB-attached HDD instead of depending on a fallible SD card. It requires one simple change that is documented at the Raspberrypi.org website. In fact, the NOOBS installation kit will let you select the attached SDA drive to install your OS.

I didn’t know that, thanks for the hint!

But you still need to mind your backups. One of the big reasons for using a repo hosting service is that all the boring, but important, sysadmin stuff is taken care of.

Why do we even need to say stuff like this? In any sane reality “making backups” is one of the first computer skills you learn. It’s always been the case that you always need to make backups. It’s like saying “remember to tie your shoes before you go outside”. My wife knows nothing about software but she still knows that she needs to make backups of her stuff.

Unfounded paranoia, and a complete waste of time. If you have code that you’ll be sharing as open source, just pick a host that already exists; it doesn’t matter if MS, Google, or a company you hate is hosting it. You gave the code away as open source anyway. If you need something private, just pay, or use one of the free private options out there. If you need to create a Git server, you’d better have a d*mn good reason. If you really don’t want anyone to look at your code, just keep it as a local repo… Heck, store it on a USB drive, encrypt it to death, and share it that way. There are just so many features aside from Git that companies like GitHub and GitLab offer… and that’s not even counting redundancy, management, and scalability.

Imagine you were using a private GitHub repository for a closed-source product that competes with MS in any way.

What do you think they would do? Steal the code? MS doesn’t risk that. They don’t want to risk license issues. They have bought companies, then had parts of their code base re-written, because licensing wasn’t 100% clear.

Your case in a patent lawsuit might be a tiny bit easier to pursue if you, say, don’t store your source code on the defendant’s server farm… dunno. Just a thought.

Except the closed-source accounts are most likely going to be of the paid variety, complete with a legal contract. You know, the ones lawyers are supposed to be reading? In that case copyright infringement would be easier to question. BTW, how’s the SCO case against Linux going? ;-)

No, but they can expect change things about the tools to require you to use Microsoft products/services, for example they could easily with all new sign ups to require a microsoft account.

-expect

* for example they could easily require all new sign up’s use a Microsoft account

typing is hard and i am dumb :/

“We love GitHub login. Your GitHub account is your developer identity, and many users are accustomed to signing into developer tools and services (e.g. Travis, Circle) with their GitHub accounts. So, if anything, we may decide to add GitHub as a login option to Microsoft.”

etc

http://reddit.com/r/AMA/comments/8pc8mf/im_nat_friedman_future_ceo_of_github_ama/e0a53si

Imagine that you’re a student whose school Github has decided to permaban from having free private repos. Are we seriously going to pay them $7 a month indefinitely for something we could do with $10 of Pi Zero and an SD card?

I wrote a guide on how to set up a Git server using Gitea, which I put online at https://craig.stewart.zone/guides/building-a-git-repo/. It assumes a working knowledge of Git and passing familiarity with Linux, but I doubt either of those are in short supply for many Hackaday readers.

You don’t even need a server for Git. You can share changes with your team via e-mail. If you’re doing a solo project, then using Git locally is fine. Backups can be done any way you feel like.
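For the e-mail route, Git’s own tools are `git format-patch`, which turns commits into mail-ready patch files, and `git am`, which applies them as commits on the other end (`git send-email` can mail them for you once SMTP is configured). A minimal sketch with two hypothetical helpers, assuming both sides already share history:

```shell
#!/bin/sh
# export_last_commit REPO OUTDIR (hypothetical helper): write REPO's newest
# commit as a mail-formatted .patch file in OUTDIR and print the file's path.
# Use an absolute OUTDIR, because git -C changes directory first.
export_last_commit() {
    mkdir -p "$2"
    git -C "$1" format-patch -o "$2" -1 HEAD
}

# apply_patches REPO PATCH... (hypothetical helper): apply received patches to
# REPO as new commits, keeping the original author and commit message.
# Patch paths should be absolute for the same reason.
apply_patches() {
    repo="$1"
    shift
    git -C "$repo" am "$@"
}
```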

I can hardly see a problem with this idea.

Fun fact: the RPi 3 is lame at networking due to its architecture design (networking over USB). So the max is about 220 Mbps on Gigabit networking!!!

By the way, even the Orange Pi Plus’s onboard gigabit Ethernet manages about 500 Mbps.

You could have stopped at the word “lame”.

fun fact, you can download the entire Ubuntu installation DVD in 30 seconds with an rpi3. Oh wait, your internet is nowhere near that fast.

I think you may have killed him.

You may not know this, but I have 2 gigabit fiber internet connections at home, and 3 at my office. There are parts of the world where gigabit is not considered sci-fi or alien technology. All of my networks are at least gigabit; my colleagues’ time and mine are too valuable to waste on low-tech stuff.

But if you have a design which is wasting 80% of the available bandwidth, then that design is LAME, period.

wtf would you do this on a Pi for!? It’s not an offsite backup or means of sharing your codebase, it’s just an inconvenience to push code from the local repository that’s presumably on your desktop/laptop, and gains you approximately nothing.

Much better to get a cheap or free shell account on a VPS somewhere (I use digitalocean, but there’s loads more and cheaper still) and run git there, assuming you were paranoid or dumb enough to care that MS owns github.

I have two remotes from each of my local repositories: github, and my VPS (actually it’s also my webserver and two domains). So when I check-in, it’s basically:

git commit -a

git push www

git push github

and there I have two backups, the latter of which is public. If you don’t want to use someone else’s git service at all, it’s real easy to make the git on your VPS publicly accessible.

One of the worst comments I’ve ever seen on HaD, congrats.

If you are doing the same thing on a VPS that you are paying for, why not do it on your own hardware that’s under your own control?

And after you get that remote setup working, you’ll notice how much it’s slowing you down, you’ll say “let’s upgrade the internet”, and you can have a big fight with your wife about why you need to spend an extra $50 a month.

In the EU you can get gigabit fiber for less than $20-$30 a month.

Not in SW Munich… OTOH, many Biergärten have WiFi, which is relevant this time of year.

But here, if you live in a fiber neighborhood, it’s $30-40. And if you don’t, you’re just out of luck. And don’t hold your breath for Telekom to roll out the fiber, b/c they’re trying to amortize their copper with all sorts of DSL-based bandaids.

You recommend using the ‘git’ group to create repositories so you don’t have to use sudo, but then you have to use sudo to fix permissions on the new repository.

True. The right thing to do would be

sudo -u git git init --bare --shared

On my project list now!

Maybe put the whole thing in a hollowed out book titled “Git it Done”

Thanks!

Is GitLab fully open source? AFAIK the “Community Edition” doesn’t have all the features of the paid version.

Anyone out there planning to build a Git server should just use gitstorage: prebuilt and ready to go, 2 minutes to set up (click through the wizard), has a built-in Git client, everything gets encrypted if unplugged, and it sends encrypted backups to the cloud. A Git server about the size of a deck of cards (mountable) and under 200 bucks for 64GB.

Or just use Mercurial. Free, open-source, comes with an HTML UI viewer thing.

You can see MS’s thinking:

“Hey, Github, and we are gits aren’t we? I mean just look at windows10. We should definitely buy a github”

I think I’ve now figured out MS’s plan for GitHub. I bet the idea is to make all compiled programs available only through the Microsoft ‘app store’, thereby making that a (forced) thing and eventually controlling all programs that way.

It’s a roadmap to a closed system like Apple has.

And I guess the idea is to eventually take a good cut like Apple does and justify the cost of buying Github that way, as a road to an end goal.

For those of you who haven’t looked at Microsoft in the last few years and simply assumed they were still the 100% evil closed source villains of 2001 (https://www.theregister.co.uk/2001/06/02/ballmer_linux_is_a_cancer/), you might be surprised to learn that they’ve changed a lot. They still revere developers as their number one asset, and the last decade of geniuses that have gone to work for them have been brought up on Open Source.

A few years ago they realized they could no longer attract the talent they needed unless they adopted an open source philosophy in-house. So they did, even though it was often to their detriment. For example, Microsoft Team Foundation Server is arguably one of the finest high performance development environments ever built. But because people wanted to work on open source projects, a plugin to Visual Studio was created to support github as a source code control system. So you could either choose their high performance SQL based source system, with all the change management, tracking, and code review features you could want; or you could have github integration, where subsets of those features are roughly emulated in a much harder to use package. Developers have been flocking to github because it doesn’t cost thousands of dollars per license. (But because it’s harder to quantify, they’re still paying for it in wasted time; even though they don’t see it that way.)

Microsoft also ran codeplex for a decade, where they attempted to foster an open source community. They discontinued it less than a year ago, about when they decided to purchase github.

They’re no longer actively opposed to open source; it just took them a while to figure out how to keep making money in the face of it.

” So they did, even though it was often to their detriment. For example, Microsoft Team Foundation Server is arguably one of the finest high performance development environments ever built.”

Very arguable. Of course, now that they have GitHub they can bring those features to the paid product.

I would like to add that if you are running a server with multiple users that share a group (for example `git`) for the repositories, you should use the --shared option like this:

git init --shared --bare

This tells git to set group permissions correctly when pushing things. Of course you can do this afterwards with git config.

You could probably get away with sgid bit and/or ACLs but this is easier I think.
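For a repository that was created without --shared, here’s a sketch of the “afterwards” route, assuming a bare repository and a group named “git” (`share_existing_repo` is a hypothetical helper). The config setting governs files Git creates from then on, so the existing files are fixed by hand, with the setgid bit added to directories along the lines of the sgid suggestion above.

```shell
#!/bin/sh
# share_existing_repo REPO GROUP (hypothetical helper): retrofit group sharing
# onto a bare repository that was created without --shared.
share_existing_repo() (
    set -e
    repo="$1"
    group="$2"
    # Ask Git to create future files and directories group-writable.
    git --git-dir="$repo" config core.sharedRepository group
    # Existing files keep their old modes, so fix ownership and permissions:
    # X adds execute (traverse) only where it makes sense, i.e. directories.
    chgrp -R "$group" "$repo"
    chmod -R g+rwX "$repo"
    # setgid on every directory keeps newly created objects in GROUP.
    find "$repo" -type d -exec chmod g+s {} +
)

# On the server, as root:
#   share_existing_repo /opt/git/project.git git
```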

The tutorial is good for getting started. But for a production system, you would need to set up some backup system and probably port forwarding for remote access from outside your network.

For a simple 1 man system at home, it might not be the best solution. Git does not necessarily need a server. A network drive is good enough for a bare repository. For people with a NAS system at home something like this could be a better choice:

http://tiny.cc/c7a6uy