AI is currently popular, so [Chris Lam] figured he’d stimulate some interest in amateur radio by using it to pull call signs from radio signals processed using SDR. As you’ll see, the AI did just okay, so [Chris] augmented it with an algorithm invented for gene sequencing.

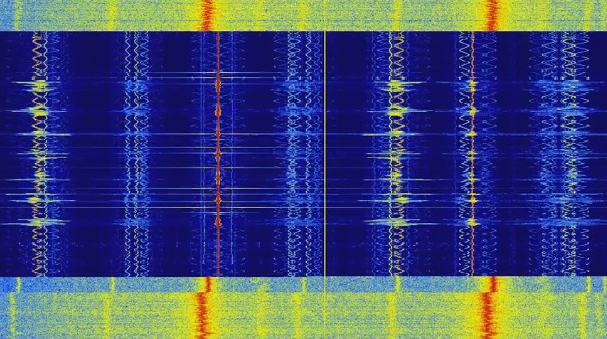

His experiment was simple enough. He picked up a Baofeng handheld radio transceiver to transmit messages containing a call sign and some speech. To receive them, he used a 0.5 meter antenna, a little connecting hardware, and a NooElec SDR dongle plugged into his laptop. There he used SDRSharp to process the messages and output a WAV file. He then passed that on to the AI, Google’s Cloud Speech-to-Text service, to convert it to text.

Despite speaking his words one at a time and making an effort to pronounce them clearly, the result wasn’t great. In his example, only the first two words of the call sign and actual message were correct. Perhaps if the AI had been trained on actual off-air conversations with background noise, it would have done better. It’s not quite the same issue, but we’re reminded of those MIT researchers who fooled Google’s Inception image recognizer into thinking that a turtle was a gun.

Rather than train his own AI, [Chris’s] clever solution was to turn to the Smith-Waterman algorithm. This is the same algorithm used for finding similar nucleic acid sequences when analyzing genes. It allowed him to use a list of correct call signs to find the best match for what the AI did come up with. As you can see in the video below, it got the call signs right.
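For a rough idea of how that matching step can work, here is a minimal Python sketch of Smith-Waterman local alignment used to pick the closest entry from a list of known call signs. It is not [Chris’s] code. The scoring values, the function names `smith_waterman_score` and `best_call_sign`, and the idea of comparing phonetically spelled-out call signs character by character are all assumptions made for illustration.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-1):
    """Return the best local alignment score between strings a and b.

    The match/mismatch/gap values are illustrative assumptions,
    not tuned for speech-to-text output.
    """
    prev = [0] * (len(b) + 1)  # previous row of the scoring matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            # Score for aligning a[i-1] with b[j-1]
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Local alignment: a cell never drops below zero
            curr[j] = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            best = max(best, curr[j])
        prev = curr
    return best


def best_call_sign(transcript, known_call_signs):
    """Pick the known call sign that aligns best with the noisy transcript.

    Hypothetical helper: assumes call signs are written out as
    phonetic words, the way they would be spoken on air.
    """
    text = transcript.lower()
    return max(known_call_signs,
               key=lambda cs: smith_waterman_score(text, cs.lower()))


if __name__ == "__main__":
    # Made-up phonetic strings, not real call signs
    known = ["alpha bravo charlie", "delta echo foxtrot"]
    heard = "alfa bravo charley said hello"
    print(best_call_sign(heard, known))
```

Because the alignment is local, the extra speech after the call sign does not count against a candidate. Only the best-matching stretch of the transcript contributes to the score.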

I thought this would just be about converting CW Morse to text – but it went in a different direction.

How would this handle shortwave radio stations? In a foreign language?

China still has a fair few shortwave stations blasting away, but I have no idea what they are saying.

What type of computer is required to process this? Will you post the code?

I have no use for this but I’m very impressed.

Such technology would be a fantastic boon for hard-of-hearing amateur radio operators for whom correct callsign identification from a voice signal is incredibly difficult even with the use of international phonetics.

He might have better results if he didn’t use an antenna. The RTLSDR is being overdriven and the radio’s mic may also be overdriven. There is quite a bit of distortion that doesn’t need to be there.

The audio being replayed from the SDR in the video sounds ok. That’s what the algorithm would be processing as well.

AI is bullSH$# you heard it here first.

I’d like to do the same thing with AM transmissions from my local airport. Also, I plan to use DeepSpeech by Mozilla instead of Google’s Cloud Speech-to-Text service.