People take their tabletop games very, very seriously. [Andrew Lauritzen], though, has gone far above and beyond in pursuit of a fair game. The game in question is Star Wars: X-Wing, a strategy wargame where combat between miniature ships is resolved by rolls of the dice. [Andrew] suspected that commercially available dice were skewing the game, and the automated machine-vision dice tester shown in the video after the break was the result.

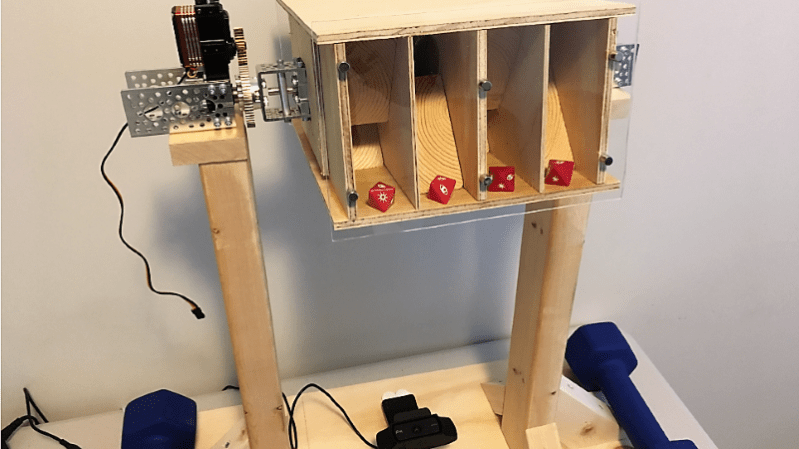

The rig is a very clever design that maximizes the data set with as little motion as possible. The test chamber is a box with clear ends that can be flipped end-for-end by a motor; walls separate the chamber into four channels to test multiple dice on each throw, and baffles within the channels ensure randomization. A webcam is positioned below the chamber to take a snapshot of each “throw”, which is then analyzed in OpenCV. This scheme has the unfortunate effect of looking at the dice from the table’s perspective, but [Andrew] dealt with that in true hacker fashion: he ignored it since it didn’t impact the statistics he was interested in.

And speaking of statistics, he generated a LOT of them. The 62-page report of results from his study is an impressive piece of work, which basically concludes that the dice aren’t fair due to manufacturing variability, and that players could use this fact to cheat. He recommends pooled sets of dice to eliminate advantages during competitive play.

This isn’t the first automated dice roller we’ve seen around these parts. There was the tweeting dice-bot, the Dice-O-Matic, and all manner of electronic dice throwers. This one goes the extra mile to keep things fair, and we appreciate that.

[gadwag] tipped us off to this one. Thanks!

“He cheated! He had loaded dice! I KNEW IT!”

Are you saying lucky dice are just imperfectly skewed towards a certain face? And jailed dice are just skewed to bad numbers?

“This scheme has the unfortunate effect of looking at the dice from the table’s perspective, but [Andrew] dealt with that in true hacker fashion: he ignored it since it didn’t impact the statistics he was interested in.”

Best way to deal with that, since the numbers on the dice are not put on in a random fashion. The easiest example to verify is the D6, since it’s quite easy to see the opposing faces. The opposing pairs are 1-6, 2-5, 3-4 on those. I think the only exceptions to that rule are D3s and pyramid D4s, which don’t have enough opposing faces.

I think these are D8s, but they have the added complication of being asymmetrically labeled especially for this game. He goes into some depth on the reason for this from a game play perspective, but it was all a bit baffling to me. I’m glad he abstracted it away.

Andrew explains in his writeup that these d8s are not uniquely labelled and so you can’t quite apply that logic here.

The green dice have 3 blank faces, 3 “evade” (arrow) faces, and two “focus” (eyeball) faces. The red ones have 2 blanks, 2 “focus”, three “hit” (coloured explosion) and one “crit” (explosion with unpainted center). If you treat hits and crits as equivalent (they roughly are) then you can work out the top of a red die from the bottom, but it’s not possible with a green die.
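For reference, the face counts above translate directly into the probabilities a perfectly fair die would show. This is only a minimal sketch: the dictionary and function names are made up for illustration and are not taken from Andrew's writeup.

```python
from fractions import Fraction

# Face counts per d8, as listed in the comment above.
GREEN_FACES = {"blank": 3, "evade": 3, "focus": 2}
RED_FACES = {"blank": 2, "focus": 2, "hit": 3, "crit": 1}

def fair_probabilities(face_counts):
    """Probability of each symbol if every physical face is equally likely."""
    total = sum(face_counts.values())
    return {face: Fraction(n, total) for face, n in face_counts.items()}

if __name__ == "__main__":
    for name, faces in (("green", GREEN_FACES), ("red", RED_FACES)):
        print(name, fair_probabilities(faces))
```

Any fairness test on these dice would compare observed symbol frequencies against these fractions rather than against a flat 1/8 per symbol.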

It’s of note that he actually finds an approximately 95% similarity to perfect abstract fairness. Despite writing a 60+ page report, he’s fumbled the basics of statistics, and ignored confidence levels. His own study disproves his conclusion. There is no benefit to be gained from the minor variation within these dice, and claiming that there is is dishonest and causing “panic” in an already reactionary and hysterical community.

@Wil

He describes his methodology, he explains when he considers results statistically significant, and he backs his report with data. You’re just complaining in a website comment without proving it with data: the minimum effort. So… You’re super-confident about being a lazy naysayer. Cool, bro.

This guy built a machine, taught a neural network, collected tons of data, and wrote a 60-page report to share and explain his findings and methodology. If you’re going to criticize him, you can afford to put just a modicum more effort into backing your critique with data. Yes you can. No excuses.

But I might be reading far, far too much potential into someone who ranted that X-Wing is a “reactionary and hysterical community”. I don’t think you’re taking this that seriously; I have my doubts you actually read the document instead of scanning for a few numbers to sophistically yell at. Maybe you’re just drunk, or maybe you’re just naturally petty and lazy. It takes real work to create, but it takes no work to dismiss someone’s work without good reason.

Is there any way to get into contact with Andrew Lauritzen? After having a deep look into the work, I found one interesting effect:

Especially for the standard dice, it seems that around 6000-10000 rolls, the chi squared value suddenly starts to increase, which shouldn’t be the case. My only explanation for that could be that the dice are worn down and develop extra imperfections. One can also see that the metal dice do NOT show this behaviour, which could correlate to their robustness.

A suggested variation of the experiments could be to calculate the chi squared value for batches of data (e.g. only calculate a chi squared value for 1000 dice rolls), this should show the effect even more, since the “old, good” data is not included here any more. A sudden increase of the chi squared value could correlate to a part of the dice breaking away. If the increase happens continuously, this might rather be a wear-out effect like one side getting sanded down by the continuous rolling.
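The batched variation suggested above could look roughly like this. It is a sketch under stated assumptions, not Andrew's actual analysis: the function names are hypothetical, the rolls are assumed to be a time-ordered list of symbol names, and the fair probabilities are those of the red die as described earlier in the thread.

```python
from collections import Counter

# Assumed fair probabilities for the red d8 (2 blank, 2 focus, 3 hit, 1 crit).
RED_FAIR = {"blank": 2 / 8, "focus": 2 / 8, "hit": 3 / 8, "crit": 1 / 8}

def chi_squared(rolls, fair_probs):
    """Pearson chi-squared statistic of the observed rolls against fair_probs."""
    n = len(rolls)
    observed = Counter(rolls)
    return sum(
        (observed.get(face, 0) - n * p) ** 2 / (n * p)
        for face, p in fair_probs.items()
    )

def batched_chi_squared(rolls, fair_probs, batch_size=1000):
    """One statistic per consecutive, non-overlapping batch of rolls.

    A trailing partial batch is dropped so every value is based on
    the same number of rolls and the values stay comparable.
    """
    full = len(rolls) - len(rolls) % batch_size
    return [
        chi_squared(rolls[i:i + batch_size], fair_probs)
        for i in range(0, full, batch_size)
    ]

if __name__ == "__main__":
    import random

    # Simulated stand-in data: a die that is fair for 5000 rolls and then
    # starts favouring "blank", purely to show the shape of the output.
    random.seed(0)
    faces = list(RED_FAIR)
    early = random.choices(faces, weights=[2, 2, 3, 1], k=5000)
    late = random.choices(faces, weights=[3, 2, 2, 1], k=5000)
    for i, stat in enumerate(batched_chi_squared(early + late, RED_FAIR)):
        print(f"batch {i}: chi2 = {stat:.1f}")
```

Because each batch is scored on its own, a step change between neighbouring batches would point to a sudden event such as chipping, while a slow drift across many batches would fit gradual wear.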

If you, Andrew, see my comment, I’d be happy to get a reply from you, so we could discuss this topic. If you look for Udo’s 3D World, you can also contact me directly.

Best regards,

Udo