The story of Linux so far, as short as it may be in the grand scheme of things, is one of constant forward momentum. There’s always another feature to implement, an optimization to make, and of course, another device to support. With developers’ eyes always on the horizon ahead of them, it should come as no surprise to find that support for older hardware or protocols occasionally falls by the wayside. When maintaining antiquated code monopolizes developer time, or even directly conflicts with new code, a difficult decision needs to be made.

Of course, some decisions are easier to make than others. Back in 2012 when Linus Torvalds officially ended kernel support for legacy 386 processors, he famously closed the commit message with “Good riddance.” Maintaining support for such old hardware had been complicating things behind the scenes for years while offering very little practical benefit, so removing all that legacy code was like taking a weight off the developers’ shoulders.

The rationale was the same a few years ago when distributions like Arch Linux decided to drop support for 32-bit hardware entirely. Maintainers had noticed the drop-off in downloads for the 32-bit versions of their distributions and decided it didn’t make sense to keep producing them. In an era where even budget smartphones are shipping with 64-bit processors, many Linux distributions have at this point decided 32-bit CPUs weren’t worth their time.

Given this trend, you’d think Ubuntu announcing last month that they’d no longer be providing 32-bit versions of packages in their repository would hardly be newsworthy. But as it turns out, the threat of ending 32-bit packages caused the sort of uproar that we don’t traditionally see in the Linux community. But why?

An OS Without a Legacy

To be clear, there hasn’t been an official way to install Ubuntu on a 32-bit computer in some time now. Since version 18.04, released in April of 2018, Ubuntu has only provided 64-bit installers. Any alternative methods of getting a newer version than that running on legacy hardware were unsupported and done at the user’s own risk.

Since ending support for installing on 32-bit hardware went fairly painlessly last year, Ubuntu concluded that this year it would make sense to pull the plug on providing packages for the outdated architecture as well. All modern software is either developed for 64-bit hardware specifically, or at the very least, can be compiled and run on a 64-bit machine. So as long as users made sure all of their packages were updated to the latest versions, there should be no problem.

This is a perfectly reasonable assumption because, on the whole, Linux has no concept of “legacy” software. Software developed for Linux is generally distributed as source code, which is then compiled by the distribution maintainers and provided to end users through a package management system. Less commonly, the end user might download the source themselves and compile it locally. In either event, regardless of how old the software itself is, the user ends up with a binary package tailored to their specific distribution and architecture.

Put simply, the average Linux user in 2019 should rarely find themselves in a situation where they’re attempting to run a 32-bit binary on a 64-bit machine. This is in stark contrast with Windows users, who tend to get their software as pre-compiled binaries from the developer. If the developer hasn’t kept their software updated, then there’s a very real chance it will remain a 32-bit application forever.

For this reason, Windows will allow seamless integration of 32-bit software for many years to come. But for Linux, this capability is not nearly as important. Most distributions do provide the ability to run 32-bit binaries on a 64-bit installation through a concept known as multilib, but it’s not uncommon to find this capability disabled by default to reduce installation size. It’s this multilib capability the community was worried they’d be losing when Ubuntu announced they would no longer be providing 32-bit packages. Support for 32-bit hardware had already come and gone, but support for 32-bit libraries was something different altogether.
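For readers who want to see which side of that divide a given binary falls on, here is a minimal sketch in Python. It relies on one fact about the ELF format used for Linux executables: the fifth byte of the file (`EI_CLASS`) is 1 for 32-bit objects and 2 for 64-bit ones. The function names are made up for illustration, and the script says nothing about whether the libraries the binary needs are actually installed.

```python
import sys

# Every ELF file starts with these four magic bytes.
ELF_MAGIC = b"\x7fELF"
# EI_CLASS (byte offset 4 of the ELF header): 1 = 32-bit, 2 = 64-bit.
ELF_CLASSES = {1: "32-bit", 2: "64-bit"}


def elf_class(header: bytes) -> str:
    """Classify the leading bytes of a file (hypothetical helper name)."""
    if len(header) < 5 or header[:4] != ELF_MAGIC:
        return "not ELF"
    return ELF_CLASSES.get(header[4], "unknown")


def elf_class_of_file(path: str) -> str:
    """Read just enough of the file at `path` to classify it."""
    with open(path, "rb") as f:
        return elf_class(f.read(5))


if __name__ == "__main__":
    # Usage: python elfclass.py /path/to/binary [more paths...]
    for path in sys.argv[1:]:
        print(f"{path}: {elf_class_of_file(path)}")
```

Pointing it at a few executables is a quick way to find out whether anything you run day to day is still a 32-bit build.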

Gamers Rise Up

So if there’s no extensive back catalog of 32-bit Linux software to worry about, then who really needs multilib support? For the user that’s running nothing but packages from their distribution’s official repository, it’s typically unnecessary. But Ubuntu, arguably the world’s most popular Linux operating system, is something of a special case.

Being so popular, Ubuntu is often the distribution of choice for the recent Windows convert, and has also become the de facto home to gaming on Linux thanks to official support from Valve’s Steam game distribution platform. This means the average Ubuntu user is far more likely to try to run 32-bit Windows programs through WINE, or Steam games which have never been updated for 64-bit, than somebody running a more niche distribution.

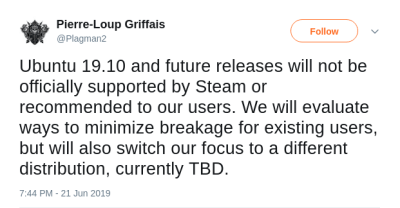

When WINE and Steam developers heard there was a possibility that Ubuntu would drop the 32-bit libraries their respective projects relied upon, both camps released statements saying that they would have to reevaluate their position on the distribution going forward. Ubuntu users were understandably concerned, and their very vocal displeasure at this potential scenario created a flurry of articles from all corners of the tech-focused media.

32-Bit Lives On, For Now

With the looming threat that WINE and Steam would no longer support their distribution, it only took a day or two for the Ubuntu developers to adjust their course. In a post to the official Ubuntu Blog, the developers make it clear that they never intended to completely disable the ability to run 32-bit “legacy” programs on future versions of Ubuntu. But more to the point, they promised to work closely with the teams behind WINE and Steam to make sure that any and all 32-bit libraries their software needs will remain part of the Ubuntu package repositories for the foreseeable future. For now, a crisis seems to have been averted.

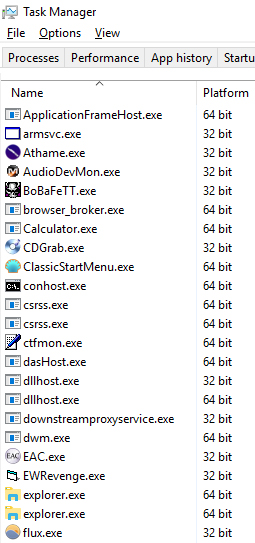

But for how long? At what point will it be acceptable to finally remove the ability to natively run 32-bit software from modern operating systems? This story started something of an internal debate here at the Hackaday Bunker. In an informal poll, a few of us didn’t even know if our Linux systems had the packages installed to run 32-bit binaries, and only one of us could name the exact application they use that requires the capability. Still others argued that virtualization has already made it unnecessary to run such software natively, and that any code so far behind the curve should probably be run in a VM from a security standpoint to begin with.
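If you are in the same boat and unsure whether your own system can run 32-bit binaries, a rough first check is to look for the 32-bit dynamic loader. The sketch below assumes an x86 machine with glibc, where dynamically linked 32-bit programs conventionally request `/lib/ld-linux.so.2`; the function name is hypothetical, and finding the loader does not guarantee that every library a particular program needs is installed.

```python
import os

# Assumption: conventional glibc loader path for 32-bit x86 programs.
I386_LOADER = "lib/ld-linux.so.2"


def has_i386_loader(root: str = "/") -> bool:
    """Return True if the 32-bit x86 loader exists under `root`."""
    return os.path.exists(os.path.join(root, I386_LOADER))


if __name__ == "__main__":
    print("32-bit x86 loader present:", has_i386_loader())
```

The `root` parameter is only there so the check can be pointed at a chroot or a mounted image instead of the running system.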

When was the last time one of our penguin-powered readers had to invoke multilib to run something that wasn’t a closed-source game or Windows program through WINE? If you’re running Windows, do you give any thought to what architecture the various programs on your machine are actually compiled for? Let us know in the comments.

Well, yes.

As a rule of thumb here, any machine with 4GB or less will get a 32-bit OS. The exception is gamers, who are more likely to accept a recommendation to install more memory; for them we install a 64-bit OS even if they haven’t bought the memory yet.

But normal users, the kind who will ask whether they “really, really” need more memory, get 32-bit. That kind is also more likely to have old 32-bit (or 16-bit) software they still need to run.

As for most Linux users not minding when Ubuntu stopped releasing 32-bit versions, that could be proof that many systems are set-and-forget and keep happily running with an occasional update. Also, many people will not upgrade to new versions (some of my machines are not to be updated either) because of the perception that they are slower on the same hardware.

One of the important points for defending Linux in conversations was hardware support. The argument that you can still run XT-IDE hard disks is something that always gets thrown around, along with parallel ports, parallel zip drivers, etc. If Linux starts removing support for a lot of that just “because it is hard”, then it starts moving toward the MacOS camp. No problem if that is their decision, but it changes the basic idea of what it was described to be.

If you want to run 15-year-old hardware, that’s fine, but you likely want to run a 15-year-old kernel on it and, with that, a 15-year-old distribution. Arguing that Linux should keep supporting devices that aren’t just out of production, but that not even copycat producers in China make anymore, that are out of any kind of sane support, and that are so out of date that replacing them ‘as is’ would likely cost more than an entirely new system, is verging on crazy.

I love the fact Linux runs my old hardware fine, but I have reasonable expectations of ‘old’ – up to 10 years means I can generally still make some kind of use of it; any older than that and I’m looking at devices which not only cost more to run, but generally can be replaced by something far simpler and less likely to go wrong.

However, this also entirely misses the point of what Ubuntu (not the kernel) is doing. Linux is still Linux, and if you want those old libraries then just install a distribution that still offers them (cough gentoo cough) or install an older version of Ubuntu. Accept that the working life of that device is likely coming to an end, or that you’re looking at having no more support (which, to be honest, you never had anyway, as I covered above).

And of course there are always outliers, such as weird business-dependent systems which “can’t” be replaced/reproduced for whatever reason. Strange how when things get critical they suddenly are replaced, and generally it ends up costing a lot more than if it had just been done when it should have been.

Your reasons could be right, but they do away with what sets Linux apart, and just make the whole situation the same as with the other OSes. Want to run X and Y? Keep running Windows 2000 then, because Windows 10 doesn’t support it anymore.

There are a lot of old machines out there that will run only the 32-bit versions of Linux. If it is discontinued, it is also killing the whole “try it on an older machine to give it new life and see if you like it” experience.

Whole situation would…if one ignores the whole open source part.

Yeah, like the HP laptop we bought in 2003.

A few months ago I loaded a small Linux distrib on it so we could use it to browse the web and play talk radio.

I disagree quite strongly. You may think that modern hardware is cheap, and modern enough older hardware even cheaper. But for many people, that kind of cheap is still too expensive. I know that may be hard to believe when your pockets are full. But there are still many people in the world who are in a position where they need a computer, but just can’t easily afford to buy one. Especially not another one because the old one is too old now.

Granted, I can’t prove that. But still, I can hardly doubt it.

And what is “old” anyway? Even Core 2 generation processors did not always support 64-bit. That’s not that long ago, maybe 10 years. And many of those are easily fast enough for everyday tasks. The computer in my workshop has a Core Solo at 1.66GHz. It’s fine for web browsing, reading datasheets, Arduino programming or using my USB oscilloscope. A newer one would bring pretty much no gain. I currently have 2 computers with 32-bit processors in daily use with modern software and no need to upgrade. A third will come along as soon as I get a new battery. A fourth just waits for me to fix a driver issue. Two of them have a very convenient form factor which is basically not made any more, except for very few niche products which are way outside of my budget. One just works fine. The last has a very nice keyboard.

There are many reasons for using older hardware with modern software. Even older hardware than you would use. It may just be still perfectly fine for its job. It may be a very nice device, for one reason or another. It might be the only one you can afford. It might have special, otherwise hard to come by qualities.

There is now a 32 Bit Arch edition. Manjaro has one too. Debian still supports 32 Bit. Devuan too. So people do care, and have use of it. Some may have need for it.

It’s something I see more often these days in the open source community: this easy dismissal of needs that are not one’s own. And that is something that feels odd to me.

“But for many people, that kind of cheap is still too expensive. I know that may be hard to believe when your pockets are full. But there are still many people in the world who are in a position where they need a computer, but just can’t easily afford to buy one. ”

I don’t know where you live, but where I live it’s possible to get old 64-bit computers for free (or very close to free). If you live in a part of the world where that’s not the case, it’s not impossible to arrange shipping from a part of the world where old PCs are plentiful.

Excellent, I’ll take 50 desktop computers. Will pay for freight shipping. When can you send them?

Thank you, Nullmark,

I agree with all you’ve stated. I also want to add-in that we’re piling poisonous, working computers into our landfills and further polluting this already-in-emergency planet of ours. I feel pure manipulation at some of the push to “modernize” because “your working computer is too old”.

It’s reckless & irresponsible, imho, to just say, “oh, toss it away & too bad for you if you cannot afford another new one”. Really dismissive of the “have-little/have-nots”.

“As a rule of thumb here, any machine with 4GB or less will get a 32-bit OS”

As a rule of thumb, no, if you want to directly address more than 4 GB, that rules out a 32 bit kernel, not a 32 bit OS.

Beyond that, you run the kernel that best utilizes the hardware it will be on. Period.

A 64 bit CPU with 512 megs would still get a 64 bit kernel. Other than slowing your computer down needlessly there are plenty of reasons not to use a 32 bit or any older kernel.

Converting your kernel’s 32-bit pointers to 64-bit, operating on them, and converting back to 32-bit will add significant slowdowns for no gain at all.

A 32 bit kernel will likely require more RAM than a 64 bit one that was compiled for that specific CPU, which is important when you have a low amount of RAM in the first place.

Not to mention there is over a decade of hardware accelerated opcodes for dedicated purposes you won’t be able to take advantage of.

None of this has anything to do with the OS libraries however.

Ugh… No…

If you run a 32 bit OS on a 64 bit machine with 4GB ram or less you still save a lot. You can even run it with more RAM (using the address extensions features of x86) and you save a lot. Compare sizes of full installs of 32-bit and 64-bit systems and you’ll see a difference. There are advantages with virtualization as well. All you need to do is not to enter the long mode on the processor, or whatever it was called.
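Part of the size difference mentioned above comes from pointers doubling from 4 to 8 bytes. As a rough illustration only (the node layout is hypothetical, and real compilers add alignment padding that this ignores), Python’s `struct` module can compute the packed size of a small pointer-heavy record under both widths:

```python
import struct

# Hypothetical list node: two pointers plus a 32-bit integer payload.
# The "<" prefix selects fixed standard sizes with no padding, so the
# results do not depend on the machine running this script.
NODE_BYTES_32 = struct.calcsize("<IIi")  # 4 + 4 + 4
NODE_BYTES_64 = struct.calcsize("<QQi")  # 8 + 8 + 4 (a C compiler would typically pad this further)


def list_mib(node_count: int, node_bytes: int) -> float:
    """Size in MiB of `node_count` nodes of `node_bytes` bytes each."""
    return node_count * node_bytes / 2**20


if __name__ == "__main__":
    print("pointer size of this interpreter:", struct.calcsize("P"), "bytes")
    for label, size in (("32-bit", NODE_BYTES_32), ("64-bit", NODE_BYTES_64)):
        mib = list_mib(1_000_000, size)
        print(f"{label}: {size} bytes per node, {mib:.1f} MiB per million nodes")
```

How much this matters in practice depends entirely on how pointer-heavy the software is; data such as images or text takes the same space either way.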

By the way, RPi 3 has a 64-bit processor, but the soft released for it is still 32bit. Riddle me why :P

Because it’s ARM and has plenty of registers even in 32bit?

“By the way, RPi 3 has a 64-bit processor, but the soft released for it is still 32bit. Riddle me why :P”

So users don’t have to download a different version of Raspbian for the Pi 3 vs. the 1/2.

Also the Pi 3 is a special case where, as I understand it, Broadcom got lazy and grafted AArch64 cores without bothering to update the peripherals, most notably the GPU and video codecs. There was a technical discussion somewhere that indicated getting a 64-bit Pi 3 up and running WITH hardware video acceleration was quite a challenge due to some of the video peripherals not playing nice with 64-bit pointers.

Uuuhhh… Dude… You can use way more than 4GB of ram with a 32-bit OS. It’s just any SPECIFIC process cannot address more than 32-bits of ram at one time.

You can only address up to *8GB of RAM* with the 32-bit OS, and you need to enable PAE in the kernel to do it. The RAM address space is a mess, and having the kernel switch and use the hack that is PAE is .. ***insane*** to do today due to the crazy overhead/slowdown. You can only address up to 4GB of RAM with each process in 32-bit land (correct cnlohr). I think the specifics here, and the grasp of commentators on the 32-bit topic is making it pretty clear that 32-bit should really go away, it’s just not supportable.

Rog Fanther saying 4GB of RAM gets a 32-bit OS is making a major mistake and hindering systems needlessly, for the reasons Dissy identifies.

While I do run a 32-bit machine from 2002, it’s mostly for kicks. “Holy cow, this thing still runs ?” is fun and all, but not practical in the power ($) spend to compute ratio.

WTF are you saying!? PAE extends the address line through paging, which is a regular way to address the memory anyway, and not to 8GB but to 64GB (extending address line from 32 to 36 bits).

Moreover, PAE is usually switched on in the Linux kernel by default.

By the way, 64-bit kernel would use even more complicated paging than a 32-bit one, as you have 2-level structure in protected (32bit) mode without PAE, 3-level in protected (32bit) mode with PAE and 4-level in long (64bit) mode.
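The numbers in this exchange are easy to check. The sketch below works out how much physical memory 32 and 36 address bits can reach, and lists the commonly documented x86 page-table index widths for 4 KiB pages (an assumption not spelled out in the comments above). Note that with PAE the virtual address is still 32 bits wide, which is why a single process remains limited to 4 GiB.

```python
GIB = 2**30


def addressable_gib(address_bits: int) -> int:
    """GiB reachable with the given number of address bits."""
    return 2**address_bits // GIB


# Index bits per page-table level, plus the 12-bit offset into a 4 KiB page.
PAGING_LAYOUTS = {
    "32-bit, no PAE": ([10, 10], 12),
    "32-bit, PAE": ([2, 9, 9], 12),
    "64-bit long mode": ([9, 9, 9, 9], 12),
}


def virtual_address_bits(levels, offset_bits):
    """Width of the virtual address translated by this layout."""
    return sum(levels) + offset_bits


if __name__ == "__main__":
    print("32 physical address bits:", addressable_gib(32), "GiB")
    print("36 physical address bits (PAE):", addressable_gib(36), "GiB")
    for name, (levels, offset) in PAGING_LAYOUTS.items():
        bits = virtual_address_bits(levels, offset)
        print(f"{name}: {len(levels)} levels, {bits}-bit virtual addresses")
```

This agrees with the 64GB figure and the 2-, 3- and 4-level structures described above, rather than the 8GB limit claimed earlier in the thread.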

“As a rule of thumb here, any machine with 4GB or less will get a 32-bit OS ”

64-bit x86 has much more going for it than just larger pointers. For instance, i386 is badly register-starved, meaning the compiler has to spill from registers to memory more often.

Um, no! This is a stupid comment and not thought through one iota. What modern desktop CPU is 32-bit? None being produced by Intel or AMD. Plenty of computers still sell with 4 gigs of DDR4. Most “normal” users start at 8 gigs and tend to upgrade to 16 once they see the trouble multiple tabs in a web browser will cause. And aren’t most normal PC users gamers any more? Most people I know who don’t know about computers ask people like me what to buy. Guess what we tell them? 16 gigs. Most normal users buy pre-built systems with an Intel or AMD CPU……all 64-bit. I think you should not talk in a public forum when you are so woefully ill-informed.

while on the topic of stupid comments and not thinking…

ALL modern “desktop” cpus are 32 bit.

let that sink in for a minute.

nearly all modern “desktop” cpus are 64 bit capable as well.

they aren’t truly 64 bit, they are 32 bit cpus with 64 bit extensions. they were designed to run legacy 16/32 bit software and have added 64 bit addressing support and newer instructions.

let that sink in for a minute.

Itanium was a true 64 bit cpu, the popularity (and speed when running current software of the time) of AMD’s Opteron/Athlon 64 implementation forced Intel to change direction and follow along adding 64 bit support on to their older 32 bit ISA as well.

almost all modern “desktop” cpus are in reality 32 bit designs with extra 64 bit addressing and new instructions tacked on, causing the cpu to have to “switch” execution modes and making a mess of things when running 64 bit and 32 bit at the same time. it takes a lot of overhead to run 32 bit apps under a 64 bit OS both in coding the OS and executing the apps at runtime. Windows does it by simply doubling up on certain DLL files to be both 32 and 64 bit and baking in the switching support for those kernel elements that require it.

the comment that 4 GB or less should probably run a 32 bit version OS is actually not that far off. There are systems sold today with 4 GB RAM as standard that cannot be upgraded, further the storage on these is typically 16 or 32 GB of flash that also cannot be upgraded by the end user. a 32 bit OS in that case makes sense as it will have a smaller install, cannot take advantage of greater ram, and the processor isn’t going to ever be “fast” no matter what AVX instruction is used.

And then there’s legacy owned / used / refurbished systems.

These will have older hardware installed and may no longer have supported drivers released and may never have had 64 bit drivers such as wifi adapters and graphics cards.

What if my application doesn’t require much RAM, but benefits from 64-bit processor architectures?

Got a chuckle out of that artwork. Great stuff!

yup, really good graphic today.

Teaching MIT scratch to primary school students on old hardware is difficult without 32 bit i386 libs, particularly if you want to run scratch v2.0 as a standalone programming environment without internet access, as opposed to the newer web based 3.x versions.

I’m wondering if you are conflating two things. Multilib has very little to do with the kernel. It is a linker system where-by different sets of static and dynamic libraries are maintained on the file system and invoked based on an ABI (Application Binary Interface) switch. That switch is defined by -march/-mcpu at compile time and the ELF EABI type at run-time. The article is entirely unclear what you are talking about. Multilib is never going away nor changing. It’s used for more than 32 vs 64 bit systems – such as with FPU or without FPU (soft FPU) on most architectures. It’s also used to select variations in otherwise homologous sized instruction sets like 32-bit ARM Cortex A vs M vs R.

You’ve also entirely assumed a 32-bit vs 64-bit distribution debate relates to x86 vs ia64. There are many more architectures that will continue to require 32-vs-64 based distributions for years to come. ARM for example.

AFAICT, you’re just commenting on some distros not building 32-bit LIBRARY support into their 64-bit distributions using Multilib – which is typically done with “compat-*” type packages; and dropping 32-bit compiled distributions altogether.

Basically saying: If you still have a Pentium 4, you’re fucked…

But it keeps me warm !

Or an Atom.

It has been a long time since Atom was 32 bit only. An Atom that old would be slower than a Pi 3, so the only good uses I can think of for such a machine are along the lines of running legacy x86 software such as old games or the few things it can do better than a Pi as in a DIY router or NAS, none of which depend on using the latest desktop distribution at all.

Memories of pulling apart an old netbook to mount a giant cpu cooler to overclock it well past any reasonable limitations. The main board ran out of power before I found the upper limits of that chip.

My 2008 Atom is running Mint 19.1 64 bit just fine. I use it to backup over my network.

07/06/19 from “screenfetch -n”

atom@Fileserver

OS: Mint 19.1 tessa

Kernel: x86_64 Linux 4.15.0-54-generic

Uptime: 3m

Packages: 1927

Shell: mateterminal

Resolution: 1024×768

DE: MATE 1.20.0

WM: Metacity (Marco)

GTK Theme: ‘Mint-Y-Blue’ [GTK2/3]

Icon Theme: Mint-Y-Blue

Font: Noto Sans 9

CPU: Intel Atom 330 @ 4x 1.596GHz

GPU: Mesa DRI Intel(R) 945G

RAM: 325MiB / 1983MiB

“Any alternative methods of getting a newer version than that running on legacy hardware was unsupported and done at the user’s own risk.”

Lol, all of open source is generally done at the user’s own risk, supported or not. (I’m a Linux fan, but this line made me laugh)

…not to mention, all those stick computers (Intel “Compute Stick” clones and compatibles) and “Mini PCs” sold on eBay and Amazon, such as from MeeGoPad and the like. Those all have the nifty and remarkable (and remarkably fiendish) misfeature of a 32-bit UEFI, thus requiring a 32-bit bootloader (bootia32.efi, your search engine of choice should easily point you to the Github link where some kind soul has stashed it for us relatively-ordinary peasants) to go with your 64-bit OS. I run Ubuntu most of the time now because, unlike Mint, its installer doesn’t cough up a lung at that stupidity. Don’t ask me why, I don’t write code; I just know how to execute it ;)

You dreadfully misinterpreted that commit by Linus.

While “i386” has often been considered a generic “designator” for all 32-bit x86 systems, that commit message did NOT remove 32-bit support entirely. It ONLY removed support for actual 386 (Dx/SX) models.

486 continued to be supported (but might be gone by now in 2019?), Pentium continued to be supported, Pentium 4 continued to be supported.

I’m pretty sure that’s what the article says, you may have dreadfully misinterpreted the article.

Did maybe you stop reading after that line? That isn’t what the article claims at all.

Why does the unproductive life of a Gamer even get a say in anything? If you cannot afford a 64-bit PC, you’re probably spending too much time gaming, instead of making a living. Work some OT and get a new rig and get with the times.

We’re not talking about 32-bit hardware, we’re talking about closed-source 32-bit binaries.

Read the article again. It is not the gamer’s system, it is the support for games that are still 32-bit that they are complaining about.

I’m against discontinuing 32-bit support for now but, to a point, the part about game support could be negotiated between Ubuntu and Steam to give the game developers some time, say two years, after which the support would be removed. And really do it. If it is in the best interests of everybody, and is conducted in an organized way, while also trying to offer solutions to the edge cases, then it should work and people wouldn’t complain that much.

The thing really needed is solidly designed yet efficient “translator” libraries acting as a shim between 32bit binaries and 64bit libs.

Because 32bit ain’t ever gonna go away nor will all the software based on it be irrelevant.

But Canonical went with a ham-fisted approach and got rightfully burned for it.

“The thing really needed is solidly designed yet efficient “translator” libraries acting as a shim between 32bit binaries and 64bit libs.”

That IS Multilib. It exists already, Canonical just needs to continue to ship it.

> the part about the game support could be negotiated between Ubuntu and Steam to give the game developers some time, like, two years, then the support would be removed.

> And really do it.

The heck are you talking about? Nobody is going to rewrite/recompile decades old games against 64-bit libraries, yet people still want to play them :D And they do.

Yeah, REAL gamers have jobs so they can buy the fastest, coolest, Most RAM, Most Procs, most bandwidth, most renders, and keep pushing the limits of personal computing.

Crysis anyone?

(tongue in cheek)

Let’s not forget a super spring-y mechanical keyboard and ridiculously long mouse pad.

Forget Crysis, it better be able to run Minecraft.

Super long mouse pads and mechanical keyboards are not just for gamers – just sayin ;)

The one with an edgy design (aka futurist, but by someone with no design skill) and full of ridiculous light effects. But of course, the final touch: something with Dragon or Falcon written on it.

Squeaky wheel gets the grease. People with superfluous needs have been driving tech for years. That’s why you can’t find computer monitors with anything but 16×9 aspect ratios, that’s why phones/tablets/notebooks are so thin they bend just looking at them and batteries need to be glued in, that’s why American sports cars all look like they came off the set of the latest Transformers movie… the list goes on.

You’re so far off-base it’s astounding.

Don’t shame the user.

Also, the problem isn’t the 32-bit games here, it’s that the most popular gaming platform – Steam – does not provide a 64-bit version, and the linux community isn’t profitable enough for them to put much work towards doing so. Killing 32-bit software kills one of the most popular software platforms on the planet (regardless of ‘whose fault that is’).

If you don’t shame the user out of reflex, then you tend to understand their problems better.

GAMERS RISE UP

WE LIVE IN A SOCIETY

BOTTOM TEXT

I build my systems using LFS as a distro. I currently have both 32 & 64-bit versions in active development. A Pentium-III system is in daily use and will continue to be.

Also, as far as virtualization – virtualization has always had serious issues with video acceleration. Especially on desktop GPUs. (NVidia now supports GPU virtualization, but only on Quadro/Tesla parts to my knowledge.)

Pass-through for Intel.

Considering the fact that people tend to run machines for much longer now, it doesn’t make sense to ditch backward hardware support. I haven’t rebuilt my machine for over 6 or 7 years now…. still chugging along, and still gaming on it just fine. old as dirt, but a hefty graphics card update to a Hawaii GPU sure did the trick to keep it doing everything that needs to be done. Granted the processor is showing some age, but I’m still happy with it from a hardware perspective. Having said that, I can bitch about shit software all day long. Seems nobody (almost) knows how to fucking build a web page or a program these days. it’s sad. They prioritize flashy gui and graphics over actual functionality, usability, and ubiquity. the last part is important, because it’s difficult for our brains to organize memory on how to use multiple different user interfaces, and if every app has a different interface, and nobody follows basic rules, then it makes it harder for your customer to use the app/site, it makes them less likely to use it, and it makes them more frustrated. I really miss the days of web design when it was literally just a bar of menus at the top or the side or both, and just very well organized so you can get to literally everything you need in 2 or 3 clicks of a mouse from the home page. These days, in the days of “let’s spread an article out over 30 slides”… it’s fucking garbage, and it breaks continuity with the reader, and makes it more difficult for them to actually use the site. I am frustrated with almost every site I go to these days. I don’t use shitty phone apps because they are all laid out differently. Guess what, doesn’t bother me. Those apps don’t add value to my life, at all. I just use uBlock Origin and a host of other programs to fuck all of that garbage in the asshole. anyway, how about next time someone designs a piece of software, they look at the last 60 years of computing design, then look at the last 30 years of how things actually look.
The entire idea of a computer is that it adds value to your life, is easy to use, yet powerful as a tool when you need it to be, and flexible enough to do what you need it to do with minimal input. Software and web designers these days ignore all of those rules. This article outlines a great example of all of this. The internet, and life as a whole is made better when we don’t artificially turn a few billion pounds of computer hardware into computer scrap a decade or more early… You know, if you care about our planet at all, a handful of people always make the decision to gain profit and drop backward support, and that ends up in billions of pounds of e-waste, that is either burnt, or just dropped into a lake where people drink directly from…. /pathetic.

“Seems nobody (almost) knows how to fucking build a web page or a program these days. it’s sad. They prioritize flashy gui and graphics over actual functionality, usability, and ubiquity”…

This is partly true, but a lot of the blame belongs to the customer (the guy that pays for the site to be built) and the managers that pander to the customers while constantly wanting to leave their fingerprints on the end product. Yes, there are plenty of web programmers that have NO clue about programming, but if you really want someone to blame, look higher up.

agreed.

+1

Funny rant but true!

Likewise my CPU is 6 or 7 years old, but it’s still 64 bit. It’s finally time for an upgrade now that Ryzen 3rd gen is out. 50% more performance for less than 50% the power consumption.

When was the last 32bit Intel desktop chip sold? I just checked out my old Intel E2180 from 2007 but that was 64bit so sometime before then?

Paragraphs

are

a

thing.

You may send me your CV if you’d like to be my editor.

Physician, heal thyself. You are clearly not a UI guru with that indigestible post.

One of the main points of the Graphical User Interface was “Learn to use one program and you know how to use them all!”.

All the major operations of all programs on any given GUI *are supposed to be identical*. Thus you only need to learn once how to open and save files, how to print, how to move, copy, cut, paste etc.

The OS/GUI is supposed to provide APIs and common dialogs *to make the programmer’s work easier*.

But what have we gotten from all that? GUI Programmers who code as though they’re writing programs for MS-DOS where every individual program had to handle *everything* except the basic file system tasks.

Of course some of the biggest violators of the GUI rules are the companies that make the operating systems. Apple shredded, burned, then peed on the ashes of their own user interface guidelines starting with the release of QuickTime 4. Microsoft Office (especially the file open/save dialogs) pretty much ignores Windows’ UI conventions. Then there’s Adobe software that is even worse about ignoring the host OS UI style.

They’ll even do stupid crap like making a web browser bypass the OS user input device API. Remember Firefox 4.0? The initial release didn’t support *any* scrolling input devices. The very next update added scroll *wheel* support, poorly. No help for laptop users because Firefox ignored the scroll zones on their trackpads, and it was that way for quite a while. WHY did the Firefox people want to take on the extra work of doing that instead of simply interacting with the user input like all other software?

Another issue I’ve run into with various releases of Firefox since 4.0 is that it tends to slow down a lot when I’m burning a disc – doesn’t matter that the data going to the disc is NOT coming from the drive where Firefox is installed or where its cache is, or that the source drive and disc burner are on a completely separate controller – the browser would damn near come to a halt – while everything else would continue to work fine. WTH is Firefox doing that it’s so badly affected by data movement with which it should have no contact?

that’s likely an issue with your southbridge, or whatever chip handles your i/o for your devices. Don’t get me started on how intel and AMD both make shit chipsets and the entire market suffers from the ubiquity of only two chipset manufacturers. my old nvidia chipset (circa 2007) did things my 2012 chipset won’t do and chipsets to this day will struggle with. but i digress. other than that, you pretty much said it. and in paragraph form. i’m sure the ocd fella above will appreciate that.

Once some website wrote an article on “how we changed in 15 years” and the comments were as follows:

– much more clear before

– ads were not that disturbing though visible

– content was better

I still use some older software because it takes less space, provides more features, has a clear interface and is not so resource hungry.

Both the Intel/Altera and Xilinx toolchains require multilib to run under Linux (not for any good reason; as far as I can tell the broken part is something to do with a tcl/tk wrapper that makes the Linux implementation of said script glue look like the 32-bit cygwin port that’s their golden test bench and nuts to you if you want a full 64-bit version because they lost the source code years ago or something stupid like that). The actual compiler tools are of course all 64-bit (and fall over dead compiling hello world if you give them less than 4 GB (well, not quite that bad, but close)), but the glue scripts invoking all the right stages in the right sequence and calling out to the tcl hooks for timing constraints and whatnot still rely on a hacky 32-bit infrastructure.

If I were less pragmatic and more dogmatic I’d say “well, just one more reason to use the Lattice parts with the open source toolchain and no such silly constraints as broken legacy vendor tools” but ultimately Intel/Altera and Xilinx have higher density FPGAs and broader product lines and spiffier hard blocks (memory controllers, ARM cores, PCIe endpoints, etc.) so realistically many projects would be a squeeze at best with the Lattice parts. *Sigh*

Maybe one day there will be an open source toolchain for Intel or Xilinx parts. Now that Moore’s law has hit a wall the time from one generation to the next has grown long enough that it’s a bit less like pissing into the wind because there’s a fighting chance that an open toolchain could become stable before the parts it targets go end-of-life (hell, Altera finally caught up to the point where their proprietary toolchain became stable before the part was obsolete).

It’s not just gamers. There are plenty of examples of software that ONLY comes in 32-bit binaries and thus requires 32-bit libraries. I’ve read about people in companies that use older 32-bit proprietary software for their business, simply because nothing better has been made yet to replace it. Kill off 32-bit library support and you kill off their ability to do their business. Right now, on my home Linux Mint installation, if I try to uninstall all 32-bit libraries, a bunch of software that I use gets uninstalled as well. And very few of them are games. This isn’t a simple problem.
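For anyone who wants to find out which of their binaries are actually 32-bit, here is a minimal Python sketch. It relies only on the ELF header layout: the file starts with the magic bytes `\x7fELF`, and the fifth byte (`EI_CLASS`) is 1 for 32-bit and 2 for 64-bit objects. The function names `elf_class` and `elf_class_of_file` are made up for this illustration, and the sketch does not look at library dependencies at all.

```python
ELF_MAGIC = b"\x7fELF"
# EI_CLASS values from the ELF header: 1 = ELFCLASS32, 2 = ELFCLASS64
EI_CLASS_NAMES = {1: "32-bit", 2: "64-bit"}


def elf_class(header: bytes) -> str:
    """Classify a file from its first five bytes (hypothetical helper)."""
    if len(header) < 5 or header[:4] != ELF_MAGIC:
        return "not ELF"
    return EI_CLASS_NAMES.get(header[4], "unknown")


def elf_class_of_file(path: str) -> str:
    """Read just enough of the file at `path` to classify it."""
    with open(path, "rb") as f:
        return elf_class(f.read(5))
```

Calling `elf_class_of_file()` on each executable in a directory would give a rough inventory of what still depends on 32-bit support.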

Sure, on Windows that kind of stuff happens. But that’s why Windows has such seamless 32-bit support, as mentioned in the article.

But is there an example of this actually happening on Linux? How many businesses out there are seriously relying on a closed source outdated Linux binary? The numbers just don’t add up.

Quartus 2 comes to mind, or older licenses of Eagle. Still valid but no 64 Bit compatible binary in sight and never will be.

” I’ve read about people in companies that use older 32-bit proprietary software for their business, simply because nothing better has been made yet to replace it.”

Not necessarily a bad thing. There’s lots of software out there written for “alternative” OSes which work just fine, and practically free.

^This

Scientific software is another example. Nearly all the scientific software binaries I use on a regular basis are 32 bit. I use them because there either isn’t anything better or there is no 64 bit version. Upgrading is a highly non-trivial problem.

What are the names of these programs?

Back in 2004, when we were doing graph analysis in university, we quickly hit the address space limit of 32-bit processors with our data set (we made heavy use of mmap) and resorted to 64-bit machines.
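The arithmetic behind that limit is easy to sketch in Python. This is only a back-of-the-envelope illustration: the helper names are hypothetical, the 6 GiB data set size is an assumed example rather than the actual figure, and the usable address space of a real 32-bit process is smaller still, since the kernel reserves part of it.

```python
GIB = 2**30  # bytes in one gibibyte


def address_space_bytes(pointer_bits: int) -> int:
    """Upper bound on bytes addressable with pointers of the given width."""
    return 2**pointer_bits


def fits_in_address_space(dataset_bytes: int, pointer_bits: int) -> bool:
    """True if a data set could, in principle, be mapped in one piece."""
    return dataset_bytes < address_space_bytes(pointer_bits)


# Assumed example: a 6 GiB data set that we would like to mmap in full.
dataset = 6 * GIB
print(address_space_bytes(32) // GIB)      # GiB addressable with 32-bit pointers
print(fits_in_address_space(dataset, 32))  # cannot be mapped whole on 32-bit
print(fits_in_address_space(dataset, 64))  # fits comfortably on 64-bit
```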

Not to mention that science should be about repeatability and the ability to replicate. If you someday want to expand the work or find issues, using the exact same method to reproduce your results is important- so when you want to change things, you can reduce the number of variables.

Also, if upgrading, having the old version and the new version of the analysis software around is important- do you get the same results from the same dataset?

Thank you. I wish we worked together.

It also would have broken every scanner more than 3 years old that uses binary drivers. So many of those are 32bit. Scanners have a life much longer than that.

Your point about 32-bit scanner and other hardware drivers is quite valid but sadly seems to have been largely overlooked.

I have a Brother printer/scanner which I bought brand new from a retail office supply outlet just 1-1/2 years ago. The Linux drivers which Brother supplies are 32-bit. Am I now supposed to junk a perfectly good, and nearly new, piece of hardware just for the sake of “progress”?

You should be able to run it in 32-bit OS inside a VM in Linux.

I am running XP in a VM in VirtualBox under Win10. They took away XP Mode from Win7. :(

The VM is setup without network access and I spin it up just long enough for a few scans.

I appreciate the tip! Thanks. :-)

That’s quite cumbersome for just scanning.

From my HDD, spinning up the VM takes like 10 seconds. Run a few scans and take another 10 seconds to save the session. This is actually faster than my old XP box which I took the license from.

I rarely do scanning these days, so it isn’t too bad.

The old scanners without a contact image sensor (CIS) are perfect for scanning PCBs, with a large depth of focus of about an inch or so.

Mustek’s flatbed scanners had quite a good depth of field so scanning non-flat items worked well. OTOH every Brother flatbed scanner I’ve encountered is focused precisely to the top side of the glass. If it’s not in intimate contact with the glass, it scans blurry.

One of the reasons I keep an old HP, big and bulky, but they don’t make them like they used to.

Scanners have long been an issue with getting ‘unsupported’ by their manufacturer. Mustek was one of the outliers, providing support on Windows 2000 for their parallel port scanners when other companies like UMAX claimed that was impossible. ISTR doing some minor hacking on a Mustek LPT scanner driver to make it work on XP, and sometime later Mustek supported some LPT scanners on XP.

UMAX’s older SCSI scanners were an interesting thing. Plug one ‘not supported’ into an XP system and Windows would detect the scanner as seven identical devices. Some outfit in Australia figured out a hack to stop that. Still didn’t support various older models. I dug up drivers from a UMAX site in Europe (IIRC Germany) and dug into the INF and other setup files. Turned out that most of the SCSI scanners UMAX had declared to be no longer supported used files that were in the then current driver package, unchanged from the previous packages. So I did some copying and pasting from the INF and other text files from old to new and combined with the Australian multiple detection patch we had many old UMAX scanners working in XP. (I sent the people who did that fix my how-to on editing the setup files.)

Then Adobe released a new version of Photoshop that mysteriously lost the ability to access some of those old scanners through TWAIN, so a separate scanner program had to be used. My assumption was UMAX ‘politely asked’ Adobe to update Photoshop to not work with their older scanners.

Sounds almost like a HaD project, using a 555 timer. :-) Or at least a Raspberry Pi.

It always seemed weird to me that I need multilib to run a 32-bit application in Wine. I’m already using a compatibility layer! Why can’t the 64-bit wine libraries be written to provide a 32-bit interface for a 32-bit windows application?

I have this cute old mini itx board that still works, but the processor is a VIA C3 800A. IIRC, the processor is 32-bit only, but also doesn’t have several multimedia extensions that are commonly assumed by newer distros, so the drop off of 32-bit support doesn’t really make much a difference in this case.

The other problem this board has is that the CD drive I have on hand is USB, and none of the old live CDs I have lying around support that, so it’ll start booting and then crash because the OS can’t find the CD drive it booted from. It’s an easy problem to fix, I just think it’s funny.

One of the reasons https://www.plop.at exists, but that requires a floppy drive.

does the kernel even use 486 instructions?

there are only 6 new instructions: XADD, BSWAP, CMPXCHG, INVD, WBINVD, INVLPG.

or has it just gotten so slow it needs the extra speed of a 486?

In my opinion the only reason for dropping x86_32 support, is the missing sane way to read the program/instruction pointer.

(to make position independent code, it needs to call the next instruction and pop the stack to get a position offset)

The difference between 386 and 486 that matters here is that on the 486 you are guaranteed to have an FPU in the system while a 386 might not have one.

Linus’s commit wasn’t dropping support for the 386 per se, but rather dropping support for FPU-less systems, which happened to include only the 386, as support for all other FPU-less chips had already been ejected.

There was the 486SX (with a silent ‘U’) without the FPU.

Found a repair method for those 486SUX that involved a sledgehammer. Very therapeutic.

Also, Early 586/Pent-up-CPU’s were (IIRC) mandatory disable-the-FPU in the “you spent how much to run slower than a 486?” era.

As you can see from the linked commit, cmpxchg and bswap were emulated on 386-class CPUs. IIRC i386 also has some bugs and other system-level funkiness that require workarounds or more complicated code than later generations.
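To give a feel for what emulating those two instructions involves, here is a rough Python sketch of their semantics. It is not the kernel’s actual emulation code, the function names and the dict standing in for memory are made up for illustration, and this `cmpxchg` is not atomic, which is exactly the property the real instruction provides in hardware.

```python
def bswap32(value: int) -> int:
    """Reverse the byte order of a 32-bit value, as BSWAP does on a register."""
    value &= 0xFFFFFFFF
    return (
        ((value & 0x000000FF) << 24)
        | ((value & 0x0000FF00) << 8)
        | ((value >> 8) & 0x0000FF00)
        | ((value >> 24) & 0x000000FF)
    )


def cmpxchg(memory: dict, addr: int, expected: int, new: int):
    """Compare-and-exchange semantics (NOT atomic in this sketch).

    If memory[addr] equals `expected`, store `new` there.
    Returns (swapped, value_seen_before_the_operation).
    """
    current = memory[addr]
    if current == expected:
        memory[addr] = new
        return True, current
    return False, current
```

Without a native CMPXCHG, software has to make that compare-and-store sequence uninterruptible some other way, which hints at why carrying the fallback was a burden.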

I have no idea what I’m talking about but I have a musty memory of Debian dropping support for 80386 CPUs in their i386 kernels because the CMPXCHG instruction made a big difference

i686 adds the CMOV instruction, and seems a reasonable starting point for most 32-bit software these days.

It’s nice to keep supporting the older chips but maybe the kernel should simply be forked at that point? A 386-focused kernel could dump a lot of code relating to PCI-Express, SATA, AGP, and other things that don’t exist in actual hardware anywhere.

PCIe and SATA are current technologies (maybe you were thinking of PCI-X?). Some of the “legacy” peripheral support is also used by non-x86 hardware. Even the ISA and PCI lives on in embedded and industrial hardware (eg. PC/104).

Oh, no, I meant forking the kernel supporting actual 80386 code (and maybe 486 even) into a new project… then from the new project, removing supporting code for slots and peripherals that didn’t exist on those Packard Bells of the 1990s :P

You have hardware to run this on? If I recall correctly, one of the reasons that the Linux kernel team killed off the 80386 code was that they had no one with hardware to actually test the workarounds for the CPU-level bugs, so they couldn’t be sure the code even worked despite all of the effort needed to keep it in place.

I‘m using Wine (386 version) to run LTspice on my Linux system… hmm…

isn’t the latest ltspice also 64bit?

Hi fonz, I checked the latest release and yes, LTspice now seems to be available as 64 bit version. I’ve updated my older release and it now says ‘LTspice XVII(x64)’. I wasn’t aware of that. Thanks!

32 bits? Kids these days. DOS runs fine with 16 …

@aeva linux and live boot from usb cd reader. same problem here, with a Acer Aspire es1-111m and a samsung usb dvd writer. Solved with “system rescue cd” 6.0.3.

And I run Slackware here who has properly grokked 64 bit on Intel, and oddly enough on ARM, and even beginning to do so on S/390. (But since the fellow who builds for S/390 has a day job, selling that for SuSe I believe the release is TBD.)

Oh and Tom? There is a big colony of penguins all wearing fishing vests looking for you, with nifty hats as well. But no tribbles, those are all with two others…..

I have a few machines that have IO busses that I need that are getting obsoleted in modern hardware. And that obsolete hardware is 32-bit. As those machines are “light” they don’t normally compile their own stuff. I do that on a more powerful server. That server is running a 32-bit userspace until this day: To be able to compile 32-bit compatible binaries.

The HTK library requires multilib, because it compiles as 32-bit even on 64-bit machines.

Seems like this was a bug that got found ~5 years ago?

http://markstoehr.com/2014/04/09/Installing-HTK-on-64-bit-architectures/

I would say it is funny, but in fact it is really sad what Linux has turned into. Back when I started it was two 3.5″ floppy images you had to download the software to write. People were encouraged to run it on old computers. When the first version of X came out and after you got the config file right for your monitor and keyboard it started to really catch on. For a good decade it was the OS to run on old hardware. It just ran circles around Windows while running in a very resource starved environment. But slowly the prebuilt binaries and distributions started taking over and Linux started being a bit of a pig. And this situation has not improved much in the 2 decades that have followed. I have seen what was once a lean, tight thing become big and bloated. Most users have no idea what most of the services they are running do, and that is not a bad thing because a lot of them are never used by many users. Simple things have become complicated. It is not quite as bad as Microsoft, but Microsoft had a good head start. Let’s just say it seems to be following a similar trajectory for the most part. Kudos to the tiny core guys, but for the most part Linux, at least in its current form, defines bloatware, and I don’t think this is going to get better any time soon. There once was a time when it would have been unthinkable to drop support for old hardware. That was its forte. I guess those days are long gone. As I said, sad…

So don’t use Ubuntu. There are plenty of distros that still specialize in being light weight and the kernel itself doesn’t drop things until no one is using it. Even on my state of the art hardware I prefer something more light weight. (Debian + XFCE)

People may have said it was good for old hardware, but at least some of us wanted Linux because it was a way to run Unix. I finally tried Linux in 2000 (having been convinced by articles around 1981 that Unix was desirable) on an 8meg 486, and rapidly decided the hardware was too limited. So six months later I splurged $150 on a 200MHz Pentium. Much better.

If you read old books about Unix, the expectation was that for every install you’d compile at least the kernel, fitting it to your hardware.

Most people stopped doing that in recent times, so the Linux kernel kept getting bigger. But it’s not so much bloat as endless drivers for all the hardware out there. You generally don’t have to fuss about hardware.

Distributions got larger as more apps came along. Once they became too large for floppies, the CDROM meant lots of space for more apps. Hardware likewise increased so more drive space for the extra apps. They don’t use up RAM space unless you run them.

I paid less for my refurbished i7 with 8gigs of RAM in 2016 than I did for my OSI Superboard with 8K of RAM in 1981, so the “bloat” doesn’t bother me.

Michael

486SX says otherwise

Running Mint (MATE) at home, Ubuntu at work. Depending on WINE on both systems to run some Windows 32-bit exe programs for CAD software. Not using Windows for these old Windows CAD programs, as I’ve found running the programs under WINE on Linux a much more pleasant and stable experience than trying to worry about the horrors of keeping Win 7 up to date, or keeping Win 8.1 free of metro interface junk, or keeping Win 10 free of m$ shovelling unwelcome feature updates that break compatibility and tamper with settings. The systems are relatively new hardware, can’t remember specs off top of head but they’re both 64-bit hardware obviously, but I frankly expect to be needing to make use of that legacy exe software under WINE for DECADES to come. I have no problem if distros don’t want to add new 32-bit capabilities, no one is trying anything new with 32 bits, but I for one really need them to keep working as well as they do for current/legacy stuff.

as a long time 64-bit proponent, going way back to the athlon64 days, im actually glad to see 32 bit finally going away. will it break legacy software (mostly games), probably. but vms are so good now i probably won’t notice much. im not against running a win XP/7 image (nay even a win98 image in some cases) in a vm so i can play some old game that would otherwise be unplayable. and you can virtually airgap those oses which are likely full of security holes at this point. this may finally allow me to adopt linux as a primary operating system without abandoning legacy software entirely.

Still have to worry about speculative execution vulnerabilities in processors breaching the airgap in your VM.

e.g. Intel MDS, zombieland etc. Have to keep the host side VM software/microcode up to date.

It is easy to take a snapshot for a clean install with the old OS with final patches, so that you can rollback to it or as starting point for new VM.

There are also containers with less overheads than a full VM. Win10 started supporting HyperV containers in 1903 release.

I’m not sure a lot of the commenters here know what the differences between the 32-bit and 64-bit architectures are, or, even bigger, the core memory model. If anyone had an appreciation for these details they would readily say: leave 32-bit behind now and go 64-bit only. Read up on it if you are interested, but stop showing ignorance of the situation.

THIS!

Sorry guys, but you’re plain wrong. In the X86 universe, amd64 is a good upgrade even for a 1mb system. The main reasons are more registers, a simpler memory architecture, and lower multitask protection latency. If people aren’t using 64 bits now it’s just sloth, from both users and devs, and complaining about pointless things. Also, the stuff being retired is the support for booting a 32-bit operating system. Nothing prevents establishing a compatibility libraries version (like FreeBSD’s linuxulator) and maintaining compatibility for the next 20 years. Also, computers now being manufactured for the next year are UEFI Class 3, without BIOS, so much of the legacy compatibility will be lost forever in the next 4-5 years. Next is 32-bit booting from firmware. 32 bit is a major burden in the X86 architecture maintenance, so if Linux doesn’t drop it, Intel will, and dropping hardware support with lots of software out there would be a catastrophe. Better to drop the trash now.

I just reinstalled multilib on my 18.04 LTS so that I could compare the behaviour of an old (and working) DS emulator borrowed from a 32-bit backup and the fresh (but buggy) packaged version.

“32 bit is a major burden in the X86 architecture …”

I have always wondered why Intel built it that way, looking at other 32-bit systems from back then.

If you read Donovan’s Operating Systems book, one of the case studies covers the IBM 370. Though not an “exact copy”, the 386 was a kind of 370 on a chip. Far less performance and fewer features, obviously, but the way protection was done, the choice of pages or fixed segments, and the permission levels were inherited from that architecture.

X86-32 and X86-64 are not exactly equivalent or compatible. The most important difference is the task system: 32-bit has it, 64-bit does not and only has context saving, so it isn’t just a “register enlargement” upgrade like other architectures had; it is a complete change. Most of 32-bit is bloated with lots of microcoded features that most PCs don’t use today. However, for the sake of security and compatibility, they have to be maintained and put together with 64-bit features, meaning lots of extra and often dirty work, only for the 2 guys that remain using that stuff (especially the older protection/multitask features).

For BIOS-based PCs, real mode was mandatory; now it will be unnecessary because of UEFI and the existence of different power-on paths that initialize the CPU in long mode (AMD has some docs on that). The easiest way to keep X86 alive is to drop all this burden, especially considering the latest security issues with cache and paging. A good way to do this is to drop real mode and the almost never used features of x86-32, allowing only the compatibility mode, running without privileges. Most of this 32-bit software is user-mode software, so in most cases this will not be a problem.

This is going to be a serious problem for folk still running legacy software.

What legacy software you might ask?

Wine … 32 bit windows software

What uses this?

… lots of DSP toolchains and older CPUs typically for embedded work – where embedded != arduino.

Linux statically compiled Arm compilers … for older ARM5 codesourcery, ARM TCC et al

“So upgrade?” –

Yeah, products that are near the end of their lifetimes (but still need supporting by manufacturers under various contracts) aren’t going to ever get upgraded.

So … yes. Dropping full 32-bit support is going to be a huge problem for everyone that needs 5/10+ years of continuity.

>Put simply, the average Linux user in 2019 should rarely find themselves in a situation where they’re attempting to run a 32-bit binary on a 64-bit machine.

1) The repository maintainers rarely bother to include older software. Many times they won’t bother to include updated software either, or any software they themselves don’t use. (Really: the repository software distribution system is just an Appstore run by disinterested amateurs)

2) The average user does not compile from source because they literally don’t know how to.

3) The user shouldn’t ever have to do that in the first place.

The end result is that many software packages that people want to use are only available through random sources on google in the form of binary blobs, and telling people to upgrade is stupid because the providers have either gone away, or “upgraded” the software to some stupid money-grabbing subscription/service scheme, or made it worse.

Slackware.

Just repurposed a Pentium 4 desktop into a home server for Minecraft and stuff using FreeBSD two weeks ago. No issues whatsoever.

Come to the dark side :P

No! FreeBSD is not the OS you are looking for… move along…

Please don’t encourage the horde of “bloat is my middle name” programmer wannabes to attach themselves like vampires to the cheetah of OSs.

Ah the grandfather of forking the greedy corporations much better ideas. Agreed.

On Linux? Never, AFAIK.

Sure do! I stay 64-bit as much as possible. 32-bit only apps on Windows, are generally either a sign of abandoned development, outdated build tools, or dev incompetence.

There are plenty of 32 bit x86 devices still in production. For examples, see http://www.vortex86.com/products/Vortex86EX – they are used in many of the low-end fanless PCs (e.g. Ebox series) used for industrial control. If Linux gives up on 32 bit x86, the software people will just go back to Windows XP.

I can’t imagine you would be running Ubuntu on that, and the Linux kernel still works on 32 bit, with the exception of the early (buggy) i386 range that no one seems to be using anymore. The kernel maintainers had to keep workaround code for it that no one was even sure worked, because there are few, if any, i386 CPUs still in use.

Those aren’t meant to run a standard desktop distribution, though. ARM is dominating that space anyways.

This is really Wine’s fault for being a POS. It is Linux after all, you can compile all the 32-bit binaries you want, so why doesn’t the only project left on Earth that still requires 32-bit binaries for whatever reason simply distribute them itself?

Because under Linux the point is that the distribution supplies all the libraries. If the individual programs start to roll their own, then it’s no different than how Windows does it, and you run up to DLL hell with all the different programs trying to install different versions of the same library.

Also ArchLinux does still have 32-bit binary support, just not straight out of the box: you have to uncomment the line for the multilib package database in pacman.conf and resync. Still not entirely sure why, but it does.

I solved the problem definitively! After 10 years of using Linux (Kde neon / Kubuntu). Don’t get blackmailed by Canonical who doesn’t care about desktop and community. If he wondered he would still be releasing new 32bit software only! So under Wine is useless. My choice will never use Linux on hdd only sometimes try virtual.

As a IT-admin working in a mixed Win/Lin/Mac environment, it would be sad if Canonical ditches 32bits support. Me and my colleagues are using Ubuntu as the main working OS and one even is using a 32bits version. ( We have one win10 laptop for skype for business)

Many enterprise systems are managed with programs that only work in 32bit systems, like the vmwarerc (VMware remote console), that we use for managing our (VMware) virtualmachines — only work if the 32libs are installed and there are more systems out there, still heavily in use that are managed with 32bit apps.

Is Canonical in a “gentleman agreement” with Microsoft: “in not to molest the MS-hegemony in the enterprises”?

I use the 32 bit version of Firefox because Firefox developers don’t provide a tunable that says “pretend this system only has X GB of RAM or try not to use more than X GB of RAM”. I have noticed that Firefox often doesn’t actually need to use that much RAM – if you install it in a VM with 3GB RAM it works fine and doesn’t try to use 4GB of RAM and cause it to swap.

As such switching to the 32 bit version of Firefox ensures it can’t use more than 4GB of RAM. With my usage it does not make sense for Firefox to use more than 4GB of RAM. I have no problems have tons of tabs and windows open with 32 bit Firefox.

Similarly, lots of normal desktop apps don’t need to use more than 4GB of RAM and if they do they’re usually hitting a bug, and most desktop users would be better off having their apps crash after hitting the 4GB limit than their apps successfully allocating 64GB of memory and then dragging down the entire OS along with other apps into Crash Zone or Swap Hell.

For stuff like 7-zip and video encoding sure get the 64 bit version. But you wouldn’t want your email client to be using 6GB of RAM…

Problems in updating and recycling computers.

Linux and Windows have stopped supporting x32 (i386) computers in 2015.

Which means that XP computers cannot be updated, as you cannot download the appropriate programs.

This means that devices that have the physical capability of 2GB and large hard drives cannot be updated, as their CPU does not manage RAM in the same way and cannot do hardware multi-tasking.

Software suppliers say there is no call for versions of the software compatible with these systems, but I think it is just easier to have only one operating system supported. This leaves a whole load of working systems going to waste which could be given to children, older people or third countries to reuse.

Even if you manage to download an operating system such as Windows 7 or Lubuntu (Linux), you cannot update them as suppliers refuse to segregate their updates into x32 or the newer x64 versions. This means large downloads, which then cannot be installed.

Windows 7 still requires a product ID to be purchased, even though it is no longer supported!

It is also not possible to transfer your old windows 7 product ID to another machine after an upgrade.

People like Google do not provide a version of chrome browser, which is required to access some websites, so you are left with a version of Internet Explorer which is banned and therefore unusable. It also makes it very difficult to download new programs to update these computers!

Ubuntu only permitting x64 downloads and upgrades basically cuts off all of this market to recycle computers into working units, which are much needed by the poor and digitally disenfranchised in the UK and elsewhere.

By all means carry on producing x64 software, but leave open a route to x32 software for those who cannot achieve this high goal. AND a plea from a Linux newbie: produce a GUI-based installation program that can take a tar package and install it without the user having to go to the command line!

Seems to me there’s still a good market in newer 64-bit computers and older 32-bit machines that people are still using because they either want to, can’t afford a new machine right now, or still want to use a useful machine. Just because something is older doesn’t mean it’s no longer useful and economically worthwhile to keep using. I’m separated now, but a couple of years ago I gave the wife and daughter each a nice new 64-bit desktop PC and laptop with Windows 10 Professional and 8GB memory each. They run well for what they were designed for. I’m still using a 12 year old 32-bit PowerSpec N100 Mini Tower (2008) that I got from Micro Center. It was sold with Ubuntu 10.4 LTS on it, only 1GB memory and a crummy Celeron CPU. I’ve upgraded it as much as possible: good 4GB 667MHz DDR2 memory, its third upgraded power supply, one of the better Intel Core 2 Duo CPUs at 2.6GHz, a PCIe x1 card with USB 3.01 output connections and plate for the digital audio, DVD ROM, DVD +R DL ReWriter (SATA), two internal SATA hard drives (boot is 251GB and data drive is 1TB) and, most importantly, recently upgraded from Ubuntu Mate 16.04.x LTS to 18.04.5 LTS. Now, for an older machine it runs pretty well with a reasonable amount of speed. Of course, I’d like for myself a nice new 64-bit Mini Tower with the same and updated hardware features (like 16GB DDR4 or better memory) for my Ubuntu Mate. That PC would fly and have great processing power. I’ve had other things I’ve had to spend my money on. The girls have better hardware than I do, but I’m the one that knows how to get the most out of them. In the meantime, I use the 12 year old computer. For new and older computers it’s important to have whatever flavor of Ubuntu you use available in 32-bit and 64-bit. If Ubuntu Mate was available as 20.04.x LTS, I would have upgraded to it. By the way, I have a Raspberry Pi 3 B+ too. Since it only has 1GB memory on it, it only has Ubuntu Mate 18.04.x LTS on it.
I may use it for Amateur Radio use later. New Mini Tower or not, getting a Raspberry Pi 4 with 8GB memory, even without the extra hardware, may be desirable and easier to get. It would support Ubuntu Mate 20.04.x LTS and supports USB 3.1, e.g. for hard drives. But, in the meantime, I use the 32-bit Ubuntu Mate 18.04.5 LTS. If I had a good 64-bit machine, I’d use it for Ubuntu Mate 20.04.x LTS, but I don’t. If you want to compete against Microsoft, who has most of the market share, you need to provide support for both 32-bit and 64-bit platforms of the software. Even MS still supports 32-bit software on 64-bit machines for backwards compatibility.