The Ada programming language was born in the mid-1970s, when the US Department of Defense (DoD) and the UK’s Ministry of Defence sought to replace the hundreds of specialized programming languages used for the embedded computer systems that increasingly made up essential parts of military projects. Instead, Ada was designed to be a single language, capable of running on all of those embedded systems, that offered the same or better level of performance and reliability.

With the 1995 revision, the language also targeted general purpose systems and added support for object-oriented programming (OOP) while not losing sight of the core values of reliability, maintainability and efficiency. Today, software written in Ada forms the backbone of not only military hardware, but also commercial projects like avionics and air-traffic control systems. Ada code controls rockets like the Ariane 4 and 5, many satellites, and countless other systems where small glitches can have major consequences.

Ada might also be the right choice for your next embedded project.

Military-Grade Planning

To pick the new programming language, the DoD chartered the High Order Language Working Group (HOLWG), a group of military and academic experts, to draw up a list of requirements and pick candidate languages. The result was the so-called ‘Steelman requirements’.

Crucial in the Steelman requirements were:

- A general, flexible design that adapts to satisfy the needs of embedded computer applications.

- Reliability. The language should aid the design and development of reliable programs.

- Ease of maintainability. Code should be readable and programming decisions explicit.

- Easy to produce efficient code with. Inefficient constructs should be easily identifiable.

- No unnecessary complexity. Semantic structure should be consistent and minimize the number of concepts.

- Easy to implement the language specification. All features should be easy to understand.

- Machine independence. The language shall not be bound to any hardware or OS details.

- Complete definition. All parts of the language shall be fully and unambiguously defined.

The requirements concluded that preventing programmers from making mistakes is the first and most essential line of defense. By removing opportunities to make subtle mistakes such as those made through implicit type casting and other dangerous constructs, the code becomes automatically safer and easier to maintain.

The outcome of that selection process was that while no existing programming language was suited to be the sole language for DoD projects, it was definitely feasible to create a new language which could fit all of those requirements. Thus four contractors were paid to do exactly this. An intermediate selection process picked the two most promising approaches, with ultimately one language emerging as the victor and given the name ‘Ada’.

In-Depth Defense as Default

Ada’s type system is not merely strongly typed, but often referred to as ‘super-strongly typed’, because it does not allow for any level of implicit conversions. Take for example this bit of C code:

    typedef uint32_t myInt;
    myInt foo = 42;
    uint32_t bar = foo;

This is valid code that will compile, run and produce the expected result, with bar holding the answer to life, the universe and everything. Not so for Ada:

    type MyInt is new Integer;  -- a distinct type derived from Integer
    foo : MyInt;
    bar : Integer;
    -- ...
    foo := 42;
    bar := foo;                 -- rejected: MyInt is not Integer

This would make the Ada compiler throw a nasty error, because ‘Integer’ and ‘MyInt’ are obviously not the same. The major benefit of this is that if one were to change the type definition later on, it would not suddenly make a thousand implicit conversions explode throughout the codebase. Instead, one has to explicitly convert between types, which promotes good design by preventing the mixing of types because ‘they are the same anyway’.
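
For comparison, here is a minimal sketch of what the explicit conversion looks like. The procedure name Convert_Demo is made up for this illustration; the type and variable names follow the snippet above.

```ada
with Ada.Text_IO;

procedure Convert_Demo is
   type MyInt is new Integer;  -- distinct type derived from Integer

   Foo : MyInt := 42;
   Bar : Integer;
begin
   --  "Bar := Foo;" would be rejected by the compiler.
   --  The conversion has to be spelled out at the point of use:
   Bar := Integer (Foo);
   Ada.Text_IO.Put_Line (Integer'Image (Bar));
end Convert_Demo;
```

The conversion is cheap at run-time, but it is visible in the source, so changing what MyInt is later on shows up as compile errors at exactly the places that mix the two types.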

Anyone who has wrestled through the morass of mixing standard C, Linux, and Win32 type definitions can probably appreciate not having to dig through countless pages of documentation and poorly formatted source code to figure out which typedef or macro contains the actual type definition of something that just exploded half-way through a compile or during a debug session.

Ada adds further layers of defense through compile-time checks and run-time checks. In Ada, the programmer is required to explicitly name closing statements for blocks and is encouraged to state the range that a variable can take on. Ada does predefine types like Integer and Float, but idiomatic Ada code rarely uses them directly and instead creates its own types with a specific range from the beginning. A similar strictness applies to strings, where aside from bounded and unbounded strings all strings have a fixed length.

At run-time, errors such as illegal memory accesses, buffer overflows, range violations, off-by-one errors, and out-of-bounds array accesses can be detected. These errors raise exceptions, which can then be handled safely instead of leading to an application crash or worse.
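
As a rough sketch of how a ranged type and a run-time check work together, consider the following. The names Range_Demo, Percent, Bump and Battery are invented for this example.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Range_Demo is
   type Percent is range 0 .. 100;  -- only values 0 through 100 are legal

   procedure Bump (Level : in out Percent) is
   begin
      Level := Level + 1;  -- range-checked on assignment
   end Bump;

   Battery : Percent := 100;
begin
   Bump (Battery);  -- 101 does not fit in Percent: Constraint_Error
   Put_Line ("Battery:" & Percent'Image (Battery));
exception
   when Constraint_Error =>
      Put_Line ("Range violation caught");
end Range_Demo;
```

Instead of silently wrapping around or writing a bogus value, the failed check raises Constraint_Error, and the handler at the bottom decides what happens next.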

Ada implements an access-types model rather than providing low-level generic pointers. Each access type is handled by a storage pool, either the default one or a custom one to allow more exotic system memory implementations like NUMA. An Ada programmer never accesses heap memory directly, but has to use this storage pool manager.
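
A small sketch of an access type in use, assuming the default storage pool; the names Access_Demo, Int_Access and Free are chosen for this example only. Ada.Unchecked_Deallocation is the standard generic for explicit deallocation.

```ada
with Ada.Text_IO;
with Ada.Unchecked_Deallocation;

procedure Access_Demo is
   --  Allocations for this access type go through its storage pool
   --  (the default pool here, since none is specified).
   type Int_Access is access Integer;

   procedure Free is
     new Ada.Unchecked_Deallocation (Integer, Int_Access);

   P : Int_Access := new Integer'(41);
begin
   P.all := P.all + 1;  -- explicit dereference with .all
   Ada.Text_IO.Put_Line (Integer'Image (P.all));

   Free (P);  -- releases the object and sets P to null
   if P = null then
      Ada.Text_IO.Put_Line ("P is null after Free");
   end if;
end Access_Demo;
```

Dereferencing P after the call to Free would raise Constraint_Error rather than read freed memory, because Free leaves the access value set to null.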

Finally, the compiler or runtime decides how data is passed in or out of a function or procedure call. While one does have to specify the direction of each parameter (with ‘in’, ‘out’, or ‘in out’), the ultimate decision of whether the data is passed via a register, via the heap, or as a reference is taken by the compiler or runtime, never by the programmer. This prevents overflow issues where stack space is not sufficient.
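
To make the three parameter modes concrete, here is a short sketch. All names in it (Modes_Demo, Sample_Buffer, Sum, Scale) are hypothetical and only serve the illustration.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Modes_Demo is
   type Sample_Buffer is array (1 .. 4) of Integer;

   --  "in": read-only input. "out": result produced by the callee.
   procedure Sum (Data : in Sample_Buffer; Total : out Integer) is
   begin
      Total := 0;
      for I in Data'Range loop
         Total := Total + Data (I);
      end loop;
   end Sum;

   --  "in out": the callee reads and updates the caller's variable.
   procedure Scale (Data : in out Sample_Buffer; Factor : in Integer) is
   begin
      for I in Data'Range loop
         Data (I) := Data (I) * Factor;
      end loop;
   end Scale;

   Samples : Sample_Buffer := (1, 2, 3, 4);
   Result  : Integer;
begin
   Scale (Samples, 10);
   Sum (Samples, Result);
   Put_Line (Integer'Image (Result));
end Modes_Demo;
```

Note that nothing in the source says whether Samples travels by copy or by reference. The modes only describe the direction of the data flow, and the compiler picks the mechanism.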

The Ravenscar profile and the SPARK dialect are subsets of Ada, the latter of which strongly focuses on contracts. Over time features from these subsets have been absorbed into the main language specification.

Programming with Ada today

The ANSI certified the Ada 83 specification in 1983; Intel’s 80286 had just been released and Motorola’s 68000 was still only four years old. It was the dawn of home computers, but it was also the awkward transition of the 1970s into the 1980s, when microcontrollers were becoming more popular. Think of the Intel 8051 and its amazing 4 kB EPROM and 128 bytes of RAM.

Today’s popular microcontrollers are many times more powerful than what was available in 1983. You can grab any ARM, AVR, RISC-V, etc. MCU (or Lego Mindstorms NXT kit) and start developing for it just like you would any other C-based toolchain. Not surprisingly, the popular GNAT Ada compiler is built on GCC. An LLVM-based toolchain is also in the works, currently using the DragonEgg project.

There are two versions of the GCC-based Ada toolchain. The AdaCore version enjoys commercial support, but comes with some strings attached. The Free Software Foundation’s version is free, naturally, and has more or less feature parity with AdaCore.

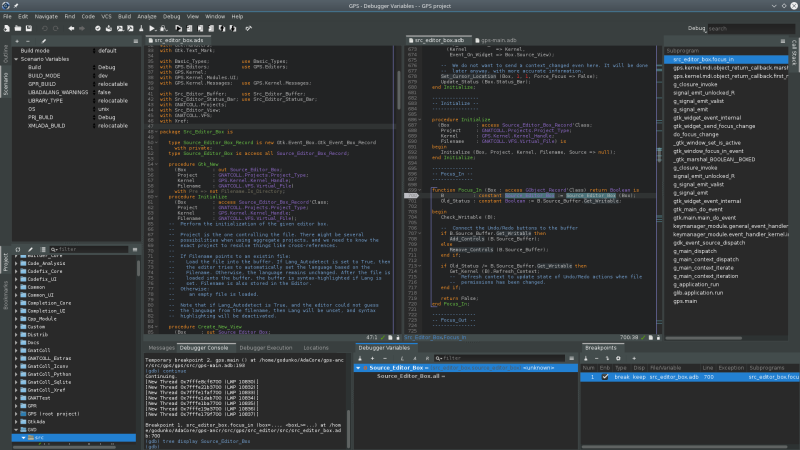

To get started quickly, you can either use the GNAT Programming Studio IDE (GPS) that comes with the AdaCore version of the GNAT toolchain (and is on GitHub), or rough it with a text editor of your choice and compile by hand, or cheat by writing Makefiles. The toolchain here is slightly more complicated than with C or C++, but made very easy by using the gnatmake utility that wraps the individual tools and basically works like GCC, so that it can be easily integrated into a build system.

An example of a small, yet non-trivial, Ada project written by yours truly in the form of a command line argument parser can be found at its project page. You can find a Makefile that builds the project in the ada/ folder, which sets the folders where the Ada package specifications (.ads) and package bodies (.adb) files can be found.

These files roughly correspond to header and source files in C and C++, but with some important differences. Unlike C, Ada does not have a preprocessor and does not merge source and header files to create compile units. Instead, the name of the package specified in the specification is referenced, along with its interface. Here the name of the .ads file does not need to match the name of the package either. This provides a lot of flexibility and prevents the all too common issues in C where one can get circular dependencies or need to include header files in a particular order.
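
A minimal sketch of how a specification, a body and a client fit together. The package Greetings and the procedure Main are invented for this example, and the file names in the comments follow GNAT’s default naming convention, which is an assumption about the setup. Each of the three units would live in its own file.

```ada
--  greetings.ads: the specification, i.e. the public interface
package Greetings is
   procedure Say_Hello (Name : in String);
end Greetings;

--  greetings.adb: the body, i.e. the implementation
with Ada.Text_IO;

package body Greetings is
   procedure Say_Hello (Name : in String) is
   begin
      Ada.Text_IO.Put_Line ("Hello, " & Name & "!");
   end Say_Hello;
end Greetings;

--  main.adb: a client refers to the package by name, not by file
with Greetings;

procedure Main is
begin
   Greetings.Say_Hello ("Ada");
end Main;
```

With the units saved under those names, running gnatmake on main.adb should be enough to compile, bind and link everything, since the with clauses tell the tools which packages are needed.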

Where to go from here

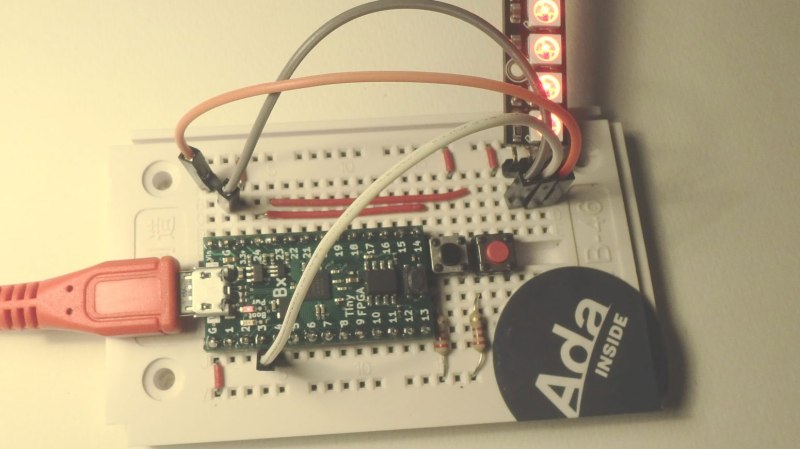

After grabbing the GNAT toolchain, firing up GPS or Vim/Emacs, and staring at the blinking cursor on an empty page for a while, you might wonder how to get started. Fortunately we recently covered this project which had an Ada-based project running on a PicoRV32 RISC-V core. This uses the common iCE40 LP8K FPGA that is supported by open-source FPGA toolchains, including Yosys.

Introductions and references for the language itself are found as a simple introduction for Java and C++ developers (PDF), the AdaCore basic reference, a reference over at WikiBooks, and of course ‘Programming in Ada 2012’ in dead-tree format for those who like that little bit of extra heft. It is probably the most complete reference beyond diving into the 2012 Ada Language Reference Manual (LRM) and its glorious 945 pages.

While still quite a bit of a rarity in hobbyist circles, Ada is a fully open language with solid, commercially supported toolchains that are used to write software for anything from ICBM and F-15 avionics to firmware for medical devices. Although a fairly sprawling language beyond the core features, it should definitely be on the list of languages you have used in a project, if only because of how cool it looks on your resume.

It’s all fun and games until the boss asks you to develop a device and gives you an unrealistic deadline. Unless you want to become a lavatory hygiene technician at your local fast food joint, you just have to deal with it, write nasty, buggy code and get the job done in time. The customer won’t pay and won’t care for design patterns and test-driven development. In the end all that matters is the CPU crunching instructions.

Works like a charm for your first project. Then when it comes time to make version 2, 3, etc., you can’t meet deadlines because your codebase sucks and all your decent engineers have gotten the heck out of Dodge. I hate that this attitude is so prevalent amongst embedded engineers.

It isn’t just prevalent in embedded engineers; it’s an engineering problem in general from what I’ve seen. The number of times I’ve had to ask people if they would have wanted me to build their house the way they are building software is way too high for my relatively short career at this point.

IME the conversation often starts to break down when somebody asks, “Don’t they use the Waterfall development process to build houses?”

The main reason is that the developer is often unwilling or unable to defend the Waterfall process, and engineering generally. But you can’t use the E-word without planning things in advance.

I watched an old MIT introduction to CS lecture where the professor extolled LISP as a language impervious to the consequences of impromptu requirement changes. He even went as far as to humiliate a student who challenged him on it. It was a cringey moment.

It’s interesting how software engineering is a victim of its own success. In every other discipline, requirements are not only expected, but fixed before any design. Yet, in software engineering, we’re expected to add a 2nd kitchen after we’ve already framed and plumbed the structure.

Is that not because for years, NO DECADES, the industry outsourced their jobs, showing ZERO loyalty to those that built their companies. Oh…and now we whine cuz they won’t hang around. LOL

You cannot polish a turd.

When someone demands you polish a turd, they are telling you to cover it in gold spray paint and glitter, then run like a Frenchman.

Then they bitch? Nerve!

You live in some sort of fantasy world. Here in the real world we have actual customers who rely on working product, they will not pay for the product without proper documentation, and they will not adopt it if they find bugs and we cannot fix them and turn around new builds in a timely manner. This requires formal test procedures and automation, otherwise your “bug fix” releases will add new bugs.

Sorry but here in Poland it is the real world. We do affordable IT engineering outsourcing for companies from the USA and we develop quickly. If the boss or project manager wants code quick then you develop it quick, because at the end of the day it’s what puts food on your table. Bugs happen to everyone, such is life. I know it’s shocking, but for US customers it’s better to pay a cheap Polish company than develop code themselves, and still I have a good enough wage that I bought a very nice 2006 BMW when most of my friends barely make it month-to-month with payments.

I agree with you. The trick a good developer needs to learn is producing good work quickly, without leaving a mess lying around. Half the internet seems to think that working fast means doing a bad job, when that just isn’t true – these guys will write phonebooks worth of code to manage the code which manages the code which actually does anything, and then declare that it’s “maintainable.” Working code is maintainable, because you still have customers to pay you to maintain it :)

You mean stuff we can buy on aliexpress?

Just so we understand each other here: Doing embedded using Ada is arguably cleaner than C and … in turn takes probably less time and gives better results. Just check the quality/difficulty/time frame of projects coming out from MakeWithAda.org.

2006 BMW? Are you mad?

That’s an endless money pit. Designed to bleed you dry, with German precision.

Hint: America invented the ‘Lie like rugs, half-ass solutions and make money on support’ business model. EDS is now called HP Enterprise. Never hire them, they were the prototype for Tata. Still better than any company that once was part of an accounting firm.

Real world? Not sure what planet you live on but everything they stated is exact. Better do whatever to keep that job you “currently” have, cause the “real world” simply doesn’t work the way you think it does.

It certainly does work his way. The mere fact there is a market for it, proves it. Not everyone has JPL time and budgets. In the business world, there is a saying – perfect is the enemy of good enough. Too bad no one in govt. or academia has discovered this.

I don’t think I’ve seen ‘perfect’ code in academia. In fact I’ve seen some pretty naive code from academia. I do think it’s better for academics to work with the ‘perfect’ workflow so that new developers know what corners are being cut. After all: if you know the rules you know how to break the rules without causing too much harm, if you don’t know the rules you’ll break them all and not know what you’ve done wrong.

Just good enough. LOL. Boeing. Space Shuttle

Government contracting in the USA is a specialization.

There is basically no crossover. Once a company is a qualified government contractor there is no chance they will take honest work.

They do not produce ‘perfect’, they mostly produce no-show jobs for powerful people’s kids. And invoices, lots and lots of invoices.

“If you are not embarrassed by the first version of your product, you’ve launched too late.”

– Reid Hoffman (the founder of LinkedIn)

How is LinkedIn doing now? Are they broadly considered a successful site that developers should emulate? Or are they a mostly-failed site that was purchased by a big company for backroom reasons?

How many bugs do tier 1 companies like Microsoft & Google get discovered in each release?

If only they programmed with ADA or in your world….

Are there any statistics for average bugs per 1,000 LOC by language? Just because Ada is stricter doesn’t mean Ada software wouldn’t have bugs. Certainly we had bugs back in my Ada days; a mate of mine had a doozy that was found in a simulator. When the aircraft crossed the international date line, it flipped by 180 degrees so it was flying upside down…

And I’ll say it again; the Ada used in avionics was a vastly reduced subset of the main language. Spark Ada. https://en.wikipedia.org/wiki/SPARK_(programming_language)

Was that the one that affected the F-22s a few years back where the avionics shut-down?

No. Different manufacturer. Hint. British!

The so called “gold plating” issue that language designers imagine we all want.

Most of us just want the damn thing to work well enough, enough of the time.

If you are able to define “well enough” and “enough of the time”, accomplish and then measure them, then yeah, by all means keep at it.

It’s when the cost, spread over time, of dealing with issues is less than the cost of the original project at the time.

It’s pretty well defined in industry, think Ed Norton in Fight Club.

Totally.

Like if you are having a heart attack and it takes an extra few attempts for anyone nearby to get a dial tone on their mobile phone and phone a hospital, that would not be a big deal at all. Or if the number was misrouted to the wrong destination. Software runs everywhere; the telephone exchange system runs software. And to generate a dial tone, software runs to generate that tone. When software has bugs, either design or logic, people have died. It is not always just a little bit of an inconvenience, “oh damn, let’s just power cycle and try that again”.

e.g. https://hackaday.com/2015/10/26/killed-by-a-machine-the-therac-25/

Part of the problem is that people have become so accustomed to anything that runs software being buggy. And if I were to point a finger at anyone, it would be the vendors with the largest number of vulnerabilities in the CVE (Common Vulnerabilities and Exposures) database. (Microsoft and Adobe instantly pop into my mind. And after checking, Microsoft is number 1 and Adobe is number 7 – https://www.cvedetails.com/top-50-vendors.php ).

Writing good software is hard and slow, writing crap software is easy and fast.

This is not the best measure, because Microsoft’s main products are operating systems. And these must be backwards-compatible, which means tons and tons of legacy code written in the past 30 years by hundreds of people. Also you should look at the last field in the table: Vulnerabilities/#Products. Let’s compare those numbers with some of the FOSS brands:

Adobe: 25

Microsoft: 13

Debian: 32

Linux (?): 138

Canonical (Ubuntu): 66

OpenSUSE: 57

13 vulnerabilities per product is not that bad. And one of the often repeated fallacies of FOSS is that open source makes it easier to find and patch vulnerabilities. So why do closed-source products have fewer vulnerabilities per product than open source ones? Well, because FOSS developers follow a specific philosophy, the kind known as “worse is better”.

Right now Debian comprises 59000 software packages. Not to mention 10 architectures.

That 13 Microsoft vulns comes from how many products and architectures?

Maybe you shouldn’t forget that when a Linux tester/programmer finds some fatal error reaching long back into history, a CVE is filed, or it can be backtracked from the versioning system used on that part of Linux.

When a fatal bug is found in Windows, a mysterious fog appears. We know from history that Microsoft repeatedly acknowledged bugs affecting most of their products while simultaneously saying “Nah, we’re not that much into fixing it, because… you know, it doesn’t affect business customers that much. And you would have to have local access to misuse that bug, so sod off”. And tell me, when someone from inside MS finds a bug, do they file a CVE? Can we see the source code?

Those issues should be prorated against the number of installations. More installations uncovers more bugs, given the identical programming practices and skills. That’s why Microsoft is (still) on top — it’s freaking everywhere, the others, not so much. On average, the industry, especially large corporations, are on par with each other. The code they produce is no better than the others.

(Good) testing is critical, but super expensive. It’s no longer (computer) rocket science to do regression analysis and testing, that’s mostly now been “automated”, but there’s probably too much reliance on that automation, especially by managers who either never really were programmers or were really poor ones that decided to “manage” instead.

You can’t fix bad programs with the world’s “BEST” programming language, since you still have to overcome pushy managers, incomplete and unverified specifications, LARGE variations in programming skills (which require a thorough understanding of the language used, regardless of which) across the project, and really good (er, expensive) tools. Slow code can be “fixed” with faster processors, but bad code cannot.

Side note: ever hear of the UK’s “Project Viper”? That’s because it was impractical to implement a CPU that was mathematically correct. The “idea” was it could execute mathematically correct programs. Huh?

Ada sprang from a US DoD project, based on the idea that the “ideal” programming language would prevent programmers from writing invalid (aka BAD) code, so it has lots of constraints, — LOTS. That’s what’s supposed to make it “work”. It’s supposed to be the BEST HAMMER, since all programs are (of course) simply nails. Since its inception, it requires “extensive” validation (hint: that makes it initially expensive) by passing a “test suite” for each target architecture which it was to be qualified for use. So that meant (back in 1993 when I encountered it) you use a different compiler for 8086, 80186, 80286, 80386, etc. Note that this was not a compiler switch, it was a separate compiler! Intel even got “into Ada” by making an Ada oriented, 32 bit processor, the iAPX-432, that had instructions to validate integer ranges, a “big deal” back then, since many complained about range checking’s overhead on run-time and code space. Admittedly, back then, compiler testing was less effective than it should have been. Ada also came with a “run time” package that not only included internal subroutines to implement language elements, but also a real-time kernel for delays and simple tasking. I haven’t kept up, so that has probably expanded a lot since then. I realize there is a “good” GNU Ada compiler, but it produces C as its intermediate code, like Niklaus Wirth’s original Pascal compiler producing p-code. Hmm?

Ada in GCC – GNAT – has not produced C code as an intermediate language in this millennium.

It _may_ have done so in an early 1990s version.

When I did my googling just prior to my post, that was the info I found, which coincided with research I did in the late 90’s. Since this reference was from the late 90’s too, I wrongly assumed that was when new work on the GCC Ada compiler ended (based on Ada95 I think). I’ve been retired since 2015 and was never a fan of Ada, nor have I done any Ada programming myself (but then, I did C programming without “formal” training, but I started by reading K&R and relied heavily on the language definition in its appendix). My reasoning was something like: “why learn French to write prose when I am fluent in English?”

I KNEW that most of the code produced by my company (in Ada or C/C++) and elsewhere was mostly junk because programmers were not fluent in the languages they used. So, why would I learn a new language that I did not have enough time to become fluent in and then end up being just another lousy programmer? Actually, I “did some Ada programming” in that I researched a bug that was blamed on my C code used in a different unit from the one that supposedly “did not change”, so the bug had to be in my code. I ended up getting a copy of the Ada code (from the FAA, since we no longer maintained it locally, which required special approval that took about 2 months to get). It ended up that the Ada guys didn’t know their language well (as I said above). They defined a bit-field where the interface (that I wrote) specified that UNUSED bits be left ZERO for backward compatibility, so the Ada guys just left those bits “unspecified”. When I read the Ada language definition, I found that unspecified bits can take ANY value (i.e. they are treated as “don’t care”). This was apparently true for both reading and writing. So, when I traced the Ada code to the temp (stack) variable it used to hold that value when making changes to it, changing ANY bit would come up with seemingly random values for the reserved bits. Since my code was updated for our commercial, non-FAA product, which added features that disabled FAA behavior when set to ONE, BANG, the “bug” manifested itself. It still took two weeks to convince the FAA to pull out their debug tools (as I said, we no longer supported it and had no resident Ada programmers) to verify my theory. Once they did, I was exonerated. So why did that bit get set to ONE? Because the temp stack variable containing it held an old return address (memory was zero initialized at startup, like all “good” code does) that happened to have that bit set. When the Ada code’s assembly was analyzed, it showed that they did an AND mask of the bit-field variable to clear the “used” bits, but left the other bits (from the stack) intact. Talk about esoteric bugs!

Some of my C (and older assembly) programs have been criticized for being too difficult to “read”. But I counter that it’s not my program that is too difficult to understand; the difficulty was due to the underlying math and science that the critic encountered (how could they understand the code if they didn’t know what it was supposed to do?). While I don’t advocate going back to assembly code as a few have, I don’t see the benefit of learning a “new” language (whether “superior” or not). 90% of most languages are really “identical” to each other; it’s mostly syntax and tool usage that creates the biggest problems, assuming the tools are bug-free. I have NEVER encountered any new SW tool that’s bug free, PERIOD.

GNAT has never generated C as an intermediate language, it has always been a front end (gnat1) which generated assembly, just like cc1, cc1obj, cc1objplus, cc1plus, etc.

AdaCore has only recently added C and C++ binding support to gcc/g++.

As someone who worked for a defense contractor for 10 years, I can assure you – Ada doesn’t really solve any of the issues it claims to solve in the real world.

What’s worse, at least with the Ada implementations our codebase was derived from, achieving endian-cleanliness for network code without destroying performance was extremely difficult. As a result, we actually had at least one hardware design revision that existed SOLELY to build an SBC “old” enough to run our legacy Ada code.

When I left the industry, the company I was at was in the middle of a MAJOR effort to rewrite half of the software running on the aircraft we were the prime contractor for. Everything new was C/C++

“… at least with the Ada implementations …” is key here. For sure, if you were forced to use a ‘stuck in the past’ toolset, yeah, ‘that Ada’ probably sucks on some fronts.

But hey, you really think the C/C++ they choose 10 years ago did not suck..? please sir, I know the ride.

Also, so much effort has been spent trying to give C++ a straight face over the past ~4 decades. It’s unfair to run and snitch on some cherry-picked Ada flaws. The fundamentals are more sound, period, and in the 90’s C++ was ‘elegant’, so yeah, hordes of devs and managers bought into your ‘corporate nightmare story’ saved by C++. If Ada received even 1/100 of C++’s love the world would be a better place.

Your C++ is always broken. Pick any random C++ github repository. Try to build it for fun: 8/10 projects will not build for a myriad of reasons. Now try that with an Ada repo, it will be the other way around. I know because I do try this kind of stuff.

When doing anything important, the newest C standard I see is C99. C89 (ANSI C) is more common.

Yeah, GUI stuff might be C++11, but the business logic and libraries are not.

Hey, in 2020 it is changing in the reverse direction. In this video you can watch a briefing from Daniel Rohrer, vice president of software security at Nvidia, and Dhawal Kumar, principal software engineer at Nvidia. In brief, they have decided to change their primary programming language from C to Ada/SPARK. They even said something about writing whole libraries in Ada. Unfortunately I do not remember which department they were talking about, as Nvidia is not just a GPU manufacturer; it is also in the mobile computing and autonomous driving markets. Here is the video. I would really appreciate your opinion.

https://www.youtube.com/watch?v=2YoPoNx3L5E

If your boss gives you unrealistic deadline, he is an idiot and you should run away. Other warning sign – pointy hair…

What if you are working on something like a medical device and one of bugs caused by your nasty, bad code causes someone to die? For example faulty software interlocks fail causing someone to get very high dose of X-rays?

And another thing: you wrote your code in two days, fueled by almost lethal doses of caffeine. Product is out, and it didn’t maim or kill anyone by sheer luck. 6 months later your boss wants you to write firmware update. Would you be able to read and modify that spaghetti code written under influence?

“Product is out, and it didn’t maim or kill anyone by sheer luck. 6 months later your boss wants you to write firmware update. Would you be able to read and modify that spaghetti code written under influence?”

“Good enough” is the enemy of the “good”

We have a sarcastic saying about our senior management when we argue to improve and they say “it’s fine”.

Fuck It Near Enough.

True, I’m sure, for most industries.

The fallacy there is that writing spaghetti code would be faster.

I think even awful, rushed code is likely to use some form of Structured Programming.

Non-structured programming is essentially DEAD — and Pascal killed it.

Structured programming is about functions with one entry and one exit… whether multiple ‘return’ statements violate the “one exit” rule is debatable, and I’m inclined to say ‘no’, because the spaghetti code that non-structured programming produced involved things like returning early, leaving things on the stack, and then doing something with them.

I’m curious. So, how exactly did Pascal kill structured code? Niklaus Wirth, its creator, was all about structured code. Pascal is SO structured that it does not allow forward references (at least the version that Wirth defined), so all variables and functions had to be defined BEFORE use. That simplified the compiler so that it only needed to do one pass (since no forward references existed). But then, maybe I’m just getting too old and don’t care anymore. Prost.

To me, rushed code is code written without enough time to consider and anticipate the fulfillment of Murphy’s law, regardless of whether it is written structured or not.

Worst code I’ve ever seen (in Access VBA, so you know it won’t be good):

global variant aLocalArray()

You know they originally intended to fix it ‘tomorrow’.

The worst coding related thing I’ve ever done personally:

Showed the coders that did that how to invoke their Access application from the ‘replacement’ ASP code. They OLE-automated their Access app with hard-coded control names from ASP classic, and had it doing the price forecasts behind the scenes on the server. Stateless schmateless.

There is a non-trivial chance that _your_ electric power was traded with that contraption. Don’t look smug Euro’s/Aussies. You too.

I was gone later that month.

Taught them that _on_purpose_ right before bailing. Am bastard, will go to VBA hell.

The access coders involved now work for the (redacted).

That should scare you, California electric power users.

It’s not the rushed code.

It’s the barely working, nobody knows how it works or why it works the way it does. Highly ‘evolved’ code. Workarounds for the older workarounds, accreted for years or decades code. Nowadays, no doubt, featuring both Access VBA and JavaScript library greased pigF land communicating via a MySQL table.

Ideally your customer requirements include testing, safety audits, and multiple release milestones. If not then many get away with throwing any old junk over the wall the first time it seems to run in the lab. (examples of good standards for product development processes and coding standards: ISO 26262 and MISRA C for the automotive industry)

“Ada might also be the right choice for your next embedded project.”

Windows written in ADA. ;-)

It couldn’t have made it any *worse*, at least.

Well possibly it could. Back in the early 90s, a project I worked on in Ada had to have parts recoded in C because it was too slow. Also the Ada that was used in avionics is not the full Ada but a reduced subset which had all the possible iffy bits (generics for example) removed. It was effectively a slightly stricter Pascal. After that the Defence industry stipulation that everything had to be Ada was relaxed.

Ada is available on the micro:bit https://blog.adacore.com/ada-on-the-microbit

While the language is designed to prevent error, the Ariane rocket is a testament to the fact that reliable software can still cause objects to fall from the sky; reliably.

http://sunnyday.mit.edu/papers/jsr.pdf

The second paragraph sent me straight to the comments to see that someone made this point, especially about Ariane 4/5.

*hat tip*

Except that it’s a non-point and people really need to do their research rather than just parrot what others have told them.

What is a non-point? That a programming language can’t prevent design errors?

That the Ariane disaster was Ada’s fault, which is what people say. It was management’s fault for reusing a pre-existing package from the previous Ariane, which was neither compatible nor tested. There’s a report detailing it all and everything, if you bothered to look.

tldr: everyone in this thread actually _agrees_ with the relevant/coherent part of what you wrote (on the second try).

“…people really need to do their research rather than just parrot what others have told them.”

“…if you bothered to look.”

You mean like reading and comprehending the whopping two Ariane-related sentences Tricon and I wrote, before flying off the handle? Emphasis on comprehending.

This shouldn’t be a difficult task, especially for a programmer. Neither of us blamed the Ada language for Ariane 5, we pointed out that it’s possible to write bad code, even in Ada.

Exactly what I did, Ariane 4 was one of our good examples of how *not* to design a reliable system..

Interesting!

If you specify the wrong actions to a condition in your requirement, no languages are going to help you.

Iirc, this fall from the sky was due to badly ported code between two architectures coupled with a lack of integration tests. It worked before porting so it will run after, no? Qualified Ada compilers are brick solid, but your code will do what you told it to with the data you feed it. This does not make intensive testing and auditing a waste of time.

Well, as far as I remember, the root cause for that crash was reuse of software designed for Ariane 4, and that ship had completely different flight characteristics. In short, the crash was caused by an overflow and that should have been discovered early in the requirements phase, so the software did exactly what it was required to do.

Full details at: http://sunnyday.mit.edu/nasa-class/Ariane5-report.html

Just had a quick peek at your code and it looks a lot like VHDL. Might just give it a go…

That’s no coincidence. When the DoD developed VHDL to describe the behaviour of components that suppliers would provide them with (ICs, etc.), they picked the Ada syntax as they already used that internally. Basically VHDL is like a little sister of Ada :)

Yes, ADA is like VHDL. VHDL is better and less error-prone (to some degree there is no real need for linting tools), which reflects its strongly typed nature. Yes, there are few shortcuts, so things are pretty explicit, which is why the DOD used ADA and hardware designers use VHDL. Yes, it’s easier to write C/C++ (or Verilog / SystemVerilog), but there are more chances people will have sloppy code leading to unexpected results. ADA / VHDL / UVVM is very strongly typed, which is annoying at times, but its constructs are 100% clear and work as intended without ‘gotchas’.

if you know only half what you are doing (ie you have half a brain :) you can use c without any problem

yes, if you are stupid (i mean challenged) then it is better if you don’t use any language where others can be hurt by your actions

There does not exist a single human on this planet that can write C code without introducing numerous security flaws and other bugs. The proof is in the pudding. There is no C program that is “done”, every one of them is an unfinished work in progress. Even the old UNIX command line programs from the 1970s, written by the gods of programming themselves, are still bug-ridden and they are still getting software updates even today.

On the other hand, there is FORTRAN code for numerical analysis, basically bug-free and untouched since the late 1960s.

Just grab a copy of “UNIX-Haters Handbook”. Read Preface and first chapter. It’s all explained there…

COBOL, that language everyone hated? Some software written 40-60 years ago is still running. Usually on emulated hardware/environment. Usually without need for any bugs and problems. It’s cheaper and easier to use it as it is, than to rewrite it in more modern languages…

About the FORTRAN code: it’s basically bug-free… until you port it to Ada and discover subtle out-of-range situations that stayed undetected in FORTRAN – happened several times.

Yes, you can write C code without any problems, unpredictable behaviors or mountains of compiler errors. Sometimes even 2-3 whole screens/pages of it…

Also if you know what you are doing, and you develop some skills first, you can juggle with bottles of nitroglycerin. Just keep juggling and nobody explodes…

This is objectively false.

https://www.zdnet.com/article/microsoft-70-percent-of-all-security-bugs-are-memory-safety-issues/

https://www.vice.com/en_us/article/a3mgxb/the-internet-has-a-huge-cc-problem-and-developers-dont-want-to-deal-with-it

http://blog.llvm.org/2011/05/what-every-c-programmer-should-know.html

https://blog.regehr.org/archives/213

You know, I had a course in Ada when in college back when and enjoyed working with it… But it went no further as where I ended up working, C/Assembly was used for all projects. Later we used Pascal (Turbo/Delphi/C++) for the UI work. I’ve written some trivial programs in Ada at home, but just never had a ‘use’ for it, so always on the back burner. With C/C++/Assembly/Fortran/Python/Perl on the brain there isn’t much room for another language proficiency unfortunately at my age.

My experience with dynamically typed languages like javascript and Scheme has taught me to appreciate the implicit numeric type conversions that happen all the time “behind the scenes” in languages like C and C++. This stuff will mess up your program big time when the compiler is turning your double into a float or an int even though you did not ask it to, or when you ask it to do integer math and it does it in doubles instead.

I also find it really hilarious that for decades, it was supposedly okay in C to compare a signed number to an unsigned number. Now suddenly it’s a Bad Thing, the compiler issues dire warnings and you should fix your code right away. Do you feel like Theodoric of York, medieval barber? Well, you should, because when you program in C you are no different from the barber who bled his patients and applied leeches.
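For contrast, here is a minimal Ada sketch of the same situations. In C, `-1 < 10u` evaluates to false because the signed operand is silently converted to unsigned; in Ada the mixed comparison does not compile until the conversion is written out. The procedure and variable names are made up for illustration.

```ada
with Ada.Text_IO; use Ada.Text_IO;
with Interfaces;  use Interfaces;

procedure Conversion_Demo is
   Offset : Integer := -1;
   Size   : constant Unsigned_32 := 10;
   Ratio  : Float := 2.75;
begin
   --  Each of these is rejected at compile time, because Ada does not
   --  convert between numeric types implicitly:
   --     if Offset < Size then ...    --  Integer vs Unsigned_32
   --     Offset := Ratio;             --  Float into Integer
   --     Ratio := Ratio + Offset;     --  Float plus Integer

   --  The conversions have to be spelled out, so the intent is visible.
   if Integer_64 (Offset) < Integer_64 (Size) then
      Put_Line ("-1 is less than 10, as expected");
   end if;

   Offset := Integer (Ratio);   --  explicit conversion, rounds to nearest
   Put_Line ("Integer (2.75) =" & Integer'Image (Offset));

   Ratio := Ratio + Float (Offset);
   Put_Line ("Ratio is now" & Float'Image (Ratio));
end Conversion_Demo;
```

Widening both operands to `Integer_64` is just one way to make the comparison safe; the point is that the choice is visible in the source rather than made by the compiler.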

Erlang anyone?

There is a hypervisor written in spark.

https://muen.codelabs.ch/

If you want to know more of the language, a few years ago I wrote an overview of the language and all the things which make it cool:

http://cowlark.com/2014-04-27-ada

(And here is a multithreaded Mandelbrot renderer I wrote in it: http://ideone.com/a1ky4l)

It’s not perfect — the OO syntax is awful, it’s case insensitive, the standard library is clunky, Unicode strings are nightmarish, and there are a number of weird restrictions where some things work in certain contexts but not others — but it’s a damn fine language with a good toolchain which deserves to be a lot better known than it is.

– the OO syntax is awful : bahhhh, can be argued. Anyway, its only syntax. Why scare people over syntax… tell me?

– it’s case insensitive : so what… I do appreciate it.

– standard library is clunky: no… or depends compared to what.

– Unicode strings are nightmarish: ok.

The OO syntax is not awful, it is just not a copy of C++ or Java. It has a totally different approach which is actually far more expressive and flexible if you don’t try to pigeon-hole it into the type of OOP you’re used to. We use it extensively. Ada’s OO syntax is particularly good at encapsulation, and for code re-use, both of which are core Ada requirements.

Case insensitivity was a deliberate design decision and contributes hugely to general safety. If you are upset that you can’t have two variables “My_Var_a” and “My_Var_A”, then you really need to consider your approach in the larger context.

The Standard Library.. I don’t even know why you think it is “clunky”. it is less bloated than in similar languages (like C++), and it is more precisely defined than most other languages.

Unicode strings.. Not sure what you mean by that. Ada has built-in Wide_String and Wide_Wide_String, which can natively handle any codepoint in Unicode. If you want to get that in/out of the program, the standard library’s Ada.Strings.UTF_Encoding child packages (Wide_Strings, Wide_Wide_Strings) have you covered. How hard is My_UTF8_String := Encode (My_Wide_String); ?

Just stating this as a startup that writes all of our stuff in Ada.
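As a rough sketch of what that looks like end to end: in Ada 2012 the `Encode` and `Decode` functions for `Wide_String` live in the child package `Ada.Strings.UTF_Encoding.Wide_Strings`. The procedure and variable names below are invented for the example.

```ada
with Ada.Text_IO;
with Ada.Strings.UTF_Encoding.Wide_Strings;

procedure UTF8_Demo is
   package UTF renames Ada.Strings.UTF_Encoding.Wide_Strings;

   --  "cafe" with an accented e (U+00E9), built from the code point so
   --  that this source file itself stays plain ASCII.
   Wide_Text : constant Wide_String := "caf" & Wide_Character'Val (16#E9#);

   --  Encode to UTF-8 for output, then decode again to check the round trip.
   UTF8_Text : constant Ada.Strings.UTF_Encoding.UTF_8_String :=
     UTF.Encode (Wide_Text);
   Round_Trip : constant Wide_String := UTF.Decode (UTF8_Text);
begin
   Ada.Text_IO.Put_Line
     ("Characters: " & Natural'Image (Wide_Text'Length));
   Ada.Text_IO.Put_Line
     ("UTF-8 bytes:" & Natural'Image (UTF8_Text'Length));
   Ada.Text_IO.Put_Line
     ("Round trip OK: " & Boolean'Image (Round_Trip = Wide_Text));
end UTF8_Demo;
```

The four-character string becomes five bytes of UTF-8, since the accented character needs two. For code points outside the 16-bit range, the `Wide_Wide_Strings` sibling package would be the one to use.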

“Ada running on a RISC-V core, driving LEDs.” Ada is a compiler, isn’t it? How does it drive LEDs?

In an armored truck.

oh, would have thought LEDs in ada would only work on a golf course given the range requirements.

Ada is a programming language, like C or C++.

There are different compilers for the Ada language, the open-source one is called GNAT and it is part of GCC.

GNAT is the only up to date and open one though.

PTC ObjectAda (not open-source) covers good (if not most) parts of Ada 2012. Checked with several open-source projects on SourceForge.

Is it most now? Last I knew they were only just starting 2012 and that was only this year.

Well Rust only has one compiler, doesn’t seem to be a problem for them..

Have the Rust lot ever turned around and slapped a restrictive GPLv3 licence on the compiler, the runtime and every library they created for Rust? No? Well, they don’t need a second compiler to stop that ever happening again.

To help Ada adoption, please start referencing this: https://github.com/ohenley/awesome-ada

It is one of the most, if not the most, comprehensive list about ‘modern Ada’ related stuff.

ADA is painful. That is all.

Wrong forum? Are you an architect maybe? I did study Architecture, and I know the Americans with Disabilities Act (ADA) can be a bit onerous, but it is for a good reason.

Anyways, here we are talking about Ada, the programming language. It is named after Ada Lovelace.

Ada has good marketing, but anyone interested in it should read C.A.R. Hoare’s “The Emperor’s Old Clothes” for a view of how that sausage was made:

http://zoo.cs.yale.edu/classes/cs422/2011/bib/hoare81emperor.pdf

The Jargon File says (among other things) this:

“the kindest thing that has been said about it is that there is probably a good small language screaming to get out from inside its vast, {elephantine} bulk.”

http://www.jargon.net/jargonfile/a/Ada.html

“Ada has good marketing”

Nobody has ever said that, because it doesn’t, otherwise Ada would be better known and have less people with no knowledge putting it down.

As for Hoare’s view, that was on original Ada and Ada83, Ada95 changed Ada a lot, Ada 2012 / 202x are so different. I think it’s about time people stopped taking old views/advice as current fact.

Do you mean the person you’re replying to is a nobody, or do you mean you didn’t read their words before replying?

I’ll say it too, Ada has good marketing. Enthusiasts volunteer to market it, and they work hard at telling people that it IS (not might be) what YOU (not merely themselves, but even the reader!) WANT. Of course, most readers already know what it is and that they do not want it.

It isn’t great marketing, because many people (such as myself) find that sort of advocacy insulting.

But it is still good marketing, because it elevates a language that is not on the list of considerations to the point where many people might believe or expect that it would be among the considerations for real projects.

Listen up idiot, don’t accuse me of not reading a post before I commented, I suggest you do that.

I meant that nobody has ever said that Ada has had good marketing, because it hasn’t. I’ve probably done more than most in “marketing” the language by using and advocating it.

I’ve read about its history and how it was pretty much hated from the start by people who either didn’t want to know or were told it was crap, and that is still happening to this day. There are more people out there badmouthing the language; you only need to look on here as an example.

Examples?

“My professor told me x about Ada”

“Ada is painful”

“Eww Pascal!”

“Ariane 5”

This article is one good piece of marketing even if it’s wrong in parts, I’ve already pointed that out, but it’s literally one of only a few. Where’s your good marketing for Ada?

I suppose those are not real projects? https://www2.seas.gwu.edu/~mfeldman/ada-project-summary.html

rubypanther: we, as a profession, are already not considering Ada and it is, on many accounts, a shitshow. I give you the lead role, with pleasure, if you really want the status quo.

“Of course, most readers already know what it is and that they do not want it”

No they don’t already know!

Practically all criticism found here have been by people talking through their hat about Ada. People who still pick up some old stories hidden somewhere in their cabinet to drag Ada in the mud when they see fit. I think this was Luke’s take on this.

It’s funny because every story implying that Ada is so ‘in your way’ comes from people who experienced its ‘overly complex specification’ at a time when everything was rudimentary, their knowledge too for that matter. Slowly, for the last 40 years, most of them (except those who are still “C from K&R is perfection on earth”) bought into the same concepts, just more badly executed, by following the C++ evolution. Now whose specification do you think is bloated beyond repair? Ada or C++…?

Ada is a walk in the park to get a hang of compared to C++? Can it achieve the same results? Sure thing, this is no Ruby!

Your ‘species’, insulted by people who know better/who promote better, gave us the mess we are in.

The core of our technology ecosystem is C/C++ and from a systemic point of view people are ‘tripping’ on each other, all the time. Why do you think we have a thousand scripting languages all competing to be used for the next ‘real project’?

Because the intricacies of low level coding are a mess and people want to escape it.

The coupling of toolchains is, after 40 years, still hyper brittle. The tremendous obfuscating potential of C/C++ inevitably made its way everywhere. (Do you comprehend, are you interested to fix any bug/regression found in a GNU C-ishh legacy project or something like Boost?) Most are not and they are right, because a lot of complexities found in these projects are self-sustained. We have better things to do with our lives.

Are Python, Ruby, JavaScript etc good considerations for real projects, airplanes, game engines, medical applications? Sure, as in underperforming prototypes released to market went wrong. (My Netflix tv app makes for a good flagship of such crappy software that probably millions of people hate) Oh I forgot web serving millions. Last time I checked the ‘served’ web is basically a bunch of interactive flyers that took 30 years to somewhat hold together… Progressive Web Apps anyone? Anyway, Golang is eating back all those ‘scripting web serving for millions’ wrong decisions.

Now back to real projects. The Gnat Programming Studio is a real project, available on GitHub, akin to something like Visual Studio, and its made in Ada by a company orders of magnitude lighter than Microsoft. The thing is tight. But one would have to try it, inspect its code, before arguing Ada cannot be used for real projects! Will it happen? I do not think so: rubypanther already knows we do not go there.

Finally, sure I am marketing Ada ‘with a mission’ because I am tired of losing my time trying to cope with your bunch of dead corpses. Actually, most young people are like me, they just do not grasp the real reasons why in the end they are implicitly pushed to … “We will do our next 3D project in JavaScript!” … while they could achieve the same thing, or better, with way less abstraction layering, arguably a much more stable, maintainable, and discoverable code base by using something like Ada.

Just open Orka (https://github.com/onox/orka) and tell, to my face, the thing is not pristine, comprehensible, maintainable, bare metal and cool. It is not only the coder; Ada has a lot to do with it.

Ada is just sound. Stop trying.

Shit clicked on the report by mistake – ops take no notice.

> People who still pick up some old stories hidden somewhere in their cabinet to drag Ada in the mud when they see fit. I think this was Luke’s take on this.

Absolutely!

“successively more refined versions from STRAWMAN through WOODENMAN, TINMAN, IRONMAN, and the present STEELMAN.”

Hilar!

I’ll make my comment again, with some modifications.

This article contains an error, but is otherwise fine:

“Ada doesn’t define standard types like int or float”…

It does, technically, Integer and Float. As an aside, it’s amusing to see the people arguing against Ada because they don’t see the value in decreasing the chances of human error.

Perception of loss of control better known as “you can’t tell me what to do, I’m the programmer, dammit”.

i just threw away an Ada book because i didn’t think it was going anywhere

So, the book did end up going somewhere, right?

B^)

You should have sent it to me, I use them to train new hires.

If you have to paper train your new hires, you should get better HR.

Aside: It’s always better to discipline managers with a rolled up newspaper. You don’t want them to fear your hand.

I wanted really badly to agree with you on these points, but I don’t think there is anything ADA does that C++ can’t do better. After working with ADA for over 5 years on flight deck software, a lot of the points you make in favor of ADA are actually things that infuriate me. This language is great if you are writing a small embedded system, but when your codebase is literally in the millions of lines and half of them are syntax and boiler plate, you start to wish for things like inferred typing.

First, it’s Ada, not ADA.

Ada is consistent, explicit and simpler all across the board, and this has a lot to offer compared to C++.

I participated in AAA C++ game projects and it’s been a constant nightmare:

Take all your loved C++ ‘sugar’, all the C++ ‘idioms’, all the trends in architecting C++ for the last 20 years and glue all occurrences of these across million lines of code… go to a Bjarne conference and buy into “you have to use ‘modern C++’ from now on…” (A kind of reinvented Ada, of infinite complexities and declinations, that will still bite you for obscure reasons from time to time, wrapped in a beloved, nevertheless, old syntax) – (I forget, add some C# … remember 2010):

You get kind of ‘the night of the living dead of spaghetti code’ with brittleness on par with an unmaintained wordpress plugin.

Are C++ games always riddled with bugs when released? Yes.

Are they not made from a ‘stable/mature’ and ‘maintained’ engine? Yes… ok so why all the crashes?

Because the house is made with a 1000 building code and liberties. In this context you have more or less three types of willful agent: 1) the guy who knows and studies at night the 1000 building codes. Most of the time no-life, he is the irreplaceable master. 2) the middle of the road enthusiast that is stoked by the latest building code addition and tries to fit most of it in his ‘realm of construction’. He secretly admires the irreplaceable master and therefore repeats everything he says about C++. Finally, 3) the ‘old school’ carpenter; he punches nails near electric wires and uses duck tape to close a leak; he knows all Visual Studio ‘supported legacy’ tricks to get by with something and C+- is a tool. Whatever, he is doing hours.

Btw, many huge C++ projects prohibit the use of ‘auto’ altogether… because its only useful in ‘your little universe’. Two years go by, I get to your code because you left the company or moved to another project and I need to fix/maintain your ‘baby’. God, turns out your naming is complete nonsense, you had a thing for const correctness and still your things are glitchy, not working properly, in the actual matter of logic and architecture. So I’ll swear to your ‘cleverness’ for weeks. You know why?

Because I do not care about ‘your code’, deciphering the orchestration of ‘your auto types’ in all its glory and overuse.

I am not enjoying myself trying to discover ‘your encoded intent’ rooted in an immutable ‘structure’ that actually ‘has a problem’. I’d rather leave the office at 5 pm and go having sex with my girlfriend than stay until 10 pm to swipe ‘mister did his fresh with sexy C++’ ass. I just want to understand straight up what you, an employee number, did.

So yeah explicit stuff is not convenient for you. It is for the company.

To wrap this up. The C++ at SpaceX is probably using a very precise and boring subset to get across approval for mission critical standards so I would not be too fast celebrating C++.

I actually think that writing Ada, with all its truly well crafted provisions, is by far more enjoyable than C++. But for that one needs to understand that ‘standard fixed point arithmetic support’, ‘protected objects’ and ‘image attributes for enums’, to name a few, are greater additions to one’s toolbox than ‘auto’, ‘mutexes’ and ‘global enumeration names’.
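For readers who have not met those three features, a small sketch may help. It declares a fixed-point type, an enumeration printed through its `'Image` attribute, and a protected object guarding a counter. All names here (`Volts`, `Machine_State`, `Counter`) are hypothetical and chosen only for the demonstration.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Toolbox_Demo is
   --  Fixed-point type: exact steps of 1/8, no floating-point rounding.
   type Volts is delta 0.125 range 0.0 .. 5.0;

   --  Enumeration type; 'Image gives its textual name for free.
   type Machine_State is (Idle, Running, Fault);

   Supply : constant Volts := 3.0 + 0.25;
   State  : constant Machine_State := Running;

   --  Protected object: the data and its mutual exclusion are declared
   --  together, so callers cannot forget to take a lock.
   protected Counter is
      procedure Increment;
      function Value return Natural;
   private
      Total : Natural := 0;
   end Counter;

   protected body Counter is
      procedure Increment is
      begin
         Total := Total + 1;
      end Increment;

      function Value return Natural is
      begin
         return Total;
      end Value;
   end Counter;
begin
   Counter.Increment;
   Counter.Increment;

   Put_Line ("Supply:" & Volts'Image (Supply));
   Put_Line ("State: " & Machine_State'Image (State));
   Put_Line ("Count:" & Natural'Image (Counter.Value));
end Toolbox_Demo;
```

This single-threaded demo never contends for the counter, so it only shows the syntax; the benefit appears once several tasks call `Increment` concurrently.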

So true, I worked in gamedev with C++ and then C with a company, it was a total mess and crashed all the time. Add onto this the quality of MSVC6, Intellisense worked for about 1 week on each project before it gave up forever, it would also mess up formatting aligning all braces or deleting braces making it a nightmare to read or find bugs.

Yeah, C++ is great, :/ yawn…

When you find yourself trying to correct people about a spelling, the truth is actually that they’re not wrong, they’re just using a less popular spelling.

English doesn’t have an official curator, and we do not substitute whoever coined a word as its curator.

There is nothing incorrect in capitalizing all the letters in a word. You may believe that it is stylistically inferior to other ways of writing, but making stylistic claims that purport to be semantic claims is an incorrect application of English grammar concepts. I say concepts instead of rules, because there is no curator to declare rules. There are competing style guides, but they have to compete for real reasons.

Also, “spaghetti code” means unstructured code. Nobody does that outside of ASM.

He’s correcting capitalisation, not spelling, because people keep typing it like an acronym, it’s not an acronym because it’s a *name* of a person.

Funny. Same guys argue that the ugly(#) case insensitivity of Ada is fantastic, but rant against people writing “ADA” instead of “Ada”.

Btw, acronym would be “A.D.A.”.

(#)Why ugly? Have you studied mathematics or physics?! Do you think that Ω and ω are the same object? In several natural languages, the case is semantically significant.

DragonEgg is dead and has been for years. AdaCore have been porting GNAT to LLVM and it should be released on GitHub this month.

Ada does define Float and Integer, also there is Natural and Positive defined, along with a bunch of Unsigned_x (where x is 8, 16, 32, 64) in the Interfaces package. They are available for further subtyping, you really should define your own types, but you can use these as is.
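A minimal sketch of how those predefined types sit next to types you define yourself. The application types (`Celsius`, `Sensor_Id`) and all object names are invented for the example.

```ada
with Ada.Text_IO; use Ada.Text_IO;
with Interfaces;

procedure Types_Demo is
   --  Predefined types and subtypes from package Standard.
   Any_Int : constant Integer  := -42;
   Items   : constant Natural  := 0;    --  Integer range 0 .. Integer'Last
   Index   : constant Positive := 1;    --  Integer range 1 .. Integer'Last
   Reading : constant Float    := 1.5;

   --  Fixed-width modular type from package Interfaces.
   Raw_Byte : constant Interfaces.Unsigned_8 := 16#FF#;

   --  Application-specific types: distinct from Float and Integer, so
   --  they cannot be mixed with them (or each other) by accident.
   type Celsius is new Float range -273.15 .. 1_000.0;
   type Sensor_Id is range 1 .. 16;

   Temp   : constant Celsius   := Celsius (Reading) + 20.0;
   Sensor : constant Sensor_Id := 3;
begin
   Put_Line ("Integer:   " & Integer'Image (Any_Int));
   Put_Line ("Natural:   " & Natural'Image (Items));
   Put_Line ("Positive:  " & Positive'Image (Index));
   Put_Line ("Unsigned_8:" & Interfaces.Unsigned_8'Image (Raw_Byte));
   Put_Line ("Celsius:   " & Celsius'Image (Temp));
   Put_Line ("Sensor_Id: " & Sensor_Id'Image (Sensor));

   --  Rejected at compile time, because Positive and Sensor_Id are
   --  different types:
   --     Put_Line (Integer'Image (Index + Sensor));
end Types_Demo;
```

`Natural` and `Positive` are subtypes of `Integer`, so they mix freely with it and only add a range check, whereas `Celsius` and `Sensor_Id` are new types that need an explicit conversion.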

Hi, thanks for the post, I’m eager to see more code examples of features you mentioned like defining types, type conversion, safe handling of runtime off-by-1 errors and others. Hope to see this in future posts!

You could go to GitHub and search based on language.

```
for I in Some_Type'Range loop
   null;
end loop;
```

Or

```
type Sprite_Frames is mod 10;

Frame : Sprite_Frames := Sprite_Frames'First;

…

Draw_Sprite (Sprite (Frame));

--  No need to check for wraparound.
Frame := Frame + 1;  --  Ada 202x will be Frame := @ + 1;
```
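A self-contained variant of the second snippet, for anyone who wants to compile it. The drawing call is left out, since `Draw_Sprite` and `Sprite` are not defined in the thread; the loop simply prints the frame counter instead.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Frame_Demo is
   type Sprite_Frames is mod 10;   --  values 0 .. 9, arithmetic wraps around

   Frame : Sprite_Frames := Sprite_Frames'First;
begin
   --  Step through 12 frames; the counter wraps from 9 back to 0 by itself.
   for Step in 1 .. 12 loop
      Put (Sprite_Frames'Image (Frame));
      Frame := Frame + 1;
   end loop;
   New_Line;
end Frame_Demo;
```

This prints the frames 0 through 9 followed by 0 and 1 again, with no explicit modulo or bounds test in the loop body.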

Ada is also used in another domain where any little mistake can lead to serious consequences : trains !

You know, this transportation system that allows to move more than a thousand of passengers (1268 with the TGV Ouigo in France) in one single vehicle at more than 300km/h, or several hundreds in completely automatic subways, with in both cases only 3 or 4 minutes between two trains (remember that at 300km/h you need 3.3km to stop a train !).

It’s also precisely because of its unique specificities preventing errors both during coding and at run-time that it is one of the languages explicitly cited as highly recommended by railway software norms (avionics DO-178 equivalent).

I really like how you ‘bluntly underline’ the critical specs of such system and rub it in the face of anyone who might have had ‘the inspiration of the moment’ to give it a try, a serious shot using … his favorite language? Hahaha!

Oh, never thought my comment could lead to such feeling when I wrote it… My bad !

I meant to say that, if military, avionics and medical are obviously the main fields that come to mind when thinking of critical systems that could cause injury or death, ground transportation systems are to be considered in the same category.

And of course, as great and free tools exist today to play with Ada on computers and MCUs, don’t hesitate to give it a try !

As said on other comments, its syntax was used as a base for other languages (VHDL, PL/SQL, …) that you may use in your future life.

Also, after several projects with Ada maybe you will acquire reflexes and habits like choosing and checking your types precisely, avoid using casting as much as you can, etc… when using ‘more permissive’ languages.

Life is tough.

Then we make lemonade.

So true.

It’s the only language I use for hacking projects. Chiefly STM32s: https://github.com/morbos/STM32

AdaCore released Ada_Drivers_Library and an embedded-runtimes some years ago. I ported both of those to targets they did not originally support. The STM32L4 series. STM32F103 (aka the SoC on the sub $2 Bluepill board). Last year for MakeWithAda I ported to the Sensortile (STM32L476) and got a BLE client/server stack going. Subsequently that stack moved into the new STM32WB SoC (in the WB section of my tree). Lately I have been on the STM32L072 (A CM0+) and will soon add the STM32L151 once the boards arrive.

Ada is a breath of fresh air to an almost 40 year exp C programmer.

That reminds me. The 2019 MakeWithAda contest just began yesterday. Any level of experience is welcome! Have a go: https://www.makewithada.org.

I entered yesterday. The deadline for contest submissions is 2020.1.31

A correction to the above: I have lately been working on the STM32L073 (CM0+) core (another 20k data chip like the F103, but it has 192k of flash vs 128k on the F103). It’s ARMv6, so code density is a bit worse.

I still wish I had the bandwidth back in college to learn Ada. One of the local other colleges offered Jovial, but restricted the “allowed” students because they pretty much despised courses not in line with their “vision”.

As to real world, you mean the one where patient facing systems from major vendors get deployed with fixed & unpatchable OS images or the one where some systems cannot be scanned for missing patches or open ports because it will crash them?

Noob question of the day; What is meant by compile by hand?

Just a guess but I believe this refers to running all your compile/build steps by entering each on the command line (no master script or build automation)

I think I’ve understood some of the potential benefits of Ada for specific use cases, but are there any really simple code examples that show where using something like C could lead to problems that Ada would solve? (Or do the advantages only start to emerge for complex code/systems?).

See https://twitter.com/MayaPosch/status/1171725480891944961?s=20
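One simple case, sketched here as an assumption about the kind of example being asked for: the classic off-by-one write past the end of a buffer. In C the fifth write below would typically compile and silently corrupt whatever sits after the array (undefined behavior); in Ada the index check raises `Constraint_Error` at the faulty line. All names are hypothetical.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Bounds_Demo is
   type Sample_Index is range 1 .. 4;
   type Sample_Buffer is array (Sample_Index) of Integer;

   Samples : Sample_Buffer := (others => 0);

   --  Slot is a plain Integer on purpose: it stands in for an index
   --  that is only known at run time.
   procedure Store (Slot : Integer; Value : Integer) is
   begin
      Samples (Sample_Index (Slot)) := Value;   --  range-checked conversion
   end Store;
begin
   for Slot in 1 .. 5 loop   --  off by one: the buffer only has 4 slots
      begin
         Store (Slot, Slot * 10);
         Put_Line ("Stored slot" & Integer'Image (Slot));
      exception
         when Constraint_Error =>
            Put_Line ("Rejected slot" & Integer'Image (Slot));
      end;
   end loop;

   Put_Line ("Last sample:" & Integer'Image (Samples (Sample_Index'Last)));
end Bounds_Demo;
```

With run-time checks left enabled (the default), slots 1 to 4 are stored and slot 5 is rejected, leaving the buffer intact. Writing the loop as `for Slot in Samples'Range` would avoid the mistake in the first place.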

Strict typing is a pain. It unnecessarily causes program compilation to be more likely to fail until each and every equivalent type is renamed or conversion routines written (!!!!). Implicit conversions for standard types keeps the source code free of calls to conversion routines, calls which would make the program harder to understand.

C++ operator overloading is the right approach, and even in C++ the typing is too strict.

You’re doing it wrong, see https://twitter.com/MayaPosch/status/1171725480891944961?s=20

I currently use Rust for all my embedded projects (for the ESP32, STM32, RISCV, ARM (cortex-m) and VxWorks).

Why should I choose Ada over Rust for my next project ? I’ve used Ada in university, but the Rust compiler generates better code, and the language feels both safer and easier to use to me.

Looking at the Steelman requirements, the only thing missing from Rust appears to be a complete definition of the language. I used to use C before, and while the C language is more defined, there is still so much undefined behavior that I don’t think having a complete definition of the language matters (and probably isn’t possible anyways, unless you count undefined behavior as defined, but if that’s the case Rust does have a spec).

Ada was designed for “programming in the large” – making very large projects with large teams. Rust is very not good at that. Ada has unmatched abstraction capabilities, and extremely rich generics.

Also error handling and containment is so much easier in Ada. First of all, having to return status codes from every function you need error recovery for is really painful (the Rust approach). Otherwise, in Rust you have to panic, which are not designed to be caught and dealt with. They are like exceptions that are meant to terminate the program, which if you ask me, is pretty insane.

The exception mechanism in Ada is much more practical than in most C-like languages. You do not need to “expect” an exception (which is a silly concept if you think about it), you can always put exception handlers not where you except them, but where you know you can recover from them gracefully. This makes Ada software, especially large systems, able to handle errors without going down at all. Rust is not very good at that.
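To make the handler-placement point concrete, here is a small sketch with three layers: the lowest one raises, the middle one neither checks nor forwards anything, and only the top level, which knows a sensible fallback, has a handler. `Sensor_Failure`, `Read_Sensor` and `Average` are invented names, not from any real library.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Recovery_Demo is
   Sensor_Failure : exception;

   --  Low-level routine: detects the problem but cannot fix it.
   function Read_Sensor (Channel : Positive) return Integer is
   begin
      if Channel > 2 then
         raise Sensor_Failure with "no such channel";
      end if;
      return Channel * 100;
   end Read_Sensor;

   --  Middle layer: no handler and no status code to pass along;
   --  an exception from Read_Sensor simply propagates through it.
   function Average (First, Last : Positive) return Integer is
      Sum : Integer := 0;
   begin
      for Channel in First .. Last loop
         Sum := Sum + Read_Sensor (Channel);
      end loop;
      return Sum / (Last - First + 1);
   end Average;

   Result : Integer;
begin
   --  Top level: the one place that knows how to recover.
   begin
      Result := Average (1, 3);
   exception
      when Sensor_Failure =>
         Put_Line ("Sensor failure, falling back to channels 1 .. 2");
         Result := Average (1, 2);
   end;

   Put_Line ("Average:" & Integer'Image (Result));
end Recovery_Demo;
```

The first call fails on channel 3, the handler retries with the two working channels, and the program carries on with an average of 150 instead of terminating.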

Lastly, Rust was created to deal with the same kinds of problems that Ada was designed to deal with over 30 years ago. And Ada has been not just continually updated that entire time, it has been used IRL for that entire time. Ada is proven, and very good at its goal. The truth is, Rust is basically necessary if what you want is reliable software.

It’s really a popularity contest. And in the world of programming languages, what wins popularity contests is:

1. Looks like C

2. New and shiny

3. Uses ‘fn’ instead of “function” cuz “muh fast typing”.

You seem to know a bit about Rust, would be useful if you could write up a Rust Vs Ada type document that these Rust people keep asking for (because they’re too lazy to look into Ada themselves).

I certainly considered blogging about Rust v Ada many times, but I’m usually unsure if it’s a good idea to get into a language war.. I’m tempted though..

“The truth is, Rust is basically necessary if what you want is reliable software.”

Did you mean to say “The truth is, Ada is basically necessary if what you want is reliable software.” ?

Yes that was a typo! I meant “unnecessary” in that Rust has reason to exist at all. As in, if you really care about building reliable software, Ada is very obviously a superior choice in relation to Ada.

Thanks for catching that, I hope that didn’t confuse too many people.

You’ve just confused people more :D

I assume you meant to say that “Rust has *no* reason to exist” and “Ada is very obviously a superior choice in relation to *Rust*?”

The whole debate about the language definition seems a bit silly to me; C is so well defined that only retired or effectively-retired-senior-levels memorize it. And support engineers, but mostly because they have to sound Authoritative. They cite the standard, but then the solution doesn’t use that knowledge; it uses knowledge from the compiler documentation.

It seems to me that the set of Best Practices being defined is both more important, and more practicable.

In practice undefined behavior is only a problem if you’re not using any set of Best Practices. If you treat warnings as errors, you probably never even tried to do those things.

You don’t know what the hell you’re talking about. The C specification is FULL of “UNDEFINED” behaviour like most language specs. You truly are clueless.

I have worked at several different software houses.

Of them, one house wrote code that could kill people if the code supplied the wrong pharmaceuticals to the wrong patient or ordered up the wrong procedure or protocol for a surgery. Quick doesn’t work in that environment. You test and test and test and you make it work right or the legal liability once the product hits “real world” will crush your company. It was literally life or death to get the code right, from both a moral and legal perspective.

Another “house” I worked for developed code for machines meant to kill people. Lots of people all at once. You don’t want something like that falling back on your own people, so again, you test, test, test and get it right. There’s no “we’ll fix it in the next release” with that.

You can see from my experience, I am not at all for slinging code out fast and dealing with bugs later, and would never hire or collaborate with anyone with that attitude. The lives of the people you’re affecting matter more than the risk of getting fired by an angry boss who’s yelling at you to rush, and people who cannot see the plain necessity in making sure people’s lives are helped, not hindered (and certainly not shortened) by such, are not people I want to work with, ever.

Are you still active?

The availability of great tools is a must in the dev world. There are not enough ADA devs to encourage the open source or proprietary world to provide and evolve the best tools for ADA. Also, where do you find ADA developers? And when they come on board how long will they stay before leaving for high paying jobs using modern software. Look at the world of COBOL.

“The availability of great tools is a must in the dev world”

Yes, having great tools is nice, but there are cases where you simply can’t have the latest or greatest at your disposal. For example, if you are developing embedded software, you usually only need your compiler and debugger. Sadly, sometimes those may be the only tools that your work may provide to you regardless! When you don’t have access to all the tools, then the choice in language can become even more significant. Ada helps to prevent many common bugs found in other more popular languages, so your time in the debugger will tend to be less. That also means less cost for maintenance and helping to avoid looking bad to the customer. But let me raise a question, do some of the great tools you have in mind exist because they are helping to compensate for deficiencies in the more popular languages? Static code analysers for C and C++ are examples that come to mind.

“…where do you find ADA developers? And when they come on board how long will they stay before leaving for high paying jobs using modern software”

This is the wrong thinking. First of all, a company should hire people who possess important domain knowledge and who can adapt to different things, such as tools, processes and even computer languages. If a person can only think and write in computer language X, then they are limiting themselves. I’ve actually heard the fear of retaining new talent if they had to program in Ada. That is ridiculous! There are far more significant reasons why someone would leave (e.g. $$$, lack of respect, poor life/work balance, etc). If a person left because of the computer language they had to use, then they weren’t serious enough to stay anyway.

BTW, the language used does not dictate how “modern” a software is. I would say it’s more about how well the software addresses current and future needs and problems. People often use the term “modern” as a superficial and lazy attempt to make something else seem inferior. Most of what is in “modern” languages has been around for decades in some form or another.

I made a career of writing in (IBM mainframe) PL/I, processing massive amounts of data. I knew the language well enough to know how to write efficient and reliable code, elegantly presented. (There was a compiler option to provide equivalent pseudo-assembler code to check the efficiency of alternative equivalent code constructs, of which there were always many.) I can continue to sing the praises of PL/I, but I do not program any more. (Don’t get me started on the utility and beauty of SuperWylbur, a teleprocessing monitor – not TSO.)

I know next to nothing about ADA, but I had to get my 2 cents in.

APL was great. And FORTH.

I believe one of my graduate professors, Alvin Surkan, helped create APL. Fascinating language, with a huge number of nestable primitive operations. It worked best with an IBM 2741 terminal, Selectric-based with a special typeball which could create the operation symbols by backspacing and overstriking. The programmers’ gimmick was to get as much done in as few lines of code as possible. Like a generalized computed solution of n equations in n unknowns in 1 brief line.

I wrote my first device drivers on Z80 systems in assembly language, using a lot of my own macros and subroutines. Those constructs began to look like a higher level language to me, and the discipline that was forced upon the coding came from the narrow capabilities of the device, based on its design and implementation. Of course, my code was familiar to me but foreign to others. Then I tried two languages – Ada and Forth. Ada promised the world, and Forth promised “tighter code than assembly language,” which may have been true if you wrote sloppy assembly. Ada reminded me of PL/1 but was very inhibiting to produce, like trying to write a book while having to edit and proof it at the same time. Forth, on the other hand, was just the opposite; I called it ‘write only’ because once I wrote it, I couldn’t read it. The point is that programming languages are written for humans, not computers. Like human languages, each has its strengths and weaknesses with proponents and those who “just don’t get it.” If a language inhibits you from using it, it is not a good language for you. Eventually, computers will code themselves and use virtual systems analyst AI to get the specifications. That is a long way off, but it has been evolving since the ’70s. I remember a 1980s MIPS compiler that would not only find basic coding problems but also correct them. Now, IDE editors can predict your code while you write it. This all points to machines eventually solving many of the development problems we have seen over the past 70 years. Of course, there will be new problems, too. After all, what would life be without problems to solve?

“IDE editors can predict your code while you write it”

Which IDE is that? Hope you are not talking about context aware word completion.

Seriously, it’s time to stop with these articles. Use the language(s) best suited for the task/job, not what someone, who’s never programmed a day in their life, tells you what to use in some blog.

Like when a manager tells you to use C or C++ because “everyone else uses it?”

It depends who “everyone else” is, though.

In the case of articles like this, it is just random developers.

In the case of a manager talking about C/C++, they might mean “the people who write support libraries for our problem domain.”

I learned ADA in college because our teacher literally wrote the official French book about ADA and made us buy it. It’s a decent language but there’s a huge lack of libraries available. And yes, you can interface ADA with C but it’s a lot of work where you could simply directly write in C…

You’re right there aren’t nearly as many libraries for Ada, and it’s been an ongoing issue that I’m afraid won’t go away in the near future.

While creating an Ada binding can be a challenge, some of that challenge is because of the questionable C prototype that existed in the first place. Questions such as: Is that pointer parameter for a single item or a list? Is NULL allowed? Does the function really want to allow negative values? etc. I’ve seen plenty of cases where it really wasn’t clear. Fortunately, once you figure all that out, your Ada binding can impose the proper restrictions (e.g. forbid NULL completely, use arrays if necessary, define numeric types with proper ranges, etc). This can be worthwhile if you expect to reuse the bindings on other projects.
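The same idea of a binding that pins down what the C prototype left vague can be sketched in Rust, since that is the other language under discussion in this thread. Everything here is an assumption made for illustration: `raw_set_gain` is a hypothetical stand-in for a C function (written in Rust so the sketch runs without linking any C code), and `Percent` and `set_gain` are invented names. An Ada binding would express the same restrictions with a constrained subtype, an array parameter and a `not null` access type.

```rust
use std::convert::TryFrom;

// Stand-in for a hypothetical C prototype such as
//   int set_gain(const uint8_t *samples, int count, int percent);
// The prototype alone does not say whether NULL, a negative count, or a
// percent above 100 is allowed. Here it returns -1 for all of those and
// otherwise returns the number of samples it was given.
unsafe fn raw_set_gain(samples: *const u8, count: i32, percent: i32) -> i32 {
    if samples.is_null() || count < 0 || !(0..=100).contains(&percent) {
        return -1;
    }
    count
}

// A percentage that can only be constructed with a value in 0..=100.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Percent(u8);

impl Percent {
    fn new(value: u8) -> Option<Percent> {
        if value <= 100 {
            Some(Percent(value))
        } else {
            None
        }
    }
}

// The binding: a slice can never be NULL and carries its own length,
// and the Percent type rules out values outside the valid range.
fn set_gain(samples: &[u8], percent: Percent) -> Result<usize, &'static str> {
    let count = i32::try_from(samples.len()).map_err(|_| "too many samples")?;
    // SAFETY: the pointer and count both come from a valid slice.
    let rc = unsafe { raw_set_gain(samples.as_ptr(), count, i32::from(percent.0)) };
    if rc < 0 {
        Err("raw call rejected its arguments")
    } else {
        Ok(rc as usize)
    }
}
```

Callers of `set_gain` cannot pass NULL, a negative count or 150 percent, so those questions only have to be answered once, by whoever writes the binding.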

Sorry, I can’t support this enthusiasm about Ada and IMHO it is quite natural that Ada does not play a big role today.

I learned and practised Ada during my university years – but for my work I prefer C++. Why? Because C++ offers me the necessary level of abstraction and reusability of object-oriented programming in a practical, usable way.

Ada is IMHO too tightly coupled to its Pascal-like general syntax to incorporate the later concept of object-oriented programming in a clean, fluent way.

C++ has proven itself in my work as a way to write safe, efficient and reusable code. Using the concepts of object-oriented abstract datatypes one can create datatypes which do much more for verifying their setting than just range checks.

Another important factor for safety in big modern programs is the clarity of the code. For this purpose, objects representing clean concepts on the current abstraction level in combination with objects representing abstract datatypes for the arguments are very valuable. Combine this with operator overloading (clarity!), strict enumerations and value literals and you get a decent tool for managing complex problems.

And especially for embedded systems I’m really thankful for constexpr methods and constructors. Using these one can create register content values from clear, abstract notations at compile time.
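To make the compile-time register idea concrete, here is a minimal sketch. It uses a Rust `const fn` rather than a C++ `constexpr` function, purely to keep to one language for the examples in this thread; the technique is analogous. The register layout, the `Clock` enum and the `timer_ctrl` function are all hypothetical and do not describe any real device.

```rust
// Hypothetical timer control register layout, for illustration only:
//   bit 0       enable
//   bits 1..=2  clock source
//   bits 4..=7  prescaler
#[derive(Clone, Copy)]
enum Clock {
    Internal = 0,
    External = 1,
}

// Builds the register value from a readable, abstract description.
// When used to initialise a const, the whole computation (including the
// range check) happens in the compiler, not on the target.
const fn timer_ctrl(enable: bool, clock: Clock, prescaler: u8) -> u8 {
    assert!(prescaler < 16); // the prescaler must fit in four bits
    (enable as u8) | ((clock as u8) << 1) | (prescaler << 4)
}

// Evaluated at compile time: 1 | (1 << 1) | (3 << 4) = 0x33.
const TIMER_CTRL_INIT: u8 = timer_ctrl(true, Clock::External, 3);
```

Changing the last argument of the `TIMER_CTRL_INIT` initialiser to 16 would turn the failed check into a compile error rather than a wrong value written to hardware, which is the kind of safety the comment above is describing.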

I’ve written far more C++ than Ada code over my career, but I still prefer Ada’s OOP. The language already supported abstract data types since the beginning and the OOP features were a natural incremental enhancement to it. Sure the syntax is different than C++, which you pointed out, but the capabilities are very similar (minus multiple inheritance). If you were turned off by the lack of object.method notation, you should know that was added years ago in Ada 2005.

In Ada, I like how I can create a tagged type (i.e. Ada’s equivalent of a C++ class) at one point and then later add additional routines or other closely related types without the need to derive from the original tagged type or being forced to pollute the original declaration with friends. I also like how creating operators to have the tagged type on the left or right side uses the same syntax that was there since day one.

All the “research” (eg, serious analysis) shows that TDD is irrelevant to code quality. Whether writing tests before or after the implementation works better for you, you end up in the same place in the cases where you wrote good tests and good code.

Similar for design patterns; applying an applicable design pattern is good, but simply throwing named design patterns at a problem is not a useful way of getting there. What determines the utility of using named design patterns is something deeper; having a developer that understands those patterns well. A developer who hasn’t done that isn’t going to give a better result by doing it. And a developer who does have that knowledge isn’t going to save time by not doing it.

In the end, which instructions you ask the CPU to crunch will determine not only the quality of the design, but the complexity of the code and the difficulty of both the implementation and maintenance phases.