Smartphones and other modern computing devices are wonderful things, but for those with disabilities interacting with them isn’t always easy. In trying to improve accessibility, [Dougie Mann] created TypeCase, a combination gestural input device and chording keyboard that exists in a kind of symbiotic relationship with a user’s smartphone.

With TypeCase, a user can control a computer (or the smartphone itself) with gestures, emulate a mouse, or use the device as a one-handed chording keyboard for text input. The latter provides an alternative to voice input, which can be awkward in public areas.

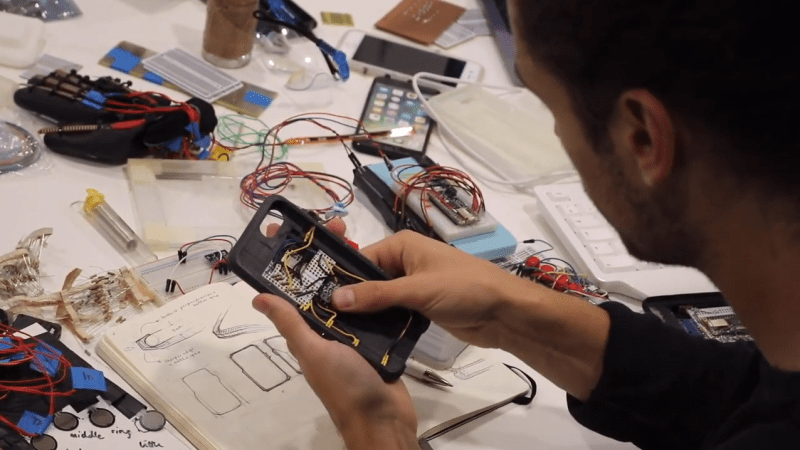

The buttons and motion sensors allow for one-handed button and gestural input while holding the phone, and thanks to Bluetooth connectivity the device behaves just like a wireless mouse or keyboard. The electronics consist mainly of an Adafruit Feather 32u4 Bluefruit LE, and [Dougie] used 3D Hubs’ on-demand printing service to create the enclosures once the design work was complete. Since TypeCase doubles as a protective smartphone case, users have no need to carry or manage a separate device.

TypeCase’s use cases are probably best expressed by [Dougie]’s demo video, embedded below. Chording keyboards have a steeper learning curve than conventional ones, but they can be very compact. One-handed text input does remind us somewhat of a very different approach that had the user make gestures in patterns reminiscent of Palm’s old Graffiti system; perhaps easier to learn but not nearly as discreet.
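For readers who haven’t met a chording keyboard before, the idea is that combinations of a few buttons stand in for individual keys, which is why so few buttons are needed and why the combinations have to be memorized. The sketch below is only a rough illustration of that idea: the chord table, the `decode_chords` function, and the emit-on-release behavior are all assumptions made up for this example, not TypeCase’s actual layout or firmware.

```python
# Hypothetical chord table: each bit stands for one button
# (bit 0 = first button, bit 1 = second button, and so on).
# This mapping is invented for illustration; it is NOT TypeCase's layout.
CHORDS = {
    0b00001: "e",
    0b00010: "t",
    0b00011: "a",
    0b00100: "o",
    0b00101: "n",
    0b00110: "i",
    0b01000: " ",
}


def decode_chords(samples):
    """Turn a sequence of button-state bitmasks into text.

    Assumption: a chord is emitted when all buttons are released
    (state returns to 0), using the union of every button pressed
    since the previous release. Unknown chords decode to "?".
    """
    text = []
    held = 0
    for state in samples:
        if state:
            # Accumulate buttons so they need not be pressed simultaneously.
            held |= state
        elif held:
            text.append(CHORDS.get(held, "?"))
            held = 0
    return "".join(text)


if __name__ == "__main__":
    # Press button 2, add button 1, release; then press button 2, release.
    print(decode_chords([0b00010, 0b00011, 0, 0b00010, 0]))
```

With five buttons a scheme like this has 31 possible non-empty chords, enough for the alphabet plus a few extras, which gives a feel for the trade-off between compactness and learning effort.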

BTW, Palm’s Graffiti is now owned by ACCESS Co. and is still available for Android in the Play Store.

It is a mess (at least, it was hellishly slow when I first tried it with rather high hopes), and there is no Cyrillic (which was available on Palm with cyrhack); that was what broke it for me.

I use MessagEase instead. It is about as fast once you learn it, and it is finger-oriented instead of ‘stylus-oriented’.

But I still miss Graffiti.

Hmm, talks about being “inclusive” and useful for amputees – but only if you’re right-handed, or have lost your left hand! What about us lefties?

Still, impressive piece of design work.

Wouldn’t it just be a simple matter of mirroring the print?

The link is down; too many visits, I would guess.

Mobile braille displays and keyboards exist today. Braille has its issues, but has been optimized to ensure the differentiability of its different pin patterns.

How is the encoding used better than Braille? How fast is the learning process for users? How would people transition between Braille-based systems and this system? What happens when you put your phone down or in your pocket and it hits buttons? How can this same encoding be adapted to different devices if it is geometrically constrained to phone proportions? How would upgrading your phone, and hence modifying its geometry, impact the user’s understanding of the encoding being used? What happens if the user uses their other hand for some reason; is the mapping still intelligible?

Also, saying “I used a user-centered design approach” for a system aimed at helping out blind people without engaging with blind users in the design process is nothing more than plugging a design buzzword.

I see here a very nice design exercise and a very neat implementation, but without empirical proof that there is any advantage of using the proposed encoding vs existing solutions for blind users, this is nothing more than portfolio padding.