Is there no end to the dongle problem? We thought the issue was with all of those non-USB-C devices that want to play nicely with the new MacBooks that only have USB-C ports. But what about all those USB-C devices that want to work with legacy equipment?

Now, some would say just grab yourself a USB-C to USB-A cable and be done with it. But that defeats the purpose of USB-C, which is One-Cable-To-Rule-Them-All[1]. [Marcel Varallo] decided to keep his 2011 MacBook free of dongles and adapter cables by soldering a USB-C port onto a USB 2.0 footprint on the motherboard.

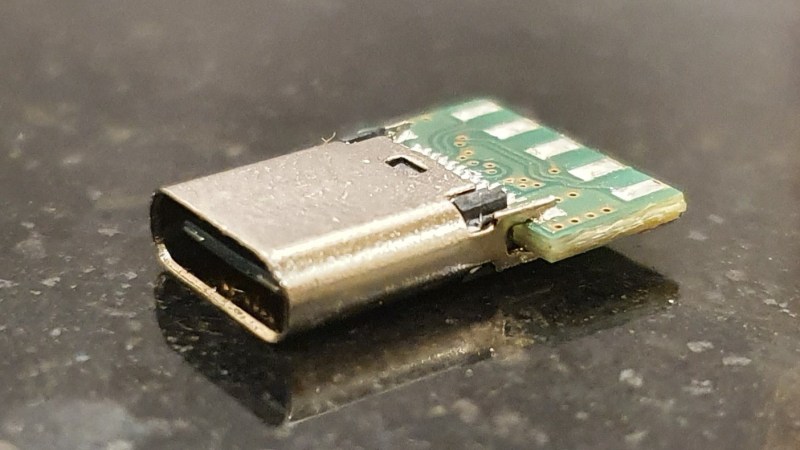

How is that even possible? The trick is to start with a USB-C to USB 3 adapter. This vintage of MacBook doesn’t have USB 3, but the spec for that protocol maintains backwards compatibility with USB 2. [Marcel] walks through the process of freeing the adapter from its case, slicing off the all-important C portion of it, and locating the proper signals to route to the existing USB port on his motherboard.
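To make "locating the proper signals" a little more concrete, here is a rough sketch of which pins on a full 24-pin USB-C receptacle carry the four USB 2.0 signals. The pin names follow the public Type-C pinout; the dictionary and helper names are made up for illustration, and nothing here is taken from [Marcel]'s write-up, so treat it as a reference aid rather than a wiring guide for his particular adapter.

```python
# Sketch only: pin names follow the public USB Type-C receptacle pinout.
# The variable and function names are hypothetical, chosen for this example.

# Pins that carry the classic four-wire USB 2.0 connection.
USB2_PINS = {
    "VBUS": ["A4", "A9", "B4", "B9"],
    "GND": ["A1", "A12", "B1", "B12"],
    "D+": ["A6", "B6"],   # duplicated so the plug works in either orientation
    "D-": ["A7", "B7"],
}

# Configuration channel pins: still needed so the attached device is detected.
CC_PINS = ["A5", "B5"]

# Pins a USB 2.0-only port can leave unconnected.
SUPERSPEED_PINS = ["A2", "A3", "A10", "A11", "B2", "B3", "B10", "B11"]
SIDEBAND_PINS = ["A8", "B8"]


def pins_unused_for_usb2():
    """Return the receptacle pins that a USB 2.0-only hookup does not use."""
    return sorted(SUPERSPEED_PINS + SIDEBAND_PINS)


if __name__ == "__main__":
    for signal, pins in USB2_PINS.items():
        print(f"{signal:5s} -> {', '.join(pins)}")
    print("CC    ->", ", ".join(CC_PINS))
    print("Unused for USB 2.0:", ", ".join(pins_unused_for_usb2()))
```

The duplicated D+ and D- pins are what let a USB 2.0 connection work regardless of which way up the plug goes in.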

[1] Oh my what a statement! As we’ve seen with the Raspberry Pi USB-C debacle, there are actually several different types of USB-C cables which all look pretty much the same on the outside, apart from the cryptic icons molded into the cases of the connectors. But on the bright side, you can plug either end in either orientation so it has that going for it.

Can’t see the end result, so I’m sorta disappointed. But since he hacked the adapter, it’s a hack, nonetheless ;-)

Cutting up a cheap, possibly defective, adapter and then showing a photo of an unmodified circuit board counts as a hack?

In a post-Arduino / Pi / Python world, turning on the laptop is a hack.

Yes, it counts as a literal hack.

Please, don’t do it. These adapters are against the spec. You can fry your device. If you do this, at least post it as a Fail of the Week.

See: https://medium.com/@leung.benson/why-are-there-no-usb-c-receptacle-to-usb-b-plug-or-usb-a-plug-adapters-f97736bb62be

Add a small MOSFET to only connect power when a valid device is attached.

Yet companies break those rules. SanDisk, for example, sells the “Extreme Portable External SSD” in sizes from 250GB to 2TB, and it comes with a short Type-C to Type-C cable plus a C to A adapter for connecting to computers that do not have a C port.

That’s okay as long as you use that adapter exclusively with the hardware it came with (which is probably also stated somewhere in the fine print).

You can’t really do anything novel that’s dangerous with an A->C adapter. You could combine two of them into an A->A cable. You can buy A->A cables off the shelf already, so any risks already exist (the standard does not allow A->A either).

I’ve got one of those drives… I always wondered why it died, I used a Micro-USB cable. Makes sense now. Maybe they should have made the warnings a little more clear on the drive itself…

One solution that does not involve making the adapter active is to make the adapter captive, that is it’s linked to the cable in such a way that it can only be used to change the end, not connect some other device directly to the adapter. Not too sure but such a cable might actually be able to meet spec if it meets A-C spec with the adapter used and C-C spec with the adapter swung out of the way.

Hot take that I know will ruffle plenty of feathers:

USB A is vastly superior to USB C in any usage scenario, except where the device acts as both “master” and “slave”, and where you’re having a hard time figuring out the orientation of the plug and socket.

But regarding the latter, I’ve acquired a sixth sense for that after growing up with SCART and having CRT TVs in recessed “pockets” in large shelves.

You soon develop tricks for minimizing your reacharound time that proved handy for USB A after plugging in SCART plenty of times in such situations.

What about the scenario where the USB connection is used for high power charging? Please explain how this is done with a USB-A connector. Also, the USB-C connector has provision for direct video output to a monitor. Again, how do you do this with USB-A?

USB PD 1.0 worked over USB A and B connectors, allowing up to 100W using cables that were specifically built for the task but had normal connectors on each end. As for video output, DisplayLink USB video cards could cover that.

I’m not saying these are good solutions, but they exist. I’m thinking about them at the moment since the otherwise interesting Asus Zenbook Duo has a USB-C port that can’t be used to charge the laptop, or carry video output, or carry Thunderbolt 3. I’m going to need to find a USB-C + PD to barrel jack adapter and figure out how a dock stuffed full of USB 3 DisplayLink devices would work.

AFAICT, every single problem that people blame on “USB-C” is actually the fault of shitty manufacturers trying to cheap out by omitting important resistors. Unscrupulous penny-pinching isn’t the fault of the spec.

Why doesn’t this happen with USB 3.0? Maybe the costs for USB-C are too high.

Yeah those surface mount resistors cost one tenth of a cent each and you need two of them.
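For anyone wondering what those resistors actually do, here is a back-of-the-envelope sketch. The source side pulls the CC line up to VBUS through Rp, the sink pulls it down through Rd (5.1 kΩ), and the resulting divider voltage tells the sink how much current it may draw. The Rp values below are the commonly quoted ones for a 5 V pull-up; the function name is made up, and this ignores resistor tolerances, so take it as an illustration and not a compliance check.

```python
# Sketch only: nominal resistor values, no tolerances, 5 V pull-up assumed.
RD_OHMS = 5_100  # pull-down on the device (sink) side

# Pull-up on the source side and the current it advertises to the sink.
RP_OHMS = {
    "default USB power": 56_000,  # what a legacy A-to-C cable should use
    "1.5 A": 22_000,
    "3.0 A": 10_000,
}


def cc_voltage(rp_ohms, vbus=5.0, rd_ohms=RD_OHMS):
    """Voltage the sink sees on CC from the Rp/Rd divider (hypothetical helper)."""
    return vbus * rd_ohms / (rp_ohms + rd_ohms)


if __name__ == "__main__":
    for advert, rp in RP_OHMS.items():
        print(f"Rp = {rp / 1000:>4.0f} kOhm -> CC = {cc_voltage(rp):.2f} V ({advert})")
```

A cable that uses the 10 kΩ value while hanging off an old USB-A port tells the device it may pull 3 A from a port that was never designed to supply it, which is how cheap cables end up frying things.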

I’m assuming krater meant licensing costs.

A spec that encourages manufacturers to cheat in a way that’s hard to detect and potentially fatal to the parts is a problem with the spec. Any other assumption is just wishful thinking.

Yup. Much like how software APIs should be designed to be easy to use and hard to misuse, a good hardware spec should be easy to do right and hard to cut corners on.

USB B and mini connectors are far less annoying – they have a clear sidedness you can feel!

USB C, for me, is asking for trouble: too many specs to select from, and complex cables. It’s got some positives, sure, but it’s getting away from being a simple, reliable choice whose implementation is hard to screw up.

The author makes no sense by saying:

“So I have this Macbook Pro 17 inch from 2011, you know…from when Apple seemed to give a shit about making well built and powerful laptops. It’s the same one I repaired the graphics card on by putting the motherboard in the oven on high, several times. ”

What? Well built -> I had to shove my laptop in the oven to reflow it.

I see you’re not familiar with the fanboi. They make about as much sense as flat earthers.

Not Apple’s fault nVidia couldn’t keep their GPU chip soldered to its BGA.

I understood him perfectly to mean real laptops, not ones crammed with low power processors that drop out of turbo in a matter of seconds and have minimal (or just one!) external ports.

If you are using it to render or simulate, you need more cooling than an ultrabook usually provides, and a higher TDP.

Actually, yes, it is Apple’s fault if they are so worried about making machines thin that they can’t cool their parts. That’s called form over function, and Apple has a nasty history of doing just that.

PS: there are many laptops featuring the same nVidia chip that didn’t fall off the board.

I’d say 50/50, and the ones that didn’t fail likely survived because the GPU was never used by the owners.

nVidia promised one thing, and reality proved different. Those manufacturers that picked aggressive throttling or fan settings, or low TDP modes, may have survived.

(Cough cough, buy a ThinkPad)

No need to rag on Apple

nVidia released specifications of TDP, proper thermal design application notes, etc., so it was up to Apple to do due diligence and follow them (or at the very least do an FMEA with support as to why they felt it was reasonable to ignore certain aspects without considerable risk of failure). Ergo, yes, in the end it was Apple’s decision to design in a way that placed operating conditions of the GPU out of spec, in an attempt to increase performance while simultaneously decreasing thermal allowance at the expense of long term reliability. Every manufacturer deals with similar issues in the design control process; Apple is not any different.

“nVidia released specifications of TDP, proper thermal design application notes, etc.”

No, they did not. If you google for this you will see that every laptop manufacturer has problems with overheating Nvidia chips. If the specs were correct you would expect at least one of them to get it right. The fact that every single manufacturer has problems indicates that Nvidia has failed to communicate the required information.

@N, To make that claim I assume you work for a company which has obtained the technical literature under NDA so you can surely say nVidia hasn’t provided such info? Isn’t it a much more reasonable assumption that most laptop manufacturers tend to make the choice to design thermals as an afterthought, than a chip manufacturer to not provide documentation on a very well explored discipline such as thermodynamics?

I make my claim based on googling “Nvidia laptop overheat” and seeing multiple complaints from every laptop maker.

As they say “the proof is in the pudding”

The reason you can’t just buy bare Nvidia chips from Mouser or Digi-Key is because Nvidia wants a close relationship with their customers, to approve designs and provide guidance. If the laptop makers are producing bad video designs, Nvidia has only themselves to blame for not providing proper guidance to its customers.

The end decision is in the manufacturer’s hands, whether it’s a problem with heavily budgeting engineering or a poor QMS. nVidia only publishes and provides technical data; they can’t hold a gun to a manufacturer’s head and force compliance. Sounds like you are basing your opinion on the perspective of an end user, and not on how things actually work in engineering design and manufacturing.

“nVidia only publishes and provides technical data; they can’t hold a gun to a manufacturer’s head and force compliance.”

Yes they can! They most specifically can deny shipments to companies that don’t adhere to the published specifications, because laptop vendors must sign a contract agreeing to this before they can even get the specs. Again, this is why you can’t buy Nvidia chips from Mouser; you must sign a contract with Nvidia.

“Sounds like you are basing your opinion on the perspective of an end user, and not on how things actually work in engineering design and manufacturing.”

I am basing my knowledge on my experience working for computer hardware manufacturers who had to jump through many many hoops to get contractual approval from chip vendors before the product could be shipped. Chip companies do not want to be associated with poor quality manufacturers and they use contracts to keep their customers in line.

Clearly the contractual obligations don’t include enforcing good/conservative thermal design because, like you said, many manufacturers aren’t going that route and the end result speaks for itself. Yeah, they could put a clause in the contract to pressure manufacturers, but that’d be risky as that may push customers to their competition. I really don’t get where you got the idea that a silicon chip manufacturer can specify technical design beyond what’s suggested in the datasheet.

“Yeah, they could put a clause in the contract to pressure manufacturers, but that’d be risky as that may push customers to their competition.”

What other competition is there for high performance video chips? They will all make you sign a contract where they have to sign off on your design before they ship you chips. None of these places want to be associated with poor quality hardware.

Wow, can’t force your opinion as fact, so you’re resorting to suggesting I’m using drugs? Really classy, buddy. Why would you overlook the much higher likelihood that market pressures are favoring higher performance and smaller devices with laptop manufacturers, and instead lay the issue at the feet of a chip manufacturer? Look, I’ve said what I wanted to say. You can continue arguing with yourself; I’m done wasting my time in a myopic argument with no benefit to either of us.

Aren’t there M.2 to USB 3 adapters? A hub, a USB Wi-Fi dongle inside the case attached to the original antennas, and one port routed to a USB-C port on the outside would be impressive.

Woah, you’re sounding mighty aggressive there. Please relax.

You asked how power and video could be done over USB type A, I pointed out options. I didn’t say they were good ones.

As for getting a MacBook and being done with it, those machines have their flaws. I do love having a proper full-featured USB-C implementation, but then Apple go and whitelist the TB3 chips they’ll connect to and leave a bunch of popular eGPU or desktop docks off the list in the process, boo. Let’s not mention the keyboards from 2016-2019 either, or that Docker on macOS isn’t as featureful as on a Linux host (try passing in the Docker socket, no can do), or that until we have keyboard drivers (!!!!) Linux is basically unusable on MacBooks.

I found a company on Alibaba that has genuine Mini PCIe to USB C adapters. They’re not like those cheap “USB 3.0” Mini PCIe adapters that are simply a 3.0 A connector attached to the USB 2.0 pins. The board is pretty long with some chips on it and has a little extra power connector.

So there’s a way to hack real USB C into a laptop.

Despite Mini PCIe and ExpressCard being essentially functionally identical, and there being desktop PCIe x1 to USB C cards, the company making those Mini PCIe to USB C adapters insisted that a USB C ExpressCard “isn’t possible” because PCIe x1 can’t meet the data transfer rate.

If that’s so, then why are they making a device that could be reconfigured to fit the ExpressCard form factor? Why are there so many companies making PCIe x1 USB C cards for desktops?

Seems to me the answer is they just don’t want to make a USB C ExpressCard.

I wouldn’t care if it can’t meet the maximum data transfer rate. The vast majority of USB devices cannot come near the maximum transfer rate of whatever USB version they are.
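To put rough numbers on the "PCIe x1 can’t meet the data transfer rate" claim, here is a quick sketch comparing usable line rates after encoding overhead. It assumes the ExpressCard slot exposes a single PCIe lane and that the lane’s generation depends on the laptop; the table keys and the helper name are made up for this example, and protocol overhead above the line encoding is ignored.

```python
# Sketch only: line rate after encoding overhead, ignoring protocol overhead.
# Each entry is (raw rate in Gbit/s, payload bits, total bits per encoded block).
LINKS = {
    "PCIe 1.x x1": (2.5, 8, 10),
    "PCIe 2.0 x1": (5.0, 8, 10),
    "USB 3.0 (5 Gbps)": (5.0, 8, 10),
    "USB 3.1 Gen 2 (10 Gbps)": (10.0, 128, 132),
}


def payload_gbps(raw_gbps, payload_bits, total_bits):
    """Usable bit rate once the line encoding is accounted for (hypothetical helper)."""
    return raw_gbps * payload_bits / total_bits


if __name__ == "__main__":
    for name, params in LINKS.items():
        rate = payload_gbps(*params)
        print(f"{name:24s} {rate:5.2f} Gbit/s  (~{rate * 1000 / 8:.0f} MB/s)")
```

By this arithmetic a single PCIe 2.0 lane keeps up with a 5 Gbps USB port, while a PCIe 1.x lane or a 10 Gbps port would be bottlenecked, which still leaves plenty of headroom for most real devices.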

The main reason to have a USB C ExpressCard is to be able to use USB C devices that are either not compatible with anything but real USB C, or have limited functionality when connected via an adapter or hub to a USB 3.x port.

Currently I have a USB 3.0 hub with a USB C cable permanently attached. It absolutely will not work at all connected to a 3.0 port with an adapter, but plugged into a USB 2.0 port it will function as a USB 2.0 hub. It must have a real USB C port to function to its full capability.

What the ExpressCard people need to do (and should have done *years ago*) is introduce a 54X form factor that has a connector using the full width of the card, and make it allow for extra PCIe lanes and a beefier power supply. 54X wouldn’t help older laptops, but it’d give the laptop expansion card a whole new lease on life for new models, especially for eGPU.

The reason that nobody is making ExpressCard USB-C ports is that ExpressCard is a dead standard. The last Mac to have an ExpressCard slot was back in 2011. In fact, I found an article from 2011 saying that manufacturers were considering dropping ExpressCard support.

Additionally, you seem pretty confused about what USB-C actually is. You keep talking about ‘real USB-C port’. USB-C is a connector standard, not a communication standard. A USB-C port that only supports USB 2.0 is indeed a ‘real’ USB-C port. You keep referring to USB-C where you should actually be saying USB 3/3.1. You need to separate USB-C from USB 3; they are two totally different things.

Well, that was a good project for this year. And next year he gets to do it again to update his computer to whatever connector the industry “standardizes” on next!

Don’t get me wrong, I do think USB-C (minus the spec breaking issues) is the best connector so far. But I am rather tired of all my adapters being obsoleted so often. I want a connector that lasts as long as the headphone jack did!

Better yet, Fahnestock clips forever, baby!