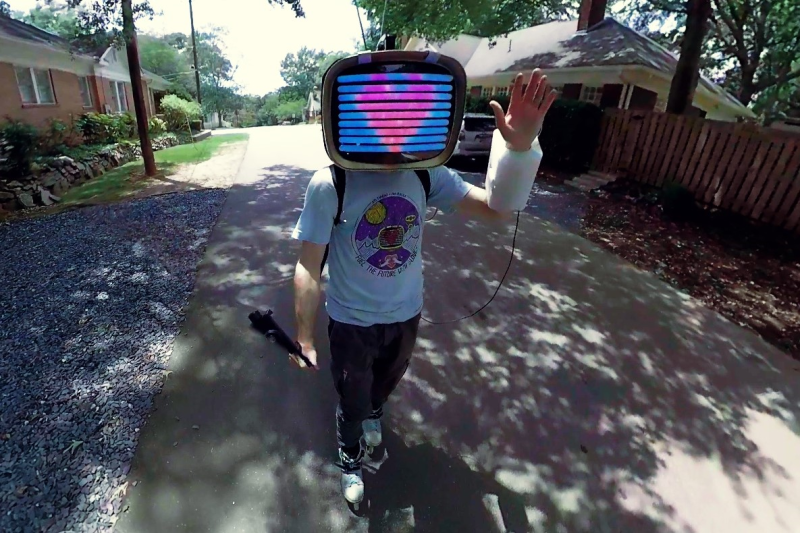

Gesture recognition and machine learning are getting a lot of air time these days, as people understand them better and develop ways to implement them on many different platforms. That puts the new tools within reach of people working outside strictly academic or business environments. One example is [nate.damen], who rollerblades down the streets of Atlanta wearing a gesture-recognizing, streaming TV over his head.

He’s known as [atltvhead], and the TV he wears has a functional LED screen on the front. The whole setup reminds us a little of Deep Thought. The screen can display various animations, which are controlled through Twitch chat as he streams his journeys around town. He wanted to add a little more interaction to the animations, though, and simplify his user interface, so he set up a gesture-sensing sleeve which can augment the animations based on how he’s moving his arm. He uses an Arduino in the arm sensor as well as a Raspberry Pi in the backpack to tie it all together, and he goes deep in the weeds explaining how to use TensorFlow to recognize the gestures. The video linked below also shows a lot of his training runs for the machine learning system he used.

[nate.damen] didn’t stop at the cheerful TV head either. He also wears a backpack that displays uplifting messages to people as he passes them by on his rollerblades, not wanting to leave out those who don’t get to see him coming. We think this is a great uplifting project, and the amount of work that went into getting the gesture-recognition machine learning algorithm right is impressive on its own. If you’re new to TensorFlow, though, we have featured some projects that can do reliable object recognition using little more than a Raspberry Pi and a camera.

“…and he goes deep in the weeds …”? Or BC is going far afield in search of new euphemisms. You’re upstaging the uh, subject artist… literally, one might proffer.

I read the article again, but still don’t understand the concept. It seems to be random things put together because they are cool, but which really have nothing in common: gesture recognition, Twitch chats, and rollerblading.

It just makes no sense. Also “generate positivity”? Adding something for people who don’t know this guy would be useful, because his TV-head looks somewhat cool, or really just unusual, but spreading positivity with it. Hm… bit of a stretch.

The part on machine learning in the video is extensive, and clear. But the point of the gesture recognition remains mysterious. So he waves his hand in real, everybody sees it, now what?

Only at the end does it become somewhat clear: gestures (skating faster or slower, and moving his arm in a certain way) trigger other pictures on the screen head. The point of this remains mysterious, though.

In short: the video and article focus on the how, but fail completely to address the why.

Ok, reading the article again, you can extract the meaning once you understand the concept.

“He also wears a backpack that displays uplifting messages to people as he passes them by on his rollerblades, not wanting to leave out those who don’t get to see him coming. ” makes sense now.

Still, the article should be clear on its own instead of focusing on stuff like how machine learning has become more accessible.

A simple introductory line would have done this, such as (I am not claiming it to be a greatly phrased line, but it mentions the essentials):

Guy puts a fake TV on his head while rollerblading through a city. The TV head can show emotions via hearts or similar pictures/animations, and his backpack displays messages to greet and uplift people nearby. These affective expressions can be triggered by gestures he makes while he rollerblades, or via Twitch chat.

Not sure I got it completely right, but I will not dig further in the project to clarify this. It should be made obvious by the project author or the article author here.

It wasn’t relevant on The Big Bang Theory. It wasn’t even funny, which it was supposed to be–but not much is.

People never learn…