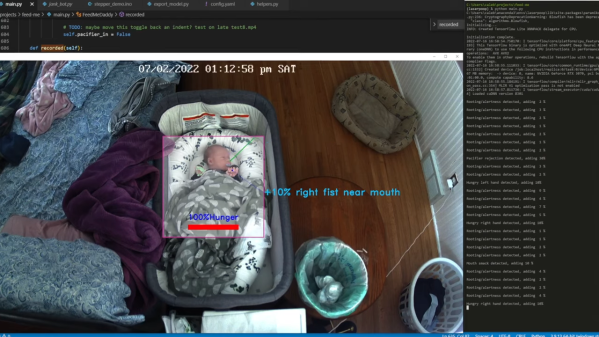

You can do all kinds of wonderful things with cameras and image recognition. However, sometimes spatial data is useful, too. As [madmcu] demonstrates, you can use depth data from a time-of-flight sensor for gesture recognition, as seen in this rock-paper-scissors demo.

If you’re unfamiliar with time-of-flight sensors, they’re easy enough to understand. They measure distance by determining the time it takes photons to travel from one place to another. For example, by shooting out light from the sensor and measuring how long it takes to bounce back, the sensor can determine how far away an object is. Take an array of time-of-flight measurements, and you can get simple spatial data for further analysis.
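To make the arithmetic concrete, here's a minimal sketch of the round-trip calculation. The function names are made up for illustration. In practice, a sensor like this typically does the timing internally and simply hands you distances, so treat this as an explanation of the principle rather than code you'd run against the hardware.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target given the round-trip time of a light pulse.

    The light travels out and back, so the one-way distance is half
    of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def frame_to_distances(round_trip_times_s):
    """Convert a grid (list of rows) of round-trip times into distances."""
    return [[tof_distance_m(t) for t in row] for row in round_trip_times_s]
```

A round trip of two nanoseconds works out to roughly 30 cm, which gives a sense of how fine the timing has to be at hand-gesture distances.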

The build uses an Arduino Uno R4 Minima, paired with a demo board for the VL53L5CX time-of-flight sensor. The software is developed using NanoEdge AI Studio. In a basic sense, the system uses a machine learning model to classify data captured by the time-of-flight sensor into gestures matching rock, paper, or scissors, or nothing at all if no hand is present. If [madmcu]’s tutorial isn’t enough for you, you can take a look at the original version from STMicroelectronics, too.
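If you want a feel for what "classifying depth frames" means, here's a toy sketch. To be clear, this is not what NanoEdge AI Studio generates, and it is not [madmcu]'s code. It's a plain nearest-centroid classifier, swapped in because it's about the simplest model that fits the description. The function names and the idea of flattening each frame into a list of depth values are assumptions for illustration.

```python
from math import dist


def train_centroids(samples):
    """Average the training frames for each label.

    samples: dict mapping a label (e.g. "rock") to a list of frames,
    where each frame is a flat list of depth readings of equal length.
    Returns a dict mapping each label to its mean frame.
    """
    centroids = {}
    for label, frames in samples.items():
        count = len(frames)
        # zip(*frames) walks the frames zone by zone.
        centroids[label] = [sum(zone) / count for zone in zip(*frames)]
    return centroids


def classify(frame, centroids):
    """Return the label whose mean frame is closest (Euclidean) to frame."""
    return min(centroids, key=lambda label: dist(frame, centroids[label]))
```

You'd record a handful of frames per gesture, including an empty "nothing" class, pass them to `train_centroids`, and then feed live frames to `classify`. A real model will cope far better with hands at different distances and angles.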

It takes some training, and it only works in the right lighting conditions, but this is a functional system that can recognize real hand signs and play the game. We’ve seen similar techniques help more advanced robots cheat at this game before, too! What a time to be alive.

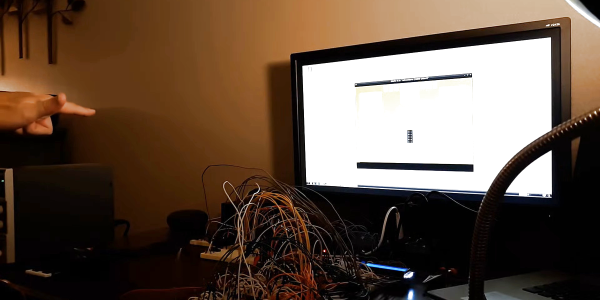

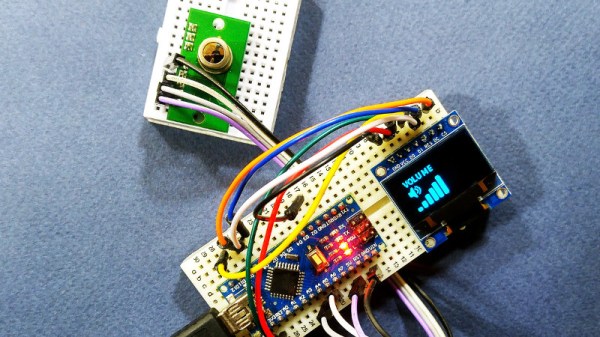

The project uses the TPA81 8-pixel thermopile array, which detects changes in heat levels at 8 adjacent points. An Arduino reads these temperature points over I2C, and a simple thresholding function then detects the movement of the fingers. These movements are used to do a number of things, including turning the volume up or down, as shown in the image alongside.
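The thresholding idea can be sketched in a few lines. This is a guess at the general approach, not the project's actual code. The function names, the 3-degree threshold, the minimum travel, and the assumption that an ambient temperature reading is available are all hypothetical.

```python
def hot_position(temps, ambient, threshold=3.0):
    """Return the mean index of pixels warmer than ambient + threshold.

    temps: the 8 pixel temperatures from one reading.
    Returns None when no pixel clears the threshold (no finger in view).
    """
    hot = [i for i, t in enumerate(temps) if t - ambient > threshold]
    if not hot:
        return None
    return sum(hot) / len(hot)


def detect_swipe(frames, ambient, threshold=3.0, min_travel=3.0):
    """Classify a sequence of 8-pixel frames as "left", "right", or None.

    The warm spot must move at least min_travel pixels between the first
    and last frames in which a finger is seen.
    """
    positions = [hot_position(f, ambient, threshold) for f in frames]
    positions = [p for p in positions if p is not None]
    if len(positions) < 2:
        return None
    travel = positions[-1] - positions[0]
    if travel >= min_travel:
        return "right"
    if travel <= -min_travel:
        return "left"
    return None
```

From there, mapping "right" to volume up and "left" to volume down is one way to get the behavior described, though how the original maps gestures to actions isn't spelled out here.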
