Sipeed have been busy leveraging developments in the RISC-V arena, with an interesting, low-cost module they call the Lichee RV. It is based around the Allwinner D1 SoC, which, for those following Chinese RISC-V processor development, contains a Pingtouge (T-Head) Xuantie C906 core. The module also supports optional NAND flash storage. It plugs into a carrier board much like the Raspberry Pi CM3 form factor, except that it uses a pair of M.2 edge connectors instead of a single SO-DIMM-style edge. The module has USB-C, an SPI LCD interface, and a TF card socket on board, with the remaining interfaces brought out on the edge connectors.

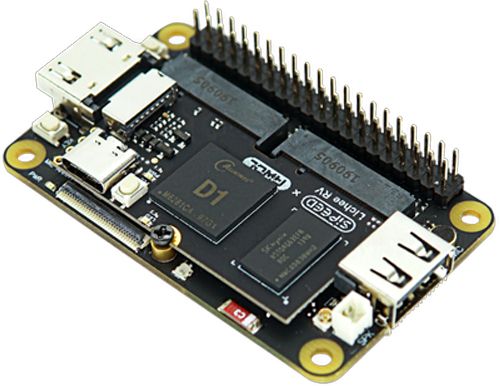

That brings us to the next Sipeed board, the Lichee RV Dock, which is a tiny development board for the module. This breaks out the HDMI, adds USB, a WiFi/Bluetooth module, an audio driver, a microphone array interface and even a 40-way GPIO connector. It’s everything you need to build your own embedded cloud-connected device.

Early adopters beware, though: Linux support is still in the early stages of development, with Debian apparently the most usable distribution at the moment. We’ve not tested one ourselves yet, but it does look quite useful for projects with a small budget that don’t need the power-hungry multi-core performance of a Raspberry Pi or equivalent.

A couple of years ago we saw the Sipeed MAix M1 AI Module hosted on a Pi HAT, as well as a NES emulator running on the Sipeed K210. The future for RISC-V is looking pretty good if you ask us!

Thanks [Maarten] for the tip!

Debian was the first distribution to adopt RISC-V and port a large number of packages.

However, be aware that Allwinner is (still) violating the GPL.

Also, Sipeed does not provide good documentation or steps for generating an image.

Just a heads up.

How are they violating GPL? I’m actually curious, I haven’t heard of this before.

Seriously? Allwinner is a huge GPL violator. https://www.phoronix.com/scan.php?page=news_item&px=Allwinner-GPL-Violate-Proof

Hey, I was just asking. I follow RISC-V development fairly actively, but a lot of those guys are GPL detractors and don’t tend to bring that kind of thing up.

Thanks for the link!

https://linux-sunxi.org/GPL_Violations

Also, anyone who trusts a CPU made under the eyes of the CCP is a fool.

The NSA backdoored damn near everything coming out of the US that had crypto in it, even diverting network equipment shipments. No way the CCP isn’t doing even worse.

> Also, anyone who trusts a CPU made under the eyes of the CCP is a fool.

But you trust systems with an unwanted MINIX inside (Intel’s Management Engine)?

POWER9, Microwatt, and later LibreSoC. For FPGA scrambling techniques, see betrusted.

Whilst I wouldn’t fancy seeing it in critical infrastructure, most of the issues we seem to read about are due to “sloppy” software implementation, and that code does get shared with high-level customers.

One can imagine that if they were putting covert hardware backdoors in, then, given the obvious grounds for suspicion, they would be discovered reasonably quickly, and that might be serious enough to trigger a complete ban on importing such devices. Would manufacturers or CN.gov want to take that risk for “toy” equipment?

I am not saying they wouldn’t give it a try if they thought they would get away with it, but where is the proof?

It only gets found if somebody actually looks. It took years before the backdoor processor in various VIA x86 chips was found, and by then they were (and are) scattered throughout lots of military equipment.

I agree with what you say, and I hope that TPTB take this sort of thing seriously, now.

Frankly, I think it is of strategic importance for the West to maintain its own fabs, and not just for security – one never knows when the supply might get cut off.

Of course backdoors are not in the “toys”, at least not until someone orders at least 100k of them!

;^)

Well, one would bloody well hope that in this day and age there would be a fair amount of checking. Frankly, I wouldn’t be surprised if someone with deep pockets and suspicion did order a tonne of items, as you say, specifically to catch such tricks; one hardware backdoor found and it is game over for that company as far as the West is concerned.

Maybe an automated X-ray box with ML, comparing each unit to a known-good reference?

I don’t doubt supply chain attacks exist either, just that the risk/reward ratio isn’t worth it when it’s about money and an export market.

To be clear, I don’t want that stuff near critical infrastructure, and anyone using it for gov/mil purposes needs their head examined.

Backdoors won’t do too much damage if you don’t give the SBC any network access, i.e. internet, LAN, Wi-Fi, or BT. This particular dock doesn’t have that. If you go out of your way to add it, that’s your problem.

“This breaks out the HDMI, adds USB, a WiFi/Bluetooth module”

Read again.

Um, actually, Fedora was first out of the gate. We used it on Spike to demonstrate that “outside” (non-UCB-built) software worked. Before that, everyone tried to use a painful Yocto build put together by one undergrad at UCB.

“uhm acshually”, Fedora did not have the same number of tested and working packages.

Sipeed is also the provider of the very famous, very useful, and very cheap “Longan Nano” module, featuring a RISC-V MCU from GigaDevice. The MCU is basically an STM32 clone with the ARM core replaced by the RISC-V “Bumblebee” core from Chinese IP company Nuclei.

What about power management and sleep modes? Will it be viable for battery-powered gadgets, or is it yet another RasPi-like shitty STB-style thing?

I’m actually looking for an RPi Zero alternative capable of suspend-to-RAM for my e-ink reader project, but haven’t found one yet :(

All these SBCs suck in this regard.

The PineNote runs on their Quartz64 board. Mine is pretty nice. Looking forward to actual Linux support.

And the price is …?

It varies, but looking at AliExpress right now:

- $5 for the non-Wi-Fi dock
- $8 for the Wi-Fi-capable dock
- another $14 for the Lichee RV itself

$22 all-in for a Wi-Fi-capable SBC equivalent, or $19 if you don’t care about or don’t want Wi-Fi.

Or $22 for the Lichee RV with display. I have two and didn’t realize I should get the dock as well. Oops.

There’s something to be said for skipping HDMI and just having a CLI over DSI.

Comes down to use case.

One thing I do love about the C906 core used here is that the Verilog source code is available (https://github.com/T-head-Semi/openc906). How many production CPUs can you say that about?

One thing I do not like about its implementation in the Allwinner D1 is that the SDRAM has a “Maximum capacity up to 2GiB”, which is probably enough for a single-core CPU with a 32KB I-cache and 32KB D-cache.

I’ll give the Lichee RV board a miss because it ships with 512MiB. I know there are many problems/solutions/applications where limited RAM is not an issue (e.g. most Arduinos only have 2KB of SRAM, and it’s not as if people have run out of things to do with them). But for me, if I’m running a UNIX OS on a machine with limited RAM, I usually end up doing things differently than I normally would. Instead of thinking about how to make something happen as fast as possible, it becomes how to do it while minimising the resources I’m using (which usually ends up being much, much slower).

I’ll probably skip the “VisionFive” (8GiB RAM) board as well, and may give the “Unmatched” (16GiB RAM) board a look see once Mouser has stock that is not continually on backorder. I want to own a RISC-V board.

What use-case would you have for a linux SBC that would use 8GB of RAM? 4GB is usually more than enough. Pi-alikes simply don’t have enough processing power to make reasonable use of large amounts of RAM except for a few niche use cases, such as crunching large data-sets or running high-fidelity simulations.

The Unmatched is more than an order of magnitude more expensive, it’s in a completely separate market segment. That’s not to say you shouldn’t get it, it’s a pretty rad machine.

https://www.tomshardware.com/news/raspberry-pi-4-8gb-tested

> What use-case would you have for a linux SBC that would use 8GB of RAM?

That would be personally identifiable information, which I do not generally post online (easy to post once, very hard to ever remove). You may as well be asking for my first pet’s name and where I was born.

I’ll give you an example where RAM is a problem. An Odroid-XU4 is a beast of a tiny machine for the price: 8 CPU cores (4 big, 4 little) with 2 GiB of RAM. So you would think building GNU Radio (or installing Gentoo Linux) on it would be super fast if you use all eight cores to run 8 compiler/assembler/linker instances in parallel. But in reality, to avoid problems you end up using 4, and when that fails 2, and if that has problems a single CPU core. You can add swap on an external spinning-rust hard disk over USB 3.0, but that adds extra latency. Or you could rapidly burn through the short write lifetime of the eMMC by putting the swap there (ref: “write amplification”), but I generally make the whole SSD read-only once my OS is installed and configured so it never needs to be written to. Or maybe add zswap, but then you are trading disk access times and CPU clock cycles for the RAM you don’t have.
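The experience above boils down to a memory budget: the useful number of parallel build jobs is capped by usable RAM divided by the peak size of one job, not by the core count. A minimal sketch of that arithmetic, assuming illustrative figures (about 300 MiB reserved for the OS and about 600 MiB peak per heavy compile or link) that are not measurements from the comment:

```python
def safe_parallel_jobs(total_ram_mib: int, reserved_mib: int,
                       peak_mib_per_job: int, cores: int) -> int:
    """Pick a `make -j` value that should keep a build out of swap.

    All inputs are caller-supplied estimates: physical RAM, what the OS and
    other services need, and the worst-case resident size of one
    compiler/assembler/linker process for the project being built.
    """
    if peak_mib_per_job <= 0:
        raise ValueError("peak_mib_per_job must be positive")
    usable = max(total_ram_mib - reserved_mib, 0)
    jobs_by_memory = usable // peak_mib_per_job
    # Never exceed the core count, and always allow at least one job.
    return max(1, min(cores, jobs_by_memory))


# Hypothetical XU4-like figures: 2 GiB RAM, 8 cores, ~300 MiB for the OS,
# ~600 MiB peak for one heavy C++ compile or link.
print(f"make -j{safe_parallel_jobs(2048, 300, 600, cores=8)}")  # make -j2
```

GNU make’s `-l` (load-average) limit doesn’t substitute for this, since it throttles on CPU load rather than on memory.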

Compiling stuff is not the purpose of these embedded platforms. How often do you compile GNU Radio? If the answer is “a lot”, why not build cross-arch or in an emulation environment on your desktop? A 2GB limit is restrictive for some embedded uses, but 4-8GB is generous and 16GB is almost always unnecessary.

Stuff does in fact have to be compiled for ARM or RISC-V by *someone*. People maintaining distros such as Fedora or Ubuntu have tens of thousands of packages to (re)compile every time the compiler, libraries, etc. are updated. A HiFive Unleashed (2018) or Raspberry Pi 3 (2016) is already faster per core than full-system emulation in QEMU on a current-gen x86, and a Pi 4, HiFive Unmatched, or the new VisionFive 2 (same speed as the HiFive Unmatched for $55-$85 instead of $665) is faster still.

Sure, you can get a 64-core, 128 GB 5995WX machine: $6500 for the CPU, $1000 for the RAM, maybe $8500 all up. And it will perform worse in QEMU than a farm of sixteen 8 GB Pi 4s costing $1200 and running the arm64 code natively. The same goes for sixteen VisionFive 2s. OK, maybe you’d be better off with a farm of four 16-core 7950X machines costing maybe $1200 each. But you’re still looking at 4x the price of the ARM/RISC-V SBCs to get the same performance WHEN RUNNING ARM/RISC-V CODE.
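To make the price comparison explicit, the sketch below totals each option using only the rough figures from the comment (the $75 per Pi is simply $1200 divided by 16). It compares purchase price only; the performance equivalence is the commenter’s claim and is not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    units: int
    unit_cost_usd: int

    @property
    def total_usd(self) -> int:
        return self.units * self.unit_cost_usd


# Prices are the commenter's rough figures; $75 per Pi is $1200 / 16.
pi_farm = Option("16 x 8 GB Raspberry Pi 4", 16, 75)
x86_farm = Option("4 x 16-core 7950X machines", 4, 1200)
workstation = Option("1 x 64-core 5995WX machine", 1, 8500)

for opt in (pi_farm, x86_farm, workstation):
    ratio = opt.total_usd / pi_farm.total_usd
    print(f"{opt.name}: ${opt.total_usd} ({ratio:.1f}x the Pi farm)")
```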

Bruce’s observations on total system performance are, of course, spot on. There’s an important distinction between building code FOR a platform and building it ON that platform. Cross-compilation is very well understood in our industry: we’ve been doing it for decades.
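The FOR/ON distinction is visible in how a build script picks its compiler. A minimal sketch, assuming the Debian/Ubuntu cross-toolchain naming (`riscv64-linux-gnu-gcc`) and an rv64gc/lp64d baseline; other distros and boards may differ, and the function name is hypothetical:

```python
import platform
from typing import List, Optional


def c_compiler_for(target_arch: str, host_arch: Optional[str] = None) -> List[str]:
    """Return a compiler command prefix for building code FOR target_arch.

    If the host already is the target, build natively ON it with plain gcc;
    otherwise use a cross toolchain. The 'riscv64-linux-gnu-' prefix follows
    the Debian/Ubuntu cross-compiler package naming; other distros may differ.
    """
    host = host_arch or platform.machine()  # e.g. 'x86_64' or 'riscv64'
    if host == target_arch:
        return ["gcc"]
    if target_arch == "riscv64":
        # rv64gc with the lp64d ABI is the common baseline for Linux RISC-V boards.
        return ["riscv64-linux-gnu-gcc", "-march=rv64gc", "-mabi=lp64d"]
    raise ValueError(f"no cross toolchain configured for {target_arch!r}")


print(c_compiler_for("riscv64", host_arch="x86_64") + ["-O2", "-c", "hello.c"])
```

On the board itself `platform.machine()` reports `riscv64`, so the same script falls back to a native `gcc`.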

If I were building an operating system (and I used to…), I probably still wouldn’t be doing it on a native RV64 system, not even the VisionFive 2, though it is probably within reach. Building a full OS at scale is surely still best done with a cross toolchain. That ‘simple’ app may require all of g++, libstdc++, Qt (which has a web browser and a JavaScript JIT inside it) and many other artifacts. They’re large. If you’re the one doing full integration of that Fedora build, you’re probably still doing it on a system like the one described in that second paragraph. Cross-compilation is just an easier task than emulation.

However, the scales have probably tipped for running the executables. It’s likely viable to have that farm of VisionFives do the actual running of the executables for tests, because using scp/NFS to ship each one to a board, run it, and ship the results back is faster than asking QEMU to do it. (I know there’s a team in China doing exactly this with racks of Unmatched and VisionFive R1 boards, but I’m not sure how the Fedora or Debian integration teams are doing it.) It’s just easier to have the OS source tree sitting mostly in RAM on a Big Computer and farming out the runs. Fewer moving parts.
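The “build on the big computer, run on the farm” workflow can be sketched as a planner that assigns test binaries to boards round-robin and emits the copy-and-run commands. This is a hypothetical illustration: the board hostnames and `/tmp/tests` directory are placeholders, and nothing is executed.

```python
from itertools import cycle
from typing import List


def plan_remote_runs(executables: List[str], boards: List[str],
                     remote_dir: str = "/tmp/tests") -> List[List[str]]:
    """Build (but do not run) the commands to ship each test binary to a
    board round-robin and execute it there; its stdout comes back over ssh.

    Board hostnames and remote_dir are placeholders for illustration.
    """
    if not boards:
        raise ValueError("need at least one board")
    # Make sure the target directory exists on every board first.
    commands = [["ssh", b, "mkdir", "-p", remote_dir] for b in boards]
    for exe, board in zip(executables, cycle(boards)):
        remote_path = f"{remote_dir}/{exe.rsplit('/', 1)[-1]}"
        # scp -p preserves the file mode, so the binary stays executable.
        commands.append(["scp", "-p", exe, f"{board}:{remote_path}"])
        commands.append(["ssh", board, remote_path])
    return commands


for cmd in plan_remote_runs(["build/test_a", "build/test_b", "build/test_c"],
                            ["vf2-01", "vf2-02"]):
    print(" ".join(cmd))
```

In practice each command would be run with `subprocess.run(cmd, capture_output=True)`, in parallel across boards; the sketch stays sequential and side-effect free.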

For a normal developer building just a few packages (maybe even big ones), native compilation on a U74-based system like the JH7100 or JH7110 is totally viable, and there’s the convenience of having source, executables, debuggers, and runners all on the same system, which is also the system you’re actually targeting. (Even the worst ptrace implementation is less fiddly than the remote GDB protocol or JTAG at scale.) But I can’t tell if the quibble here is about building an individual package like a normal developer would. Of course everything has to be built by someone. Building natively on the D1 is possible, but its single core and low RAM make it not much fun for a full OS build. The 4 and 8GB multicore systems change that dynamic from ‘possible’ to ‘pleasant’. Of course, that 64-core workstation is still going to slay it in a compilation race.

It’s not hyperbole when Fiddlingjunky agreed that newer generations of systems (both ARM and RISC-V) are game changers. They move from “embedded uses” into more recognizable “real computers”. And chango has a valid point that the linking phase, in particular, tends to eat memory in a way that can throw off the balance of “make -j8 and let the VM system work it out”. Newer linkers like LLD, Gold, and Mold (seems like there’s another…) are better at handling the additional requirements of RISC-V relaxation, but that’s been a race for a long time. Lots of large programs (Chrome, Earth, Firefox, Qt) don’t even try to build natively on 32-bit hosts any longer, relying instead on cross development, precisely because the address space is too limited, so that 4GB limit IS a problem for building some packages.
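One common answer to the “make -j8 versus memory-hungry links” problem is to keep full parallelism for compiles but cap concurrent links. Ninja’s `pool` with a `depth`, and LLVM’s `LLVM_PARALLEL_LINK_JOBS` CMake option, do this for real. The sketch below only simulates the idea with threads and a semaphore; sleeps stand in for compiling and linking, and the limit of 2 links is an assumption.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_JOBS = 8        # overall parallelism, roughly one per core
MAX_LINK_JOBS = 2   # assumption: links are the memory-hungry step

link_slots = threading.BoundedSemaphore(MAX_LINK_JOBS)
stats_lock = threading.Lock()
active_links = 0
peak_links = 0


def build_target(name: str) -> str:
    """Simulate compiling then linking one target; sleeps stand in for work."""
    global active_links, peak_links
    time.sleep(0.01)  # "compile": runs at full -j parallelism
    with link_slots:  # "link": at most MAX_LINK_JOBS at once
        with stats_lock:
            active_links += 1
            peak_links = max(peak_links, active_links)
        time.sleep(0.02)
        with stats_lock:
            active_links -= 1
    return name


def build_all(targets):
    with ThreadPoolExecutor(max_workers=MAX_JOBS) as pool:
        return list(pool.map(build_target, targets))


done = build_all([f"prog{i}" for i in range(16)])
print(f"built {len(done)} targets, peak concurrent links: {peak_links}")
```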

I don’t at all understand the equivalence of asking “what are you doing that needs more RAM?” and “what is your pet’s middle name?” That’s just being evasive. You can say you’re compiling big things or doing big scientific workloads or building NNs or whatever without posting your social security number. The points by Junky and Holt that RISC-V now has plenty of head space above D1 are solid.

There are lots of available tools and lots of choices, with more on the way. It’s important to use the right tools for the task and to reevaluate that often. It’s a changing time and our expectations of SBCs and RISC-V in general can evolve.

I’m off to install my Star64 now…

In theory cross-compilation works, but in practice it only works for simple packages or for packages where it is exercised a lot and regressions found quickly: binutils, gcc, llvm, glibc, newlib, Linux kernel. For many others it is much more reliable to do a native build, whether on real hardware or emulated.

Depends on the package and the commitment.

Good thing you have that choice now.

Don’t forget it’s still early days. The Raspberry Pi didn’t get where it is today on day one, and neither did the Arduino. But much like those two platforms, the Lichee makes some interesting new tech available to us tinkerers who otherwise might not have access to it. As far as I know, this is the first cheap, simple and easy RISC-V SBC.

DDR3 memory maxes out at 4 Gbit per die, so 512MB is as much memory as you can get in a single chip. In theory, they could try a clamshell layout with chips on the front and back to get up to 1GB.
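The capacity figures follow from the die density: DRAM densities are binary, so a 4 Gbit die holds 4 × 2^30 bits, which is 2^29 bytes or 512 MiB. A small worked version of that arithmetic, taking the comment’s 4 Gbit-per-die ceiling as given:

```python
def dram_capacity_mib(die_density_gbit: int, dies: int = 1) -> int:
    """Capacity in MiB of `dies` DRAM dies, each `die_density_gbit` gigabits.

    DRAM densities are binary: a 4 Gbit die holds 4 * 2**30 bits.
    """
    total_bits = die_density_gbit * 2**30 * dies
    return total_bits // 8 // 2**20  # bits -> bytes -> MiB


print(dram_capacity_mib(4))          # one 4 Gbit DDR3 die: 512
print(dram_capacity_mib(4, dies=2))  # clamshell, front and back: 1024
```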

There might be someone out there with stacked die packages, but good luck these days.

https://www.samsung.com/semiconductor/dram/ddr3/ (see the chip selector)

I have just received my Lichee RV and I have to say I am very impressed. For about $20 it is a genuine Linux RISC-V system. It is certainly not meant to be a general-purpose desktop machine, but it gives developers a flavour of what is coming. It feels slow and reminds me a little of the original Raspberry Pi Model B: loads of potential. Personally, I am very pleased to be able to practice RISC-V assembly without the need for cross-compilers or VMs. I haven’t received a Wi-Fi docking station yet, so other functionality is somewhat limited.

The Allwinner GPL violations mentioned above date from about six years ago. Is this still an issue for people?

I wonder how complex/problematic the alignment of the two M.2 sockets is during production of a dock. What might the argument be not to use something like a SIM stick socket? Are two M.2 slots smaller than a SIM socket?

A SIM socket only has 6 pins. How are you going to fit this card into a SIM card socket?

SIMM.

And, from what I’ve seen, it’s somewhat easier to get M.2 socket hardware than various sizes of SIMM sockets at the moment.

It is an exercise in working out mechanical tolerances. It doesn’t seem to be an actual issue, since they are actually shipping these cards.

I think this person meant SIMM (single in-line memory module), the old memory socket. Not the SIM card socket.

Hi, using two M.2 slots is fine. We have already mass-produced the dock board with no problems, and we have released the footprint, so you can lay out your own PCB easily. Also, the two M.2 slots only take up 45mm, much smaller than a SIMM socket.

The question for me is more why you went with two M.2 connectors instead of a SO-DIMM. I guess you just didn’t need that pin count.

I’ve worked with this family of products. They suffer from a race to the bottom.

They have a noncompliant USB interface, so quality cables on a real USB 3 power supply result in the board not powering up.

They repurposed top bits in the page table entry (PTE) that the spec had already set aside, so anyone implementing virtual memory on these D1 chips has to special-case them.
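To see why the PTE bits need special-casing, compare how a leaf page-table entry is built for ordinary RAM under the ratified Svpbmt extension versus the C906’s vendor memory attributes. This is a minimal sketch, not kernel code: the Sv39 layout and the Svpbmt field (bits 61-62) are from the RISC-V privileged spec, while the T-Head masks in bits 59-63 mirror the Linux kernel’s `_PAGE_*_THEAD` macros as I recall them and should be checked before relying on them. The function names are hypothetical.

```python
# Sv39 leaf PTE layout from the RISC-V privileged spec: flags in bits 0-7,
# physical page number (PPN) in bits 10-53.
PTE_V, PTE_R, PTE_W = 1 << 0, 1 << 1, 1 << 2
PTE_A, PTE_D = 1 << 6, 1 << 7
PPN_SHIFT = 10

# Ratified Svpbmt: 2-bit PBMT field in bits 61-62 (0 = PMA, 1 = NC, 2 = IO).
SVPBMT_IO = 2 << 61

# T-Head C906 memory attributes in bits 59-63. These masks mirror the Linux
# kernel's _PAGE_*_THEAD macros as I recall them; verify before relying on them.
THEAD_SO, THEAD_C, THEAD_B, THEAD_SH = 1 << 63, 1 << 62, 1 << 61, 1 << 60
THEAD_PMA = THEAD_C | THEAD_B | THEAD_SH  # normal cacheable memory
THEAD_IO = THEAD_SO | THEAD_SH            # strongly ordered device memory


def leaf_pte(phys_addr: int, kind: str, thead: bool) -> int:
    """Encode a readable/writable leaf PTE for RAM or MMIO."""
    pte = ((phys_addr >> 12) << PPN_SHIFT) | PTE_V | PTE_R | PTE_W | PTE_A | PTE_D
    if kind == "io":
        pte |= THEAD_IO if thead else SVPBMT_IO
    elif kind == "ram":
        # Svpbmt leaves PBMT = 0 for normal memory; T-Head wants explicit bits.
        if thead:
            pte |= THEAD_PMA
    else:
        raise ValueError(f"unknown kind {kind!r}")
    return pte


def svpbmt_field(pte: int) -> int:
    """How a standard Svpbmt-aware page-table walker would read bits 61-62."""
    return (pte >> 61) & 0b11


ram_std = leaf_pte(0x8020_0000, "ram", thead=False)
ram_thead = leaf_pte(0x8020_0000, "ram", thead=True)
print(svpbmt_field(ram_std))    # 0: PMA, ordinary memory
print(svpbmt_field(ram_thead))  # 3: a reserved PBMT encoding under Svpbmt
```

The same “normal RAM” mapping produces a reserved encoding when read with standard Svpbmt rules, which is why kernels carry a separate code path for these chips.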

They have vector support, but instead of waiting for the ratified version, they implemented the 0.7.1 draft, which software like GCC and QEMU either never accepted or is already removing. The Allwinner branches of the tools that added it are a snapshot in time and already unmaintained.

As mentioned by others, Allwinner has a long history of GPL compliance issues and their developer tools, even translated, don’t do a dev many favors.

All this creates a lot of friction in getting the D1 upstreamed.

These are all things that can be worked around, the blame is shared by Alibaba, Allwinner, and Sipeed, and maybe they are acceptable for a $19 Linux computer. Maybe if you lower your expectations, it’s fine. I personally burned many hours “discovering” that an Apple power supply and cable won’t power these. If you connect that same power configuration to a USB hub and use an additional USB-A to USB-C cable, for example, the board boots. Raspberry Pi publicly took a beating for this a few years ago.

These boards aren’t total disasters; they just have several sharp exposed edges when handling.

If they had waited for the final Vector spec and the PMA spec, we wouldn’t have these boards at all. The SoC has been in mass production for a year and was designed 2.5 years ago. Chips that conform to the final specs ratified in November probably won’t be out until early 2023, and boards until late 2023.

If people want something conforming to the final spec then the existence of this SoC and board doesn’t hurt them in any way. They will have to wait in any case. If people want a pretty darn good chip now (or the Nezha 8 months ago) then here is one.

It’s a shame that they made the same USB-C power mistake that many others, such as Raspberry Pi, have also made. Yes, a quality $30 Apple iPhone/iPad USB power supply won’t work, but I’d never have considered “wasting” one on a board like this anyway, and an $8.50 official Pi 4 power adapter works fine.

Ha. We’ve tangoed on this before. :-)

Agreed: if your goal is developing code you need to support across products, rather than being early, you lose nothing by ignoring this generation. (Right now there is no real “across”, as the C906/C910 are the only game in town.) If you’re in total control of the hardware, OS, and tool stack, maybe you can keep a fleet of these running for a while.

It’s probably an OK place to get a head start, but most casual users should be prepared for miserable tool support. Again, that’ll improve and settle as 1.0 products reach the marketplace.

It’s likely that the Pi supply makes the same design decision as the Pi itself: not actually doing Power Delivery negotiation, ignoring the CC1/CC2 pull-ups, and just dumping 5V/3A onto the rails in an unnegotiated state. This is also against the USB spec, IIRC, but it’s not uncommon for dumb supplies to do this. I think sometime around USB 2 or 3 the spec changed the maximum current in an unenumerated state from 0.1A to 0.9A.
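For readers unfamiliar with the CC pins: a spec-following Type-C source only turns on VBUS after it sees a sink’s Rd pull-down on a CC pin, whereas a USB-A port behind a legacy A-to-C cable supplies 5 V unconditionally. The thread never says exactly what is wrong with these boards, so the “no pull-downs” case below is an assumption. The sketch models only the source’s attach decision from the Type-C spec, not Power Delivery negotiation.

```python
from enum import Enum


class CC(Enum):
    OPEN = "open"  # nothing connected to this CC pin
    RD = "Rd"      # sink pull-down, 5.1 kOhm to ground
    RA = "Ra"      # powered-cable/accessory termination, roughly 1 kOhm


def source_decision(cc1: CC, cc2: CC) -> str:
    """What a spec-following Type-C source concludes from its two CC pins."""
    pins = {cc1, cc2}
    if pins == {CC.RD}:
        return "debug accessory"
    if pins == {CC.RA}:
        return "audio adapter accessory"
    if CC.RD in pins:
        return "sink attached: enable VBUS"
    return "no sink: keep VBUS off"


def charger_through_c_to_c(sink_cc: CC, e_marked: bool = False) -> str:
    """A C-to-C cable carries one CC wire end to end; the charger's other CC
    pin sees only the cable's own Ra, and only if the cable is e-marked."""
    return source_decision(sink_cc, CC.RA if e_marked else CC.OPEN)


print(charger_through_c_to_c(CC.RD))                 # board with a proper Rd
print(charger_through_c_to_c(CC.OPEN))               # hypothetical board with no Rd
print(charger_through_c_to_c(CC.RD, e_marked=True))  # still fine with an e-marker
```

A board that presents no Rd would therefore stay dark on a smart charger yet boot from a hub through an A-to-C cable, which would be consistent with the workaround described in the thread.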

It’s not so much a matter of wasting a new charger as of unplugging the power supply from the computer you use for development and plugging it into the board, or even trying to power the board from a hub attached to your MacBook or iMac. Intuition tells you that your 67W power supply SHOULD power up a 10W (?) board.

For under $20, it’s easier to overlook the power issues, the finger flip to both the RISC-V and USB specs, the non-standard boot sequence, the chip maker’s recurring GPL violations, living on a kernel and U-Boot fork for a while (maybe forever), and so on. It’ll provide experience to some, and you can certainly blink a lot of lights with a single GHz core, so tinkerers may find it good value. It just seems they cut an awful lot of corners to reach the finish line, even with asterisks beside their title.

As for my Nezha, after weeks of working with engineering, we concluded that mine was not only missing pieces but also defective, so my experience with it is pretty bitter.