Discount (or even grey market) electronics can be economical ways to get a job done, but one usually pays in other ways. [Majenko] ran into this when a need to capture some HDMI video output ended up with rather less than was expected.

Faced with two similar choices of discount HDMI capture device, [Majenko] opted for the fancier-looking USB 3.0 version over the cheaper USB 2.0 version. The reasoning was that the higher bandwidth available to a USB 3.0 device would avoid the kind of compression needed to shove high-resolution HDMI video over a more limited USB 2.0 connection.
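To put rough numbers on that reasoning: uncompressed YUY2 video, a format commonly offered by UVC capture devices, averages 16 bits per pixel. The minimal Python sketch below (an illustration, not [Majenko]'s code; the function names are hypothetical) compares that raw bitrate with the nominal USB 2.0 and USB 3.0 signaling rates. It ignores protocol and line-code overhead, so the real headroom is smaller than shown.

```python
# Nominal USB signaling rates in bits per second (no protocol or line-code overhead).
USB_RATES = {
    "USB 2.0 High Speed": 480_000_000,
    "USB 3.0 SuperSpeed": 5_000_000_000,
}

YUY2_BITS_PER_PIXEL = 16  # YUY2 (4:2:2) averages two bytes per pixel


def yuy2_bitrate(width: int, height: int, fps: int) -> int:
    """Raw bitrate in bits/s for uncompressed YUY2 video."""
    return width * height * YUY2_BITS_PER_PIXEL * fps


def links_that_fit(width: int, height: int, fps: int) -> list[str]:
    """Names of the links whose nominal rate exceeds the raw video bitrate."""
    needed = yuy2_bitrate(width, height, fps)
    return [name for name, rate in USB_RATES.items() if rate > needed]


if __name__ == "__main__":
    for w, h, fps in [(640, 480, 30), (1280, 720, 60), (1920, 1080, 60)]:
        mbps = yuy2_bitrate(w, h, fps) / 1e6
        print(f"{w}x{h}@{fps}: {mbps:.0f} Mbit/s, fits on: {links_that_fit(w, h, fps)}")
```

Uncompressed 1080p60 works out to roughly 2 Gbit/s, several times what USB 2.0 can carry, while SD resolutions still fit. That is why USB 2.0 capture devices tend to send SD uncompressed but fall back to MJPEG at HD resolutions.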

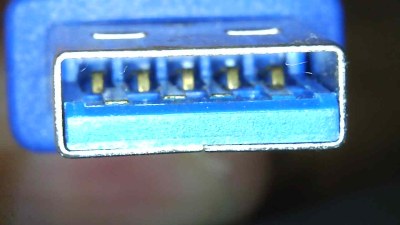

The device worked fine, but [Majenko] quickly noticed compression artifacts, and interrogating the “USB 3.0” device with lsusb -t revealed it was not running at the expected speeds. A peek at the connector itself revealed a sad truth: the device wasn’t USB 3.0 at all — it didn’t even have the right number of pins!
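`lsusb -t` ends each device line with the link's negotiated speed in Mbit/s, for example 480M for USB 2.0 High Speed or 5000M for USB 3.0 SuperSpeed. The minimal Python sketch below pulls that figure out and names the tier. The sample text is hand-written for illustration, the tier labels and function name are my own, and the exact output layout varies between usbutils versions, so treat the parsing as an assumption rather than a stable interface.

```python
import re

# Speed tiers keyed by the Mbit/s figure that lsusb -t appends to each line.
TIERS = {
    "1.5": "Low Speed (USB 1.x)",
    "12": "Full Speed (USB 1.x)",
    "480": "High Speed (USB 2.0)",
    "5000": "SuperSpeed (5 Gbit/s)",
    "10000": "SuperSpeedPlus (10 Gbit/s)",
    "20000": "SuperSpeedPlus (20 Gbit/s)",
}

# Matches a trailing ", <number>M" at the end of a line.
SPEED_RE = re.compile(r",\s*(\d+(?:\.\d+)?)M\s*$")


def link_speeds(lsusb_tree: str) -> list[tuple[str, str]]:
    """Return (line, tier) for every line of `lsusb -t` output that ends in a speed."""
    results = []
    for line in lsusb_tree.splitlines():
        match = SPEED_RE.search(line)
        if match:
            speed = match.group(1)
            results.append((line.strip(), TIERS.get(speed, f"unknown ({speed}M)")))
    return results


# Illustrative, hand-written sample; real output differs between usbutils versions.
SAMPLE = """\
/:  Bus 02.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/4p, 5000M
/:  Bus 01.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/12p, 480M
    |__ Port 3: Dev 4, If 0, Class=Video, Driver=uvcvideo, 480M
"""

if __name__ == "__main__":
    for line, tier in link_speeds(SAMPLE):
        print(f"{tier:28} {line}")
```

On a real Linux machine, you could feed it the output of `subprocess.run(["lsusb", "-t"], capture_output=True, text=True).stdout`. A capture device that shows up under a 480M branch is running at USB 2.0 speed, whatever its label says.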

A USB 3.0 connection adds five SuperSpeed conductors (two differential pairs plus a ground drain), and the connectors are usually blue. Backward compatibility comes from also including the four original USB 2.0 conductors, for nine contacts in total, as shown in the image here. The connector on [Majenko]’s “USB 3.0” HDMI capture device clearly shows it is not USB 3.0; it’s just colored blue.
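Counting contacts is the quickest check. Here is a minimal Python sketch that encodes the USB 3.0 Standard-A pinout as a lookup table and gives a rough verdict based on which contacts a plug actually has. The function and its verdict strings are illustrative, not taken from any tool.

```python
# USB 3.0 Standard-A contacts (signal names as in the USB 3.0 specification).
# Pins 1-4 are the original USB 2.0 contacts; pins 5-9 are the SuperSpeed additions.
STANDARD_A_PINS = {
    1: "VBUS",
    2: "D-",
    3: "D+",
    4: "GND",
    5: "StdA_SSRX-",
    6: "StdA_SSRX+",
    7: "GND_DRAIN",
    8: "StdA_SSTX-",
    9: "StdA_SSTX+",
}
USB2_PINS = {1, 2, 3, 4}
SUPERSPEED_PINS = set(STANDARD_A_PINS) - USB2_PINS


def classify_plug(contacts_present: set[int]) -> str:
    """Rough verdict from which Standard-A contacts are physically present.

    Presence is not proof of wiring: a nine-contact plug can still be
    connected to a USB 2.0 chip, so this only catches the blatant fakes.
    """
    if (USB2_PINS | SUPERSPEED_PINS) <= contacts_present:
        return "has USB 3.x contacts"
    if USB2_PINS <= contacts_present:
        return "USB 2.0 only, whatever color the plastic is"
    return "incomplete or non-standard"
```

A blue plug with only contacts 1 to 4, like the one on [Majenko]’s device, lands in the middle branch.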

Most of us are willing to deal with the occasional glitch or dud in exchange for low prices, but when something isn’t (and never could be) what it is sold as, that’s something else. [Majenko] certainly knows that as well as anyone, having picked apart a defective power bank module to uncover a pretty serious flaw.

Somewhere in PRC:

Employee: “Boss if we use blue plastic, we can charge more….’cause…”

Boss: “Blue it is”

Haha. Reminds me of the “Microchip Response to PICkit 3 Review from EEVblog #39” video on YouTube. The LED brightness issue.

I recently bought a 4-port hub advertised as USB 3. It had blue plastic, but tested as only USB 2. It was priced lower than other USB 3 hubs but way higher than typical USB 2 ones, so price can be, but isn’t always, an obvious way to tell. If you’d bought from China and waited 12 weeks, a real USB 3 hub was cheaper.

It should work.. Red cars are faster than other coloured cars..

Only in England

No. Green cars are faster in England. Red cars are fatter in Italy.

Faster!

Autocorrect is as fast as it is stupid.

Wait, it’s the red ones that go faster, innit?

I once cut apart a USB 2.0 cable and found it was just four conductors. No shielded twisted pair for the data pair.

Yet the wire was clearly marked USB 2.0. I bet the UL rating also means enhanced flammability.

USB 2 requires no shielding and no twisting of the wires. It needs an impedance match to 90 ohms.

You are wrong. From the USB 2.0 specification:

“High-/full-speed cable consists of a signaling twisted pair, VBUS, GND, and an overall shield. High-/full-speed cable must be marked to indicate suitability for USB usage (see Section 6.6.2). High-/full-speed cable may be used with either low-speed, full-speed, or high-speed devices. When high-/full-speed cable is used with low-speed devices, the cable must meet all low-speed requirements.

Low-speed recommends, but does not require the use of a cable with twisted signaling conductors”

Read the last sentence of what you copy-pasted.

“Does not require the use of a cable with twisted signaling conductors”.

*High Speed* USB needs twisted conductors.

USB 2.0 also includes the low speed standard.

Yeah, pretty slimy to call it a USB 2 cable if it’s not a HIGH SPEED USB 2.0 cable. But technically true.

You are technically correct, but basically everyone in the world who isn’t a marketing department associated with the USB-IF refers to the different speeds by the version they were introduced with.

As far as normal human beings are concerned, USB 1.x means 1.5 or 12 Mbit/s, USB 2.0 means 480 Mbit/s, 3.0 means 5 Gbit/s, etc.

Anyone who actually uses the latest version number to refer to an older speed tier is trying to sell you some garbage. None of us should encourage, support, or even acknowledge the USB-IF’s absurd naming scheme.

I have seen plenty of those handed out as free USB cables for printers, after the whole regulation about not including the cable for eco reasons came in.

Eco reasons? Well, it used to be because the cable cost a lot more money, back when it was DB-25 to Centronics. That’s why it was only included “for free” by some companies (i.e., rolled into the price). Then, as printers transitioned to USB, perhaps it was claimed to be for eco-friendly reasons, but I’m sure they weren’t complaining about an upsold, overpriced add-on.

I have that very same capture device and I’ve always doubted it to be true USB 3. It was also only around €1 more than the ‘2.0’ version, so that was already pretty suspicious to me. But yes, it’s still a bit scummy to advertise it as 3.0 when it clearly is not.

“a bit scummy”? If you consider outright fraud “a bit scummy,” I hate to think of what your threshold is for “objectionable.”

I thought Bluetooth was a wireless thing…

BLUE again!

I smell a pattern!

Now we should create a disc drive with blue lasers! We could tell everyone it’s faster and holds more storage due to the smaller wavelength

Many, many, many USB devices I have seen have the plug, but not the speed, of the USB devices they pretend to be.

I have quite a few labeled ‘USB 3.0’ that actually run at USB 1.1 speeds. And it’s a rare USB 3 device that actually runs faster than USB 2.

It’s the same with USB-C. Just because something has a physical USB-C port is no indication that it will run even at USB 2 speed…

This is so common that when you get something that does run at the speed it is supposed to, it is a surprise, e.g. when I got a Samsung T7 and it ran really, really fast.

It’s very common for USB 3.x flash drives to have decently fast READ speeds but abysmal WRITE speeds. They’ll have a pretty big fast cache for writing and it works well if you’re only writing a few small files at a time.

But throw any file at it that’s larger than the cache, or a lot of small files, and the cache fills up, then it *slams down to slow*. I have some drives I call USB “2.5” that have good read speeds but write speeds slower than what good USB 2.0 drives can do.

Some USB 3.0 drives just chug along at the slow speed once their cache fills. Others will pump up and down, repeatedly filling and flushing the cache to the storage chips. They’re usually even slower than the ones that just piddle along because they drop to zero to fill the cache then dump it and repeat.
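The cache behavior described above can be modeled with a couple of lines of arithmetic. This is a minimal Python sketch, assuming a single file, a cache that starts empty, and a drive that simply drops to its sustained rate once the cache is full (the first kind of drive described above, not the ones that cycle the cache). The function names are hypothetical and the drive figures in the example are made up, not measurements of any real product.

```python
def write_time_s(file_mb: float, cache_mb: float, cache_mb_s: float, sustained_mb_s: float) -> float:
    """Seconds to write one file to a drive with a fast cache and a slower sustained rate.

    Models only the "fill the cache, then slam down to slow" behavior; it does not
    model drives that alternate between filling and flushing the cache.
    """
    fast_part = min(file_mb, cache_mb)          # written at cache speed
    slow_part = max(file_mb - cache_mb, 0.0)    # remainder written at sustained speed
    return fast_part / cache_mb_s + slow_part / sustained_mb_s


def effective_mb_s(file_mb: float, cache_mb: float, cache_mb_s: float, sustained_mb_s: float) -> float:
    """Average write speed over the whole file."""
    return file_mb / write_time_s(file_mb, cache_mb, cache_mb_s, sustained_mb_s)


if __name__ == "__main__":
    # Hypothetical drive: 4 GB cache written at 100 MB/s, 10 MB/s once it is full.
    for size_mb in (500, 4_000, 16_000):
        rate = effective_mb_s(size_mb, 4_000, 100, 10)
        print(f"{size_mb:>6} MB file: {rate:.1f} MB/s average")
```

With those made-up numbers, files that fit in the cache average the full 100 MB/s, while a 16 GB file averages under 13 MB/s, because most of it is written at the sustained rate.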

Would have been nice if the people who manage the USB specifications had insisted that the only way to be allowed approval to mark and market a storage device as USB 3.x is if it had a minimum sustained write speed faster than the maximum possible by USB 2.0. Then Sandisk, Kingston, PNY and others couldn’t get away with all the crap USB “2.5” flash drives they sell.

The only USB 3.0 flash drive I have that deserves being called it is a higher-end SanDisk that can maintain a 100+ megabyte-per-second sustained write speed.

Of all the rest, the best are from Adata. They can keep up a 17 megabyte-per-second write speed from 0% to 100% full. ’Course, the entire lot of four I bought failed one after another. They had slow write speeds around 7 megabytes per second, but Adata has a lifetime replacement warranty on their DRAM and flash memory products, so I got replacements that are good. Could be they got shafted with a load of counterfeit chips.

PNY and others have also made some horrible USB 2.0 drives that could be called “1.5”. I have a PNY 8 gig that can barely write at 1 megabyte per second. It takes forever to put anything onto it.

If I was in charge of any company manufacturing USB storage devices, I’d be ashamed and embarrassed to send anything out the door that has worse write performance than the best of whatever version came before it. I wouldn’t sell anything that couldn’t at least perform at 50% of the maximum possible write speed from 0% to 100% full. My company would get sales by having a reputation *for not selling any crappy products*.

> Would have been nice if the people who manage the USB specifications had insisted that the only way to be allowed approval to mark and market a storage device as USB 3.x is if it had a minimum sustained write speed faster than the maximum possible by USB 2.0.

Who says they got approval from anyone? They most likely just slapped a USB logo on the product without asking… and if the USB group goes chasing them, they just vaporise like so many dodgy manufacturers.

> The only USB 3.0 flash drive I have that deserves being called it is a higher-end SanDisk that can maintain a 100+ megabyte-per-second sustained write speed.

I have some metal shelled Samsung sticks that are decent also. But yes it’s amazingly rare!

“I have some metal shelled Samsung sticks that are decent also.”

I’ve owned two of the metal shelled Samsungs and they both failed after not much use. They weren’t counterfeits. Who takes the chance with relatively expensive 256 and 512 GB drives? The sweet spot price-wise is 64 GB, and I don’t even trust that much data to those, which I’ve also had fail far too soon. On the consistent failure of even major name brand drives to maintain 3.x transfer rates, I agree 100%.

That’s pretty bad luck, but in my experience (about half a dozen so far) they’ve been solid.

I agree though that that’s a lot of data to trust to a USB stick – above about 64 GB I usually prefer to get a proper external SSD rather than a USB stick.

Really, calling yourself USB 3 should not have anything directly to do with write speed. For most portable storage media you don’t care all that much if it’s slow to fill, but you really, really don’t want to be waiting forever for a read to finish; slow writes are a trade-off you should, in theory, be able to accept knowingly. So as long as they give you a minimum write speed in the specs, so you actually know what you are getting, it is fine to call yourself USB (x), at least as long as in some mode of operation you would actually saturate USB (x-1) and run slower on it.

Or even using some protocol feature that requires USB x+1. There are more to the USB specs than just speed!

“Really calling yourself USB 3 should not have anything directly to do with write speed”

It sure does if that’s the spec! And I don’t like waiting for writes any more than I do for reads.

Which is why I was so surprised at the T7. I can write hundreds of gigabytes to it at about 450 MB/s on my 5-year-old PC’s USB 3.0 port.
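The ceiling the T7 is running up against can be worked out from the line rate and the line code. A minimal Python sketch, assuming only line-code overhead (8b/10b for 5 Gbit/s, 128b/132b for 10 Gbit/s) and ignoring packet and protocol overhead, so real-world figures land below these ceilings. For USB 2.0 the raw 480 Mbit/s is used as a loose upper bound. The table and function name are illustrative.

```python
from fractions import Fraction

# Line rate (Mbit/s) and line-code efficiency for each signaling mode.
# USB 2.0 is given at its raw rate; bit stuffing and protocol overhead
# make the practical figure noticeably lower.
LINKS = {
    "USB 2.0 High Speed": (480, Fraction(1, 1)),
    "USB 3.x Gen 1 (5 Gbit/s, 8b/10b)": (5_000, Fraction(8, 10)),
    "USB 3.x Gen 2 (10 Gbit/s, 128b/132b)": (10_000, Fraction(128, 132)),
}


def ceiling_mb_s(line_mbit_s: int, efficiency: Fraction) -> float:
    """Upper bound on payload throughput in MB/s, before packet/protocol overhead."""
    return float(line_mbit_s * efficiency / 8)


if __name__ == "__main__":
    for name, (rate, eff) in LINKS.items():
        print(f"{name}: {ceiling_mb_s(rate, eff):.0f} MB/s ceiling")
```

That gives 60 MB/s for USB 2.0, 500 MB/s for a 5 Gbit/s link and roughly 1212 MB/s for 10 Gbit/s. So ~450 MB/s is close to the practical limit of a USB 3.0 port, and any drive whose sustained writes stay under about 60 MB/s could, in principle, have done the same over USB 2.0.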

Most of the USB 3 cables I’ve got that came with something (e.g. a Dell UltraSharp monitor, for its integrated USB hub) are black. The USB 3 sockets on my notebook and desktop are also black. I guess I only have blue ones on RPi4s.

Point is that blue USB 3 connectors haven’t been all that common for some time.

None of the USB 3 ports on my Dell laptop are blue, nor are the USB 2 ports white. They’re all black.

Another common ‘fake USB 3’ use of a blue connector is the Mini PCIe to USB 2.0 adapter for putting USB storage into unused slots in laptops. All it does is connect to the USB 2.0 lines usually present on the internal connector. I put one into the useless cache card slot in an mpc Transport T2500 (rebranded Samsung X65) laptop to install a tiny 128 gig USB 2.0 drive.

The mpc laptop was quite nice, ran Windows 10 decently with a dual core Intel and 500 gig HD (before it got too bloated to work well with just 4 gig RAM) but for some &#%*^# reason Samsung chose to equip them with a CardBus slot – in 2008. CardBus in 2008!!!! IF they had put an ExpressCard slot in it like it should’ve had I would have kept it much longer because I could’ve popped in a dual port USB 3.0 card, like I had on the older, slower, laptop the mpc replaced.

All Micron, micronPC, and mpc laptops were rebranded Samsungs. Nice high end laptops from the mid 1990’s through 2008 and every last one could use the same power adapter because Samsung made the right decision to NEVER change the power connector or the input voltage of 19V. Similar to how all non-PIP NTSC Samsung televisions from 1995 on use the same remote codes. (Dunno about their ATSC televisions, smart or otherwise.) They’re the Ryobi ONE+ of consumer electronics.

You often get what you pay for with these cheap capture devices. Under US$50, it’s likely to be the same chipset, USB 2.0 only, and stuck with poor MJPEG compression on anything higher than 480p. Good if you just want a basic HDMI capture source for something like a homebrew KVM, but not great for quality.

EposVox on YouTube has a long track record of messing with these and other capture cards on the market. I’ve also had my fair share of cheaper ones that ‘looked’ like unique cards and possibly USB 3.0, but they usually all end up being the exact same chipset internally, just thrown into a different box, sometimes with a slightly modified PCB.

I’m still hoping that the Chinese gadget-manufacturers will soon transition to a real USB3.0-capable chipset and we’ll start to see more capable capture-devices. I don’t have particularly high requirements, I just want 1080p60 capture at a cheap price, but better image-quality than those terrible USB2.0 MJPEG-producing ones do.

This really would be nice and solve a LOT of headaches at least in the content creator space due to the market being flooded with these garbage tier cheap capture devices.

Most are in the same boat as you and don’t really need anything fancy. 1080p60 using an uncompressed codec like YUY2, reasonably low latency. No fancy software needed, there’s already plenty of tools out there to work with run-of-the-mill UVC based capture devices.

Putting a blue USB-connector on devices and claiming that it’s a USB3.0-device is a pretty common tactic with the cheap Chinese gadgets and it’s been going on for years.

Unlike the device in the article, which has a blue, four-pin USB 2.0 connector, my HDMI capture device has a real USB 3.0 connector; it’s still wired up for USB 2.0 only, and the chipset inside obviously can’t do anything better. At least I knew it was really a USB 2.0 device when I bought it, so I knew what I was getting.

I remember that back in the mid 90s the first USB devices and cords were translucent, for reasons unknown to me. Remember the Iomega Zip drive?

Late 90s, and _everything_ was bloody translucent… remember Apple iMac/iBook?

I’ve got the solution! Let’s just use USB 3.1 ports then (Gen 2). They’re red. ;)

Yep – discovered this around 2 years ago when I bought both USB 2.0 and USB 3.0 HDMI and SDI capture solutions. There are full USB 3.0 solutions out there for a reasonable price (~$100) but they capture at 30/60fps only – with 25/50fps sources crudely converted. Both SD and HD sources can be streamed uncompressed, or optionally use MJPEG.

The USB 2.0 versions (including the ‘fake USB 3.0’ models) capture natively at 25/50/30/60 – but only in MJPEG at HD resolutions (they capture SD sources uncompressed). The audio capture is horrible on them though.

I got full refunds for the mis-advertised USB 3.0 models that were just USB 2.0 with blue plastic (and they didn’t ask for them to be returned), and that re-seller stopped advertising them as USB 3.0.

I’ve got one better. How about a USB 3.0 hub with blue connectors that is in fact only an “enhanced” USB 2.0 hub? The enhancement is that only the first connector is wired to all the USB 3.0 wires. On the other three, the extra contacts are free-floating, connected to nowhere. All four ports are connected to a USB 2.0 expander chip that has its ID marking sanded off. So I spent over $8 for an f’ed-up USB 2.0 hub.

That’s amazing

I’ve got another idea: instead of putting stupid LED bling everywhere it’s not needed in a PC, how about back-lit USB ports.

I’ve got a better idea: “How about a totally solar-powered flashlight using white LEDs?” Backlit USB ports get blocked by plugged-in devices.

The point of being back-lit is finding front panel ports in low light.

I want USB ports with a motorized ejector pin, so when I tell my PC to eject a USB device it will actually do it.

A few years back I ordered a few different USB ‘2.0’ hubs from China. Invariably they were functional USB 1.0 hubs, except for one special device. It was a translucent square shape with a USB port on each side, plus the cable to connect to the host. Inside was a very interesting PCB. It had a place for a ‘blob’ chip to be attached, plus passives, but everything was unpopulated. Instead, the host USB cable was mirrored to all 4 ports!

A few years later I bought a couple of cheap USB 3.0 hubs off ebay (and at that price expected them to be USB 2.0…), surprise surprise it was a 2.0 hub. Actually the connectors were blue USB 3.0, just the hub chip was 2.0. Got a full refund for them (part of my cunning plan), but they were fine as USB 2.0 hubs. Seems like when ordering cheap, expect to get the next standard down.

Reminds me a bit of a USB Ethernet adapter I bought ages ago. It was advertised as capable of 100 Mbit/s Ethernet, but when I connected it, I found it could only do USB 1.1, which simply cannot carry the advertised transfer speed. I had to argue a lot with the seller, because he claimed the device worked as advertised. Well, yes, it would negotiate a 100 Mbit/s Ethernet connection, but one couldn’t use that with only 12 Mbit/s of USB bandwidth.

Nasty. I recently bought a cheap USB-C Ethernet adapter supposedly capable of 2.5 GbE. Unsurprisingly, that requires USB 3.

I’ve yet to push it far enough to find out if it really can do it.

I had one of those as well. It made more sense back when Ethernet hubs were still a thing: unless you had one of the fancy dual-speed hubs, adding a single 10 Mb device dragged every link down to 10 Mb, so there was some genuine utility in having 100 Mb speeds on the Ethernet side even if the USB side was basically only good for 10 Mb/s.

At the time there were also a decent number of systems that only had USB 1.1 (at that point usually slightly older machines, or Macs with FireWire for high-speed peripherals), and a USB NIC plus a USB 2 add-in card was more expensive than just a PCI or PCMCIA 10/100 NIC.

It was a reasonably narrow period of time: once switches became cheap and USB 2 became more or less universal, that type of product moved into ‘scam’ territory, but there was a window where it was a real feature.

From what I gathered, those HDMI grabbers allegedly use a protocol in the 3.0 versions that is only available with USB 3.0.

Or in other words it is not about the speed but about something that is not available with USB2.0 stacks.

Which could be true, I’m not sure.

Not that there isn’t plenty of fake USB3.0 stuff around though, no doubt about that, but perhaps in this case there is a logic to it.

I just checked the cables on my desk. They’re just blue.

Thanks for spoiling my day.

I can’t help but to point out a subtle detail.

The USB Implementers Forum did introduce USB 3.0 as “USB 3.0” back in 2008, but that was back then.

It was renamed USB 3.1 Gen 1 in 2013. But the USB-IF is really good at names, so they renamed it again, to USB 3.2 Gen 1, in 2017.

So officially, there is no such thing as “USB 3.0” any more. I.e., products currently on the market claiming to be “USB 3.0” are most often a scam, since that hasn’t officially been the name for nearly a decade. (A fair few companies have, though, opted for just saying “USB 3” in marketing, or stating the bitrate instead.)

Technically no, 3.2 gen 1 is a different standard to 3.0 – it’s a later version at the same bitrate but there are minor changes to the standard (wording fixes and new features mostly).

It’s mostly not relevant to consumers though.

From what I have read, the USB-IF has more or less stated that no new devices should be marketed as 3.0 or 3.1.

And from what I have read, USB 3.1 Gen 1 is USB 3.0 without any changes other than the name, as far as compatibility is concerned.

The USB 3.1 document, though, also covers USB 3.1 Gen 2, and a USB 3.1 Gen 1 device is allowed to also accept USB 3.1 Gen 2 features where applicable, but this isn’t a requirement.

Same story for USB 3.2 Gen 1 and Gen 2. USB 3.2, though, also added USB 3.2 Gen 2×2, and renamed the prior two USB 3.2 Gen 1×1 and USB 3.2 Gen 2×1.
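The renaming is easier to follow as a lookup table keyed by signaling rate. A minimal Python sketch, assuming the commonly used marketing names (written with an ASCII x where the spec uses ×); it leaves out the two-lane Gen 1x2 mode that USB 3.2 also defines, and the function name is hypothetical.

```python
# Names for each SuperSpeed signaling rate across spec revisions, oldest first.
# Keys are signaling rates in Gbit/s.
NAMES_BY_RATE = {
    5: ["USB 3.0", "USB 3.1 Gen 1", "USB 3.2 Gen 1", "USB 3.2 Gen 1x1"],
    10: ["USB 3.1 Gen 2", "USB 3.2 Gen 2", "USB 3.2 Gen 2x1"],
    20: ["USB 3.2 Gen 2x2"],
}


def same_speed_as(name: str) -> list[str]:
    """All names that refer to the same signaling rate as `name`."""
    for names in NAMES_BY_RATE.values():
        if name in names:
            return names
    raise KeyError(f"unknown USB name: {name!r}")


if __name__ == "__main__":
    print(same_speed_as("USB 3.0"))
```

A product sold as “USB 3.2 Gen 1” is therefore no faster than one sold as “USB 3.0”; the revision number alone says nothing about the speed.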

I think they went wrong when they went with the original ‘speed’ terms. Low speed and full speed. Then came 2.0 with high speed.

Is high speed faster than full speed? How about super speed. There are only so many superlatives in the English language…

Yes, the USB naming convention derailed rather quickly. And most other protocols do not fare any better.

The best approach is to just state version number in regards to feature, and simply state bitrate as far as bitrate is concerned.

Ethernet is a somewhat “good” example, here bitrate is more or less the only number stated. (even though 100 Mb/s is “fast Ethernet”…)

at the time it was fast

no one is thinking

“what if in 10 years this is not fast?, we should name it 2005 fast instead”

Just name it with a monotonically increasing version number or the speed. Like SATA, or Ethernet.

The problem with USB is that 3.0/3.1/3.2 are _revisions_ of the _same spec_, and so they’re all just USB 3 – but they added new speeds in the revisions instead of the new speeds being USB 4 and 5. So people assume USB 3.2 is the new speed that was added in USB 3.2 – but really 3.2 is just the newest revision of USB 3 and includes multiple optional speed modes.

You can have a device built to the USB 3.2 spec but only 5 Gbps like the base USB 3.0 spec had – but still be 3.2 because you implemented some of the _revisions_ to the spec introduced in 3.2. Just not the optional 10 or 20 Gbps speeds.

USB 4 might resolve some of this, as a USB 4 port _must_ support 20 Gbps.

The “We can’t predict the future” stance a lot of developers take is a bit inexcusable when there are better options available. It isn’t remotely hard to expect that things will be faster in the future, and so far that has practically always been the case.

And a lot of other protocols have just stuck to revision numbers and or bandwidth.

The USB-IF naming convention is at least just “names”, and not something functional that we have to consider for decades after it was relevant. Compare what Intel did with x86 memory addressing: back in the late 70s Intel decided that “1 MB of RAM is the goal!”, so for a 16-bit CPU that means we still, to this day, boot with two address registers, a segment and an offset, where the segment is shifted 4 bits relative to the offset… They should have just shifted the second register by 16 bits and made it able to handle 4 GB, even if that was completely beyond what they could foresee as realistic even 20 years ahead. (And they learned their lesson: today’s CPUs use 64-bit addresses, even if 16 EiB is completely unrealistic for the next 30 years.)
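The segment:offset scheme described above boils down to one formula: physical address = segment × 16 + offset. A minimal Python sketch, assuming 8086-style wraparound at 1 MB when the A20 line is disabled; the function and parameter names are my own.

```python
def real_mode_address(segment: int, offset: int, a20_enabled: bool = False) -> int:
    """Physical address for an x86 real-mode segment:offset pair.

    The 16-bit segment is shifted left by 4 bits (multiplied by 16) and added to
    the 16-bit offset, giving a 20-bit, 1 MB address space. With the A20 line
    disabled, addresses past 1 MB wrap around to zero as they did on the 8086.
    """
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset are 16-bit values")
    linear = (segment << 4) + offset
    return linear if a20_enabled else linear & 0xFFFFF


if __name__ == "__main__":
    # Text-mode video memory on PC compatibles lives at segment 0xB800.
    print(hex(real_mode_address(0xB800, 0x0000)))
    # Different segment:offset pairs can alias the same physical byte.
    print(real_mode_address(0x0000, 0x0010) == real_mode_address(0x0001, 0x0000))
```

Because the two registers overlap by 12 bits, many segment:offset pairs name the same byte, and the top of the range only reaches just past 1 MB.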

Had the exact same issue with Atomos HDMI capture card.

I checked around a bit and it might be related to ‘USB3 Vision’: https://en.wikipedia.org/wiki/USB3_Vision

And specifically to the so-called ‘zero-copy’ feature, perhaps, which avoids hiccups in data transfer.

The Wikipedia article links to this on the subject of zero-copy as implemented in Linux: https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=f7d34b445abc00e979b7cf36b9580ac3d1a47cd8

Can anyone share how “lsusb -t” shows the usb speed?

Nevermind. 480M vs 5000M

Oh man! These not-USB-3 connectors are making me blue!