When we last left this broadening subject of handwriting, cursive, and moveable type, I was threatening to sing the praises of speech-to-text programs. To me, these seem like the summit of getting thoughts committed to what passes for paper these days.

A common thread in humanity’s tapestry is that we all walk around with so much going on in our heads, and no real chance to get it out stream-of-consciousness style without missing a word — until we start talking to each other. I don’t care what your English teacher told you — talking turns to writing quite easily; all it takes is a willingness to follow enough of the rules, and to record it all in a readable fashion.

But, alas! That suggests that linear thinking is not only possible, but that it’s easy and everyone else is already doing it. While that’s (usually) not true, simply thinking out loud can get you pretty far down the road in a lot of mental vehicles. You just have to record it all somehow. And if your end goal is to have the words typed out, why not skip the voice recorder and go the speech-to-text route?

Communication Breakdown

Some programs are better than others, but you get back what you put into them — especially with the higher-end, super-trainable kind like Dragon Dictation. If you’ve listened to me on the Podcast, you might understand how difficult it could be for a robot to understand the nuances of my speech 100% of the time.

While this is not meant to be an ad for any particular service or software package, there have been days where the nerve damage in my arms and long recovery from surgery prevented me from doing any typing or mousing without agony and regret. So what’s a professional writer supposed to do? Hire a typist and dictate? Then I’d have to share my paycheck. And who could be themselves with a typist shoehorned between their brain and the digital page, anyway? If good writing is a conversation between writer and reader, then there’s really no room for a third party, not even for transcription.

Necessary Evils

The problem I have with speech-to-text programs is that I have to speak slowly and robotically for best results. No, actually, that’s not the real problem. It’s that I get going about something and establish a good flow, but Dragon picks up words here and there that are just wrong. Then I go back through the talk-writing, see stuff I don’t recognize, and thoughts get lost that way.

So I either need to use dictation programs enough that they understand me a hundred percent of the time, or I need to check myself every paragraph and make sure that what I said didn’t get garbled. As usual, the answer is some combination of both.

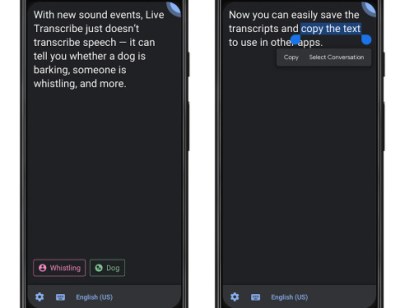

Dragon is much better than what I used to use, which is Google’s built-in speech-to-text converter in Google Docs. Although it isn’t terrible as far as utility goes, I don’t like the idea of the very roughest draft form of my writing being right there in Google’s hands. Or ears, as it were. I know, I know, any program is gonna send my words over the Internet anyway, but it’s the principle of the thing. Interestingly enough, Google open-sourced their transcription engine in 2019. I guess if Dragon ever does me dirty, I might go that route.

Complications aside, for someone like me, any semblance of speech-to-text feels like a godsend. It’s gotten me through some of my darker, nerve-damaged days, and it’s well worth the amount for which I was reimbursed so graciously by my employers. When I have a lot to say quickly, I’ll just use a voice recorder. Once in a while, I’ll play the recording into Dragon and marvel at the marriage of technologies doing my bidding. Anything to get the words out.

I recall a documentary about this subject, probably last century.

It ended with the phrase “Who knows, some time in the future we may be able to get a machine to wreck a nice beach” (cut to toy excavators digging up sandcastles).

A lot comes from context, and even humans can be easily tricked.

What?

Wreck a nice beach = recognise speech

Indeed. In fact if I recorded my own voice saying those two sentences I probably couldn’t tell which one I was saying.

Homophones and punctuation can make or break you.

To quote Martha Snow:

Eye halve a spelling chequer, It came with my pea sea

It plainly marques four my revue, Miss steaks eye kin knot sea.

Eye strike a quay and type a word, And weight four it two say

Weather eye am wrong oar write, It shows me strait a weigh.

As soon as a mist ache is maid, It nose bee fore two long

And eye can put the error rite, It’s rare lea ever wrong.

Eye have run this poem threw it, I am shore your pleased two no

It’s letter perfect awl the weigh, My chequer tolled me sew.

Icy watch you’d id dare.

I don thunder stand.

peach recognition has come a long way. Before it could not under stand any peach, but now is can under stand all my peach. peach is vary hard for a computer to deal with. four every ruler in the english language their are many exceptions, and it does not under stand all peach depending on the speakers.

Free dumb F’ peach!

What?!

I must be missing something because all these comments are making me feel like I’m losing my mind…

Freedom of speech

I’ve been using Live Transcribe because I am deaf and communication can be difficult, especially when people wear masks. It’s OK for the most part, but now and then there’s a hilarious transcription failure. My aunt was talking about getting a bagel with cream cheese, and Google transcribed it as “bagel with green cheese” somehow…

As a long-time Dragon user, I think you will find the accuracy improves a lot if you use a noise-canceling headset microphone and give it 30 minutes of training. They try to pretend that’s not needed :). It’s used with high accuracy by services that re-speak audio to produce captions for people who are hearing impaired.

Yes, you should never toke your spellchecker or voice repetition for granite. It will lead you Australia.

Google captions capitalizes “trump,” whether it’s the verb or the card-suit noun, as “Trump.” No, trump trumps this.

Whisper is open source and can handle accents and speed talking remarkably well. See https://openai.com/blog/whisper/

Saw an old comedy skit… A lady on a street corner asks people how to send a message on her cellphone, an early flip phone. One guy says that to make an “R” you press 7 and #… another guy says no, press * and 7… (Remember, this is a comedy skit.) Finally a guy helps her type her message and says, now we press send… The lady says, You sent RAPE TONIGHT… It’s supposed to say RAVE TONIGHT!… Oh… Same difference. 😂

I understand speech-to-text for accessibility, it’s awesome, but for me writing and speaking seem to run on very different brain circuits. I can type way faster than I can speak and translating from brain text to words would be so slow. Honestly I think I’d get super frustrated trying to use speech-to-text.

This. Talking is so slow…

Meeting or other conversational transcription is another use case I’d find useful. I gather the Google option on Pixel phones is particularly good at this (one YouTuber says he got useful, properly attributed transcription of an interview with the phone in his *pocket* the whole time; I’m pretty sure that runs on-device on the recent Pixels, too).

Like handwriting, the U.S. Government-controlled education system has abandoned teaching our students clear understandable oral communication skills (speech). Approach even a “highly educated” U.S. young person today and ask them to read out loud the block-text printed word “IMPORTANT” three times in succession, and listen to the results. See what I mean? Never write the word “IMPORTANT” in cursive; the undereducated young person may not be able to read it.

Important correction: as far as I can tell, Google didn’t actually open-source their transcription engine (or at least not the speech recognition part), sadly. They open-sourced parts of their cloud client app. (Maybe “transcription engine” means something other than the part that actually recognizes and transcribes speech, and I just misunderstood.) The OpenAI one looks more real, though it needs more supporting code.

I recently used “Nerd Dictation” to ease commenting on merge requests, etc., while dealing with a wrist injury. It’s pretty nice and customizable, even if not theoretically as good as others (like my Google-powered transcription), and a few lines of Python are all it takes to bend it to your whim. I had start, stop, and cancel bound to hotkeys on a macro pad.
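For the curious, here is a rough idea of what “a few lines of Python” might look like. This is a minimal sketch, not the commenter’s actual setup. It assumes nerd-dictation’s user configuration file at `~/.config/nerd-dictation/nerd-dictation.py` and a hook function named `nerd_dictation_process(text)` that receives the recognized text and returns what gets typed, as recalled from the project’s README (check the current docs). The spoken-punctuation map is a hypothetical example.

```python
# Sketch of a nerd-dictation user config (assumed path:
# ~/.config/nerd-dictation/nerd-dictation.py). The hook name is assumed
# from the project's README; the word map below is hypothetical.
import re

# Spoken words to turn into punctuation (hypothetical choices).
WORD_REPLACE = {
    "comma": ",",
    "period": ".",
    "colon": ":",
    "question mark": "?",
}

# Try longer phrases first so multi-word entries like "question mark" match whole.
_KEYS = sorted(WORD_REPLACE, key=len, reverse=True)
# \s* swallows the space before the spoken word; \b stops "commas" from matching "comma".
_PATTERN = re.compile(r"\s*\b(" + "|".join(map(re.escape, _KEYS)) + r")\b")


def nerd_dictation_process(text):
    """Assumed hook: take recognized text, return the text to be typed."""
    return _PATTERN.sub(lambda m: WORD_REPLACE[m.group(1)], text)
```

With this in place, saying “looks good comma ship it period” would come out as “looks good, ship it.” The matching is case-sensitive, on the assumption that the recognizer emits lowercase words.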