Thanks to stints as an X-ray technician in my early 20s followed by work in various biology labs into my early 40s, I’ve been classified as an “occupationally exposed worker” with regard to ionizing radiation for a lot of my life. And while the jobs I’ve done under that umbrella have been vastly different, they’ve all had some common ground. One is the required annual radiation safety training classes. Since the physics never changed and the regulations rarely did, these sessions would inevitably bore everyone to tears, which was a pity because it always felt like something I should be paying very close attention to, like the safety briefings flight attendants give but everyone ignores.

The other thing in common was the need to keep track of how much radiation my colleagues and I were exposed to. Aside from the obvious health and safety implications for us personally, there were legal and regulatory considerations for the various institutions involved, which explained the ritual of finding your name on a printout and signing off on the dose measured by your dosimeter for the month.

Dosimetry has come a long way since I was actively considered occupationally exposed, and even further from the times when very little was known about the effects of radiation on living tissue. What the early pioneers of radiochemistry learned about the dangers of exposure was hard-won indeed, but gave us the insights needed to develop dosimetric methods and tools that make working with radiation far safer than it ever was.

Rads and Rems, Sieverts and Grays

While there are a lot of tools for measuring the dose of radiation a person receives, there needs to be some way to put that data into a meaningful biological context. To that end, a whole ecosystem of measurement systems exists, all of which boil down to some basic principles of physics and biology.

The first principle is that all sources of radiation are capable of imparting energy to tissue, either in the form of charged particles (alpha and beta radiation) or electromagnetic waves (gamma radiation and X-rays). Different types of radiation affect tissue differently, and those differences need to be taken into account when calculating dose, through weighting factors that reflect the relative biological effectiveness (RBE) of the radiation. This is basically a measure of how much punch the radiation packs. For example, alpha particles, which are relatively massive helium nuclei, are weighted 20 times higher than beta, gamma, or X-rays.
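As a rough sketch of how that weighting is applied, the snippet below scales the absorbed dose from each radiation type by its weighting factor and sums the results. The factors are the ICRP Publication 103 values for the radiation types mentioned above (20 for alpha, 1 for beta, gamma, and X-rays). The dictionary and function names are made up for illustration, and neutrons, whose factor depends on their energy, are left out.

```python
# Radiation weighting factors (dimensionless) from ICRP Publication 103 for
# the radiation types discussed above. Neutrons are left out because their
# factor is a continuous function of neutron energy.
RADIATION_WEIGHTING = {
    "alpha": 20.0,
    "beta": 1.0,
    "gamma": 1.0,
    "xray": 1.0,
}


def equivalent_dose_sv(absorbed_gy_by_type: dict[str, float]) -> float:
    """Equivalent dose (Sv) to one tissue.

    absorbed_gy_by_type maps each radiation type to the absorbed dose (Gy)
    it delivered to that tissue; each dose is scaled by its weighting factor
    and the results are summed.
    """
    return sum(
        RADIATION_WEIGHTING[rad_type] * dose_gy
        for rad_type, dose_gy in absorbed_gy_by_type.items()
    )


if __name__ == "__main__":
    # The same 1 mGy absorbed dose counts 20 times more when it comes from alpha particles.
    print(equivalent_dose_sv({"gamma": 0.001}))  # Sv
    print(equivalent_dose_sv({"alpha": 0.001}))  # Sv
```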

The second principle behind dosimetry is biological in nature, and reflects the fact that in almost all cases, whatever deleterious effects of radiation an organism experiences are caused by interactions with its DNA. There are certainly other effects, like ionization in the cytoplasm of cells and production of free radicals, but by and large, the big problems with radiation happen as a result of it crashing into DNA, particularly while the DNA is in the act of replicating itself. That’s why the rapidly dividing cells in the blood-forming organs (bone marrow mostly), the linings of the digestive system, and the gonads are particularly sensitive to radiation.

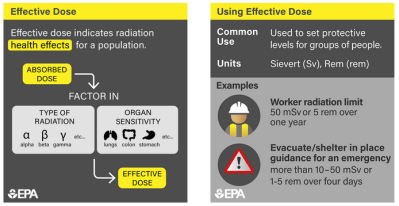

The need to take all these factors and more into account has resulted in a host of dosimetric measurement systems, with units crafted for different applications. In the SI system, the basic unit of absorbed dose is the gray (Gy), which is one joule per kilogram of matter. The equivalent dose, which takes into account the RBE of the radiation, is measured in sieverts (Sv), which is just the absorbed dose in joules per kilogram multiplied by the dimensionless radiation weighting factor. The effective dose is also expressed in sieverts; it is the sum, over all exposed tissues, of each tissue’s equivalent dose multiplied by another dimensionless factor based on that tissue’s sensitivity to radiation. To add to the complexity, non-SI units (rads for absorbed dose, rems for equivalent and effective dose; 1 Gy = 100 rad and 1 Sv = 100 rem) are still in wide use, and many dosimeters are still calibrated in these units.
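The step from equivalent dose to effective dose, plus the conversions to the older units, can be sketched as follows. The tissue weighting factors are the ICRP Publication 103 values, which sum to 1, so a uniform whole-body equivalent dose gives the same effective dose; "remainder" stands in for a group of other tissues. The dictionary keys and function names are hypothetical labels chosen for this example.

```python
# Tissue weighting factors from ICRP Publication 103 (2007). They sum to 1.
# "remainder" lumps together a group of other tissues.
TISSUE_WEIGHTING = {
    "red_bone_marrow": 0.12, "colon": 0.12, "lung": 0.12,
    "stomach": 0.12, "breast": 0.12, "remainder": 0.12,
    "gonads": 0.08,
    "bladder": 0.04, "oesophagus": 0.04, "liver": 0.04, "thyroid": 0.04,
    "bone_surface": 0.01, "brain": 0.01, "salivary_glands": 0.01, "skin": 0.01,
}

GY_TO_RAD = 100.0  # 1 Gy = 100 rad
SV_TO_REM = 100.0  # 1 Sv = 100 rem


def effective_dose_sv(equivalent_sv_by_tissue: dict[str, float]) -> float:
    """Weight each tissue's equivalent dose (Sv) and sum to an effective dose (Sv)."""
    return sum(TISSUE_WEIGHTING[t] * h for t, h in equivalent_sv_by_tissue.items())


def sv_to_rem(sv: float) -> float:
    """Convert sieverts to rem."""
    return sv * SV_TO_REM


def gy_to_rad(gy: float) -> float:
    """Convert grays to rad."""
    return gy * GY_TO_RAD


if __name__ == "__main__":
    # 10 mSv equivalent dose delivered to the thyroid alone.
    e = effective_dose_sv({"thyroid": 0.010})
    print(f"{e * 1000:.2f} mSv = {sv_to_rem(e) * 1000:.2f} mrem")
```

Because only the thyroid is exposed in the usage example, its 10 mSv equivalent dose shrinks to a much smaller effective dose once the 0.04 weighting is applied.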

Foggy Film

Although ad hoc methods of recording the dose received by occupationally exposed radiation workers go back well into the early years of radiochemistry, the first attempt to create a systematic method of monitoring radiation workers is credited to E.O. Wollan, a physicist who worked at the Chicago Metallurgical Laboratory, the part of the Manhattan Project that was looking into the radiochemistry of plutonium. Recognizing the need to monitor exposures accurately, Wollan came up with the first film badge dosimeter in 1942. His device was simple: a lightproof envelope containing a strip of photographic film slipped into an aluminum holder. Wollan also included a filter made of cadmium, to even out the film’s response to different kinds of radiation. Developing the film would show the degree to which radiation had fogged it, which could easily be read with an optical densitometer.
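Reading a developed badge boils down to measuring how much light the fogged film blocks and mapping that to dose with a calibration curve. The sketch below assumes a single film window, a fog reading taken from an unexposed control film, and a made-up calibration table with linear interpolation between points; real badge services combine readings from behind the different filters, which this ignores. All names and numbers are hypothetical.

```python
import bisect
import math

# HYPOTHETICAL calibration for one film batch: (net optical density, dose in mSv).
# In practice these points come from developing films exposed to known doses.
CALIBRATION = [(0.0, 0.0), (0.1, 0.5), (0.3, 2.0), (0.6, 5.0), (1.0, 10.0)]


def optical_density(incident: float, transmitted: float) -> float:
    """Densitometer reading: OD = log10(I0 / I)."""
    return math.log10(incident / transmitted)


def dose_from_net_od(net_od: float) -> float:
    """Linearly interpolate dose (mSv) from net optical density."""
    ods = [od for od, _ in CALIBRATION]
    if net_od <= ods[0]:
        return CALIBRATION[0][1]
    if net_od > ods[-1]:
        raise ValueError("optical density is beyond the calibrated range")
    # Index of the first calibration point above net_od (clamped to the last segment).
    i = min(bisect.bisect_right(ods, net_od), len(ods) - 1)
    (od0, d0), (od1, d1) = CALIBRATION[i - 1], CALIBRATION[i]
    return d0 + (d1 - d0) * (net_od - od0) / (od1 - od0)


if __name__ == "__main__":
    badge_od = optical_density(100.0, 40.0)  # HYPOTHETICAL light readings
    fog_od = optical_density(100.0, 80.0)    # unexposed control film
    print(f"{dose_from_net_od(badge_od - fog_od):.2f} mSv")
```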

Given their ease of use, low cost, high sensitivity, and compact size, film badge dosimeters were the de facto standard until relatively recently. Modern versions were more likely to be made from plastic than Dr. Wollan’s aluminum, but they still incorporated a variety of metals to act as filters. Developing film badges and even reading them eventually became automated processes, and systems were developed that could handle millions of badges every month.

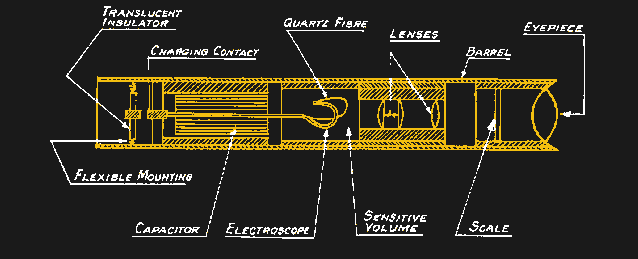

One disadvantage of film badge dosimeters is that they provide no feedback on the dose received until after they’ve been worn for a month and developed. While that’s probably fine in most medical and academic settings, where the radiation exposure is expected to be generally low, some dosimeters are designed to provide ongoing readings for cases where the environment is a bit more energetic. One design, the self-indicating pocket dosimeter (SIPD), dates all the way back to 1937. The SIPD generally takes the form of a tube about the size of a pen and contains a sealed air-filled chamber at one end. Inside the chamber is an electroscope, consisting of a flexible quartz fiber and a fixed electrode, and a microscope with a calibrated reticle. When a high voltage is applied to the electrode, an electrostatic field bends the quartz fiber toward the zero mark on the reticle; as ionizing radiation passes through the chamber, it ionizes the air inside, and the ions slowly neutralize the charge on the electrode, allowing the fiber to move further up the reticle scale. Wearers could easily keep track of dose by looking through the microscope before charging the chamber back up with a battery-powered portable charger.
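The reticle is calibrated directly in dose units, but underneath it the SIPD is a small air ionization chamber, so the reading can be sketched from first principles: the charge lost is the capacitance times the voltage drop, each coulomb of ions collected in air corresponds to about 33.97 J deposited (the W/e value for dry air), and dividing by the mass of the air gives the absorbed dose. The capacitance, chamber volume, and voltage drop in the example are hypothetical, and the function name is made up.

```python
# Physical constants for dry air.
W_AIR_J_PER_C = 33.97      # mean energy deposited per unit charge of ions formed (J/C)
AIR_DENSITY_KG_M3 = 1.205  # dry air at 20 °C and 101.325 kPa


def chamber_dose_gy(capacitance_f: float, volts_lost: float, volume_m3: float) -> float:
    """Absorbed dose (Gy) to the air in an ionization chamber from its voltage drop.

    Charge neutralized by ions: Q = C * dV.
    Energy deposited in the air: E = Q * (W/e).
    Dose: D = E / m_air, with m_air = density * volume.
    """
    charge_c = capacitance_f * volts_lost
    energy_j = charge_c * W_AIR_J_PER_C
    mass_kg = AIR_DENSITY_KG_M3 * volume_m3
    return energy_j / mass_kg


if __name__ == "__main__":
    # HYPOTHETICAL chamber: 2 pF, 2 cm^3 of air, electrode voltage dropped by 20 V.
    d = chamber_dose_gy(2e-12, 20.0, 2e-6)
    print(f"{d * 1000:.3f} mGy to the chamber air")
```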

Bright yellow SIPDs bearing the familiar red, white, and blue Civil Defense logo became very popular during the Cold War in the United States. Millions of the devices were made, some calibrated with scales that would be useful only in catastrophically high radiation environments. SIPDs are extremely robust devices, and most of them still work after many decades; even the chargers, with their very simple electronics, can still be found in working order.

Solid(er) State

Film badges and quartz-fiber SIPDs were handy, but technology marches on, and cheaper, better methods for dosimetry have largely supplanted them. Thermoluminescent Dosimetry (TLD) has become a very popular method for keeping track of exposure. It relies on the tendency of certain materials to “trap” electrons excited by high-energy photons passing through them. These trapped electrons, which accumulate in the crystal matrix in proportion to the amount of radiation that has passed through it, can be released simply by applying some heat; as they drop back to their ground state, they give off light, which is picked up by a photodetector and used to calculate the dose received.
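A TLD reader's job can be sketched in two steps: integrate the photodetector signal over the heating cycle (the "glow curve") to get the total light released, then convert that light to dose with a calibration factor. The sketch below assumes a linear calibration and uses the trapezoidal rule; the sample signal, background, and counts-per-mGy factor are hypothetical, as are the function names.

```python
def integrate_glow_curve(times_s: list[float], counts_per_s: list[float]) -> float:
    """Total light (photodetector counts) over the heating cycle, by the trapezoidal rule."""
    if len(times_s) != len(counts_per_s) or len(times_s) < 2:
        raise ValueError("need at least two matching time/signal samples")
    total = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        total += 0.5 * (counts_per_s[i] + counts_per_s[i - 1]) * dt
    return total


def tld_dose_mgy(
    times_s: list[float],
    counts_per_s: list[float],
    background_counts: float,
    counts_per_mgy: float,
) -> float:
    """Convert the integrated glow curve to dose (mGy) with a linear calibration factor.

    background_counts and counts_per_mgy are HYPOTHETICAL calibration inputs.
    Negative net signals are clamped to zero.
    """
    net = integrate_glow_curve(times_s, counts_per_s) - background_counts
    return max(net, 0.0) / counts_per_mgy


if __name__ == "__main__":
    # HYPOTHETICAL readout: photodetector signal sampled once per second while heating.
    t = [0.0, 1.0, 2.0, 3.0, 4.0]
    signal = [0.0, 100.0, 400.0, 100.0, 0.0]
    print(f"{tld_dose_mgy(t, signal, background_counts=50.0, counts_per_mgy=1000.0):.3f} mGy")
```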

TLDs for most commercial dosimetric applications are based on crystals of lithium fluoride doped with small amounts of magnesium and titanium, which create electron traps. TLD crystals can be small enough to build into a plastic ring, to monitor the dose received by the extremities while handling radioisotopes, for example. A related method, known as optically stimulated luminescence (OSL), uses a beryllium oxide ceramic as the trapping material; electrons are released from the traps by a laser tuned to a specific frequency, and a photodetector reads the emitted light.

Like film badges, TLD and OSL provide no real-time feedback to the wearer on dosage. Luckily, compact electronic personal dosimeters (EPDs) are now in wide use. Most EPDs use the humble PIN diode as a sensor. Reverse-biased PIN diodes are sensitive to light thanks to their large, undoped intrinsic region (the “I” in PIN), and EPDs use them in much the same way to count photons of ionizing radiation that pass through them. Charge carriers are created when a photon hits the intrinsic layer, resulting in a small current that can be amplified. A microcontroller totals up the counts and displays the calculated equivalent dose; most EPDs also have options to sound an alarm if setpoints are reached.
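The counting-and-alarm loop described above can be sketched as a small class. Everything numeric here is hypothetical: the counts-per-microsievert calibration and the alarm setpoints are illustrative, and real devices apply corrections this sketch ignores. The class and method names are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalDosimeter:
    """Toy model of an EPD: count diode pulses, convert to dose, check setpoints.

    counts_per_usv and both setpoints are HYPOTHETICAL values.
    """
    counts_per_usv: float
    dose_alarm_usv: float
    rate_alarm_usv_h: float
    total_counts: int = 0
    alarms: list[str] = field(default_factory=list)

    @property
    def dose_usv(self) -> float:
        """Accumulated dose in microsieverts."""
        return self.total_counts / self.counts_per_usv

    def record_interval(self, counts: int, seconds: float) -> None:
        """Add pulses counted over one sampling interval, then check both setpoints.

        An alarm message is appended for every interval in which a setpoint is met or exceeded.
        """
        self.total_counts += counts
        rate_usv_h = (counts / self.counts_per_usv) * 3600.0 / seconds
        if rate_usv_h >= self.rate_alarm_usv_h:
            self.alarms.append(f"rate {rate_usv_h:.1f} uSv/h")
        if self.dose_usv >= self.dose_alarm_usv:
            self.alarms.append(f"dose {self.dose_usv:.1f} uSv")


if __name__ == "__main__":
    epd = PersonalDosimeter(counts_per_usv=50.0, dose_alarm_usv=100.0, rate_alarm_usv_h=1000.0)
    epd.record_interval(500, 60.0)   # quiet minute
    epd.record_interval(5000, 60.0)  # hot minute
    print(epd.dose_usv, epd.alarms)
```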

Another interesting radiation sensor, although one used more for in vivo dosimetry during radiation therapy than for personal dosimetry, is the MOSFET, which has properties similar to those of the materials used in TLDs. The area of a MOSFET that’s sensitive to ionizing radiation is the silicon dioxide layer that separates the gate from the source and the drain. When radiation travels through the MOSFET, electron-hole pairs are created in the SiO2 layer. The holes quickly migrate to the interface between the SiO2 and the N-type silicon, where they gradually increase the threshold voltage of the transistor. The more radiation it has received, the harder it’ll be to turn on the MOSFET; the total dose can be calculated by measuring this change.

The interesting thing about MOSFET dosimeters is that the accumulated damage in the transistor serves as a permanent record of the dose received. The disadvantage is that the accumulated damage to the MOSFET eventually makes it unusable as a sensor. The practical limit is an absorbed dose of about 100 Gy, which is more than three times the whole-body absorbed dose that’s 100% lethal within 48 hours.
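Reading a MOSFET dosimeter comes down to measuring the threshold voltage before and after exposure and dividing the shift by a calibrated sensitivity. The sketch below assumes a linear response and a hypothetical sensitivity of 100 mV/Gy (real values depend on the device and its bias and are found by calibration), works with threshold-voltage magnitudes so the sign convention of the transistor doesn't matter, and uses the roughly 100 Gy limit mentioned above to estimate remaining sensor life. Function names are made up.

```python
# HYPOTHETICAL sensitivity: threshold-voltage shift per gray, for illustration only.
SENSITIVITY_MV_PER_GY = 100.0
# Approximate end-of-life accumulated dose quoted in the text.
MAX_ACCUMULATED_GY = 100.0


def mosfet_dose_gy(vth_before_mv: float, vth_after_mv: float) -> float:
    """Dose (Gy) from the growth in threshold-voltage magnitude, assuming a linear response."""
    shift_mv = abs(vth_after_mv) - abs(vth_before_mv)
    return shift_mv / SENSITIVITY_MV_PER_GY


def remaining_life_gy(vth_initial_mv: float, vth_now_mv: float) -> float:
    """Dose the sensor can still accept before reaching the ~100 Gy practical limit."""
    return MAX_ACCUMULATED_GY - mosfet_dose_gy(vth_initial_mv, vth_now_mv)


if __name__ == "__main__":
    # HYPOTHETICAL readings: threshold moved from -1000 mV to -1200 mV.
    print(mosfet_dose_gy(-1000.0, -1200.0), "Gy delivered")
    print(remaining_life_gy(-1000.0, -1200.0), "Gy of sensor life left")
```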

In geotechnical engineering, field technicians use a Troxler device to check soil compaction during construction. All technicians are required to wear a dosimetry badge during operation and transport.

That’s because the Troxler uses a cesium source with a beryllium buffer core.

Each badge has to be processed at specific times for the safety of the technician using it.

TLD Badges:

Don’t run them through the washer and dryer unless you want to “erase” the dose by temperature cycling. But, if you sent your badge through airport security, that process can come in handy…

Not sure of the effect on TLDR Badges…

I wonder what you’d suggest for dealing with airport security when you need to take your dosimeter with you :-)

It’s a pain in the arse. Pre-warn them and have some documentation.

If you put them in checked luggage they receive a very large dose. I assume they put them through an industrial X-ray machine.

If you try and carry them on then the security bods get very excited. They don’t recognise it and as soon as your explanation includes the word ‘radiation’ they sit you down in a little room until men in suits can come and talk to you. They held the plane for me and let me on eventually though.

It’s really not that big of a deal. You just hand over your dosimeter, say you are in a radiation monitoring program, and ask that it not be X-rayed. They just hand it back to you on the other side.

Ok. My post was about what happened to me at Heathrow about 10 years ago.

Love dosimeter badges.

When working in a synchrotron lab in college, we would get yelled at if we took the badges out for lunch. An hour’s worth of background radiation apparently would show up on them.

And then there’s the time my father left his badge in the yellow-glazed ashtray on his desk, and got a visit from the radiation safety team.

I don’t quite get it. If the badges were supposed to measure your radiation dose, then why ignore the background radiation during lunch? It’s as valid as any other radiation.

Also, was the lab environment shielded from background radiation, so that the average dose there was lower? Why measure it, if the expected dose is below the background level? So many questions…

Because the radiation from that ashtray was far above the background radiation level. The glass was manufactured with 2 to 25% uranium ore.

Your response had nothing whatsoever to do with the content of the question.

It addressed this part: “And then there’s the time my father left his badge in the yellow-glazed ashtray on his desk, and got a visit from the radiation safety team.”

Interesting enough.

The badges were to measure inadvertent radiation exposure from the synchrotron.

https://en.wikipedia.org/wiki/Synchrotron

A synchrotron can produce all kinds of radiation when smashing atoms and subatomic particles.

It is shielded to keep that radiation away from the operators and workers in the lab. The dosimeters were there to make sure that the people weren’t exposed to radiation from the synchrotron.

The lab was very well shielded to keep any radiation it generated from escaping to the outside world. Shielding works both ways, though, to the extent that normal background radiation couldn’t get into the lab.

If you wear your dosimeter out of the lab, it will show an increase in your radiation exposure. Procedures would then call for checking the synchrotron for radiation leaks. That means taking it out of normal operation while checking it. It probably also means that the technicians have to wear extra shielding (lead impregnated clothing) to carry out the safety checks.

All of that costs operating time and money – and all just because you forgot to remove your badge before leaving the lab.

That’s why you got yelled at for taking your dosimeter badge to lunch.


This. Basically, b/c it was underground and super well shielded, we got less than background doses while at work. If you took the badge out to lunch (presumably a few times? I never tested it) it would show as a spike and they would think something’s wrong with the shielding.

As a measure of additional exposure from work, it was the right way to do it.

Ironic, though, that I probably got less radioactivity working in that lab than I would have at a bagel shop.

There’s another type of dosimeter that I’ve always thought was really cool/clever – bubble dosimeters. They are used for neutron dose measurements (which is otherwise really difficult) and are unbelievably simple – they are a tube of polymer gel with tiny droplets of liquid suspended in them. A neutron interacting in the vial causes the droplets to vaporize and this produces a void (bubble) in the gel that remains. To read them, you simply shine a light through them and count the number of bubbles, which turns out to correlate quite accurately to the total neutron dose.

We wore TLDs at Browns Ferry, and I always wondered how they worked. – Thanks.

I got my 5 rem/year dose in 30 min after the robot that loads the phosphorus-32 into its tungsten holder crashed. I was suited up like a mummy in lead clothing. I’m fine though, despite the ear lobe growing on the back of my neck…jk.

Slightly confusing post. 32P is a high-energy beta emitter, and when its betas interact with high-atomic-number atoms (like lead or tungsten) they produce bremsstrahlung, also known as X-rays. (Historically, though, X-rays were made with electrical generators while brem came from beta emitters; only later was it realised that they were of the same origin. ‘Characteristic X-rays’ are, naturally, the reverse!) The problem is that the brem produced by 32P on lead is fairly high energy and needs several millimeters of lead to make safe. The usual way of protecting from 32P is by using low atomic number compounds such as plastics, perhaps with a little lead outside to mop up the much lower energy brem.

Or was your exposure from the 32P on the tungsten? Was this some sort of an industrial brem source?

You are correct – While working in a kinase lab, we regularly used a mCi of hot p32 to make hot ATP for our assays. The p32 came in a vial housed in a thick lead pig. However, when making hot ATP, shielding consisted of stacks of rectangular tissue culture bottles filled with water (an excellent beta absorber) and plexiglass shielding. Thin lead shielding was to be avoided due to the production of secondary x-rays from bremsstrahlung radiation.
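The shielding advice in the last two comments (plastic or water first, lead only outside it) can be made roughly quantitative with a commonly quoted health-physics rule of thumb for the fraction of beta energy converted to bremsstrahlung: f ≈ 3.5 × 10⁻⁴ · Z · E_max, with E_max in MeV (1.71 MeV for 32P). It is only an approximation, and treating plastic as Z ≈ 6 (carbon) is an assumption of this sketch; the function name is made up.

```python
# 32P maximum beta energy in MeV.
P32_EMAX_MEV = 1.71


def brems_fraction(z: float, e_max_mev: float = P32_EMAX_MEV) -> float:
    """Rule-of-thumb fraction of beta energy re-emitted as bremsstrahlung: 3.5e-4 * Z * E_max."""
    return 3.5e-4 * z * e_max_mev


if __name__ == "__main__":
    for name, z in [("lead", 82), ("plastic (Z taken as ~6)", 6)]:
        print(f"{name}: {brems_fraction(z):.2%} of beta energy emitted as X-rays")
```

Because the estimate scales linearly with Z, stopping the betas in lead produces over ten times as much X-ray energy as stopping them in plastic, which is why the thin lead shielding was avoided.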

In that YouTube video about thermoluminescence, a tablet of calcium fluoride is used. Isn’t that the same as using a human tooth? Wouldn’t a tooth give the same effect as the tablet?

Sadly, no. Teeth contain mainly calcium as hydroxyapatite, a phosphate. The calcium fluoride used for TLD is specially grown and doped for optimised sensitivity.