The demoscene never ceases to amaze. Back in the mid-80s, people wouldn’t just hack software to remove the copy restrictions, but would go the extra mile and add some fun artwork and greetz. Over the ensuing decade the artform broke away from the cracks entirely, and the elite hackers were making electronic music with amazing accompanying graphics to simply show off.

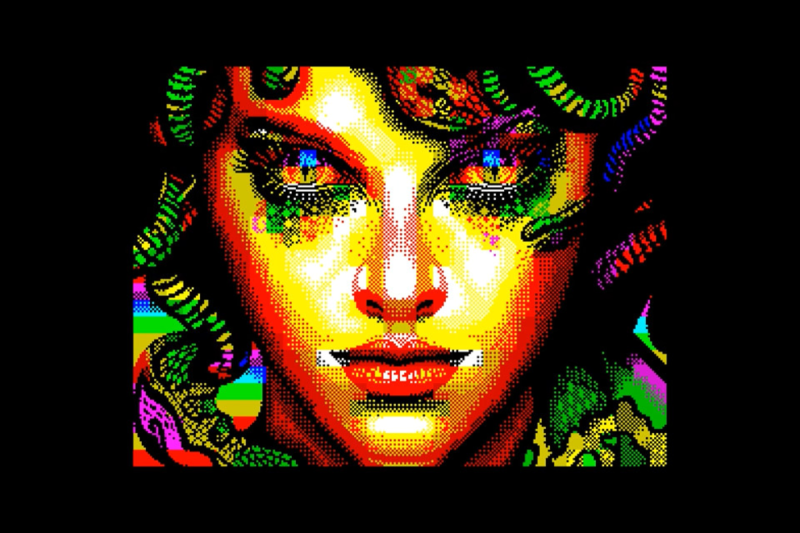

Looked at from today, some of the demos are amazing given that they were done on such primitive hardware, but those were the cutting-edge home computers at the time. I don’t know what today’s equivalent is; with CGI-powered blockbusters running in mainstream cinemas, the state of the art in graphics has moved on quite a bit. But the state of the old art doesn’t rest either. I’ve just seen the most amazing demo on a ZX Spectrum.

Simply put, this demo does things in 2022 on a computer from 1982 that were literally impossible at the time. Not because the hardware was different – this is using retro gear after all – but because the state of our communal knowledge has changed so dramatically over the last 40 years. What makes 2020s demos more amazing than their 1990s equivalents is that we’ve learned, discovered, and shared enough new tricks with each other that we can do what was previously impossible. Not because of silicon tech, but because of the wetware. (And maybe I shouldn’t underestimate the impact of today’s coding environments and other tooling.)

I love the old demoscene, probably for nostalgia reasons, but I love the new demoscene because it shows us how far we’ve come. That, and it’s almost like reverse time-travel, taking today’s knowledge and pushing it back into gear of the past.

The second RPU in the 6502 was buggy, so it was decided to run both at once with a passthrough to the joystick port. The system described is similar to the later Intel x86 family. Along with the x64 CPUs in use today, it’s essentially a grandchild of the demoscene.

Since you seem fixated on using that username, I am going back to my original.

If you say so 😂

Wait, we are three?

I’m sorry, apparently the Transporter received an OTA update intended for the Replicator.

Please stand by…

Can the real Ren please stand up…?

We are many.

We. Are. Beer.

Resistance is futile.

You will be intoxicated. ©

Ren v2.0

Hmmm…

Formerly known and formerly known as ren. Some other dude had the audacity to use my name too but then again, mine is a name and not something like yours. Not sure what the other dude gets from ripping you off and seriously WTF but.. whatever. I enjoy your (real ren) comments BTW

There’s two types of boring people. The first kind mostly talk about other people, the second kind talk about themselves. To do so however, they need to identify each other over otherwise anonymous channels on the internet, and consequently they tend to become very obsessive about displaying their names or handles.

On the early image boards and web forums around 2000-2004, they invented a term to describe when people do that. They call these people “namef**s”.

That’s twice I’ve tried for sarcasm and it came across poorly and was misinterpreted by you so I’ll try better next time. Usually appreciate your input on most stuff too FWIW.

cheers

[craig], so you were being sarcastic when you wrote that you enjoyed my comments?

(sniff!)

B^)

I think I still have a keyboard with drool stains after watching Future Crew’s Second Reality back in 1993. Mind you, I have an MP3 version of the soundtrack that I listen to once in a while.

Future Crew does have some great music in that demo. Worth a look for that alone if you (reader, not OP obviously) haven’t seen it. https://www.pouet.net/prod.php?which=63

>What makes 2020s demos more amazing than their 1990s equivalents is that we’ve learned, discovered, and shared enough new tricks with each other that we can do what was previously impossible.

Could you at least go the extra step and try to tell us *what* was impossible and *why*, and just /maybe/ some of the *hacks* that made this possible?

A week or two ago there was a post about writing larger video buffers than you have ram. That is at least content other than ‘here watch this youtube video’. What were the limitations of the ZX that made this impossible and what innovations make the new demoscene possible? There is so much possibility here and many of us would love to know. Even if you are linking old articles there is a chance we haven’t seen them yet.

For one thing, they mention a modern disk interface, probably flash-based.

+1

I generally skip any linked YouTube videos. They tend to be like 12-15 minutes and you never know if your time is gonna be wasted but a safe bet is on “yes.”

With a written article you can easily skip around, read the relevant bits and move on. Much better format.

100%

That and I detest youtube

Then you’re missing out on a real treasure trove of wonderfully informative videos. Naturally there’s a lot of garbage on YouTube but there are plenty of diamonds to be found if you look carefully and go by recommendations from those who know what they’re talking about.

History is always interesting.

https://youtu.be/UfDxsfiDl24

There are beautiful videos on youtube. But there is a whole host of 10mn (or more) videos, that could easily be written in a blog post which would take 3mn to read.

> 3mn to read

Or less.

Intro.

Talking and handwaving.

Shout out to sponsors (sorta okayish if it is at least semi relevant and not some scam).

More pointless talking.

3 minutes of explanation. (if lucky – good explanation).

Wrapping up (more talking and handwaving).

End titles.

PS. Watching at x1.25 or x1.5 can help, but not much.

Even a sewer occasionally has something that sparkles. But if you go looking for it you’ll still see a lot of shit.

(but at least a sewer doesn’t have an algorithm that actively flings shit at you)

Oh some things would be harder with a written article.

https://youtu.be/N0RIGWUnVFc

> maybe I shouldn’t underestimate the impact of today’s coding environments and other tooling

yeah, you really shouldn’t. Back in the day developing non-trivial software on cassette tape and a rubber keypad was very hard work. Now you can edit the source in your fave text editor, compile it in seconds and immediately run it in an emulator. That makes a huge difference in time and effort needed.

I’d say the situation is this… it’s not that the demos we see on these platforms were impossible back when these devices were released in 1982.

Clearly they are not impossible for the hardware to execute or we would not see these demos.

What would have been a challenge back then is coming up with all the various art elements to include in the demo, and encoding them in a form that the machine could understand.

Today, we could literally draw these using modern tools (either pen and paper, then putting the resulting artwork on a scanner or in front of a camera and “digitising” it, or creating it digitally from the outset), then render the result to a set of pixels at the appropriate colour depth and resolution.

You wouldn’t get past the need to figure out a machine representation appropriate for the architecture — but coming up with the original art would have been made easier by modern tools.

Representing the artwork in code might be able to leverage encoding schemes that weren’t thought of back in 1982. For example, we didn’t have LZMA back then — but nothing theoretically stops you from implementing it on a Z80 today. The only thing that stopped us from implementing this stuff back then is that the algorithm hadn’t been invented yet, so no one knew that this algorithm existed, how to implement it, or why you’d want to.
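LZMA itself is far too long to paste into a comment, so as a rough illustration here is a much simpler LZ77-style scheme in Python. To be clear, this is not LZMA (and LZ77 itself dates from 1977); it only shows the back-reference idea that LZMA builds on. The function names and the token format are made up for this sketch. The thing to notice is how small the decoder loop is, which is the only part the old machine would actually have to run.

```python
def lz_compress(data: bytes, window: int = 255, min_match: int = 3,
                max_match: int = 255) -> list:
    """Greedy LZ77-style tokeniser (illustrative, not LZMA).

    Returns a list whose items are either a literal byte (int) or an
    (offset, length) tuple meaning "copy `length` bytes starting
    `offset` bytes back in the output".
    """
    tokens = []
    i = 0
    while i < len(data):
        best_len, best_off = 0, 0
        # Brute-force search of the recent window for the longest match.
        for off in range(1, min(window, i) + 1):
            length = 0
            while (length < max_match
                   and i + length < len(data)
                   and data[i + length - off] == data[i + length]):
                length += 1
            if length > best_len:
                best_len, best_off = length, off
        if best_len >= min_match:
            tokens.append((best_off, best_len))
            i += best_len
        else:
            tokens.append(data[i])
            i += 1
    return tokens


def lz_decompress(tokens: list) -> bytes:
    """Inverse of lz_compress; this loop is all the target machine needs."""
    out = bytearray()
    for token in tokens:
        if isinstance(token, tuple):
            off, length = token
            for _ in range(length):
                # Copy one byte at a time so overlapping matches work.
                out.append(out[-off])
        else:
            out.append(token)
    return bytes(out)


# A flat background followed by a repeating 8-byte pattern.
sample = bytes([0] * 64 + list(range(8)) * 4)
packed = lz_compress(sample)
```

The slow, memory-hungry search happens on the modern PC at build time; the decoder is a handful of copy operations, which is the sort of split that today's tooling makes easy.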

It looks like that demo is sticking strictly to the standard ZX Spectrum limit of 2 colors per 8×8 pixel block at a 256×192 resolution, then using dithering and motion to make it look better.
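For anyone who hasn't poked at the hardware, a minimal Python sketch of the standard 48K screen layout may help: a 1-bit bitmap at 0x4000 and one attribute byte per 8×8 cell at 0x5800. The function names are mine, picked for illustration only.

```python
BITMAP_BASE = 0x4000   # 6144 bytes, 1 bit per pixel
ATTR_BASE = 0x5800     # 768 bytes, one attribute per 8x8 cell
WIDTH, HEIGHT = 256, 192


def pixel_address(x: int, y: int) -> tuple:
    """Return (address, bit mask) of the bitmap byte holding pixel (x, y)."""
    if not (0 <= x < WIDTH and 0 <= y < HEIGHT):
        raise ValueError("pixel outside the 256x192 screen")
    addr = (BITMAP_BASE
            | ((y & 0xC0) << 5)   # which third of the screen (0-2)
            | ((y & 0x07) << 8)   # pixel row inside the character cell
            | ((y & 0x38) << 2)   # character row inside the third
            | (x >> 3))           # byte column (0-31)
    mask = 0x80 >> (x & 7)        # leftmost pixel is the top bit
    return addr, mask


def attribute_address(x: int, y: int) -> int:
    """Return the address of the attribute byte covering pixel (x, y)."""
    return ATTR_BASE + (y >> 3) * 32 + (x >> 3)


def decode_attribute(attr: int) -> dict:
    """Split an attribute byte into its flash, bright, paper and ink fields."""
    return {
        "flash": bool(attr & 0x80),
        "bright": bool(attr & 0x40),
        "paper": (attr >> 3) & 7,   # colour of 0 bits in the cell
        "ink": attr & 7,            # colour of 1 bits in the cell
    }


bitmap_bytes = WIDTH * HEIGHT // 8            # 6144
attr_bytes = (WIDTH // 8) * (HEIGHT // 8)     # 768
```

Since each cell gets exactly one ink and one paper value, all 64 pixels in it have to share those two colours, and that is where the 2-colors-per-block limit comes from.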

There are software tricks that can make it do 1×8 pixel 2 color horizontal blocks but at stock speed it can only manage 20 blocks per line doing that. Up it to a 2×8 pixel block and it can do the whole screen.

The other limitation is that it only does 7 colors plus black, with dark and bright versions of the 7 colors. The palette is similar to CGA’s, but without the restriction of most CGA modes to 2 or 4 colors (the 4-color modes being limited to two ugly palette choices).
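As a quick sanity check on the colour count, here is a small Python sketch of the palette. The colour order follows the hardware bit layout (bit 0 blue, bit 1 red, bit 2 green); the exact RGB level for the non-bright colours is an assumption, since emulators disagree on it.

```python
NAMES = ["black", "blue", "red", "magenta", "green", "cyan", "yellow", "white"]

NORMAL_LEVEL = 0xD7   # assumption: the non-bright level varies by emulator
BRIGHT_LEVEL = 0xFF


def colour_rgb(index: int, bright: bool) -> tuple:
    """Map a 3-bit colour index plus the bright flag to an (r, g, b) tuple."""
    level = BRIGHT_LEVEL if bright else NORMAL_LEVEL
    r = level if index & 2 else 0
    g = level if index & 4 else 0
    b = level if index & 1 else 0
    return (r, g, b)


def distinct_colours() -> set:
    """All RGB values reachable from 8 indices times 2 brightness levels."""
    return {colour_rgb(i, bright) for i in range(8) for bright in (False, True)}


total = len(distinct_colours())
```

Sixteen index and brightness combinations collapse to 15 distinct colours because bright black is still black.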

There have been various upgrades to enhance the ZX Spectrum’s graphics, and various compatible clones had their own enhancements, but as with most 1980’s microcomputers there were so many incompatible upgrades that most software was always written for the lowest common denominator of the stock system plus peripherals from the OEM.

https://en.wikipedia.org/wiki/ZX_Spectrum_graphic_modes

Here’s a hot take: the demoscene actually epitomizes what was stupid about the “cybernerd” mentality of 80’s and 90’s hackers.

Why? Because a “demo” isn’t demonstrating the capabilities of the machine as such – it is merely showcasing an edge case function, much like if one would open a bottle of beer with the bucket of a JCB excavator, or dig a tunnel out of prison using a spoon. There’s no future in that, because under the general operating conditions and typical tasks, the tricks displayed are perfectly useless. Most likely they wouldn’t even work because the hack or trick you’re using is in conflict with the actual task you’re trying to do, or the machine has only enough resources to do that one pretty thing and nothing more.

However, the nerds of the 90’s mistook party tricks for actual prowess, and endless platform wars etc. ensued. They engaged in a lot of technological masturbation over machines that were quickly becoming obsolete, believing they could stay relevant by piling hacks on top of hacks (e.g. Amiga nerds), while the real world quickly realized that what you really need is cheaper, simpler, faster machines with more RAM to do anything really interesting.

It was a time of hype and unwarranted optimism, and under-estimating the actual difficulty of doing real stuff on a computer, because the tricks were working so well that people got fooled. Compare and contrast with things like AI or self-driving electric cars today.

Exactly the same as AI or self-driving cars. Not much difference in the endgame. Lots of flash and boasting and trash talking, not a lot of plain usable info.

As Tim Hunkin says of chain drive compared to belt drive, “it wants to work”. Technology that ‘wants to work’ will succeed and stay in use longer term. Notice that Amazon Echo is becoming just that.

Well covered in hard Sci-Fi. See Clarke, Asimov etc.