ChatGPT is an AI large language model (LLM) which specializes in conversation. While using it, [Gil Meiri] discovered that one way to create models in FreeCAD is with Python scripting, and ChatGPT could be encouraged to create a 3D model of a plane in FreeCAD by expressing the model as a script. The result is just a basic plane shape, and it certainly took a lot of guidance on [Gil]’s part to make it happen, but it’s not bad for a tool that can’t see what it is doing.

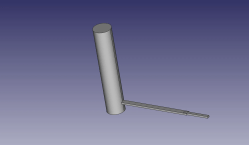

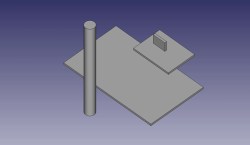

The first step was getting ChatGPT to create code for a 10 mm cube, then plugging that into FreeCAD to see the results. After that basic workflow was shown to work, [Gil] asked it to create a simple airplane shape. The resulting code had objects for wing, fuselage, and tail, but that’s about all that could be said because the result was almost, but not quite, completely unlike a plane. Not an encouraging start, but at least the basic building blocks were there.
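For a sense of what that first step looks like, here is a minimal sketch of a FreeCAD script for a 10 mm cube. It is not [Gil]'s or ChatGPT's actual code; the document name, object name, and the `build_cube` helper are made up for illustration. It is meant to be run from FreeCAD's Python console or as a macro, and the guarded import only exists so the file also loads in plain Python.

```python
# Sketch of a FreeCAD script for a 10 mm cube (names are illustrative only).
try:
    import FreeCAD as App  # only importable inside FreeCAD's Python
except ImportError:
    App = None  # plain Python: build_cube() becomes a no-op

CUBE_SIDE_MM = 10.0  # FreeCAD's default length unit is the millimetre


def build_cube(side=CUBE_SIDE_MM, doc_name="CubeTest"):
    """Create a new document holding one parametric box; return the box."""
    if App is None:
        return None
    doc = App.newDocument(doc_name)
    cube = doc.addObject("Part::Box", "Cube")
    cube.Length = side
    cube.Width = side
    cube.Height = side
    doc.recompute()  # update the shape after setting its properties
    return cube


cube = build_cube()
```

If the cube shows up in the 3D view, the round trip from chat window to CAD model works, which is all this step needs to prove.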

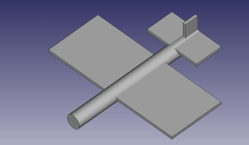

What followed was a lot of back-and-forth where [Gil] would give direction and feedback like “the fuselage is in the wrong orientation, please re-write the code to move it” or “the wings are too large, please change the code to make them smaller”. Progress was slow, but with patient guidance ChatGPT eventually went from some nearly-random shapes to a basic plane model. The image on the left represents the first attempt, the middle shows the correct basic shapes lacking relative orientation, and the last image is the final result.
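Feedback such as “the wings are too large” maps naturally onto a script in which every part is just a size and a position. The sketch below assumes a plane built from four boxes; all dimensions, part names, and the `scale_part` and `build_plane` helpers are hypothetical and not taken from [Gil]'s session. Only `build_plane` needs FreeCAD.

```python
try:
    import FreeCAD as App  # only importable inside FreeCAD's Python
    import Part
except ImportError:
    App = None

# Hypothetical parts, in mm: box size (x, y, z) and position of its minimum corner.
PARTS = {
    "Fuselage":   {"size": (60.0, 8.0, 8.0),  "pos": (0.0, -4.0, 0.0)},
    "Wing":       {"size": (12.0, 70.0, 1.5), "pos": (22.0, -35.0, 4.0)},
    "Stabilizer": {"size": (8.0, 24.0, 1.5),  "pos": (0.0, -12.0, 4.0)},
    "TailFin":    {"size": (8.0, 1.5, 10.0),  "pos": (0.0, -0.75, 8.0)},
}


def scale_part(parts, name, factor):
    """Return a copy of parts with one part scaled about its own centre."""
    updated = {key: dict(spec) for key, spec in parts.items()}
    size, pos = parts[name]["size"], parts[name]["pos"]
    updated[name]["size"] = tuple(s * factor for s in size)
    # Shift the corner so the centre of the box stays where it was.
    updated[name]["pos"] = tuple(p + s * (1 - factor) / 2 for p, s in zip(pos, size))
    return updated


def build_plane(parts, doc_name="PlaneSketch"):
    """Turn the part table into Part::Feature boxes in a new document."""
    if App is None:
        return None
    doc = App.newDocument(doc_name)
    for name, spec in parts.items():
        obj = doc.addObject("Part::Feature", name)
        obj.Shape = Part.makeBox(*spec["size"], App.Vector(*spec["pos"]))
    doc.recompute()
    return doc


# "The wings are too large, please make them smaller."
smaller_wings = scale_part(PARTS, "Wing", 0.5)
build_plane(smaller_wings)
```

Keeping the geometry in a plain table means each round of feedback changes one number rather than a whole regenerated script.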

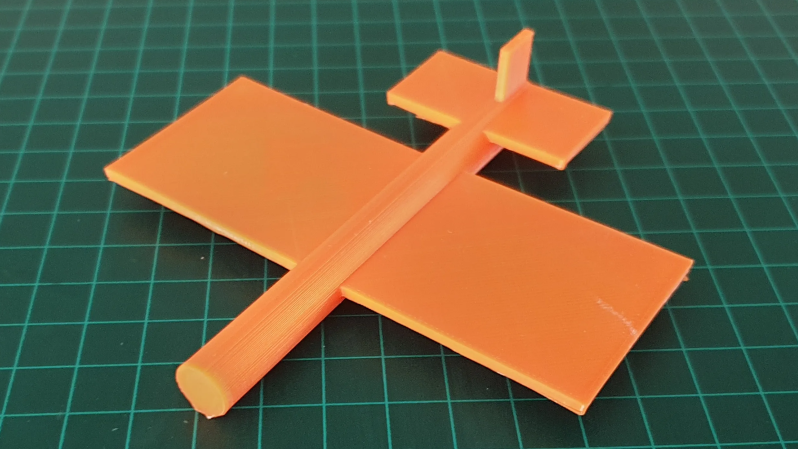

[Gil] decided to 3D print the result to cap off the experiment, turning a 3D model that ChatGPT generated in FreeCAD, with human guidance, into a physical object. We’ve seen ChatGPT create objects in OpenSCAD, also thanks to leveraging a text-based method of defining 3D objects. Maybe the results are not going to wow any crowds right now, but by this time next year it might be a different story.

Awkward first steps with this sort of thing tend to lead to fairly powerful tools sooner than you’d think.

You need to have another AI tweak the result so you don’t have to do it manually, AutoGPT style.

I meant *critique the result. Then the first AI uses that as feedback so it can improve the 3d model.

Nice Hitchhiker’s reference.

My favorite Douglas Adams quote.

Maybe designed to hang in the sky exactly the way that bricks don’t?

Now hook it up to a plugin for image recognition to match some initial shape, another plugin to calculate the volume and pack whatever payload, some finite element analysis, fluid dynamics simulation… then have it design an engine, formulate a fuel, plan a production and logistics system…

Yeah, humans are becoming obsolete. I had some hope that 3D and CAD/CAM would hold out the longest, but ultimately all we humans do is just “language with some plugins”. In some years, there will be little more for a meatsack to do than press Enter and wait for the AI to spit out a whole industry setup. Add a robot plugin, and it will even build it itself.

When someone finds out how to get it to make a better version of itself, then things could get interesting.

When a mommy and a daddy ChatGPT love each other very much…

Making a better version of yourself requires you to come up with a test or a problem that you can’t solve but the next version could, but then you can’t usually check whether the answer was correct because you’re too dumb to solve it yourself. With all AI training, the outcome is only as smart as the test criteria for pass/fail. Narrow criteria allow the AI to find shortcuts that solve the problem in dumber ways, wider criteria require more intelligence out of the designer than from the AI you’re training.

An AI that designs a better AI goes beyond the special case because there are no known right answers as to what would make a better AI. If you knew, you could already design one. You can only make some random modification and then set it loose to perform the actual tasks you intended to see if the change made it better – and the odds are it will perform worse. IF it does perform better, the odds are it found some special shortcut for your special task rather than evolving a better AI.

Solving a problem, and designing/improving a system that can solve that problem are two different domains. A human programmer who’s bad at chess can write a program that can beat a grandmaster.

Though in that case at least one successful solution is already known: write a program that can assimilate and remember all the moves and counter-moves by all the grandmasters or everyone who’s ever played. The solution is obvious because it’s actually dumber than the programmer – it’s just that the person himself cannot perform it.

In the case of designing the better AI, there are no obvious solutions and no obvious ways to test whether a solution would work before you actually set it loose in the world and hope for the best.

You are talking about under- and overfitting data, which, sure, are things to consider in ML.

But I don’t think you appreciate the transition that has occurred. I have spoken with AI teams at both Google and Amazon, and I can tell you OpenAI has something different. A phase shift seems to have happened that makes the LLM’s responses more useful and creative. Limiting the LLM quickly pushes you down the phase curve into a useless domain.

I think the ultimate test of this will be discovering new physics or mathematics.

Can it come up with something like sqrt(-1)?

You would say no because you think it’s faking creativity. However, you could prompt it to try to pretend it would work and proceed through the calculation. Because of the way the attention mechanism works, it will then walk a different path through the LLM. This path could go through some fantastic fictional literature that generates the response, or even a critique of the response which starts another walk, until you end up with novel solutions.

Ramanujan took these creative walks during sleep.

>I think the ultimate test of this will be discovering new physics or mathematics.

Not at all. It’s got a random number generator that pecks away like a blind chicken, so of course it will eventually come up with something new – it will also come up with loads and loads of total nonsense, and it has no understanding of the difference between the two because it’s not built as a general AI. It’s just a language model that generates new text based on statistical regularities in known text.

>You are talking about under and over fitting data

Nope. Completely different topic.

Suppose you ask ChatGPT to make a better ChatGPT. First off, it would start by copying its own source code, then it would need to generate a genetic algorithm to run permutations of said code, and then it would need to find the pass/fail criteria for the algorithm to outline what would define “better” and “worse”.

Assuming it gets through steps one and two without adding some show-stopping error, where does it get the criteria for the test? How does it select them? Obviously the training material would have to include an idea of how the better ChatGPT would perform already, otherwise it would have to come up with that by sheer dumb luck as it mixes and matches data.

In other words, someone has to tell ChatGPT what the better ChatGPT is, otherwise it has a snowball’s chance in hell of figuring it out. Running the same task repeatedly would generate an endless stream of mangled up copies of ChatGPT that are dumber than the original, with a tiny probability that one of them might be better – but someone else has to be the judge of that.

Think of it like this: suppose we start a breeding program to evolve a more intelligent human. What’s your definition of intelligent? If your criterion is something simple like being able to solve difficult math problems in your head, how would you know you weren’t just selecting for autistic savants instead?

It’s not totally about performance and correctness as it were. Over time as an inferior intelligence is exposed to a plethora of facts it can then look at itself and fix existing logical flaws. ANY fix to the original code that is based on newfound information is by definition an upgrade. The problem is keeping ignorant “bad actors” away from the “fact” database…

>Obviously the training material would have to include an idea of how the better ChatGPT would perform already, otherwise it would have to come up with that by sheer dumb luck as it mixes and matches data.

Yes, it would need to be fine-tuned for that specific objective, because ChatGPT does not have memory and has fixed parameters, and thus won’t improve on its own. But even though the model is fixed, you can vary the prompt to move towards the objective you want, even though the fine-tuning may have been just on “like/dislike”.

A model that is able to identify airplanes can critique ChatGPT’s output which will then adjust the prompt in a non-random way. But the memory of this would need to be stored outside the model and added every time.

In a vacuum ChatGPT does nothing; only when you allow it to interact with humans or other AIs does it become very useful. If you applied zero input to a human from birth you wouldn’t get useful output either, although brains have memory and are more flexible than these models, so the two are not really analogous.
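The critic-plus-external-memory idea in this comment can be sketched as a short loop. This is only an illustration under loud assumptions: `toy_generate` and `toy_critique` are stand-ins invented here, where a real setup would call a language model and some airplane-recognizing model. The point is that the feedback history lives outside the fixed model and is re-sent with every prompt.

```python
def refine(generate, critique, task, max_rounds=5):
    """Loop a fixed generator against a critic.

    The feedback history is stored outside the model and appended to the
    prompt on every round, since the model itself remembers nothing.
    """
    memory = []
    output = None
    for _ in range(max_rounds):
        prompt = task + "".join(f"\nFeedback: {note}" for note in memory)
        output = generate(prompt)
        note = critique(output)
        if note is None:  # the critic has no more complaints
            break
        memory.append(note)
    return output, memory


# Toy stand-ins (hypothetical): the "model" halves the wing span once for
# every complaint it finds in the prompt.
def toy_generate(prompt):
    span = 100.0 / (2 ** prompt.count("wings are too large"))
    return {"wing_span": span}


def toy_critique(model):
    return "the wings are too large" if model["wing_span"] > 30 else None


result, notes = refine(toy_generate, toy_critique, "Make a simple airplane.")
```

Note that the critic here only checks a threshold someone else chose, so the loop can converge on “acceptable” but has no notion of “better”.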

The point is indeed that you can’t get an AI to improve on itself in an exponential loop in isolation, so you can’t get a runaway “skynet” scenario going. The bottleneck is in proving that the AI performs better, and that test comes from the world outside.

Also:

https://arxiv.org/abs/2304.15004

Are Emergent Abilities of Large Language Models a Mirage?

“… existing claims of emergent abilities are creations of the researcher’s analyses, not fundamental changes in model behavior on specific tasks with scale. We present our explanation in a simple mathematical model, then test it in three complementary ways … In all three analyses, we find strong supporting evidence that emergent abilities may not be a fundamental property of scaling AI models.”

>A model that is able to identify airplanes can critique ChatGPT’s output

A model that is able to identify airplanes is a different prospect from a model that can identify BETTER airplanes. One produces critique based on whether the output resembles known airplanes, the other would have to first acquire an understanding of what makes a better airplane, which is not easily defined or known.

We all sort of knew that pushing a button was George Jetson’s main job. The future is nearly here…

Imagine using an aerodynamics simulator as feedback.

A bit like the self repairing code post on here recently.

It keeps going back and fixing the model until it flies.

Kosmic on Twitch sometimes shows videos of a neural net playing Super Mario Bros. It can work, but it is very slow.

When I was doing my engineering degree, we had to do a final-year thesis, and one on offer was using genetic algorithms to optimize airfoil design for different applications. I think the workflow was meant to be that the algorithm would generate an airfoil profile, simulate the fluid dynamics with ANSYS to determine the performance, and then tweak parameters to find the local maxima. This was around 2015-2016.

The thesis was writing the genetic algorithm and then evaluating performance.
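The generate, simulate, tweak workflow described above can be sketched in a few lines. Everything specific here is an assumption: the two parameters, their bounds, and especially `toy_fitness`, which is an arbitrary stand-in for the ANSYS run and says nothing about real aerodynamics.

```python
import random

# Hypothetical airfoil parameters and search bounds (fractions of chord).
BOUNDS = {"camber": (0.0, 0.09), "thickness": (0.06, 0.24)}


def toy_fitness(ind):
    """Stand-in for a CFD run; peaks at an arbitrary point, NOT real physics."""
    return -((ind["camber"] - 0.04) ** 2 + (ind["thickness"] - 0.12) ** 2)


def random_individual(rng):
    return {key: rng.uniform(lo, hi) for key, (lo, hi) in BOUNDS.items()}


def crossover(a, b, rng):
    """Pick each parameter from one parent or the other."""
    return {key: rng.choice((a[key], b[key])) for key in BOUNDS}


def mutate(ind, rng, sigma=0.01):
    """Add Gaussian noise to every parameter, clamped to the bounds."""
    return {key: min(hi, max(lo, ind[key] + rng.gauss(0.0, sigma)))
            for key, (lo, hi) in BOUNDS.items()}


def evolve(fitness, generations=40, pop_size=20, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        parents = pop[: pop_size // 2]  # the better half survives unchanged
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        pop = parents + children
    return max(pop, key=fitness), history


best, history = evolve(toy_fitness)
```

Because the better half always survives, the best score never gets worse from one generation to the next. Swapping `toy_fitness` for a real solver is where nearly all the runtime and difficulty would go.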

Genetic algorithms work very well for things like that, but they aren’t particularly different from a person modifying the object, testing it, evaluating it and then repeating until they get the best result. All it really does is automate that process.

Also it may be possible to use physics to come up with the best aerofoil design in the first place.

Best aerofoil design, but for what? What exactly are you optimizing for?

FWIW, FreeCAD has AirplaneDesign and CfdOf (computational fluid dynamics) workbenches available.

(I haven’t played with those personally)

It can generate circuits too if you ask for a netlist. I’ve tested it with asking for a netlist of a 2 transistor astable multivibrator and the result was satisfactory.

I did something like that: to ask for a spice netlist for an RC filter. That worked well.

Then I asked to produce syntax for an LTSpice .asc file (basically the graphical representation of such a circuit). A result was produced, it even could be opened by LTSpice, but it looked like a piece of abstract art. I stopped there though, I suppose that it could be instructed how to improve until it works.
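For reference, an RC low-pass netlist of the kind mentioned above is short enough to generate and sanity-check by hand. The sketch below is not the netlist ChatGPT produced; the component values, node names, and the `rc_lowpass_netlist` helper are assumptions. It also computes the cutoff frequency, f_c = 1 / (2πRC), which is an easy number to check a generated netlist against.

```python
import math


def rc_lowpass_netlist(r_ohms, c_farads, f_start=10, f_stop=1e6):
    """Return (cutoff_hz, netlist_text) for a first-order RC low-pass filter."""
    cutoff_hz = 1.0 / (2.0 * math.pi * r_ohms * c_farads)
    lines = [
        # The first line of a SPICE deck is treated as its title.
        f"* RC low-pass filter, cutoff about {cutoff_hz:.1f} Hz",
        "V1 in 0 AC 1",              # 1 V AC source between 'in' and ground
        f"R1 in out {r_ohms:g}",     # series resistor
        f"C1 out 0 {c_farads:g}",    # shunt capacitor to ground
        f".ac dec 20 {f_start:g} {f_stop:g}",  # AC sweep, 20 points per decade
        ".end",
    ]
    return cutoff_hz, "\n".join(lines)


# Hypothetical values: 1 kOhm and 100 nF
cutoff_hz, netlist = rc_lowpass_netlist(1_000, 100e-9)
print(netlist)
```

With these values the cutoff comes out at roughly 1.59 kHz, so the simulated response at node `out` should be about 3 dB down there.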

Hopefully you’ve skimmed the “Sparks of AGI” paper and seen the unicorn and the 3D models they were able to get. The situation is, GPT-4 was much better at making 3D models and drawings at one point during training, and its abilities in those areas degraded as it was fine-tuned toward better conversation.

That’s an issue of Catastrophic Interference, where new learned information overwrites the old because the model either can’t compartmentalize and contextualize data (it’s just diffused all over the model), or the model is not large enough to contain everything.

It’s like, if you learn a new word, you forget how to chew bubble gum, because the two tasks were overlaid in your brain and there weren’t enough neurons to encode for both behaviors.

It is a powerful tool. I tried to get some drawings as SVG code, and when I asked it to draw the ChatGPT face in SVG, it created a funny version of its face.

Didn’t see any issues repeating this myself. I got a perfectly recognisable plane straight away.

https://imgur.com/gallery/IgdYCZH

Me:

Using povray scene description can you give me a 3d description of a plane consisting of a fuselage, two wings, tail fin and stabiliser?

ChatGPT:

Here’s a simple POV-Ray scene description for a basic airplane model consisting of a fuselage, two wings, tail fin, and stabilizer. This example uses basic shapes like cylinders and boxes to create the components of the airplane. You can adjust the size, position, and orientation of the objects to fit your desired model.

// Camera and light setup

camera {

location

look_at

angle 40

}

light_source {

color rgb

}

// Fuselage

cylinder {

, , 5

texture {

pigment { color rgb }

finish { phong 0.8 }

}

}

// Wings

box {

,

texture {

pigment { color rgb }

finish { phong 0.8 }

}

}

// Tail Fin

box {

,

texture {

pigment { color rgb }

finish { phong 0.8 }

}

}

// Stabilizer

box {

,

texture {

pigment { color rgb }

finish { phong 0.8 }

}

}

This code creates a simple airplane model using POV-Ray’s scene description language. You can use this as a starting point and modify it to create a more detailed and realistic model by adding textures, materials, and additional shapes.

Since ChatGPT is just a trained neural network, its output very much depends on its input data; it may have better training data for the program you used than it does for FreeCAD. This just highlights that it has no idea what it is actually doing, or else it would be able to do the same task in just about any method or language.

It looks like prompting the AI took more steps than just opening Grasshopper and making a parametric model with a few input boxes. To me, ChatGPT looks like a giant toy: it makes things that make you go “wow”, but it’s the same feeling you get when a baby says something intelligent. It’s “wow”, but still very far from being employable. In my opinion, right now it’s just a giant bubble, and it’s going to burst very soon. It will reappear, however, within ten years in a much, much stronger and more mature form. IMHO

It’s not a bubble that’s going to pop. Maybe some initial users will walk away if it doesn’t solve their problems correctly, but quality will steadily increase with each subsequent release. The people that walked away will gradually start to return. Seeing the difference between v3 and v4, it’s not going to take 10 years.

I would argue that with that much input from the person to get ChatGPT to generate it, it wasn’t just guided by a human and it wasn’t solely generated by the AI.

Falls into the category of ‘Alignment’ – which is more adequately expressed as ‘misalignment’ or “inadequate alignment”. User-Accessible AI can’t (so far) be expected to receive a brief text instruction and output something which immediately meets user expectations. Humans must learn to operate within the AI’s capabilities. Open Pandora’s Box and out steps Baby Huey.

Prompt to 3D object: https://github.com/openai/shap-e

I wonder if one of the AIs can be used to design camera lenses.

To design those takes a lot of math and finesse because of the many interactions of parameters, where each ‘perfect’ number degrades another number in some way, so they need to find a compromise that is pleasing to the eye.

If an AI could do that we might get cheaper (and possibly even better) lenses. Although the lower prices would depend on the Chinese, or some such, being able to compete with the Japanese.

I don’t think the Japanese would kick back the savings to the consumer.

ChatGPT can write G-code directly…

“Write me a G-code file for my 3D printer to build a rectangular box, x 20 mm, y 25 mm and z 30 mm, and use a layer height of 0.5 mm.”

No CAD, no .stl file, no slicer… ;-)

; Start G-code

G28 ; Home all axes

G1 Z15.0 F9000 ; Move the platform down 15mm

G92 E0 ; Reset the extruder’s position to 0

; Build the box

G1 X0 Y0 F2400 ; Move to the lower left corner

G1 Z0.5 F9000 ; Move the nozzle to the build plate

G1 E10 ; Extrude some filament to start the print

; Loop through the layers

; Each layer will be 1.0mm high

; So, we need to do 3 layers in total

; The extrusion rate can be adjusted to your preference

G1 Z1.0 F9000 ; Move up to the next layer

G1 X20 Y0 E8 F2400 ; Extrude along the bottom edge

G1 X20 Y25 E10 F2400 ; Extrude along the right edge

G1 X0 Y25 E12 F2400 ; Extrude along the top edge

G1 X0 Y0 E14 F2400 ; Extrude along the left edge

G1 Z2.0 F9000 ; Move up to the next layer

G1 X20 Y0 E18 F2400 ; Extrude along the bottom edge

G1 X20 Y25 E20 F2400 ; Extrude along the right edge

G1 X0 Y25 E22 F2400 ; Extrude along the top edge

G1 X0 Y0 E24 F2400 ; Extrude along the left edge

G1 Z3.0 F9000 ; Move up to the final layer

G1 X20 Y0 E28 F2400 ; Extrude along the bottom edge

G1 X20 Y25 E30 F2400 ; Extrude along the right edge

G1 X0 Y25 E32 F2400 ; Extrude along the top edge

G1 X0 Y0 E34 F2400 ; Extrude along the left edge

; End G-code

G1 E-10 ; Retract the filament

G1 Z15 F9000 ; Move the platform down 15mm

G28 X Y ; Home the X and Y axes

M106 S0 ; Turn off the cooling fan

M104 S0 ; Turn off the extruder

M140 S0 ; Turn off the bed

M84 ; Disable the motors

Note: This code assumes that your printer is already properly calibrated and the print bed is leveled. Make sure to adjust the extrusion rate and print speed to match your specific printer’s capabilities and the type of filament you’re using. Also, keep in mind that this is a simple example and that more complex models may require additional code.

That’s 3mm high. And using 1mm layer heights. At 2400 mm/min? What’s your nozzle diameter? And it’s really just the walls of the box, without floor or top. It’s like a single-wall test piece.

This _is_ GCode, and it absolutely won’t work.

You can prompt it with a sample of a gcode you know works on the machine.

Still haven’t had much luck, though. Maybe I’ll ask it to make a program that makes G-code and go from there.
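A program that makes the G-code is also where the arithmetic from the critique above becomes explicit: 30 mm at 0.5 mm per layer is 60 layers, not 3. The sketch below is a minimal example, not a slicer. It prints only a single-wall perimeter (no floor or top), assumes the hotend and bed are already at temperature, and uses made-up defaults: a 0.7 mm line width (roughly a 0.6 mm nozzle), 1.75 mm filament, and 1200 mm/min. The bead is approximated as a rectangle of line width by layer height.

```python
import math


def box_walls_gcode(x=20.0, y=25.0, z=30.0, layer_h=0.5,
                    line_w=0.7, filament_d=1.75, feed=1200):
    """Return (layer_count, gcode_lines) for a single-wall rectangular tube."""
    n_layers = round(z / layer_h)
    # Filament pushed per mm of travel = bead area / filament cross-section.
    e_per_mm = (line_w * layer_h) / (math.pi * (filament_d / 2) ** 2)
    corners = [(x, 0.0), (x, y), (0.0, y), (0.0, 0.0)]
    lines = [
        "G21 ; units in millimetres",
        "G90 ; absolute XYZ positioning",
        "M82 ; absolute extruder positioning",
        "G28 ; home all axes",
        "G92 E0 ; zero the extruder",
        "G0 X0.00 Y0.00 F3000 ; go to the first corner",
    ]
    e = 0.0
    px, py = 0.0, 0.0
    for layer in range(1, n_layers + 1):
        lines.append(f"G1 Z{layer * layer_h:.2f} F3000 ; layer {layer}")
        for cx, cy in corners:
            e += math.hypot(cx - px, cy - py) * e_per_mm
            lines.append(f"G1 X{cx:.2f} Y{cy:.2f} E{e:.4f} F{feed}")
            px, py = cx, cy
    lines.append("M84 ; disable the motors")
    return n_layers, lines


n_layers, gcode_lines = box_walls_gcode()
```

Treat the output as something to inspect in a G-code viewer first rather than send straight to a printer, since start and end sequences vary between machines.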

Proof of concept right now, more to come http://origami.tanso3d.com