AI image generators have gained new tools and techniques for not just creating pictures, but modifying them in consistent and sensible ways, and it seems that every week brings a fascinating new development in this area.  One of the latest is Drag Your GAN, presented at SIGGRAPH 2023, and it’s pretty wild.

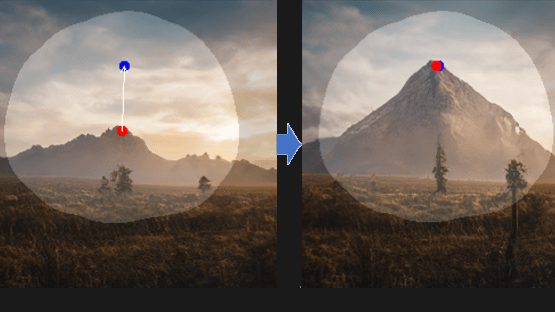

It provides a point-dragging interface that modifies images based on their implied structure. A picture is worth a thousand words, so this short animation shows what that means. There are plenty more where that came from at the project’s site, so take a few minutes to check it out.

GAN stands for generative adversarial network, a class of machine learning model that features prominently in software for tasks like image generation; the “adversarial” part comes from two networks being trained against each other, pulling results between different goalposts. Drag Your GAN has a GitHub repository where code is expected to be released in June, but in the meantime, you can read the full paper or brush up on the basics of how AI image generators work, as well as see how image generation can be significantly enhanced with an understanding of a 2D image’s implied depth.
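For the curious, the adversarial tug-of-war can be sketched in a few lines. This is the standard textbook GAN loss (with the commonly used non-saturating generator loss), not code from the Drag Your GAN project, and the scores below are made-up numbers chosen purely for illustration. The function names `discriminator_loss` and `generator_loss` are our own.

```python
import math

def discriminator_loss(d_real, d_fake):
    # The discriminator is rewarded for scoring real images near 1
    # and generated images near 0.
    terms = [math.log(r) + math.log(1.0 - f) for r, f in zip(d_real, d_fake)]
    return -sum(terms) / len(terms)

def generator_loss(d_fake):
    # The generator is rewarded when the discriminator scores
    # its generated images near 1.
    return -sum(math.log(f) for f in d_fake) / len(d_fake)

# Hypothetical discriminator scores (probability "this image is real").
sharp = {"real": [0.9, 0.8], "fake": [0.1, 0.2]}   # discriminator tells them apart
fooled = {"real": [0.6, 0.5], "fake": [0.7, 0.6]}  # generator is fooling it

for name, s in (("sharp", sharp), ("fooled", fooled)):
    print(name, discriminator_loss(s["real"], s["fake"]), generator_loss(s["fake"]))
```

When the discriminator does well its loss drops while the generator's rises, and vice versa, which is the opposing pull described above.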

That looks like a useful composition tool, particularly when paired with the initial pin the scene method, but can it fix the finger count problem that so many of these systems have?

You think the finger count problem is bad? Wait until a police officer uses this to turn round a photo of a suspect to show they were carrying a gun in their hidden hand…

I actually wouldn’t worry about the legal system using it to incriminate people. Legal systems have already had their share of issues with handling erroneous face recognition and image enhancement as is. A country that regards any modified image as “evidence” is frankly going to have to change its stance. This isn’t a new issue that AI has brought; it has been here for as long as photo editing has existed, and that is well over a century now.

But I would be far more worried about deep fakes being used to incriminate people in general becoming more common, or to just shift public opinion through bots in social media. Not made by the police or the government, but rather by the people around you who have something against you. Or by companies shifting the political debate in their own favor. Creating nuanced and wide-reaching public uproar online has never been easier.

As more complex photo editing becomes more and more trivial and easy to do, it also gets more ripe for abuse. It is just a question of how much effort is required compared to the value of the dispute. These “AI” image editing solutions do lower that bar significantly. Far more than digital photo editing alone had done before.

Hopefully AI makes people distrust digital images to the extent that surveillance becomes obsolete. Tired of all these cameras everywhere.

You can always dream, that’s not (yet) illegal… Seriously, it really worries me that manipulating images has become so easy. This has an enormous potential for abuse, from “The famous X had a relationship with the famous Y” to political manipulations, legal stuff (like the missing gun above), … We already have a huge problem with so-called “fake-news”, so this is worrying.

To be fair here, it isn’t digital images that are the actual problem.

It is the triviality of creating false incriminating evidence that is hard to disprove, be it for use in a court or for spreading to the public through various means.

To a large degree, this is illegal already.

Making up false evidence isn’t legal to start with.

But likewise it isn’t legal to spread false “truths” about people; that is defamation.

Deep fakes fall into one or both of these categories if done without permission.

However, some might say that satire is legal, and it is. But the line between satire and defamation is a rather thin one at times. Generally speaking, satire should be clear in its form that it is satire. So seeing how satirical media outlets use AI to impersonate people even further is frankly perhaps overstepping into defamation, since it removes a lot of the clarity that it is satire and in fact not something the supposed individual has done themselves.

Using these types of systems to impersonate people should frankly be taken far more seriously, since it really undermines both the legal system and our social interactions at large. Courts can set a man free, but the public can still have strong opinions. And both AI image generation and large language models do make it rather easy to steer public opinion.

I should however end by saying that I am not directly against a lot of machine learning applications. But there are pros and cons, and currently the long term negative aspects of some machine learning applications are frankly dystopian to a degree that makes even 1984 (the book) look fairly tame in comparison.

Totally agree this doesn’t enable anything new; photo manipulation has been with us for a long time. My concern is that most people don’t realise that the AI is inventing new pixels. As obvious as it seems to us, I fear many people will think the AI’s rotation of a photo subject is a trivial and accurate/truthful process, not a creative photo manipulation.

You’re right, it should be shot down easily by any defence lawyer (provided they realise it’s been done), but in other situations like internal company or university investigations it could be abused unknowingly. And here in the UK we’ve just had Horizon back in the news (a Fujitsu accounting system which caused over 700 sub-postmasters to be prosecuted based on faulty data), so I’m aware that sometimes software is just trusted.

OK, I have to admit that I came here expecting something COMPLETELY DIFFERENT when the headline said “Drag Interface”…

Yep

All these AI art tools are building towards something, and it’s not SkyNet .. it’s better cat videos.

Sometime in the near future all those pet videos where the owner gives the pet a funny voiceover will finally be able to have a quality AI voice and mouth synced lips. It will truly be one of mankind’s greatest achievements …

And then an AI-generated lip synced cat will become president…

unfortunately, ‘ai’ has already been used to create false facts (tts systems faking voices based on call recordings) and politicians refuse to recognise the danger in that, even when being shown how easy it is to pull off.

professionally I am not worried about having less to do in the future. privately I am really shitting my pants over what is already there (not just what is still to come)

Yes, it is scary to look at all the bad ways people already use “AI” systems.

Be it to impersonate famous people without consent for roles/things that said person wouldn’t want to partake in. Or just impersonate Joe average for blackmailing or to get money out of family members by fooling them that one needs financial help and that it is really important to do it as quickly as physically possible.

Steering public opinion through bots in social media is something I have heard of happening. But so far can’t say I have seen much of it myself. However, the whole point of biasing public opinion is that it is discreet and done in ways where people don’t actually notice that it is a bot. So how widespread this practice is is frankly hard to judge and likely getting harder by the day. (perhaps Facebook and friends might eventually have more active users than there are humans in existence.)

People who talk about students cheating on tests, or about journalists, writers, programmers, musicians, artists, etc, ending up on the street in the next year or two due to being unable to compete with these “AI” systems are frankly only seeing the smaller side of the issues at hand. These are, though, still serious issues in their own right.

But a lot of decisions made by both companies and politicians are purely based on largely anonymous reactions from social media, reactions that can be deep faked by all sorts of unscrupulous people. And this is far from new, but large language models and image generation sure make it millions of times easier.

Some people will though say that “it is easy to tell what is fake.” But not everyone has the same experiences to judge the potential truth of the information they see. People believe in all sorts of crazy stuff. (everyone has some myth that they consider true even though it isn’t.)

Personally, I think politicians need to regard deep fakes as a serious offence, since they do undermine both the legal system and the ability to anonymously express political opinion in a democratic nation. Bot accounts that pretend to be individuals should be illegal, regardless of whether they impersonate someone or not (since Joe average can’t tell the difference); it should always be clear that it isn’t an individual. (retaining anonymous accounts is however another can of worms…)

If you haven’t seen bots steering public opinion on social media, then either you never use social media, or you’ve been steered.

I don’t use social media to be fair.

Only hear about it when politicians make decisions based on people on social media raving about something or another.

I am a fairly passive observer of what happens on such platforms, and mostly I don’t stick a finger in.

But yes, steering public opinion through bots is a thing.

I however don’t have good metrics for how common that is, and I partly think few people have figures for it, since more competent bot operators would be hard to detect. That is half the point, that it isn’t remotely obvious.