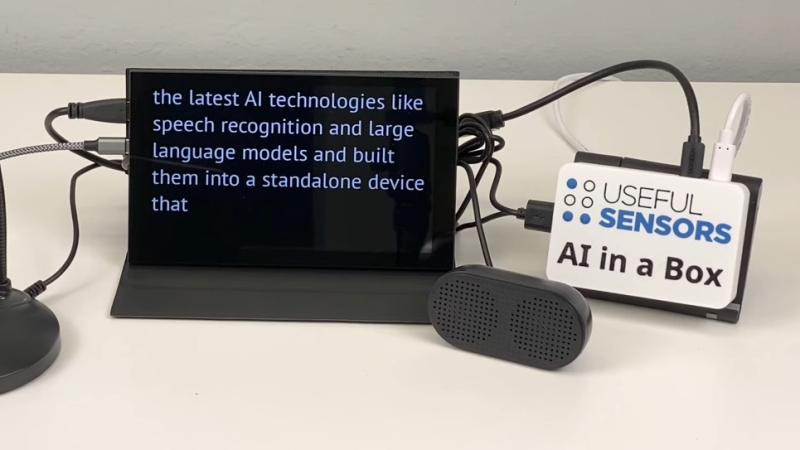

With AI in a Box, [Useful Sensors] aims to embed a variety of complementary AI tools into a small, private, self-contained module with no internet connection. It can do live voice recognition and captioning, live translation, and natural language conversational interaction with a local large language model (LLM). Intriguingly, it’s specifically designed with features to make it hack-friendly, such as the ability to act as a voice keyboard by sending live transcribed audio as keystrokes over USB.

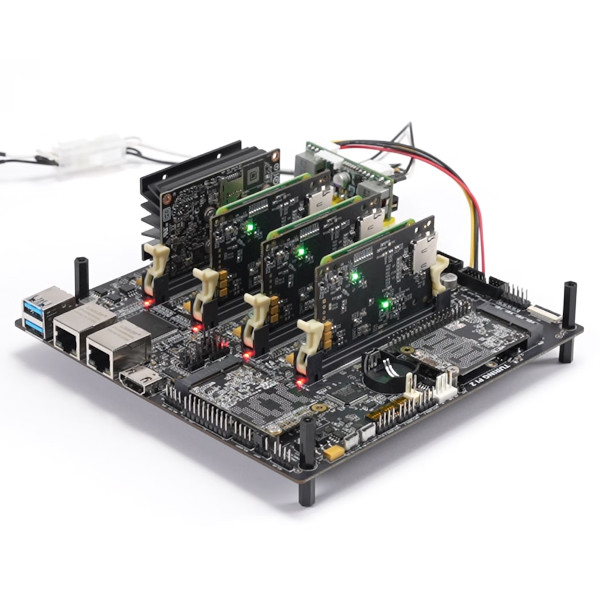

Right now it’s wrapping up a pre-order phase, and aims to ship units around the end of January 2024. The project is based around the Rockchip RK3588S SoC and is open source (GitHub repository), but since it’s still in development, there’s not a whole lot visible in the repository yet. However, a key part of getting good performance is [Useful Sensors]’s own transformers library for the Rockchip NPU (neural processing unit).

The ability to perform high quality local voice recognition and run locally-hosted LLMs like LLaMa has gotten a massive boost thanks to recent advances in machine learning, and it looks like this project aims to tie them together in a self-contained package.

Perhaps private digital assistants can become more useful when users can have the freedom to modify and integrate them as they see fit. Digital assistants hosted by the big tech companies are often frustrating, and others have observed that this is ultimately because they primarily exist to serve their makers more than they help users.

Just how large does the local memory have to be for a working “large” language model?

Who knows. It has 8GB of RAM, no mention of permanent storage, no real details on software, and the GitHub repository doesn’t have any details. It mainly seems to be a marketing project at this point.

Depends on what you need to do with them. If you’re okay with waiting for the responses from the LLM, your only limitation is the amount of RAM on your machine. You could run absolutely massive models on a Celeron CPU with enough RAM to hold them.

Model sizes start at 7 billion parameters and go all the way up to 130 billion. The models take roughly 800MB per billion parameters: 7B models are ~5GB-6GB, and 13B models are 9GB-10GB. 7B models feel kinda “dumb” while 13B models are quite a bit smarter. I have no experience with larger models, but I hear it’s diminishing returns above 34B parameters.
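To make that rule of thumb concrete, here is a minimal Python sketch that applies the roughly 800MB-per-billion-parameters figure from the comment above. The function names and the 8GB budget in the example are only illustrative, and the estimate ignores the extra memory needed for context, the operating system, and everything else running on the machine.

```python
# Rule of thumb from the comment above: ~800 MB per billion parameters.
# This is a rough figure, not a measurement; the real size depends on
# how the model weights are stored.
MB_PER_BILLION_PARAMS = 800


def estimate_model_size_gb(billions_of_params: float) -> float:
    """Approximate size of a model in GB (using 1 GB = 1000 MB)."""
    return billions_of_params * MB_PER_BILLION_PARAMS / 1000


def fits_in_memory(billions_of_params: float, memory_gb: float) -> bool:
    """True if the estimated model size is no larger than the memory budget.

    Ignores memory needed for context, the OS, and other software.
    """
    return estimate_model_size_gb(billions_of_params) <= memory_gb


if __name__ == "__main__":
    # Model sizes mentioned in the comment, checked against a hypothetical 8 GB budget.
    for size in (7, 13, 34, 130):
        gb = estimate_model_size_gb(size)
        print(f"{size}B parameters: ~{gb:.1f} GB, fits in 8 GB: {fits_in_memory(size, 8)}")
```

By this estimate a 7B model comes out close to the quoted 5GB-6GB range and would squeeze into 8GB, while a 13B model would not.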

If you want any kind of speed, you will need a GPU with enough VRAM. 4GB will be the absolute minimum, 6GB should be fine. It all depends on the size of the model you are running.

I run a 13B parameter model on my PC for…reasons…and I have an RTX 3060 with 12GB VRAM and 32GB of RAM with a Ryzen 5 5600X. It’s plenty fast for what I need.

“I run a 13B parameter model on my PC for…reasons…”

HaD comments have been improving. ;-)

Someone is going to become very rich when they develop a framework for a 5-8B parameter model that can gab effectively and use a database to store tokens in place of short- and long-term memory. RPG NPCs don’t have to be _that_ with it if they can remember what happens to them and react to their senses.

I got LLaMa to run on my phone. Very slowly though.

65-128GB at 800GB/s is a start. Think Mac Studio, not a low-end Rockchip dev board.
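One way to read the bandwidth figure is as a ceiling on generation speed. The sketch below rests on an assumption that is not stated in the comment: that generating each token requires reading all of the model’s weights from memory once, so memory bandwidth is the bottleneck. Under that assumption, bandwidth divided by model size gives a rough upper bound on tokens per second. The function name is hypothetical and real throughput will be lower.

```python
def max_tokens_per_second(bandwidth_gb_per_s: float, model_size_gb: float) -> float:
    """Rough upper bound on generation speed when memory bandwidth is the limit.

    Assumption (not from the source): every generated token reads the whole
    model from memory once, so speed <= bandwidth / model size.
    """
    if model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive")
    return bandwidth_gb_per_s / model_size_gb


if __name__ == "__main__":
    # 800 GB/s is the figure from the comment; the 65 GB model size is illustrative.
    bound = max_tokens_per_second(800, 65)
    print(f"Upper bound: about {bound:.1f} tokens per second")
```

The same arithmetic suggests why a board with far less memory bandwidth would generate text much more slowly with a model of the same size.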

Can it run Bender?

Of course not! Bender runs on a 6502.

It’s probably relevant to note that one of the founders of Useful Sensors is Pete Warden. Pete has done a lot of work on making AI run on low-end hardware; for example, he’s one of the creators of TinyML. The other AI-powered edge devices they’ve shown, like their Person Sensor, have performed as expected. I’m sure this will too.

Full disclosure: I have known Pete for many years.