[Michael Kohn] started programming on the Motorola 68000 architecture and then, for work reasons, moved over to the Intel x86, and was not exactly pleased with the latter chip’s perceived shortcomings. In the ’80s, the 68000 was a very popular chip, powering everything from personal computers to arcade machines, and looking at its architecture and ease of programming, it’s easy to see why.

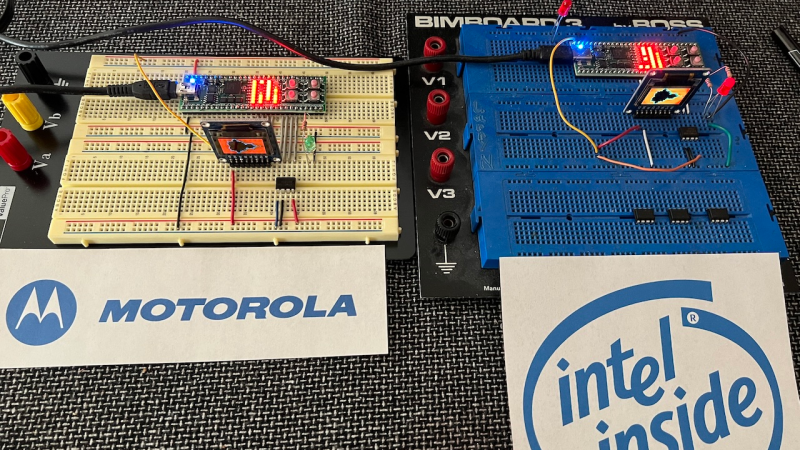

Fast-forward a few years, and [Michael] decided to implement both cores in an FPGA to compare them on real applications, you know, for science. As an extra bonus, he also compares the performance of a minimal RISC-V implementation on the same hardware, taken from an earlier RISC-V project (which you should also check out!).

Using his ‘Java Grinder’ application (also pretty awesome, especially the retro console support), he compiled a simple Mandelbrot fractal generator, a non-trivial workload, into binaries for each architecture and timed the results. Unsurprisingly for CISC architectures, the 68000 and x86 binaries were practically identical in size and significantly smaller than the RISC-V equivalent. Still, looking at the execution times, the 68000 beat the x86 hands down, with the newer RISC-V speeding along to take pole position. [Michael] admits that these implementations are minimal, with no pipelining, so they could be sped up a little.

Also, it’s not a totally fair race. As you’ll note from the RISC-V implementation, a custom RISC-V instruction was added to perform the Mandelbrot generator’s inner iteration. It computes the complex operation Z = Z² + C, which, as fellow fractal nerds will know, is where a Mandelbrot generator spends nearly all of its compute time. We suspect that’s the real reason RISC-V came out on top.
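To see why folding that step into one instruction helps so much, it’s worth writing the iteration out. With Z = x + iy and C = c_r + i·c_i, one step is Z² + C = (x² − y² + c_r) + i(2xy + c_i), so every pass needs several multiplications, shifts and adds, repeated up to the iteration limit for every pixel. Below is a minimal sketch of that inner loop in Python using integer fixed-point math, on the assumption that the cores involved have no floating-point unit. The names `FRAC_BITS`, `to_fixed`, `mandel_step` and `escape_count`, the 10 fractional bits, and the 127-iteration limit are all hypothetical choices for illustration, not taken from [Michael]’s code or his custom instruction.

```python
# Minimal sketch of the Mandelbrot inner loop, z = z^2 + c, in integer
# fixed-point arithmetic. FRAC_BITS, the function names and max_iter are
# hypothetical illustration choices, not taken from the original project.
from typing import Tuple

FRAC_BITS = 10          # assumed number of fractional bits
ONE = 1 << FRAC_BITS    # fixed-point representation of 1.0
ESCAPE = 4 * ONE        # |z|^2 > 4.0 means the point has escaped


def to_fixed(x: float) -> int:
    """Convert a float to the fixed-point format."""
    return int(round(x * ONE))


def mandel_step(zr: int, zi: int, cr: int, ci: int) -> Tuple[int, int]:
    """One iteration of z = z^2 + c on fixed-point components.

    (zr + i*zi)^2 + (cr + i*ci) = (zr^2 - zi^2 + cr) + i*(2*zr*zi + ci)
    Each product has 2*FRAC_BITS fractional bits, so shift back down once.
    """
    new_r = ((zr * zr - zi * zi) >> FRAC_BITS) + cr
    new_i = ((2 * zr * zi) >> FRAC_BITS) + ci
    return new_r, new_i


def escape_count(cr: int, ci: int, max_iter: int = 127) -> int:
    """Return the iteration at which |z|^2 first exceeds 4, or max_iter."""
    zr = zi = 0
    for n in range(max_iter):
        # Escape test: |z|^2 = zr^2 + zi^2, compared in fixed point.
        if ((zr * zr + zi * zi) >> FRAC_BITS) > ESCAPE:
            return n
        zr, zi = mandel_step(zr, zi, cr, ci)
    return max_iter


if __name__ == "__main__":
    # Points inside the set (c = 0, c = -1) never escape.
    print(escape_count(0, 0), escape_count(to_fixed(-1.0), 0))
    # Points outside the set escape after a few iterations.
    print(escape_count(to_fixed(1.0), 0), escape_count(to_fixed(2.0), 0))
```

A generator calls something like `escape_count` once per pixel, so on the 68000 and x86 cores the body of `mandel_step` dominates the run time; a single instruction that performs the whole step would remove most of that work, which supports the suspicion above.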

If actual hardware is more your cup of tea, you could build a minimal 68k system pretty easily, provided you can find the chips. The now-ubiquitous x86 architecture, odd as its beginnings were, is here to stay for the foreseeable future, so you’d better get comfortable with it!

According to the description, the x86 implementation uses an 8-bit bus and processes instructions in 8-bit chunks, while the 68k implementation uses a 16-bit bus and processes instructions in 16-bit chunks.

Not exactly a fair comparison. Of course, the RISC-V implementation is even more optimized.

Hm. Sounds like 68000 vs 8088. Not quite fair, right.

Back in the ’80s, PC emulator boards for the Atari ST and Amiga used a V30 (an 8086 superset) or an 80286, because those chips were bus-compatible with the 68000 (both have a 16-bit data bus and 16-bit instructions, but different endianness).

The 8088 was indeed a real dog. I still have one that I fire up to check my little coding experiments on. It’s full of bugs and slow at everything.

But the 8088 won because it, and its important support chips, were more readily available than the 68k in 1980, when the IBM PC prototype was quickly thrown together. Although both CPUs came out in ’79, it was difficult to get samples without a manufacturing commitment. And the nature of the PC proposal at IBM meant it had to use off-the-shelf components.

If someone was making a computer just a year or two later, the whole situation was different, and the 68K started to become a very good choice. Those systems also tended to have custom chips, such as PALs or ASICs, to drive more advanced graphics and sound and to lower costs through better integration, which also made a 68K home computer harder to clone. The PC clones took off because the components were easy to obtain and there was no need to clone any proprietary chips.

That said, the best technical choice would have been to use the 68K, or to switch to MIPS or ARM sometime in the late 1980s. But people weren’t willing to give up the huge software library of the IBM PC compatibles, which is funny, because I feel like half the software people used back then was pirated.

At the time, the consensus was that pirated software and cheap commodity hardware drove the PC compatibles’ market dominance, the same way that porn and cheap hardware drove VHS market dominance.

My vague recollection from the ’80s is that the Motorola chips were at least perceived as faster. But that’s no reason for someone not to do this project.

Compilers back in the day (the early to late ’80s) were not like they are today… they were extremely expensive and generated code that was iffy at best. As a result, most people programmed in assembly language… and there is no question that the Motorola offerings were much easier and more straightforward to program in assembly. On top of this, Motorola provided assemblers free of charge, whereas Intel did not. So at most universities, Motorola parts were used and taught, given the free tools available… which also gave students a great infrastructure for getting started in embedded systems design and programming.

For my part, I have programmed 10’s of thousands (perhaps even 100’s of 1000s?) of Motorola processors in assembly language… ranging from the 6802 to the 68K and its variants… and have nothing but good memories of it all.

There is no question that today we are all blessed with a plethora of programming tools, languages, etc., most of them free… and so embedded programming these days tends to be more straightforward and at times easier… except that embedded systems are now asked to do far more than in the 68xx/68K days. Although I much prefer today’s tools, I do miss the old days… they were more adventurous, and you felt like you were on the leading edge. These days it is pretty straightforward and somewhat routine.

I can’t edit my previous post… I meant to say 10’s of 1000s, etc., of assembly… not physical processors.


Turbo Pascal v3 of the mid-’80s was really good, though. Both the Z80 and 8086 versions created very small, quick COM files.

Also cool was MIX Power C, though it’s questionable whether C was a real high-level language. I’ve read that some people considered it more of a super assembler, because it really does look like a macro assembler on sugar, with the C language being just a set of included macros.

Last but not least, there were quite a few nice implementations of Fortran, too. Very small and quick.

Turbo Pascal was indeed a pretty impressive feat of engineering, especially on the otherwise rather limited Z80.

Turbo C, on the other hand, was quite rough for a while. It banked on the good reputation Turbo Pascal had earned and really couldn’t live up to it. Very buggy.

On the other hand, Borland released a version of Turbo C for the 68k that was mind-bogglingly good for the time. I believe the code base was mostly unrelated to the better-known x86 version, though. I was an early alpha tester of the 68k release and was impressed with how rapidly the development team responded to bug reports. I think this was one of the first fully functional ANSI C compilers readily available at a hobbyist-friendly price.

If I recall correctly, Digital Research had a C compiler for the 68000 in the late 1980s. It was affordable and produced fast code.

Maybe a fairer comparison would have been against an early FPGA core, e.g. the XR16:

https://github.com/grayresearch/xsoc-xr16

The two processor families reflect the different objectives of Motorola and Intel. Basically, Intel’s objective was to leverage the popularity of the previous generation to ensure dominance of the next. Motorola couldn’t do that, because they didn’t have dominance in the first place. This led to a riskier strategy: skipping 16-bit architectures entirely.

Ultimately, that’s what made the 680x0 series successful in certain markets in the 1980s, among computer manufacturers who wanted to stand out. That’s the trade-off: a better, faster, easier-to-program architecture that some (i.e. innovative) companies could exploit in different niches.

As a design, I’d always say the 680x0 wins. It has abundant internal resources, and its orthogonality made it superbly easy to code in assembler. The Intel strategy ultimately squeezed it out, despite Motorola’s 5-year head start.

Ah, they compared an 8-bit CPU vs. a 16/32-bit CPU.

Where are the cores from? Are they optimized? Is one written by a student just learning Verilog/VHDL while the other is super optimized?

Stay tuned while I compare my Raspberry Pi to a Threadripper.

Next, I will compare my Tesla to a Model T.

Actually, I think he compared a 386 to a 68000. The key thing is that he used roughly the same number of LUTs, so assuming he’s equally skilled at designing an FPGA 68000 (subset) and a 386 (subset), it should be a fair comparison.