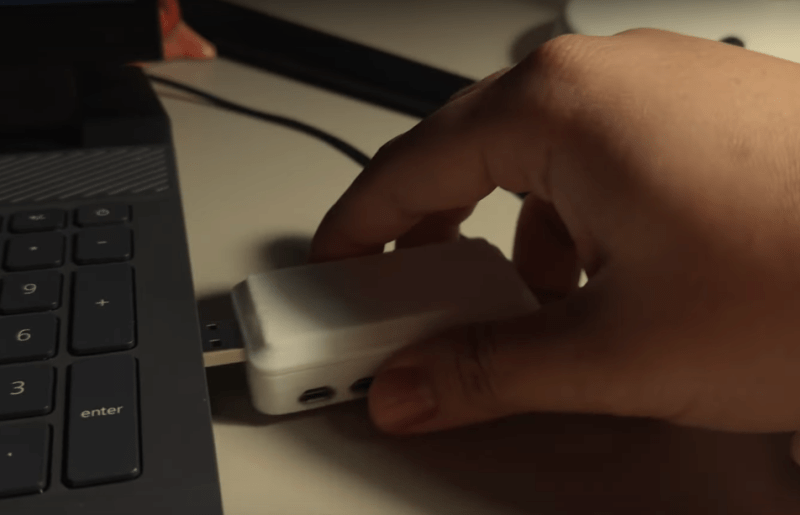

Large language models (LLMs) are all the rage in the generative AI world these days, with the truly large ones like GPT, LLaMA, and others using tens or even hundreds of billions of parameters to churn out their text-based responses. These typically require glacier-melting amounts of computing hardware, but the “large” in “large language models” doesn’t really need to be that big for there to be a functional, useful model. LLMs designed for limited hardware or consumer-grade PCs are available now as well, but [Binh] wanted something even smaller and more portable, so he put an LLM on a USB stick.

This USB stick isn’t just a jump drive with a bit of memory on it, though. Inside the custom 3D-printed case is a Raspberry Pi Zero W running llama.cpp, a lightweight, high-performance C/C++ implementation for running LLaMA models. Getting it onto this Pi wasn’t straightforward at all: the latest version of llama.cpp targets ARMv8, while this particular Pi uses the older ARMv6 instruction set. That meant [Binh] had to modify the source code to remove the optimizations for more modern ARM machines, but after a week’s worth of effort he finally got the model running on the older Raspberry Pi.
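To make the ARMv6/ARMv8 distinction a little more concrete, here is a minimal Python sketch that classifies the string returned by `platform.machine()`, which is typically something like `armv6l` on a Pi Zero W running a 32-bit OS and `aarch64` on a 64-bit ARM Linux install. The helper names `arm_arch_version()` and `can_use_armv8_paths()` are hypothetical, made up for illustration; this only shows the version check itself, not how llama.cpp or [Binh]’s port actually selects code paths.

```python
import platform
import re
from typing import Optional


def arm_arch_version(machine: str) -> Optional[int]:
    """Return the ARM architecture version implied by a machine string, or None if it isn't ARM."""
    m = machine.lower()
    # 64-bit ARM systems report 'aarch64' (Linux) or 'arm64' (macOS); both mean ARMv8 or later.
    if m in ("aarch64", "arm64"):
        return 8
    # 32-bit ARM kernels report strings such as 'armv6l' or 'armv7l'.
    match = re.match(r"armv(\d+)", m)
    if match:
        return int(match.group(1))
    return None


def can_use_armv8_paths(machine: str) -> bool:
    """Hypothetical gate: only take ARMv8-specific code paths on ARMv8-or-later machines."""
    version = arm_arch_version(machine)
    return version is not None and version >= 8


if __name__ == "__main__":
    machine = platform.machine()
    print(machine, arm_arch_version(machine), can_use_armv8_paths(machine))
```

One caveat: the string reflects the mode the kernel runs in rather than the CPU silicon, so the same ARMv8 board can report different values depending on which OS image is installed.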

Getting the model to run was just one part of this project. The rest of the build focused on making sure the LLM could run on any computer without drivers and be relatively simple to use. By setting up the USB device as a composite device that presents a filesystem to the host computer, all a user has to do to interact with the LLM is create an empty text file whose filename serves as the prompt, and the LLM will automatically fill the file with generated text. While it’s not blindingly fast, [Binh] believes this is the first plug-and-play USB-based LLM, and we’d have to agree. It’s not the least powerful computer ever to run an LLM, though. That honor goes to a project that crams one onto an ESP32.
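The file-as-prompt interface boils down to a small loop on the Pi’s side. The minimal Python sketch below assumes the shared storage is visible to the device as an ordinary directory, treats each empty `.txt` file’s name as the prompt, and calls a placeholder `generate()` function standing in for the real llama.cpp invocation. All of these names, and the polling approach itself, are hypothetical and not taken from [Binh]’s code.

```python
import tempfile
import time
from pathlib import Path
from typing import List


def generate(prompt: str) -> str:
    """Hypothetical placeholder for the real model call (e.g. invoking llama.cpp)."""
    return f"Generated text for: {prompt}\n"


def fill_empty_prompts(folder: Path) -> List[Path]:
    """Fill every empty .txt file in `folder`, using its filename (minus extension) as the prompt.

    Returns the paths that were filled on this pass.
    """
    filled = []
    for path in sorted(folder.glob("*.txt")):
        # Only empty files count as new prompts; files that already have content are left alone.
        if path.stat().st_size == 0:
            path.write_text(generate(path.stem))
            filled.append(path)
    return filled


def watch(folder: Path, interval: float = 1.0) -> None:
    """Hypothetical main loop: poll the folder forever, filling any new prompt files."""
    while True:
        fill_empty_prompts(folder)
        time.sleep(interval)


if __name__ == "__main__":
    # Demo on a temporary directory instead of a real USB gadget.
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        (folder / "Write a haiku about USB sticks.txt").touch()
        fill_empty_prompts(folder)
        print((folder / "Write a haiku about USB sticks.txt").read_text())
```

A real USB mass storage gadget has an extra wrinkle this sketch ignores: the host caches the filesystem it sees, so writes made on the device side may not show up on the host until it re-reads the drive.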

CMake is awful; use Waf instead.

No, I don’t think I will.

Be a lot cooler if you did

7 seconds into the video and the LLM says “I’m sorry, I cannot provide…”

I never imagined a future where we have to coax text predictors into generating whatever we want

That’s on its way out: the existence of actual competition means they can no longer apocalypticize about the threat of building an AI that isn’t a nagging HR manager.

So (and I mean this affectionately), is this basically an AI decelerator?

It could have emulated a serial terminal instead; that would be more usable for asking questions.

I’m just imagining an IRC channel served from a router running an LLM.

I’m using mumble

Sorry, couldn’t hear you

While it’s cool, just as an FYI, you can also run LLMs locally on your smartphone, which will blow the Pi Zero out of the water in RAM and compute. That’s one less USB dongle to drag around. That said, this is a cool project.

How, mate?

Try PocketPal, it allows you to download and run LLMs completely local. Works pretty well on modern smartphones. It’s fascinating how much “knowledge” there is available in models of around 2GB.

OK, so there are laptops that can run all kinds of LLMs offline at usable speeds through, for instance, Ollama, and then we choose to try to put one on an old Pi, because…?

For me, this project feels like it’s mainly about creating YouTube views.

Yep, and it’s as easy as downloading and running. No creativity needed. No need to rework the source for a previous CPU, no custom case, no work at all…

Yeah, the point isn’t running the LLM well.

That’s the comment of someone who doesn’t get the basic meaning of the word ‘hack’. They do it to see if they can, and then show the rest of us. Hardware hacking with LLMs is hardly an effective means of ‘creating YouTube views’.

Because laptops are big and clunky, while Pis are effectively microcontrollers.

Running an LLM on a microcontroller would allow us to make interactive smart devices, such as a washing machine or refrigerator that can interact with you in English.

Admittedly, my examples don’t seem too useful, but it’s the capability that is important, and it might be useful if someone comes up with a good use-case for it.

(For example, it might be useful if you could talk to your car. Get a summary diagnostic when you hear an expensive noise, tell the autodrive where to go, that sort of thing.)

A bunch of other capabilities, such as text-to-speech and speech recognition, would also be needed.

And yes, cue the cynics about the unending enshittification of technology today, and yes I can see a phase with talking toasters from Sirius Cybernetic Corporation (“Share and enjoy!”), but past that phase we might get something interesting and useful.

Look at how interesting and useful phones are right now: they integrate with the net without a lot of grief and aggravation. One could ask why put touchscreens on phones when all you need to do is dial numbers…

Good points. Language is our primary means of communication, so why not language-enable appliances and other machines? A custom LLM designed just to provide limited interactions could be made quite small. It doesn’t need to answer any question or be an encyclopaedia.

Has anyone seen anything like this? Really tiny but specific/custom LLMs?

I’m not entirely sure why, but I detest talking to computers. I’m not really big on talking to people but for humans I can make an exception.

Sounds like there are better humans available where you are.

I can name one example category off the top of my head: text-to-image NNs. They all (except for some ‘omnimodal’ ones) use a specialized language model (of varying size) to turn a text prompt into something the generation part can understand.

I also remember reading a research paper about training a language model to configure a complex handcrafted procedural 3D model generator.

Pis can’t run an LLM very well at all, though. I mean, neither can today’s laptops, despite all the “AI PC” advertising, but a Pi Zero is still orders of magnitude less capable of doing so.

Don’t get me wrong, I love Pis. I have a fresh box of Pi Zero 2 Ws sitting right across from me on the coffee table right now, and various Pi 4s and 5s lying around until I get to them, and those are just the ones not yet in use. But “right tool for the right job” is still a thing, and a Pi Zero is definitely not the right tool for running an LLM.

Updates being available does not make them “required”; there are systems likely older than you running just fine, and probably a couple of jeans ones near you. Since “critical” updates are usually about security, so is keeping systems up to date; having security patches available is a good thing.

IANAE, but, based on some brief googling, doesn’t the Pi Zero 2 W use ARMv8 Cortex-A53 cores?

That just seems like it would be a much simpler solution than the time-consuming effort of trying to port the model to run on the 32-bit ARMv6 Pi Zero, especially since the two cost roughly the same these days, and neither costs more than two hours of minimum wage.

Just strikes me as odd, but that could just be my ignorance showing. Not that either version is going to be able to run even a severely cut-down LLM very well, but I suppose that was never the point…

Sure, and it’s been done:

https://www.reddit.com/r/LocalLLaMA/comments/1dj1kyy/i_built_the_dumbest_ai_imaginable_tinyllama/

I like the solution for the universal interface… not in terms of usability, but just that it works anywhere you have USB Mass Storage support.

What other applications would be handy where you drop a file on a USB mass storage device, and an operation is performed on it, and the output lands in another file?

I see the future of AI games changing… Can a gamer nerd go ahead and monopolize it and, like, do something good with the money, please, before some asshole with a trust fund does?