Some time ago, Linus Torvalds made a throwaway comment that sent ripples through the Linux world. Was it perhaps time to abandon support for the now-ancient Intel 486? Developers had already abandoned the 386 in 2012, and Torvalds openly mused whether the time was right to make further cuts for the benefit of modernity.

It would take three long years, but that eventuality finally came to pass. As of version 6.15, the Linux kernel will no longer support chips running the 80486 architecture, nor a gaggle of early “586” chips. It’s all down to some housekeeping and precise technical changes that will make the new code inoperable with the machines of the past.

Why Won’t It Work Anymore?

The big change is coming about thanks to a patch submitted by Ingo Molnar, a longtime developer on the Linux kernel. The patch slashes support for older pre-Pentium CPUs, including the Intel 486 and a wide swathe of third-party chips that fell in between the 486 and Pentium generations when it came to low-level feature support.

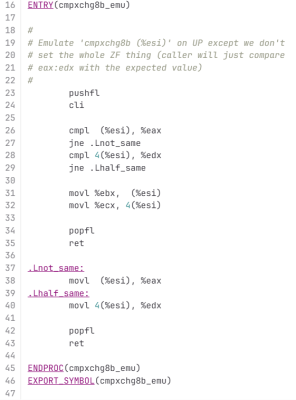

Going forward, Molnar’s patch reconfigures the kernel to require that CPUs have hardware support for the Time Stamp Counter (read with the RDTSC instruction) and the CMPXCHG8B instruction. Both became part of x86 when Intel introduced the very first Pentium processors in the early 1990s. The Time Stamp Counter is relatively easy to understand: it is a simple 64-bit register that counts the cycles executed by the CPU since the last reset. As for CMPXCHG8B, it’s used for comparing and exchanging eight bytes of data at a time. Earlier Intel CPUs got by with only the CMPXCHG instruction introduced on the 486, which handles at most four bytes at a time. The Linux kernel used to feature a piece of code to emulate CMPXCHG8B in order to ease interoperability with older chips that lacked the feature in hardware.
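To make the two requirements concrete, here is a minimal sketch in Python that models them rather than executing them. On x86, both features are advertised in the EDX register returned by CPUID leaf 1: bit 4 for the TSC and bit 8 for CMPXCHG8B (labelled CX8). The function names and the idea of passing EDX in as a plain integer are assumptions made for illustration, not how the kernel itself performs the check, and the compare-and-exchange model deliberately ignores the locking behaviour of the real instruction.

```python
# Feature bits in EDX after executing CPUID with EAX=1.
TSC_BIT = 1 << 4   # Time Stamp Counter present (RDTSC usable)
CX8_BIT = 1 << 8   # CMPXCHG8B present


def meets_new_baseline(cpuid1_edx: int) -> bool:
    """Hypothetical helper: True if both TSC and CMPXCHG8B are advertised."""
    required = TSC_BIT | CX8_BIT
    return (cpuid1_edx & required) == required


def cmpxchg8b_model(memory: dict, addr: int, expected: int, new: int):
    """Non-atomic model of a 64-bit compare-and-exchange.

    If memory[addr] equals `expected`, store `new` and report success.
    Otherwise leave memory untouched. Either way, return the value that
    was found, mirroring how the instruction hands the current contents
    back to the caller on failure.
    """
    mask = (1 << 64) - 1
    current = memory[addr] & mask
    if current == (expected & mask):
        memory[addr] = new & mask
        return True, current
    return False, current
```

A chip that reports only the TSC bit, or neither bit, fails `meets_new_baseline`, which is the situation the chips discussed in this article find themselves in.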

The changes remove around 15,000 lines of code. Deletions include the code that emulated the CMPXCHG8B instruction for older processors that lacked it, various emulated math routines, and the configuration code that set up the kernel for older, lower-feature CPUs.

Basically, if you try to run Linux kernel 6.15 on a 486, it’s just not going to work. The kernel will make calls to instructions that the chip has never heard of, and everything will fall over. The same will be true for machines running various non-Pentium “586” chips, like the AMD 5x86 and Cyrix 5x86, as well as the AMD Elan. It’s likely that even some later chips, like the Cyrix 6x86, won’t work, given their questionable or non-existent support of the CMPXCHG8B instruction.

Why Now?

Molnar’s reasoning for the move was straightforward, as explained in the patch notes:

In the x86 architecture we have various complicated hardware emulation facilities on x86-32 to support ancient 32-bit CPUs that very very few people are using with modern kernels. This compatibility glue is sometimes even causing problems that people spend time to resolve, which time could be spent on other things.

Indeed, it follows on from earlier comments by Torvalds, who had noted how development was being held back by support for the ancient members of Intel’s x86 architecture. In particular, the Linux creator questioned whether modern kernels were even widely compatible with older 486 CPUs, given that various low-level features of the kernel had already begun to implement the use of instructions like RDTSC that weren’t present on pre-Pentium processors. “Our non-Pentium support is ACTIVELY BUGGY AND BROKEN right now,” Torvalds exclaimed in 2022. “This is not some theoretical issue, but very much a ‘look, ma, this has never been tested, and cannot actually work’ issue, that nobody has ever noticed because nobody really cares.”

Basically, the user base for modern kernels on old 486 and early “586” hardware was so small that Torvalds no longer believed anyone was even checking whether up-to-date Linux worked on those platforms anymore. Thus, any further development effort to quash bugs and keep these platforms supported was unjustified.

It’s worth acknowledging that Intel made its last shipments of i486 chips on September 28, 2007. That’s perhaps more recent than you might think for a chip that was launched in 1989. However, these chips weren’t for mainstream use. Beyond the early 1990s, the 486 was dead for desktop users, with an IBM spokesperson calling the 486 an “ancient chip” and a “dinosaur” in 1996. Intel’s production continued on beyond that point almost solely for the benefit of military, medical, industrial and other embedded users.

If there were a large and vocal community calling for ongoing support for these older processors, the kernel development team might have seen things differently. However, in the month or so that the kernel patch has been public, no such furore has erupted. Indeed, there’s nothing stopping these older machines still running Linux; they just won’t be able to run the most up-to-date kernels. That’s not such a big deal.

While there are usually security implications around running outdated operating systems, the simple fact is that few to no important 486 systems should really be connected to the Internet anyway. They lack the performance to even load things like modern websites, and have little spare overhead to run antivirus software or firewalls on top of whatever software is required for their main duties. Operators of such machines won’t be missing much by being stuck on earlier revisions of the kernel.

Ultimately, it’s good to see Linux developers continuing to prune the chaff and improve the kernel for the future. It’s perhaps sad to say goodbye to the 486 and the gaggle of weird almost-Pentiums from other manufacturers, but if we’re honest, few to none were running the most recent Linux kernel anyway. Onwards and upwards!

why do we care about removing lines of code if it removes functionality? linux already runs in under 1 gig of ram and is already more optimized than windows? why would anyone want legacy compatibility removed.

Because as pointed out the fixes and emulators to provide legacy compatibility were breaking newer developments, and required resources to work around.

Sez the guy who never worked on a codebase that supported many product generations.

Yup 100%. It’s hard enough work when supporting just two generations of hardware when you make the hardware. I worked on an embedded system where we switched from Rockchip to IMX. Building two distros and testing them to ensure you don’t break stuff is a PITA. Also stopped us for a year from making use of the extra features the IMX gave us. Had to wait till all old systems were replaced. Anyone who thinks it’s easy has never shipped a product and then revised its hardware.

and time.

Sounds more like trying to use available resources efficiently.

It’s not a skill issue. Someone has to actually support the old architecture. CMPXCHG8B isn’t just “comparing and exchanging eight bytes of data at a time” – it’s a fundamental atomic in x86, so it gets used in highly sensitive portions like threading/SMP/etc. stuff.

So if you fix or streamline code in a modern SMP architecture and it breaks the old 486 code, someone has to go fix it, and that’s a support burden. If no one cares, there’s no point to fix it. It’s not about removing lines of code, it’s about reducing maintenance effort, and this is a serious issue for Linux’s future.
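For readers wondering why a compare-and-exchange primitive ends up in threading and SMP code, the usual pattern is a retry loop: read a value, compute the update, and commit it only if nobody else changed the value in the meantime. The sketch below models that pattern in Python. The `Cell64` class and helper names are hypothetical, and the internal lock merely stands in for the atomicity that the hardware instruction provides; it is not how the kernel implements anything.

```python
import threading


class Cell64:
    """Hypothetical 64-bit cell with a compare-and-swap operation.

    The lock only simulates the indivisibility of the hardware primitive.
    """

    MASK = (1 << 64) - 1

    def __init__(self, value: int = 0):
        self._value = value & self.MASK
        self._lock = threading.Lock()

    def load(self) -> int:
        with self._lock:
            return self._value

    def compare_and_swap(self, expected: int, new: int) -> bool:
        """Store `new` only if the cell still holds `expected`."""
        with self._lock:
            if self._value != expected:
                return False
            self._value = new & self.MASK
            return True


def atomic_add(cell: Cell64, delta: int) -> int:
    """Read, compute, and retry until the swap succeeds."""
    while True:
        old = cell.load()
        new = (old + delta) & Cell64.MASK
        if cell.compare_and_swap(old, new):
            return new


def run_workers(n_threads: int, increments: int) -> int:
    """Have several threads bump one shared counter; return the final value."""
    cell = Cell64()

    def worker():
        for _ in range(increments):
            atomic_add(cell, 1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return cell.load()
```

Because a failed swap simply triggers another attempt, no increment is ever lost, however the threads interleave. Code built on this pattern assumes the primitive exists, which is why keeping an emulated fallback alive for chips without it adds to the maintenance burden described above.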

There’s no reason you can’t provide your own fork of Linux maintaining that support. They’re just saying it’s a waste of effort anymore.

Nah, sounds like you never maintained a legacy system. It’s not skill that is missing, it is time and development capacity.

True. Considering that i486 got broken unnoticed, however, there’s at least good reason to question overall competence. IMHO.

Here’s your red herring: ><((((*>

Mo code, mo bugs.

because it is already broken and no one wants to fix it.

also, while linux may run under 1gb ram it is not a fun experience.

hell, i installed debian on dual opteron 275/4gb recently and it was sluggish.

sure, i have 386 that actually tests 128MB of RAM and sadly only reports 64MB in bios data area and int 12h because only 0xFFFF kb fit in 16 bits.
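The 64 MB ceiling mentioned here is just the arithmetic of a 16-bit count of kilobytes. A quick sketch, with a hypothetical helper name, makes the numbers explicit:

```python
KIB = 1024
MIB = 1024 * KIB


def max_reportable_bytes(field_bits: int, unit_bytes: int = KIB) -> int:
    """Largest size expressible by an unsigned field counting `unit_bytes` units."""
    return ((1 << field_bits) - 1) * unit_bytes


limit = max_reportable_bytes(16)   # 0xFFFF units of 1 KiB each
shortfall = 64 * MIB - limit       # how far short of a full 64 MiB it falls
```

So a 16-bit field counting kilobytes tops out exactly one kilobyte short of 64 MiB, no matter how much RAM is actually fitted.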

i can probably find 486 motherboard that can do 128 or maybe 256. except only 64mb will be cached anyway. and even if latest kernel managed to boot on it it would barely fit.

i am sad because it would be cool to run latest gentoo on my 386 .

actually i have arch on 1.2ghz dual core turion and even console is a little slow ;-)

That is more a DE problem, Linux works well on Raspberry PI and other embedded boards with way less power. A naked kernel needs very little resources.

old hardware, old software. im ok with this.

Bad for vintage 486 servers, routers and lab equipment though.

The 486SXL CPUs had been installed in earlier Cisco routers/switches, for example, to run an *nix OS.

Sure 486/early 586 systems can be replaced by new hardware. Everything can.

But then what’s the purpose of Linux, which brags about its great hardware support?

There was a time when especially 486 users moved to Linux because they thought it was the better, more efficient system.

What Linux does isn’t just getting rid of random legacy support, it’s denying its very roots, too.

It abandons what made it successful. Is that ethically/morally right?

What made it successful was that it supported contemporary consumer devices as well as mainstream server platforms. I see no point in running ancient networking hardware when contemporary Arm/MIPS devices run circles around those in all aspects.

Running ancient 486 servers is absolutely batshit crazy thing to do except as a museum. Back in ~2012 I pulled the plug on the last 2 Pentium servers in our server room. They became tiny VMs on one of the blades. But when it truly hit me was when I went there a week later and it was frickin freezing down there. We had to adjust cooling to accommodate the loss of just 2 Pentium heaters.

That’s shortsighted. We’re living in uncertain times.

What if we suddenly must be independent from international industry?

The equivalent to 486/586 class processors (at best) can still be built in US or Europe through older, existing/abandoned manufacturing plants.

Too bad if exactly GNU/Linux now pulls the plug to support them.

Because that’s what everyone proclaims as the solution to freedom.

No. It’s not about losing an “equivalent.” It’s specific processors. No one would literally build a 486 anymore. It’s about missing certain features. You add those features, it’ll run.

@Pat You seem to miss or ignore that x86 processors had been second-sourced in the 1970s-2000s.

The 808x, 8018x, 80286.. AMD made the am386, then got into trouble with intel.

The 486 clones and pseudo-586 processors had a big boom in the 90s.

About any reputable semiconductor house tried to profit from success of the 486. Cyrix, Via, Nexgen, Winchip etc.

But okay, let’s just ignore that bit of history and go on..

No, I’m saying “486 clone” is not “486.” They aren’t killing support for machines that claim they’re 486s in marketing. “486” isn’t a thing other than a chip Intel made. The x86 architecture has never been monolithic like that: those other chips are very different.

The machines need cmpxchg8b and a TSC counter. That’s all. The chips that excludes are the ones made decades ago, not now.

if we have to resort to using ancient cpus, not being able to run the latest linux, will be the least of our problems…

What has been written and removed can be written again (or forward-ported from old versions). The issue is that the capabilities are effectively unused, therefore even if something gets broken, nobody notices for a long time.

This is a twofold problem: it indicates that limited developer-hour resources are not being efficiently spent (because nobody uses the results), and it means that once the problem is discovered, there’s a much larger body of changes to trawl through to identify what got broken… all for very limited benefit to anybody.

And, in practice, any system that is old enough to be affected is generally low-spec enough that most modern software capabilities aren’t going to be relevant to it anyway. Thus, there’s very little to be gained by moving such old systems to newer kernels anyway.

Security, you say? Some of the security problems are fundamental to the hardware, so no amount of security patching is going to harden them enough to count as secure, anyway. Just identify a kernel version that hits the sweet spot of function vs efficiency for your particular use-case and freeze on it.

If this sits badly with you, perhaps you should first commit your personal time to ensuring that Linux 2.0 runs on z-80 systems, first. That is, after all, a good parallel, although it’s a challenge that would require much less total engineer time.

A core element of open-source philosophy seems to have been lost over the years: Those who do the work must benefit, meaning that those who benefit should do the work ;)

linux supports RISC V which is the most easily manufactured CPU my industry, no royalties, decent, modern features. as home hobbyist chip designers, TinyTapeout are at a 70nm process, that’s on par with a pentium 4. nobody is making new 486 chips that are slower than an ESP32 that can be bought in bulk everywhere for pennies

You have players like Infineon and ASML in Europe, establishing up to date lines should not be a problem.

However… if you need a maintained, lightweight Unix for really old or small machines, NetBSD is alive and well!

“But then what’s the purpose of Linux, which brags about its great hardware support?”

We are talking about maintaining and supporting ancient hardware. Not slightly less modern, talking museum equipment. Those earlier cisco routers are from 1992, months after Linux was first released.

The purpose of Linux is to have a great operating system that’s fast, reliable, easy to use and as bug free as possible. That’s difficult to do when you are still supporting equipment that hasn’t been in use for almost 30 years. 486SXL doesn’t even fall under legacy anymore. It’s ancient. Almost prehistoric.

And if people want they can still patch it into a new update for that equipment themselves, or just stick to a slightly older kernel.

There’s another point that’s worth noting here. This whole thing seems like they’re saying “dropping support for 486 now! see ya, suckas!”

That’s not what’s happening. 486 support has been broken for years. This is how this whole thing got noticed – someone saw the 486 section used a programming convention that hadn’t been used in years, Linus was like “wait how does this even work” and the only maintainer who advocated for 486s before admitted he doesn’t have time to actually make the modern kernel work.

They’re not “dropping support for 486” now. It’s been broken for years. No one noticed.

And instead of troubleshooting it, they just let it die. It’s the easiest, less involved choice.

It fits the Zeitgeist. If it’s broken, scrap it, throw it away.

People do same to people. The old, the sick ones. They put ’em in retirement homes. Out of sight.

What the heck are you talking about? How do you troubleshoot a problem for a system that has no users with hardware you do not have?

This is not about preventing it from running on a 486. It’s about moving support away from people who are no longer physically able to support it because they do not have the hardware.

@Pat I’m no longer arguing here with you, it’s nothing personal.

It seems you’re either unable to understand me, my considerations or have a very different thinking pattern.

But that’s okay. I don’t exclude the possibility that I’m thinking in wrong direction.

“We are talking about maintaining and supporting ancient hardware.”

We’re talking about processor generations that can still be managed by a small team and understood by a single person.

Once you’ve hit the Pentium II generation, the complexity of the CPU core starts to increase extremely.

No. There’s no such thing as a “processor generation” in the way that you’re thinking about it. x86 doesn’t have “feature levels” like that – it’s practically mix and match whatever you want. There were attempts to start moving x86 towards something like “feature levels” a few years ago, but Linus fought back against caring about that in the least. You don’t have to have MMX or SSE or an out-of-order pipeline or anything. You just have to support TSC instructions and cmpxchg8b.

And just because your processor claims to be “486-compatible” in marketing or whatever doesn’t mean it actually is. Most of the “compatible” chips you can think of actually aren’t – the Cyrix chips, the WinChips, the Vortex86 chips, etc. They’re all microarchitecturally quirky. That’s why Linux has specific code for each of them. And that’s why you need vendor modifications for some of them too, because they are “486 chips” with stuff that 486s never had, so it’s just magic marketing to call them 486s.

It doesn’t make sense anymore for the “x86” architecture in tree to support this. If there’s enough desire, you create a new architecture and then you don’t have to worry about patches for new chips or bugs found in new chips breaking very old chips, too.

There’s literally talk on the mailing list about doing exactly this. It’s a better solution. It will be more stable and break less. If you believe it’s important, go help.

“But then what’s the purpose of Linux, which brags about its great hardware support?”

Wait a sec, did I miss something in the announcement where they were going to pull all older versions of Linux from existence and make it so that no one, anywhere, ever, could use an older kernel?

It’s open source. Everything’s right there. If people want newer kernels on a 486, you just fork it at this point, pull the changes in as they come and merge it.

Plus: you might treat the kernel and userland as the same thing, but they really, really aren’t. In general you can compile modern distributions on very old kernels and they’re fine.

It might start to get dicey in terms of security stuff, but… old systems fundamentally aren’t secure. Period.

They’d be separate if it weren’t for all the dependencies.

It’s quite difficult to use a modern kernel or distribution with old libraries.

Applications also demand for their period-correct libraries.

In the end, you have to compile a whole distribution to get an old application going.

It’s easier to sit down and re-write 1/3 of the application, to make it run on a modern distribution, perhaps.

“It’s quite difficult to use a modern kernel or distribution with old libraries.”

Or vice versa. An old kernel with latest libraries may also cause unexpected issues.

Um. No? It’s really really not. Kernel ABI/syscalls have been pretty compatible for a very long time.

What part of a modern kernel do you even need on a 486? Have there been any kernel changes in the last ten years which actually improve the running of a 486?

Looks like it’s time to buy a new pc!

If you’re still running a 486/586, I’m both impressed and surprised. Impressed that it’s working for you (I assume as some sort of controller) and surprised that it’s still running (that’s a LOT of hours on the clock)

486 is nothing. I’m still running an HP 9845C.

My HP 9816 says hello. :D

Xyzzy. I see no cave here

Yeah, with an intel x86S processor, for example.

It’s entirely legacy free, hope Linux has great support for it.

Just think of all the legacy code that could be thrown out by fully going X86S!

So many possibilities for a clean up! Yay!

https://www.phoronix.com/news/Linux-6.9-More-X86S

Didn’t Intel cancel X86S in 2024?

Yeah, because they’re hoping to get more support from AMD to make it a more global thing. Which is smart.

This does have the unintended side effect of limiting the hardware hacking community’s efforts to repair old but otherwise functional equipment, particularly in medicine.

How so ?

Just use old compatible software for ancient hardware.

how is that limiting?

Does become a problem for networking, but that’s an ooooold problem at this point thanks to Windows. There’s a ton of extremely expensive equipment that runs on old hardware that is no longer safe to hook up remotely without extreme protections.

+1

A CPU from ’89 isn’t even considered functional, and using an old Linux kernel, it isn’t going to connect to the internet anyway

Minix 3 is probably a better fit for such efforts. Typical RAM size for 486 systems was only a few megabytes, while Linux kernel is usually more than 10 MB by itself nowadays.

Nah. How about NetBSD? I think that there is more similarity between NetBSD and Linux in terms of userland.

I read that Minix 3 development has halted since the author retired. :(

Some versions of BSD may still support it, though.

If you fork kernel 6.14 now, then merge the 6.15 changes into your branch and make all the necessary patches to make your 6.15 fork run on a 486, then you have 486 support for your hacking endeavours. I don’t think anybody is going to stop you from doing that. And you can do the same for 6.16 and up and away far into the future.

Or at least for as long as you have the time, money and the motivation.

Maybe the Linux community will impose a different version numbering scheme for your branch. Who knows, I’m not involved in development on the Linux kernel.

But this is the great point of open source: if some software package is not doing what you want, you can fork it and do your own thing with it. As long as you make clear that your new fork is not related to the main line.

And if you are doing things that the guys from the main line don’t want to have, you won’t even have to merge anything back.

You actually have to fix a bunch of stuff from 6.14 if you really want it fixed proper – some configurations will work on a 486, but it’s extremely likely that multiple kernel options will just totally break.

Linux really hasn’t had solid support for 486s for years. Actually taking it out of the main tree might improve it.

yeah fork off last working 486 stable kernel, strip other arches out, and backport any features that make sense on the hardware/security fixes.

you’d be looking at about 1-2 person months a year in maintenance, which for the 100 or so of those running linux on a 486, is nothing.

What people aren’t understanding is that there’s a big reason for killing support in tree.

Because there’s no one to maintain it. There’s one guy on the mailing list with 486 builds and he doesn’t have time. It wasn’t being maintained before. It was just zombified semi-kinda “it might work” clinging on because 486 isn’t that different.

Killing it in tree is a good thing. Because now if there are people who care they can actually make it work rather than thinking that it’ll work in tree. Because it won’t.

Not really, you’re not connecting to the internet with these CPUS unless you’re insane, so using legacy software is not an issue.

Updates to the kernel are never related to these architectures anyway, there is no benefit to reap from mainline linux. Running legacy kernels is not really an issue, if you find bug, there is nothing really preventing you from hiring a professional to quickly fix it to make your medical device work again.

Linux is open source, so there is nothing stopping you from doing localized changes to legacy kernels if something were to break ;)

25 years ago I was working for a company that had 486 machines. We were phasing them out. The only ones left were used to test the parts we made and they were running DOS software. I would like to know if anyone here can give an example of a 486 computer that they know of that is running Linux with a modern kernel.

Only 486 machines? Wow! A modern company has waaay more computers than that.

How times change.

One thing I wonder about is how much of a performance penalty these old compatibility hacks incur. And the other way around, would there be a performance gain if there were specialized kernel versions for AMD / Intel / ARM / Mips / etc.

Maybe this does not make sense at all, I don’t know much about this kernel stuff, except that it’s an important part buried somewhere deep in my OS.

Modern x86 is the processor equivalent of the Boeing 737 Max. Well past its prime and pushed beyond sane extremes of the original engineering paradigms it was built upon. Why not rip out some of the newer workarounds in the kernel also? How many people actually update 20+ YO hardware? Most things in service that long are usually treated with kid gloves as they can be incredibly fragile and few people understand the systems anymore. Heck, I work in a facility that has an uninterrupted power uptime of over 10 years; it’s a nail-biter when things that have been burning steadily for years need to be kicked. Days when PPIs and a Tums chaser are necessary.

Perfectly ok with me. As stated in the article, just use an older kernel if you actually need support on those processors. Also, if one is using 486 era tech, might be time to think about moving on before the hardware finally breaks :) .

“Beyond the early 1990s, the 486 was dead for desktop users, with an IBM spokesperson calling the 486 an “ancient chip” and a “dinosaur” in 1996. ”

Yeah, and meanwhile in real-world the am386DX-40 still sold very well in 1994!

It was a very common budget CPU to DOS and WfW 3.11 users at the time and 40 MHz bus speed was quick.

Roughly same time IBM still sold its Blue Lightning chip with 486 core.

https://en.wikipedia.org/wiki/IBM_386SLC#IBM_486BL_(Blue_Lightning)

I mean, sure, back in the 90s a PC was considered obsolete every 6 months.

But that didn’t mean that all the users kept upgrading in same cycle.

Some waited a few more months or even years to upgrade.

There had been owners of 486 hot-rod PCs that held out up until year 2000.

Moral of the story: Never entirely trust a sales person/marketing person. :)

Those were the days when ‘upgrade’ was ‘real’. Very tangible/noticeable. Now, in general, not so much… Tis, why I’ll skip this current generation as AMD 5xxx AM4 runs great on my desktops — even the VMs just scream — for my use.

There were two distinct processors that had the name “Blue Lightning”.

The first was derived from an Intel 386DX, but IBM added 486 instructions and a 16KB write-back L1 cache. The 100MHz version wasn’t as fast as an Intel 486DX4-100, but was a little faster than an Intel 486DX2-66. It didn’t have a built-in FPU, but could use a 387DX FPU (or Cyrix FastMath chips). These were only available as SMD packages, which meant they were only sold either as a complete motherboard, or in CPU upgrade daughterboards.

The second was just a rebadged Cyrix Cx486. Being a fabless company, Cyrix made deals with IBM, Texas Instruments, and SGS-Thomson to manufacture its chips, and part of those deals was allowing them to sell Cyrix chips with their own branding.

I think NetBSD still supports i486, so you can try that instead if you want to run *nix on a i486 retro computer.

Most 486 embedded systems would be running DOS, and/or not connected to the Internet anyway. Also, most networking equipment that I am aware of use i586 or better.

Datalight ROM DOS has TCP/IP support.. And many NICs were NE2000 compatible.

Nowadays, about every DOS gamer has networking enabled in the retro rig, too.

Yes, but that is DOS, not Linux. They are not really affected by this decision.

Right, but is it really that favorable if those remaining 486/586 systems must be switching back from Linux to DOS (current FreeDOS) because it has become safer again? 🤨

Not that it bothers me, I like DOS for its simplicity and honesty.

And with FreeGEM/OpenGEM and PC GEOS it has a capable GUI, too!

It’s just.. feeling weird to imagine good ol’ DOS being the new star on the horizon of legacy computing.

Previously it had been Linux which was the great solution to make old hardware run in a productive way.

Still, there are manufacturers who are still making SOCs based on the 486 architecture. I found these two:

https://www.vortex86.com/products

https://www.zfmicro.com/zfx86.html

No more newer Linux updates for these.

The nice thing about open source is these companies can take the stock kernel and restore 486 support if you want to. Or, more likely, they can take the kernel they or their customers use today and maintain it for as long as they want to.

It’s not “anything that someone called a 486” it’s specific chips. The Vortex86 products were mentioned there, I believe they’ll be fine.

Apparently everything except the oldest chips (the Vortex86SX/Vortex86DX). Those apparently don’t have vendor support for anything recent anyway so it’s pointless.

Think of all the people running a Win95 or Win XP on an old 486 box because it has the interface and runs the software for old JTAG programmers/debuggers used to maintain tens of thousands of little Linux boards that are still in the field. They just run the old stuff with the old software.

Why would anyone panic over old hardware + old Linux?

“Why would anyone panic over old hardware + old Linux?”

Networking, exposure to the internet. Linux kernel is a walking TCP/IP stack.

Older systems are known for security vulnerabilities, especially Windows.

With exception to Windows for Workgroups, maybe. Without Win32s, it can’t execute Win32 code.

Those are machines that were never built for the internet, and should not be exposed to it. Pretty simple thing to do.

These are legacy support machines, they don’t need to run new applications, they just need to run the ones that have been working for the past 30 years. no updates needed.

If it works there is absolutely no need for updates if it is a stand-alone machine.

You cannot make those old machines secure. Can’t. A new kernel doesn’t make them safe. They have fundamental security issues inherent to the platform (lack of TSC, for one).

i agree with the reasons Linus laid out. it’s just like the sound blaster driver issue from a couple days ago. maintaining old code is a lot of work, and then if it’s not actually being tested then even so it doesn’t work. definitely a lot of argument for dropping unused features.

i agree with Ov3rk1ll, old software for old hardware. i recently resurrected a via C3 (a 2001-era x86 cpu). a pointless exercise because it was too unstable for my use case. but it was easy. i wanted to build a custom kernel, and obviously i didn’t want to use a modern kernel (or userland) for a machine with 12MB of RAM. so i just loaded up debian 3.0 under qemu. it was super easy! way easier than it would have been 20 years ago. and the nice thing is, 20 year old linux is so lightweight that it’s shockingly fast under qemu on a modern computer. so for now at least there’s still great options.

and an anecdote about compatibility…i have an ARM chromebook that was my favorite for a long time…but it’s succumbed to too much damage, so i only use it as a loaner laptop sometimes when my daily driver is busted. but last time i used it, i let debian upgrade glibc, and the new glibc is incompatible with the 2015 kernel on there. it ‘mostly works’ but i can’t, for example, run X11 anymore. i bothered to track it down and it’s a really minimal feature that is missing, something that would be easy for glibc to work around with a compatibility layer. but i looked in glibc, and the compatibility layer exists! but because it’s mostly unused, it’s broken. which is exactly the problem Linus was pointing at.

i had a lot of ways forward — i could build a more recent kernel for it, or i could force glibc back to the old version, or i could upgrade glibc and hope someone’s fixed the compatibility, or i could fix the compatibility myself and install a custom glibc. but instead i accepted mortality.

“i agree with the reasons Linus laid out. it’s just like the sound blaster driver issue from a couple days ago. maintaining old code is a lot of work, and then if it’s not actually being tested then even so it doesn’t work. definitely a lot of argument for dropping unused features.”

That “genius” apparently doesn’t think or care too much about classic emulators or VMs.

Soft PC on Macintosh (Power PC) does emulate a Pentium class CPU.

It’s a Pentium MMX in later versions, gratefully, so it’s safe – for now.

Things that get dropped often have a bad habit of taking other things with them.

Now it’s just 486 support, when will Pentium and Pentium MMX be next?

Which CPU is the next on the list of execution?

haha he isn’t executing anything. you can still use old software on your old hardware. the only thing he’s axed is a broken compatibility layer. already, the kernel wouldn’t work on 486. now they’re just removing the pretense.

Is that so? Or was it fixed in 6.15, just to be removed in, say, 6.16?

Btw, “execution” was a pun. A bad one.

Because of “execution” in the sense of d. punishment and because CPUs do “execute” instructions/programs.

Why would anybody care about running the latest kernel on emulated ancient hardware? I’d posit that exactly zero people are doing this for 486 code because otherwise they’d have noticed that it hasn’t worked for years.

You can construct an arbitrary number of straw men to justify anything if you try hard enough. That doesn’t make it useful or productive

“You can construct an arbitrary number of straw men to justify anything if you try hard enough. That doesn’t make it useful or productive”

What do you mean by “construct”? 🤨

More often than not, reality turns out to be more fantastic than fiction.

There are weird use cases for sure.

Modern Linux can be useful in an ancient VM or EM to provide SAMBA services to other internally networked OSes.

Here, the Linux VM/EM could keep connection to the world outside of the emulated NAT etc pp.

Or, it can be used to simulate an ISP to them, either by simulating dial-up or ISDN functionality.

I’m not making that up, Linux/Unix systems used to be supported by older VMs or EMs of that era.

But back then it was Caldera OpenLinux, SUSE 6 or some Solaris 7.

These old distributions are very obsolete in the modern world, networking wise.

As a shameless plug, I created a distro specifically for i486 that runs on real hardware :

https://snacklinux.org/

Package manager + compiled software as well. Linux 5.x and 6.x offer no benefits to i386/i486 users when we talk about min spec hardware. Linux 4.x LTS is used instead in the distro, offering a modern-ish kernel that still can compile to under 1MB with generic options.

The other option to bring life back to old hardware is of course running a time-appropriate distro, but that’s almost too easy and challenges are fun.

I find most people into retro hardware go the DOS/Win95 route to play games from the era, but that doesn’t leave you with newer-ish software to, say, browse the internet.

I notice that Rocky EL 9.x is flashing a deprecation warning on my Dell I5 workstations now… can they be that far behind?

Funny coincidence that this happens now, just last week I gave away my last batch of ~30 ‘bifferboards’. The boards use a low power RDC chip with 486 core. It completely makes sense that they do this given the way the x86 detection happens in Linux. They took the decision right or wrong, to have only one generated binary target for all x86 variants, and this means layering complicated detection logic at startup. Eventually this was going to buckle under the weight. One edge case is it didn’t work for kexec under 486 because the kexec implementation included Pentium instructions. That’s been the case since 2010, so a 15-year old bug! Just happened nobody needed kexec for 486SX (except me…) Making that detection work at runtime was complex so I simply added a compiler define to switch the Pentium instructions for 486 compatible ones. If they had separate compile targets for some of the variants it may have meant some duplicated code, but they could perhaps have dealt with the conflicts more elegantly. Since the kernel has very elegant structures for supporting multiple CPU targets, they could have siloed the 486-related code, given it basic, minimal testing and left it in as an alternate target. This is just an observation, not judging as these calls are hard to make! I think ultimately the way to continue 486 support may be via projects like OpenWrt, which already add a bunch of targets on top of the standard ones in the mainline kernel.

To be fair, there is an insidious conspiracy, by the biggest corporations and most influential figures in tech, to devalue and brick second hand market hardware. There was a time when Intel and MS ate the cost to just get PCs in people’s hands, now they seem to be working really hard to ensure grandma relies upon Android/Smart device/Linux to run Chrome for simple tasks.

Conversely, no one is seriously running critical infrastructure on a Pentium with a brand spanking new Linux kernel release. As to medical or financial institutions using some legacy hardware, talk about security issues. Ransomware just killed your patient.

I’m a little curious about what code was actually broken. For example, there was a working implementation of the 8 byte compare and exchange instruction. It clearly worked at one time and thus there is no reason it should stop working unless the machine instructions used to implement it are no longer available. Same with RDTSC. Now, if the opcodes for these instructions were reused in later x86 CPUs or if the CPUID bits that signal that execution is occurring on an 80486 were no longer valid on later X86 CPUs, then they might have a point.

However, I bet the real issue is that they no longer want to bestir themselves to actually test on 80486 class hardware. It’s not like they test on all other hardware, either. So why single out 80486?

Perhaps there are actually bugs in the ‘486 class supporting code, but the hand waving explanations seem a little lazy. I know, I could fetch the patch and examine the code being removed. I am not going to do that because 1) I don’t run 80486 machines on Linux, only DOS and other OSes; 2) I am too lazy; and 3) Newer Linux kernels are only needed for newer hardware support anyway – just find a stable kernel and stay on that version forever.
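For anyone who wants to check whether a given box clears the new bar (TSC and CMPXCHG8B, per the article), here is a rough sketch. It assumes a Linux x86 system where /proc/cpuinfo lists those features as `tsc` and `cx8` on the `flags` line. The function name and the two sample strings are made up for illustration and are not captured from real machines.

```python
def missing_required_flags(cpuinfo_text, required=("tsc", "cx8")):
    """Return the required feature flags absent from the first 'flags' line.

    cpuinfo_text is the raw text of /proc/cpuinfo (or a similar excerpt).
    """
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            present = set(value.split())
            return [flag for flag in required if flag not in present]
    # No flags line at all: treat every required flag as missing.
    return list(required)


# Hypothetical excerpts, invented for this example.
PENTIUM_LIKE = "model name\t: example 586\nflags\t\t: fpu vme de pse tsc msr mce cx8\n"
I486_LIKE = "model name\t: example 486\nflags\t\t: fpu\n"
```

On a real machine you would pass in `open("/proc/cpuinfo").read()`; an empty result means both features are reported.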

“It clearly worked at one time and thus there is no reason it should stop working …”

would that it were!!! that’s just not how software works. anything that isn’t tested rots. the reasons are really diverse. gcc changes, and even gas changes. but mostly, the kernel itself changes. they are always re-factoring and making small changes to interfaces and so on. subtly changing the contract between one part of the kernel and another.

anecdote

apparently it was 1997. i upgraded some 386 from a linux 2.0 kernel to 2.1. it started crashing in the 3c507 driver. that’s a 3com coaxial (10b2) ethernet card with a 16-bit ISA interface. i tracked it down to a change by Alan Cox. the whole network stack was constantly changing, as were various hardware abstractions. and he had made a change across all of the network interface drivers, and he had botched the 3c507. and it was already a pretty old card by that time, so i was the first to run into it. using diff, i figured out what the changes were, and what they should have been, and i sent in a patch for it.

the point is just…code rots. if it isn’t tested, it doesn’t work. if Alan Cox hadn’t gone through and made the drastic change that he did, we would have even worse problems than the ones we do. so he broke it probably in 1996, and i noticed in 1997…but that machine never upgraded again so i never tested it with a newer kernel. i don’t know when it was finally removed but i bet by the time it was removed it hadn’t been used in years by anyone.

a lot of linux kernel code isn’t tested by the primary developers, but is tested incidentally by thousands or millions of people in the wild. especially device drivers. but modern kernels on 486es…no one tests that. even if the known-about compatibility hacks still work, there are surely other problems that went unnoticed.

“the point is just…code rots. if it isn’t tested, it doesn’t work”

Although your point about gcc/gas changes is well taken, I think you are overstating the case for straightforward instruction simulation functions.

I’d state your point differently: if code isn’t tested, it can’t be proven to work correctly.

That said, these routines should be highly deterministic and have no variation in their execution, assuming no page faults or similar issues. This should be the kind of code that exists exactly one place as called by every user. I’d expect this kind of instruction emulation code to be locked in physical memory. I am aware that it is still not simple to mathematically prove equivalence of the simulation because of operand faults, etc.

I am not saying that 80486 support should have been retained, but the reasons stated in the article are very imprecise and ‘hand wavy’. I suppose nothing more should be expected from a summary.

your re-statement is itself provably true. if it isn’t tested, you don’t know if it works.

but i’m not a kid, and i’m not operating from first principles. i’ve been around the block and i am here to tell you

in actively-maintained projects, if it isn’t tested, it is broken within a year. and after a decade, there is not even the shadow of hope for it. unless you can somehow manage the dependencies between components perfectly, which is what the kernel developers struggle with the most, and what they are aiming to actively improve when they accidentally break peripherally-related code.

Cmpxchg8b and TSC instructions are used to build up very fundamental things in the kernel, so the machinery around them changed significantly. Cmpxchg8b is the basis of lock free code, so it’s tricky.

In addition, the x86 platform internals got very complicated and lots of effort for security changed things in ways that just wouldn’t work.

Really what’s going on is that Linux is saying “look x86 has changed enough that 486s are really a different architecture.” Which makes total sense. And then the truth is that there are not enough users to support a separated i486 architecture in tree.
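To make the "basis of lock free code" point concrete, here is a toy model of a 64-bit compare-and-exchange and the retry loop that is typically built on top of it. This is only a sketch of the semantics: `Cell64`, `atomic_add` and `demo` are hypothetical names, and the `threading.Lock` merely stands in for the atomicity the hardware instruction provides. It is not how the kernel implements or emulated anything.

```python
import threading

MASK64 = (1 << 64) - 1


class Cell64:
    """Toy model of one 64-bit memory location."""

    def __init__(self, value=0):
        self.value = value & MASK64
        # Stand-in for hardware atomicity; real CMPXCHG8B needs no lock object.
        self._guard = threading.Lock()

    def load(self):
        with self._guard:
            return self.value

    def compare_exchange(self, expected, new):
        """Store `new` only if the cell still holds `expected`.

        Returns (success, observed_value).
        """
        with self._guard:
            observed = self.value
            if observed == expected:
                self.value = new & MASK64
                return True, observed
            return False, observed


def atomic_add(cell, delta):
    """Lock-free style update: retry the compare-exchange until it succeeds."""
    expected = cell.load()
    while True:
        ok, observed = cell.compare_exchange(expected, (expected + delta) & MASK64)
        if ok:
            return observed  # value before the addition
        expected = observed  # someone else changed it; retry with the fresh value


def demo(threads=4, per_thread=1000):
    """Increment one shared cell from several threads and return the final value."""
    cell = Cell64()

    def worker():
        for _ in range(per_thread):
            atomic_add(cell, 1)

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return cell.load()
```

The point of the retry loop is that no thread ever holds a lock across its own read-modify-write; it just tries again if the value moved underneath it. Everything layered on that pattern depends on the primitive being genuinely atomic.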

There aren’t really any security implications for quite a few years: kernel 6.1 has LTS support until December 2027, and kernel 6.12 has CIP LTS support until June 2035. Enterprise distros may also be supporting these versions for another few years as well. So 486s still have at least a decade of Linux support left, it’s just been dropped from the latest bleeding edge kernel.

“As of version 6.15, the Linux kernel will no longer support chips running the 80486 architecture, along with a gaggle of early 586 chips as well.”

Well this article is all backwards. Linux kernel 6.15 includes a patch that FIXES i486 support, which had been broken since Linux kernel v6.7 due to a minor change in the boot code not accommodating CPUs without support for the CPUID instruction. Unfortunately the Linux developers seemingly didn’t like / ignored the patch that someone submitted in October 2024 to fix this (although it worked for me), and none of them proposed another one until I asked about it in April, leading to this patch which was merged on the 6th of May 2025 to “… In doing so, address the issue of old 486er machines without CPUID support, not booting current kernels.”:

https://lore.kernel.org/lkml/174652827365.406.14578389386584457710.tip-bot2@tip-bot2/

As for the patch removing i486 support: Well presumably this article’s author looked at it, presumably including the first part here where the M486 build options are removed:

https://lore.kernel.org/lkml/20250425084216.3913608-2-mingo@kernel.org/

Now go take a look at that part of the source code for Linux kernel v6.15:

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/arch/x86/Kconfig.cpu?h=v6.15#n49

Oh look, the M486 options are still there! That explains why I can’t find a similar message saying that the i486-removal patch has been merged. In fact M486 is still a build option for the current working copy of the kernel source code:

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/arch/x86/Kconfig.cpu#n49

Apparently they’re aiming to merge it for kernel v6.16 at the moment:

https://lore.kernel.org/lkml/aCX9iN5BxitdozwC@gmail.com/

So HackADay, this article’s title should have been something like:

“Why the latest Linux kernel WILL run on your 486 again, but maybe not for much longer”

P.S.

This post was written from an i586 running Linux (although not v6.15) which I’ve been using everyday since I got it second hand in the early 2000s. If the Linux developers want to help users of old hardware like me (like they just did, fixing v6.15), then thanks! If not, well obviously that’s disappointing for me. But at least they were on my side for a lot longer than Microsoft (this PC also boots Win98), and it looks like all of OpenBSD, FreeBSD, and NetBSD still support i486 (or at least claim to), so they might be the next option to help keep me off the hardware upgrade treadmill.

It’s also disappointing for the open-source 486 FPGA core projects like ao486, which could run Tiny Core Linux (which is still built for 486 and later).

https://github.com/MiSTer-devel/ao486_MiSTer

Unfortunately HackADay’s comments system needs Javascript now (hence I haven’t commented here for years) so I have to run Firefox from a relatively modern SBC in a remote X window over my LAN to post this. Oh yeah, nobody’s meant to use X11’s network transparency anymore either, so everything can be switched to Wayland. Ho hum.

The trouble is drama sells – not many people click on headlines that say “move along, nothing to see here”. The world might be a better place if we were subjected to less of the latter of course.

Well done for contributing to the project – a far more useful approach than the stream of comments bickering about “dropping” it.

As a new convert to Linux (24.04 Ubuntu server on a 2010 HP N54L which now skips along!) my long standing opinion that you should choose your software to match the hardware is being challenged.

That said, it’s still a leap as to why anyone would want to try to run a 2025 OS on 1995 hardware however; unless that’s their particular area of expertise such as yourself!

That was a really long comment to say “this should’ve said 6.16, the next version of the Linux kernel.” Because it would be super-weird to remove support from a version of the Linux kernel that was finalized in May and whose merge window closed in April, over a month prior to that patchset.

This is a good thing, as Linux is already way too heavy for this kind of CPU…

Running old computer as servers is a waste of energy.

Now, enthusiasts need to build a dedicated light OS for this kind of retro desktop.

Maybe using old Linux code base, pruning all overkill capabilities…

It would be nice if that end of support gave birth to a pre-Pentium-only version of the kernel (something that wouldn’t work with newer chips) that would be the basis of future work (with dedicated maintainers) for enabling these legacy devices to still march on in the 21st century with modern features.

Yeah, that’s what they talked about in the first version of this patchset.

The kernel mailing list is a good read for this. One of the problems that popped up is that there are kernel build bots which build with a random config – literally, they just throw random y/n’s into the kernel configuration – and you get weird stuff like this.

It just doesn’t make sense to have the main “x86” architecture have to worry about this stuff. Mainly for one very important reason: the jump from 486->586 added by far the biggest kernel development difficulty – common, commercially-successful multiprocessor systems.

I was wondering, what features are coming into the Linux kernel that aren’t tied to newer hardware, which is irrelevant for older machines anyway? Is there anything that even has any impact on user-space applications?

freeDOS to the rescue. ;)

I’m ok with this, seems no big deal. My worry is when (not if) big tech get full control of the Linux timeline. Then you WILL see them dumping support for anything more than five years old. MS and Google are very close to having full control of the direction Linux goes.

There’s also PC-MOS/386 v5 now (open source)..

I want to run my 64 bit multiply program and not have a multitasking OS run it. How do I do this?

AI Overview.

Running a 64-bit multiply program without a multitasking OS is a form of bare-metal programming. This means your program will be the only code running on the processor, directly controlling the hardware without the intervention of an operating system.

It’s a complex undertaking that requires significant understanding of the specific hardware you’ll be running on.

Here’s a breakdown of how you might achieve this:

Bare-Metal Programming:

Understanding the Hardware:

Creating a Minimal Environment:

Writing Your 64-bit Multiply Program:

Loading the Program:

Methods for 64-bit Multiplication:

Important Considerations:

In summary, running a 64-bit multiply program without a multitasking OS involves writing your program as a bare-metal application that initializes the hardware and performs the multiplication using processor-specific instructions or a software implementation. This requires in-depth hardware knowledge and is significantly more complex than standard application development.
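As a rough idea of what the "software implementation" route looks like, here is a sketch of the schoolbook method: split each 64-bit operand into 32-bit halves, form four 32x32 partial products, and add them up with carries. It is written in Python purely to show the arithmetic; the function name `mul64_wide` is made up, and on bare metal the same steps would be done in C or assembly with fixed-width registers.

```python
MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF


def mul64_wide(a, b):
    """Multiply two unsigned 64-bit values using only 32x32->64 partial products.

    Returns (high, low): the upper and lower 64 bits of the 128-bit product.
    """
    a &= MASK64
    b &= MASK64
    a_lo, a_hi = a & MASK32, a >> 32
    b_lo, b_hi = b & MASK32, b >> 32

    p0 = a_lo * b_lo  # contributes to bits 0..63
    p1 = a_lo * b_hi  # contributes to bits 32..95
    p2 = a_hi * b_lo  # contributes to bits 32..95
    p3 = a_hi * b_hi  # contributes to bits 64..127

    # Sum everything that lands in bits 32..63; the overflow carries into bit 64.
    mid = (p0 >> 32) + (p1 & MASK32) + (p2 & MASK32)

    low = (p0 & MASK32) | ((mid & MASK32) << 32)
    high = (p3 + (p1 >> 32) + (p2 >> 32) + (mid >> 32)) & MASK64
    return high, low
```

If only the low 64 bits are needed (the usual C `uint64_t * uint64_t` result), `p3` and the `high` word can be skipped entirely.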

I want to run my gcc c transparent portable 64 bit multiply program and not have a multitasking OS run it. How do I do this? :)

AI Overview.

It’s definitely possible to run your GCC C program for 64-bit multiplication without a multitasking operating system, but it involves some significant considerations and work. This approach is often referred to as “bare-metal programming”.

Here’s a breakdown of how you would approach this:

…

Compiling and Linking:

GCC Toolchain: Use the GCC toolchain to compile your C code into a binary executable.

Linker Script: A critical part of bare-metal development is the linker script. This script defines how the compiled code is structured to run independently without an OS.

Memory Management:

Without an OS to handle memory allocation, your program must manage memory manually.

Loading and Execution:

Bootloader:

Hardware Setup:

Important Considerations:

Complexity:

Device Drivers:

Debugging:

In summary, running a C program without a multitasking OS is achievable with bare-metal programming, but it involves more effort and requires you to manage many aspects that an OS typically handles, such as hardware initialization, memory management, and device interactions.

FIG Forth to the rescue?

Albert Gore willing, of course.

For me it looks like in 2012 all i486 users were warned that they are next. 13 years seems to be enough time to prepare.

PPJ, your words are reasonable and non-aggressive, thanks for that information.

What makes me wonder though, are these two things:

– What signal does the removal send to other maintainers?

– Which x86 processor generation is next? i586 (full)? i586+MMX? i686?

The current events regarding i486 support are special, I think, insofar as it’s actually the first time that a major architecture is affected.

Yes, there had been i386 support before, but it had symbolic value foremost.

Affected 386 users could have upgraded to a 486DLC processor, maybe.

Many 90s Linux distros such as Suse did require an i486 processor as minimum.

This was the real world minimum, so to say. Also in terms of performance.

Also, Novell NetWare servers with lots of memory had used i486 processors, as well.

72pin “PS/2” SIMMs w/ EDO RAM also appeared in 486 days.

So powerful i486 hardware with 8, 16 or 64MB of RAM had been a reality in the mid-90s.

Not for John Dove, maybe, but in a professional/industrial environment.

The i486 used to be the power-user processor. Until the Pentium took over, of course.

These are my thoughts or concerns about the matter.

I have no personal need for i486 support myself at the moment, but I’m worried about others.

That’s why I think about pros/cons, the effect the removal would have.

After all, questioning changes is reasonable. Also in a political sense.

I can imagine there might be scenarios in which i486 users could have a use for Linux.

In a dual-boot configuration, for example. On original machine.

Be it a desktop PC or an industrial machine.

“Which x86 processor generation is next? i586 (full)? i586+MMX? i686?”

This discussion is literally on the kernel mailing list thread. The huge reason to split off “486” is because Linux has never had 486 SMP support (because they essentially didn’t exist).

The only comparable architecture change is the 64-bit jump but that won’t happen because you still need the equivalent 32-bit code.

My first Linux install was on a 486. I have a lot of happy memories building 486 systems. I really want to hate this move.

But… come on. I really can’t make an argument why someone needs to expend a bunch of effort supporting 486s. Not even the environmental, minimize waste argument. Anything that would run on a 486 could probably run on a very cheap Arm device, emulating x86 (if it can’t just be recompiled for Arm) and use less electricity in the process!