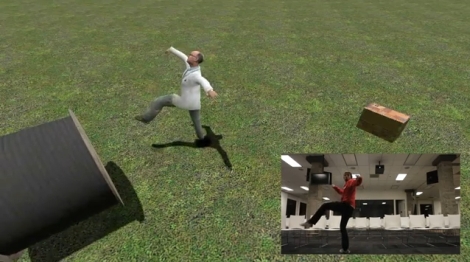

The London Hackspace crew was having a tough time getting their Kinect demos running at Maker Faire 2011. While at the pub, they had the idea of combining forces with Brightarcs' Tesla coils, and produced The Evil Genius Simulator!

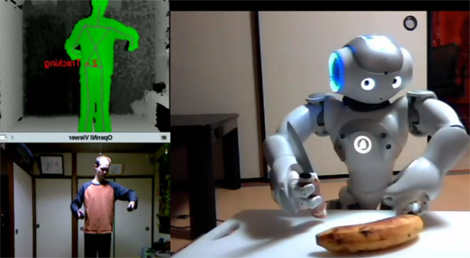

After getting the go-ahead from Brightarcs and the input specs of the coils, they came up with an application in openFrameworks which uses skeletal tracking data to determine hand position. The hand position is scaled between two manually set calibration bars (seen in the video, below). The scaled position then speeds up or slows down a 50Hz WAV file to produce the 50-200Hz sine wave required by each coil. It only took an hour but the results are brilliant; video after the jump.
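For the curious, the hand-to-frequency mapping can be sketched roughly like this. It is only an illustration in Python, not the Hackspace code (which was written in openFrameworks): the function names, the linear mapping, and the clamping at the calibration bars are all our assumptions. Only the 50Hz source file and the 50-200Hz target range come from the description above.

```python
# Hypothetical sketch: map a tracked hand position to a playback-speed
# multiplier for a 50 Hz source tone. Names and the linear mapping are
# assumptions, not taken from the original application.

BASE_HZ = 50.0   # frequency of the source WAV file
MIN_HZ = 50.0    # lowest frequency the coil input should receive
MAX_HZ = 200.0   # highest frequency the coil input should receive


def normalise(hand_y, low_bar, high_bar):
    """Scale hand_y to the range 0..1 between the two calibration bars.

    Positions outside the bars are clamped. The bars may be given in
    either order, so inverted screen coordinates also work.
    """
    if high_bar == low_bar:
        raise ValueError("calibration bars must be at different positions")
    t = (hand_y - low_bar) / (high_bar - low_bar)
    return min(1.0, max(0.0, t))


def playback_speed(hand_y, low_bar, high_bar):
    """Return the speed multiplier to apply to the 50 Hz source file."""
    t = normalise(hand_y, low_bar, high_bar)
    target_hz = MIN_HZ + t * (MAX_HZ - MIN_HZ)
    return target_hz / BASE_HZ
```

With the hand at the lower bar the file plays at normal speed (50Hz), and at the upper bar it plays four times faster (200Hz). Clamping keeps the output inside the range the coils expect even if a hand strays past a bar.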

We've previously featured plenty of stories on the Kinect, and we've seen Tesla coils that respond to music, coils that make music, and even MIDI-controlled coils. It's nice to see it all combined.

Thanks to [Matt Lloyd] for the tip!
