For a few years now I’ve been developing an interactive army of delta robots. This ongoing project is fueled by my desire to control many mechanical extremities like an extension of my body (I’m assuming I’m not the only one who fantasizes about robots here).

Since my army doesn’t have a practical application… other than producing pretty light patterns and making the user feel extremely cool for a minute, I guess you’d call it art. In the past I ran a Kickstarter to fund the production of my art, which I can now happily show at cool events with interesting people, Maker Faire being one of them.

Interactivity and Sprawling Crowds

Last year, for our debut at the big Bay Area Maker Faire, my collaborator, [Mark], and I displayed a smaller sampling of 30 robots for our installation. We also decided to create an interactive aspect for others to experience. After our crowdfunding period ended last March, we had a little over a month for development before the big event, so our options were slim. The easy solution was to jam our delta code into the hand-tracking demo that comes with OpenNI for the Xbox Kinect within Processing. This was cool enough to exhibit, but we hadn’t really anticipated how it would go over in an environment as densely packed as the dark room at Maker Faire.

We should have known better. Both of us were aware that there would be many, many children… all with micro hands to confuse and bewilder the Kinect, but we did it anyway. Our only recourse was to enable the feature that forces the Kinect to track one hand at a time, and only after being waved at in a very particular fashion. After explaining this stipulation to every person who stopped by our booth over the course of the weekend, we decided never to use the Kinect for crowds again; lesson learned.

Delta Robots and DMX

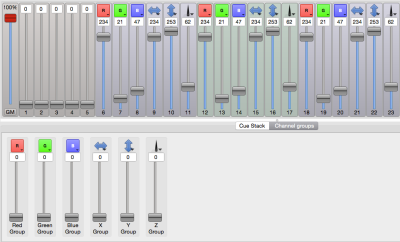

In the year since that experience, we’ve nearly tripled the size of the installation and brainstormed some better demo ideas. As of now, the robots are all individually addressable over an RS485 bus, and we use the DMX protocol over a CAT5 cable to send commands. If you aren’t familiar with it, DMX is used in show production to control stage lighting… and there is a super neat and free application for it called QLC+ that lets you orchestrate the motion and color of many individual light units; perfect for our cause.

Functionally, each of the 84 delta robots in the installation believes that it is a stage light (robots with identity issues). We mapped the X and Y axes of the end effector to the existing pan and tilt values, and the Z axis to the beam focus value. The RGB of the LED mounted in the end effector of each delta maps directly to the RGB value of the stage light.
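To make that mapping concrete, here is a rough Python sketch of how one frame of channel data for the whole installation might be packed. It is not our firmware or anything QLC+ generates: the six-channel layout (pan, tilt, focus, red, green, blue), the back-to-back addressing, and names like `RobotState` and `build_universe` are all assumptions made up for illustration. The only hard fact it leans on is that a DMX512 universe carries up to 512 one-byte channel slots.

```python
from dataclasses import dataclass

DMX_UNIVERSE_SIZE = 512   # a DMX512 universe carries up to 512 one-byte slots
CHANNELS_PER_ROBOT = 6    # ASSUMED layout: pan, tilt, focus, red, green, blue


@dataclass
class RobotState:
    """Hypothetical per-robot state; x, y, z are normalized to 0.0..1.0."""
    x: float  # sent on the "pan" channel
    y: float  # sent on the "tilt" channel
    z: float  # sent on the "beam focus" channel
    r: int    # LED color, 0..255 per component
    g: int
    b: int


def to_byte(value: float) -> int:
    """Scale a normalized value to a DMX byte, clamping out-of-range input."""
    return max(0, min(255, round(value * 255)))


def start_channel(robot_index: int) -> int:
    """Zero-based offset of a robot's first slot, assuming fixtures are packed back to back."""
    return robot_index * CHANNELS_PER_ROBOT


def build_universe(states: list[RobotState]) -> bytearray:
    """Pack every robot's position and color into one universe of channel data."""
    if len(states) * CHANNELS_PER_ROBOT > DMX_UNIVERSE_SIZE:
        raise ValueError("too many robots for a single DMX universe")
    universe = bytearray(DMX_UNIVERSE_SIZE)  # unused slots stay at zero
    for index, state in enumerate(states):
        base = start_channel(index)
        universe[base:base + CHANNELS_PER_ROBOT] = bytes([
            to_byte(state.x),
            to_byte(state.y),
            to_byte(state.z),
            state.r & 0xFF,
            state.g & 0xFF,
            state.b & 0xFF,
        ])
    return universe


if __name__ == "__main__":
    # All 84 robots centered in X, fully raised, glowing teal.
    frame = build_universe([RobotState(0.5, 0.5, 1.0, 0, 200, 180)] * 84)
    print(len(frame), "slots,", 84 * CHANNELS_PER_ROBOT, "in use")
```

Under that assumed layout, 84 robots at six channels each come to 504 slots, so the whole field would fit in a single universe with a few channels to spare.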

Using the sliders in the QLC+ GUI, I could select groups of robots and create presets for position and color. This was great: someone like me, who doesn’t really write a lot of code, could whip up impressive choreography with little sweat. Additionally, the program comes with a nice visualizer, where you can lay out virtual nodes and view your effects as you develop them.

This is the layout of our installation mapped in QLC+. The teal and purple sliders around each light represent pan and tilt (or in our case X and Y):

Lighting control was an interesting solution. Having autonomous robots this year changed how people responded to them, as they were less like an army you’d command and more like a hypnotic field of glowing grass.

[Mark] and I are considering picking up some flex sensors and maybe playing with the Leap or an EEG headset as a means to reintroduce the interactive aspect. Bottom line, I have this cool new toy that I can’t wait to play with over the summer!