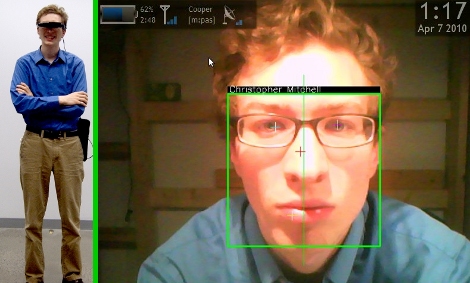

The YayTM is a device that records a person dancing and judges whether or not the dancing is “Good”. If the YayTM likes the dance, it dispenses a dollar for the dancer’s trouble. However, unless the dancer takes the time to read the fine print, they won’t realize that their silly dance is being uploaded to YouTube for the whole world to see. Cobbled together from little more than a PC and a webcam, the box uses facial recognition to track and rate the dancer.

The YayTM was made by [Zach Schwartz], a student at NYU, as a display piece for the school’s Interactive Telecommunications Program. Unfortunately there aren’t any schematics or source code, but to be honest, one of these evil, embarrassing boxes in the world is probably enough. What song does the YayTM provide for dancing, you ask? Well, be sure to check it out here.

EDIT: [Zack] has followed up with an expanded writeup of the YayTM. Be sure to check out his new page with source code and more info. Thanks [Zack]!