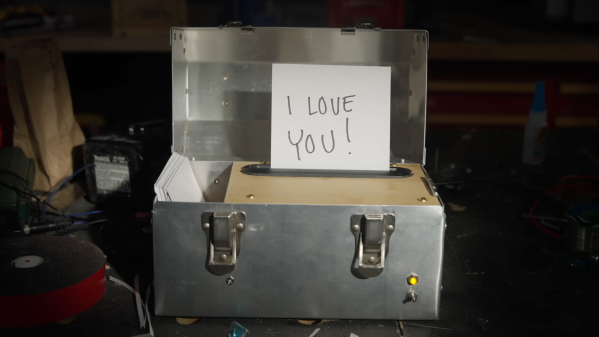

While hackers can deftly navigate their way through circuit diagrams or technical documentation, for many of us, simple social interactions can be a challenge. [Simone Giertz] decided to help us all out by making a device for sharing our feelings.

Like an assignment in Mission: Impossible, this aluminum box can convey your confessions of love (or guilt) and shred them after your partner (or roommate) reads the message. The box houses a small shredder and timer relay under a piece of bamboo salvaged from a computer stand. When the lid is opened, a switch is depressed that starts a delay before the shredder destroys the message. The shredder, timer, and box seem almost made for each other. As [Giertz] says, “Few things are more satisfying than when two things that have nothing to do with each other, perfectly fit.”

While the build is seemingly simple, the attention to detail really sets it apart. The light on the front that indicates a message is present and the hinged compartment for easily cleaning out shredded paper make this a next-level project. Our favorite detail might be the little space on the side to store properly-sized paper and a marker.

While the aluminum box is very industrial chic, we could see this being really cool in a vintage metal lunch box as well. If you’re looking for other ways to integrate feelings and technology, check out how [Jorvon Moss] brings his bots to life or how a bunch of LEDs can be used to express your mood.