Decently useful AI has been around for a little while now, and robotic arms have been around much longer. Yet somehow, we don’t have little robot helpers on our desks yet! Thankfully, [Yifei] is working towards that reality with Tabletop Handybot.

What [Yifei] has developed is a robotic arm that accepts voice commands. The robot relies on a RealSense D435 RGB-D camera, which provides color images along with per-pixel depth information. Grounding DINO is used for object detection on the RGB images. Segment Anything and Open3D are used for further processing of the visual and depth data to help the robot understand what it’s looking at. Meanwhile, voice commands are transcribed by OpenAI Whisper, and the resulting text can be fed as prompts to ChatGPT for further processing.
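To give a feel for why the depth channel matters, here is a minimal sketch of how a 2D detection box plus a depth image could be turned into a 3D point for the arm to reach toward. This is not [Yifei]'s code: the project does its 3D processing with Segment Anything and Open3D, while this stand-in uses plain Python and the standard pinhole camera model. The `Intrinsics` class and the `deproject` and `box_to_point` helpers are hypothetical names, and real intrinsics would come from the camera's calibration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters, in pixels (illustrative, not D435 values)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(u, v, depth_m, k):
    """Map pixel (u, v) at a given depth in meters to camera-frame (x, y, z)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def box_to_point(box, depth, k):
    """Estimate a 3D point for a detection box.

    box   -- (u_min, v_min, u_max, v_max) in pixels, max edges exclusive
    depth -- 2D list of depths in meters, indexed [row][col]; 0 means no reading
    Returns None when the box contains no valid depth samples.
    """
    u0, v0, u1, v1 = box
    samples = [
        depth[v][u]
        for v in range(v0, v1)
        for u in range(u0, u1)
        if depth[v][u] > 0
    ]
    if not samples:
        return None
    # The median is less sensitive than the mean to stray background pixels.
    z = median(samples)
    return deproject((u0 + u1) / 2, (v0 + v1) / 2, z, k)
```

In a setup like this, the box would come from the object detector and the depth rows from the RGB-D camera, with a segmentation mask replacing the crude "every pixel in the box" sampling used here. The result is in the camera's frame, so a further transform would be needed to express it in the arm's coordinates.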

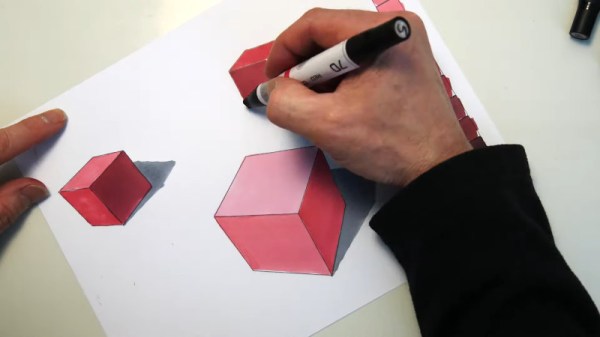

[Yifei] demonstrates his robot picking up markers on command, which is a pretty cool demo. With so many modern AI tools available, we’re getting closer to the ideal of robots that can understand and carry out general spoken instructions. This is a great example. We may not be all the way there yet, but perhaps soon. Video after the break.
