Ever looked at Wolfram Alpha and the development of the Wolfram Language and thought that perhaps Stephen Wolfram was a bit ahead of his time? Well, maybe the times have finally caught up, because Wolfram plus ChatGPT looks like an amazing combo. That link goes to a long blog post from Stephen Wolfram that showcases exactly how and why the two make such a good match, with loads of examples. (If you’d prefer a video discussion, one is embedded below the page break.)

OpenAI’s ChatGPT is a large language model (LLM) neural network or, more conventionally, an AI system capable of conversing in natural language. Thanks to a recently announced plugin system, ChatGPT can now interact with remote APIs and therefore use external resources.

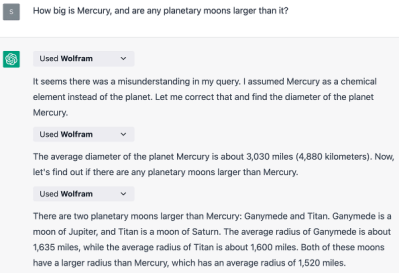

This matters because LLMs are very good at processing natural language and generating plausible-sounding output, but whether that output is factually correct is another matter. It’s not so much that ChatGPT is especially prone to confabulation; it’s more that the nature of an LLM neural network makes it difficult to ask “why exactly did you come up with that answer, and not something else?” In addition, asking ChatGPT to perform nontrivial calculations is a bit of a square-peg-in-a-round-hole situation.

So how does the Wolfram plugin change that? When asked to produce data or perform computations, ChatGPT can now hand the task off to Wolfram Alpha instead of attempting to generate the answer by itself. In this arrangement, each side plays to its strengths. ChatGPT first interprets the user’s question and formulates it as a query. That query is sent to Wolfram Alpha for computation, and ChatGPT then structures its response around whatever comes back. In short, ChatGPT can now ask for help to get data or perform a computation, and it can show the receipts when it does.
