Computers are difficult enough to reason about when there’s just a single thread doing one task. There are dozens of cores in today’s modern processor world, and your program might try to take advantage of using more than just one. Things happening concurrently makes the number of states and interactions explode in to a mess we as humans are likely going to have trouble understanding. So, like [Hillel], you might turn to the computer to try and model those interactions.

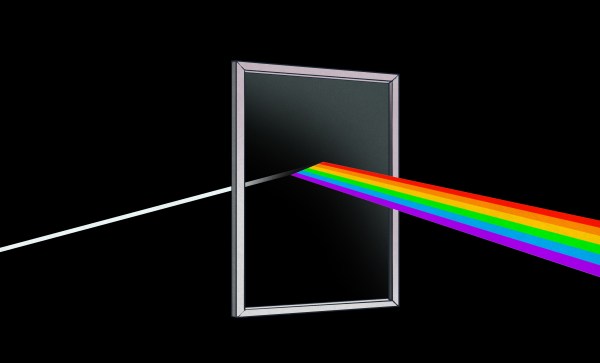

The model in question is a task queue. Things are added to the pile, and “workers” grab one from the pile and process it. There are two metrics used to measure the effectiveness of a task queue: throughput and latency. Throughput is the number of things you can do per second (like this maximum throughput 3d printer), while latency is the amount of time it takes to finish one thing. Continue reading “Reflecting On A Queueing Prism Leads To Unexpected Results”