Whenever we write up a feature on a microcontroller or microcontroller project here on Hackaday, we inevitably get two diametrically opposed opinions in the comments. If the article featured an 8-bit microcontroller, an army of ARMies post that they would do it better, faster, stronger, and using less power on a 32-bit platform. They’re usually right. On the other hand, if the article involved a 32-bit processor or a single-board computer, the 8-bitters come out of the woodwork telling you that they could get the job done with an overclocked ATtiny85 running cycle-counted assembly. And some of you probably can. (We love you all!)

When beginners walk into this briar-patch by asking where to get started, it can be a little bewildering. The Arduino recommendation is pretty easy to make, because there’s a tremendous amount of newbie-friendly material available. And Arduino doesn’t necessarily mean AVR, but when it does, that’s not a bad choice due to the relatively flexible current sourcing and sinking of the part. You’re not going to lose your job by recommending Arduino, and it’s pretty hard to get the smoke out of one.

But these days when someone new to microcontrollers asks what path they should take, I’ve started to answer back with a question: how interested are you in learning about microcontrollers themselves versus learning about making projects that happen to use them? It’s like “blue pill or red pill”: the answer to this question sets a path, and I wouldn’t recommend the same thing to people who answered differently.

For people who just want to get stuff done, a library of easy-to-use firmware and a bunch of examples to crib from are paramount. My guess is that people who answer “get stuff done” are the 90%. And for these folks, I wouldn’t hesitate at all to recommend an Arduino variant, because the community support is excellent, and someone has written an add-on library for nearly every gizmo you’d want to attach. This is well-trodden ground, and it’s very often plug-and-play.

Know Thyself

But the other 10% are in a tough position. If you really want to understand what’s going on with the chip, how the hardware works or at least what it does, and get beyond simply using other people’s libraries, I would claim that the Arduino environment is a speed bump. Or as an old friend of mine — an embedded assembly programmer — would say, “writing in Arduino is like knitting with boxing gloves on.”

His point being that he knows what the chip can do, and just wants to make it do its thing without any unnecessary layers of abstraction getting in the way. After all, the engineers who design these microcontrollers, and the firms that sell them, live and die from building in the hardware functionality that the end-user (engineer) wants, so it’s all there waiting for you. Want to turn the USART peripheral on? You don’t need to instantiate an object of the right class and read up on an API, you just flip the right bits. And they’re specified in the datasheet chapter that you’re going to have to read anyway to make sure you’re not missing anything important.
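
To make “just flip the right bits” concrete, here is a minimal C sketch of enabling the USART on an ATmega328P-class AVR. The register and bit names follow that chip’s datasheet, but here they are plain variables standing in for the real memory-mapped registers (which `<avr/io.h>` would normally provide), so the sketch compiles and can be checked on a PC. The 16 MHz clock and 9600 baud used below are assumptions.

```c
#include <stdint.h>

/* Hypothetical stand-ins for the ATmega328P USART0 registers so this
   sketch compiles on a PC; on real hardware <avr/io.h> provides them. */
static volatile uint8_t UBRR0H, UBRR0L, UCSR0B, UCSR0C;

/* Bit positions as given in the ATmega328P datasheet. */
enum { UCSZ00 = 1, UCSZ01 = 2, TXEN0 = 3, RXEN0 = 4 };

/* Baud divisor for normal (16x) asynchronous mode:
   UBRR = F_CPU / (16 * baud) - 1. Assumes baud <= f_cpu / 16. */
static uint16_t usart_ubrr(uint32_t f_cpu, uint32_t baud)
{
    return (uint16_t)(f_cpu / (16UL * baud) - 1UL);
}

/* "Turn the USART on": load the divisor, enable the receiver and
   transmitter, and select 8 data bits, no parity, 1 stop bit (8N1). */
static void usart_init(uint32_t f_cpu, uint32_t baud)
{
    uint16_t ubrr = usart_ubrr(f_cpu, baud);
    UBRR0H = (uint8_t)(ubrr >> 8);
    UBRR0L = (uint8_t)(ubrr & 0xFFu);
    UCSR0B = (uint8_t)((1u << RXEN0) | (1u << TXEN0));
    UCSR0C = (uint8_t)((1u << UCSZ01) | (1u << UCSZ00));
}
```

With a 16 MHz clock, `usart_init(16000000UL, 9600UL)` loads a divisor of 103 and sets just four bits across two control registers. On real hardware it is still worth checking the datasheet’s baud-rate error table for your clock/baud pairing.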

For the “get it done” crowd, I’m totally happy recommending a simple-to-use environment that hides a lot of the details about how the chip works. That’s what abstractions are for, after all — getting productive without having to understand the internals. All that abstraction is probably going to come with a performance cost, but that’s what more powerful chips are for anyway. For these folks, an easy-to-use environment and a powerful chip is a great fit.

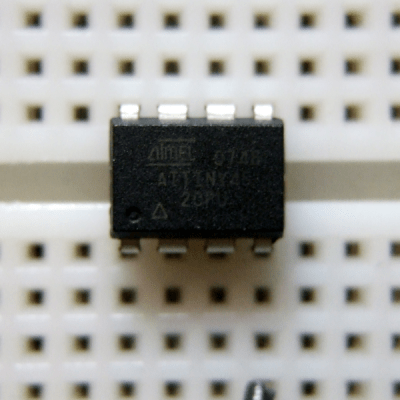

But my advice to the relative newbie who actually wants to learn the chip is the exact opposite. Pick an environment that maximally exposes the way the chip works, and pick a chip that’s not so complicated that it completely overwhelms you. It’s the exact opposite case: I’d recommend a “difficult” but powerful environment and a simple chip.

If you want to become a race-car driver, I wouldn’t start you out on a Formula 1 car, because that’s like learning to pilot a rocket ship. But you do need to understand how a car handles and performs, so you wouldn’t start out in an automatic luxury sedan either. You’d be driving comfortably much sooner, but you’d lose a lot of empathy for the drivetrain and intuition about traction control. Rather, I’d start you out on something perhaps underpowered, but with good feel for the road and a manual transmission. And that, my friends, is straight C on an 8-bitter.

Start Small…

I hear the cries of the ARM fanboys and fangirls already! “8-bit chips are yesterday, and you’re just wasting your time on old silicon.” “The programming APIs for the 8-bit chips are outdated and awkward.” “They don’t even have DMA or my other favorite peripherals.” All true! And that’s the point. It’s what the 8-bitters don’t have that makes them ideal learning platforms.

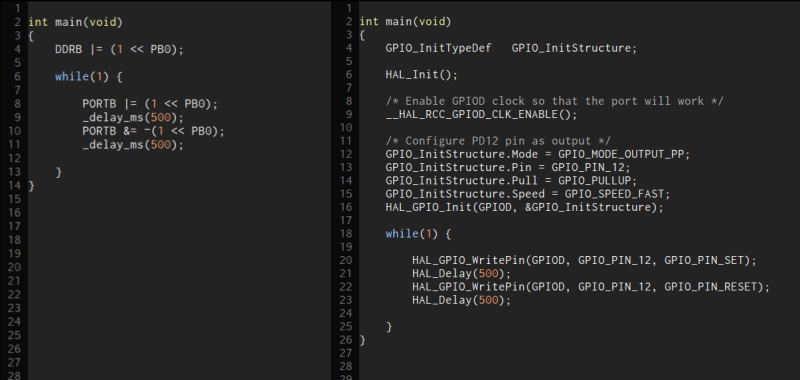

How many of you out there have actually looked at the code that runs when you type HAL_Init() or systinit() or whatever? Even if you have, can you remember everything it does? I’m not saying it’s impossible, but it’s a lot to throw at a beginner just to get the chip up and running. Complex chips are complex, and confronting a beginner with tons of startup code can overwhelm. But glossing over the startup code will frustrate the dig-into-the-IC types.

Or take simple GPIO pin usage. Configuring the GPIO pin direction on an 8-bitter by flipping bits is going to be new to a person just starting out, but learning the way the hardware works under the hood is an important step. I wouldn’t substitute that experience for a more abstract command — partly because it will help you configure many other, less user-friendly, chips in the future. But think about configuring a GPIO pin on an ARM chip with decent peripherals. How many bits do you have to set to fully specify the GPIO? What speed do you want the edges to transition at? With pullup or pulldown? Strong or weak? Did you remember to turn on the GPIO port’s clock?
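
A rough C sketch of that contrast: the AVR side needs one direction bit, while the ARM side, modeled loosely on the STM32F4 GPIO register layout (MODER, OTYPER, OSPEEDR, PUPDR, plus the port clock enable in RCC AHB1ENR), needs several fields per pin. All registers here are hypothetical stand-in variables rather than real hardware addresses, and other ARM vendors lay out their GPIO blocks differently.

```c
#include <stdint.h>

/* Hypothetical stand-ins; on an AVR these are DDRB/PORTB from <avr/io.h>. */
static volatile uint8_t DDRB, PORTB;

/* 8-bit AVR style: one direction bit and one output bit per pin. */
static void avr_led_init(uint8_t pin)
{
    DDRB  |= (uint8_t)(1u << pin);    /* 1 = output */
    PORTB &= (uint8_t)~(1u << pin);   /* start low */
}

/* Simplified model of an STM32F4-style GPIO port (pins 0..15). */
typedef struct {
    uint32_t MODER;    /* 2 bits/pin: 00 in, 01 out, 10 alt fn, 11 analog */
    uint32_t OTYPER;   /* 1 bit/pin:  0 push-pull, 1 open-drain */
    uint32_t OSPEEDR;  /* 2 bits/pin: edge speed, 00 = lowest */
    uint32_t PUPDR;    /* 2 bits/pin: 00 none, 01 pull-up, 10 pull-down */
} gpio_model_t;

static volatile uint32_t RCC_AHB1ENR;     /* stand-in clock-enable register */
static volatile gpio_model_t GPIOA_model; /* stand-in GPIO port A */

static void arm_led_init(uint8_t pin)
{
    RCC_AHB1ENR |= 1u << 0;  /* port clock on, or the writes below do nothing */
    GPIOA_model.MODER   = (GPIOA_model.MODER & ~(3u << (2 * pin))) | (1u << (2 * pin));
    GPIOA_model.OTYPER  &= ~(1u << pin);        /* push-pull */
    GPIOA_model.OSPEEDR &= ~(3u << (2 * pin));  /* slowest edges */
    GPIOA_model.PUPDR   &= ~(3u << (2 * pin));  /* no pull resistor */
}
```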

Don’t get me started on the ARM chips’ timers. They’re plentiful and awesome, but you’d never say that they’re easy to configure.

The advantage of learning to code an AVR or PIC in C is that the chip is “simple” and the abstraction layers are thin. You’re expected to read and write directly to the chip, but at least you’ll know what you’re doing when you do it. The system is small enough that you can keep it all in your mind at once, and coding in C is not streamlined to the point that it won’t teach you something.

And the libraries are there for you too — there’s probably as much library code out there for the AVR in C as there is in Arduino. (This is tautologically true if you count the Arduino libraries themselves, many of which are written in C or C-flavored C++.) Learning how to work with other people’s libraries certainly isn’t as easy as it is in Arduino, where you just pull down a menu. But it’s a transferable skill that’s worth learning, IMO, if you’re the type who wants to get to really know the chips.

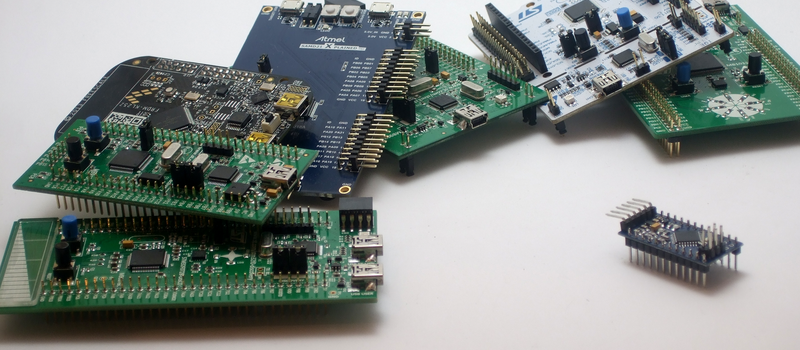

…But Get Big

So start off with the 8-bit chip of your choice: they’re cheap enough that you can buy a stick of 25 for only a tiny bit more than the price of a single Arduino. Or if you’d like to target the 8-bit AVR platform, there are a ton of cheap bare-bones boards from overseas that will save you the soldering and only double the (minimal) cost. Build 25 projects with the same chip until you know something about its limits. You don’t have to achieve lft levels, or even memorize the ghastly “mnemonic” macro names for every bit in every register, but at least get to know what a timer/counter is good for. Along the way, you’ll develop a library of code that you use.
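
As one example of what a timer/counter is good for, here is a hedged C sketch of choosing settings for a periodic interrupt in CTC (clear timer on compare) mode. It assumes the prescaler options of the ATmega328P’s Timer0/Timer1 (1, 8, 64, 256, 1024) and the relation f = f_cpu / (prescaler * (OCR + 1)); the helper `ctc_settings` is hypothetical, not part of any library.

```c
#include <stdint.h>

/* Clock-select prescalers assumed available (ATmega328P Timer0/Timer1). */
static const uint16_t prescalers[] = {1, 8, 64, 256, 1024};

/* Pick the smallest prescaler whose compare value fits in a counter with
   the given maximum (255 for 8-bit, 65535 for 16-bit). Assumes
   f_target > 0. Returns 0 on success, -1 if no prescaler fits. */
static int ctc_settings(uint32_t f_cpu, uint32_t f_target, uint32_t max_count,
                        uint16_t *prescaler, uint32_t *ocr)
{
    for (unsigned i = 0; i < sizeof prescalers / sizeof prescalers[0]; i++) {
        uint32_t ticks = f_cpu / ((uint32_t)prescalers[i] * f_target);
        if (ticks >= 1 && ticks - 1 <= max_count) {
            *prescaler = prescalers[i];
            *ocr = ticks - 1;   /* counter counts 0..OCR, i.e. OCR + 1 ticks */
            return 0;
        }
    }
    return -1;
}
```

For a 1 kHz tick from a 16 MHz clock, an 8-bit timer needs prescaler 64 with a compare value of 249, while a 16-bit timer can run unprescaled with 15999. A 1 Hz tick does not fit an 8-bit timer at any of these prescalers.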

Then move on. Because it is true that the 8-bitters are limited. Along the way, you’ll have picked up some odd habits — I still instinctively cringe at 32-bit floating point multiplications even on a chip with a 32-bit FPU — so you don’t want to stagnate in the 8-bit world too long.
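
The usual 8-bit habit behind that cringe is reaching for fixed-point arithmetic instead of floats. Here is a minimal C sketch of unsigned Q8.8 multiplication (8 integer bits, 8 fractional bits), offered as an illustration of the technique rather than anything the article prescribes; the type and function names are hypothetical.

```c
#include <stdint.h>

/* Unsigned Q8.8: top byte is the integer part, bottom byte is the
   fraction in units of 1/256. */
typedef uint16_t q8_8;

#define Q8_8_ONE 256u

static q8_8 q8_8_from_int(uint8_t x) { return (q8_8)(x << 8); }

/* The 32-bit product of two Q8.8 values has 16 fractional bits, so
   add half an LSB for rounding and shift right by 8. Results of
   256.0 or more overflow and are not handled here. */
static q8_8 q8_8_mul(q8_8 a, q8_8 b)
{
    uint32_t product = (uint32_t)a * b;
    return (q8_8)((product + 128u) >> 8);
}
```

For instance, 3.0 (768 in Q8.8) times 0.5 (128) yields 384, which represents 1.5, using only integer multiplies and shifts.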

Maybe try an RTOS, maybe play around with all the tools you’ve been missing on the smaller chips. Luxuriate in the extra bit depth and speed! Use the higher-level, black-boxy libraries if you feel like it, because you’ll know enough about the common ways that all these chips work inside to read through the libraries if you want to. If you started out the other way, it would all be gibberish — there’s not a digitalWrite() in sight!

After a few projects with the big chips, you’ll have a good feel for where the real boundaries between the 8- and 32-bit chips lie. And despite what our comments would have you believe, that’s not something that anyone can tell you, because it depends as much on you as on the project at hand. Most of us are both blue-pill and red-pill microcontroller users from time to time, and everyone has different thresholds for what projects need what microcontroller. But once you’ve gained even a bit of mastery over the tools, you’ll know how to choose for yourself.

My advice to the 10%ers is to look up an old kit with a PIC16F84A. Get one that teaches assembly and do one decent project in asm/RISC etc. Once you have beaten yourself up with it and know how, start learning to do the same projects in C. There are tons of old-time kits teaching this for about $100. Once you know this stuff, Microchip’s thousands of chips become a blessing, not a curse. If you go this route, ’duino code is a bit obnoxious to work with.

Silicon Chip (http://siliconchip.com.au/) and Elektor Publications are a must, in addition to 16F84 resources (as per addidas’ comment), in order to really understand the details. I’m surprised Hackaday doesn’t collaborate with these publications; it would be of joint benefit if it did… There’s a company, Altronics (altronics.com.au), that does an insanely good job with technical projects! Jaycar too (jaycar.com.au) and Freetronics (freetronics.com.au).

On another note, I find there are two types of programmers – those who come from an electronics background (and prefer lower-level programming with cost-effective, built-for-purpose 8-bit chips – where selecting the best chip is a skill) and those who come from a computer science background and favor the richness of higher-level languages, which almost always require 32-bit boards.

Agree on Elektor. I think my first kit was a “Talking Electronics” kit: http://talkingelectronics.com/ I was mighty disappointed at first because I had to fix my programmer to do ICSP, but then I realized that to be unhappy with the programmer, I first had to understand what I was doing, and now I love them for what they taught me.

Also, to be fair, many of us started with 8-bit given that 32-bit dev boards with a screen used to cost $5000 only 10 years ago. You get a lot more bang for the buck these days, given that smartphones have driven down device/part costs.

In an ideal world we’d develop in 32/64 bit high-level first and then optimize for the application the closer to production we get (that includes using FPGAs/ASICs or 8/16 bit micro-controllers) or with subsequent product releases.

I also started on the PIC16F84A! It’s hard to imagine a simpler microcontroller. Great for beginners, especially if they want to experience a variety of architectures.

I wouldn’t recommend the 16F84 to _anyone_, regardless of what they want to achieve. Yes, I have driven 16F84 chips within an inch of their lives with literally only a few bytes of code space to spare – no, there is no conceivable circumstance where I could possibly be persuaded to use one for anything today. There is such a thing as overly simple and clunky, and the 16F84 certainly is so by any standard relevant today: there simply aren’t any of the peripherals in it that make an 8-bit chip usable (and useful!) today, and as soon as you want to do more than blink an LED you’ll start being limited by the 1K of flash. It’s like teaching a future F1 driver how to double-declutch – it’s obsolete and useless knowledge, irrelevant to anything they’ll ever do as an F1 driver. Or, putting it differently, the 16F84 is the equivalent of obfuscation contests and/or the 2048-byte compo category of the demoscene – it’s utterly irrelevant to learning to program in any context unless your ambition is to compete within that specific, artificial challenge. Yes, if you do it well, it does showcase your mad skillz. No, it’s absolutely not required in any sense to _acquire_ those mad skillz. You can learn the same just by bare-metal programming any 8-bit _OR_ 32-bit chip in a properly efficient way (as opposed to Arduinos, which throw away 95% of your MCU’s performance and hardware features right off the bat). Learning how to add a software USB peripheral to an MCU that doesn’t have a hardware one may teach you about USB, but it’s just wasting your time unless you cared to learn about USB in depth: you really should just use a chip that has one if you wanted to use USB. Learning how to generate jitter-free stepper control pulses using all the built-in peripherals to help you do it, on the other hand, is actually a useful achievement.

If you want to learn to write RISC assembly, it’s perfect. Put on a few buttons and a few LEDs, bit-bang out some PWM, and software-debounce the buttons. Between that and chip setup, you have a fine project. It’s perfect for learning how to write inline asm later for timing-critical things.
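
The software-debounce and bit-banged PWM parts of that exercise look roughly like this in C. The comment describes doing it in assembly, so treat this as a hedged C translation of the idea; it assumes both functions are called from a fixed-rate timer tick, and the debounce length of 5 ticks is an arbitrary choice.

```c
#include <stdbool.h>
#include <stdint.h>

/* Counter-based debounce: the reported state changes only after the raw
   input has disagreed with it for DEBOUNCE_TICKS consecutive calls. */
#define DEBOUNCE_TICKS 5

typedef struct {
    bool state;     /* debounced level */
    uint8_t count;  /* consecutive samples disagreeing with state */
} debounce_t;

static bool debounce_update(debounce_t *d, bool raw)
{
    if (raw == d->state) {
        d->count = 0;                     /* agreement resets the run */
    } else if (++d->count >= DEBOUNCE_TICKS) {
        d->state = raw;                   /* stable long enough: accept */
        d->count = 0;
    }
    return d->state;
}

/* Bit-banged PWM: an 8-bit phase counter advanced once per tick; the
   output is high while the phase is below duty (0..255). */
static bool soft_pwm_tick(uint8_t *phase, uint8_t duty)
{
    bool out = *phase < duty;
    (*phase)++;   /* wraps 255 -> 0, giving a 256-tick period */
    return out;
}
```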

I’m with the 16f84a + assembly route all the way.

Screw that ugly abomination: the sluggish speed of it, the butt-ugly page flipping, its registers, all of it. Just nail it to the wall, and better to play with a simulator of a Z80 or i8080 for a classic von Neumann architecture, or take a venerable 2313 or any other AVR for modified Harvard, and enjoy a much more logical and streamlined architecture. I still hate that chip from the time it was taught at university.

Very well written. I followed AVR then ARM path, and currently enjoying FPGAs. Completely agree with the author. AVR butterfly is a good start, and later for ARM, I’d certainly suggest ST, mainly because of the support from the company.

Enjoying FPGAs? Talk about an accessibility barrier! Every time I’ve tried to crack open a Verilog or VHDL tutorial, or even a Xilinx ISE tutorial, I’ll end up saying “eff this”, due to frustration from the ridiculous complexity of doing anything on FPGAs :\

I’ve had two polar experiences with FPGAs: all within the context of the same class.

1) It’s really cool to do the low-level gates and flip-flops stuff.

2) Pretty shortly thereafter, you toss in a soft CPU and link in tons of IP cores and it becomes even plug-and-player than using an Arduino.

FPGA pros do all the work that’s between these two extremes, I assume.

(But yeah, the FPGA flows are a pain to get through. It’s a one time cost. And see Al Williams’ FPGA tutorials here on HaD.)

I found the cheapest FPGA route is to buy a bare-bones Altera breakout board (~$20) from Waveshare and get a USB Blaster from eBay (~$4). Install a slightly older version of Quartus II (14.1), and then you’re good to go. They provide you with schematics and lots of demo projects. For more: http://www.waveshare.com/product/fpga-tools/altera/core/coreep4ce6.htm

@[Andrew Pullin]

I tried to start with a CPLD kit from Vantis for the MACH2 chips decades ago. I couldn’t register the software because I had no internet connection, so I gave up and threw it in the bin. If Vantis still existed the daggers would be out. It was such a negative experience that I will never forget and I will never forgive. And I am not surprised that they’re dead now, because you can’t fuc( with end users forever – alternatives evolve.

Unfortunately the assholes that run these companies have never heard of user experience and spend so much time worrying about their IP that it’s still a pain to deal with them. Not to worry – new open source tool chains are evolving, so one day very soon we can say to them – fuc( you and your registration process. If you have ever had a PC crash and had to reload it, then you will totally understand me!

But for now we still need to put up with third world software and the sooner you get over this hurdle the better.

Go through the process – register with the web site for the download – download – install – register somewhere else to get a registration key – try to register again – register to open a support ticket to work out which of the 6 gazillion far(en registration details that you have to obtain over the process is actually the registration key for the far(en software.

Install the software – find out it’s the wrong version for the chips you’re using – start over.

Spend a great deal of time wishing there was some competition, and thinking about how fast you’re going to throw this all in the bin when there is.

After installing the correct software for the chip – find out that your programmer won’t work with the software – look for a version that is compatible for both – find that you have to split the software and manipulate it so that you can run one version of the IDE and another version of the programming software. This step takes about 3 days on youtube.

Alternatively, just get the Altera package, as it’s the least bad of the IDEs / ISEs.

Go to eBay and buy a $15 – $30 CPLD development board and a $6 programmer (USB Blaster).

OK, right now I can hear all those screaming about how bad a choice a CPLD is as far as bang for buck goes, so here’s the question… Do you want a CPLD that has a 20-page datasheet or an FPGA with a 600-page datasheet? If something isn’t working and you want to work out why… well, good luck with that 600-page datasheet.

Now when you have learnt the basics and know how to choose what you want go buy something more expensive.

Once you get over the software setup and find some good tutorials you are on your way.

Spend plenty of time looking for tutorials: web / text and youtube so that you find something that is at your level.

One other thing – if you’re doing VHDL (perhaps Verilog is supported too), then download a trial version of Sigasi. I can’t afford a full version, but it is an excellent learning tool. It’s a code editor with the usual features like syntax highlighting and code completion, BUT it also alerts you to errors IMMEDIATELY, and I can’t overstate how useful that is, as the error messages that come from the IDEs (compilers) are totally cryptic.

Don’t get me started on the error messages and warnings – oh god please don’t ….

Have you ever tried to play with Papilio? I found the long tutorial provided on their website quite accessible. I’ve also been able to program a custom clock generator by myself after I bricked an ATMega32 playing with fuses, and I found it was not so difficult.

I really have to throw in the MSP430s into the ring here as well. I mean, after all, they’re 16-bits, so it’s a good midpoint, right? Right??

I really just find the basic MSP430 instruction set a heckuva lot cleaner than PICs or AVRs, and it’s nowhere near as complex as things need to get for 32-bit guys like ARMs.

To me it doesn’t even feel like a preference thing – it feels pretty fundamental. 256 bytes is pretty unusable for all but the easiest microcontroller projects, but 64k is a good amount of code, and 4 GB is obviously stupid-overkill. And although 8-bit processors obviously have ways of addressing much more than 256 bytes, they all have to jump through *some* hoops to do it – either through the X/Y/Z register pairs in AVR, or bank selects on a PIC.

On an MSP430 there’s no trick: 16-bit register, 16-bit address space. No tricks. (This is the same reason why I’m not fond of the extended memory bits in an MSPX, and typically work with small code/data models unless I really need to).

And I have to say the MSP430 FRAM-based microcontrollers are just really, really nice. Being able to use *huge* amounts of FRAM as a buffer just like you would RAM is fantastic. 2 kB transmit buffer? Sure, why not? No big deal.

Add to that the fact that LaunchPads are dirt cheap… they’re really, really nice.

I totally wish I had more experience with the MSP430s because the claim that you’re not quite making — “16 bits should be enough for anybody” — is actually pretty close to correct. 8 bits is often too little resolution for many sensors, for instance, and 32 is surely overkill. 16 is a sweet spot.

But consider the learning experience of overflowing (on purpose or otherwise) your 8-bit counter variables. Consider the value of having to think about what data types you’re actually going to use. It’s a pain in the ass, sure, but it’s educational. I’m not sure I’d have learned the same lessons on a 16-bit machine.
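
A short C sketch of the overflow lesson, plus the standard fix: subtract tick values in unsigned arithmetic instead of comparing them directly. The subtraction stays correct across the wrap as long as the interval being measured is shorter than the counter’s period. The function names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

/* An 8-bit tick counter wraps from 255 back to 0. Truncating the
   difference back to 8 bits gives the true elapsed count modulo 256,
   so it is correct for any interval shorter than 256 ticks. */
static uint8_t ticks_elapsed(uint8_t now, uint8_t start)
{
    return (uint8_t)(now - start);
}

/* Wrap-safe timeout check; comparing now >= start + timeout directly
   would fail whenever the counter wraps in between. */
static bool timeout_expired(uint8_t now, uint8_t start, uint8_t timeout)
{
    return ticks_elapsed(now, start) >= timeout;
}
```

With `start = 250` and `now = 4`, the counter has wrapped, yet `ticks_elapsed(4, 250)` still returns 10.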

The compiler hides a lot of the details that bug you about the 8-bit memory addressing, though, so that’s a lesson that won’t get learned until one digs into assembler. But that’s for much further down the road?

You do realize those LaunchPads are like, $10, right? :)

“But consider the learning experience of overflowing (on purpose or otherwise) your 8-bit counter variables. ”

It’s not a limitation! It’s a learning experience! :) But I’m not sure I’d agree here. 16-bit counters still overflow quickly: a 30 Hz system tick overflows in about an hour, which made me whack myself in the face when the uC started going crazy after I left it run for a bit. 32-bits are where you can get in trouble pretending they last forever.
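
The wrap time for an n-bit counter ticking at f Hz is 2^n / f seconds. A small C helper makes the comparison easy; for a 30 Hz tick it gives about 8.5 seconds for 8 bits, about 36 minutes for 16 bits, and roughly 4.5 years for 32 bits.

```c
#include <stdint.h>

/* Seconds until an unsigned counter of the given width wraps when it is
   incremented at tick_hz. Valid for bits < 64. */
static double wrap_seconds(unsigned bits, double tick_hz)
{
    return (double)(UINT64_C(1) << bits) / tick_hz;
}
```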

“The compiler hides a lot of the details that bug you about the 8-bit memory addressing, though, so that’s a lesson that won’t get learned until one digs into assembler. ”

Exactly, which is why I like them. Programming in C is easy, and debugging the assembly is pretty straightforward too. And in fact, switching to assembly-written stuff is pretty easy, too, which can get you a huge performance boost, especially in ISRs. Looking at the Arduino ISRs in assembly always makes me cringe.

And unfortunately, debugging is sadly important, because microcontroller compilers, in general, suck. As evidenced by the inlined assembly in Arduino’s SoftwareSerial library due to a buggy GCC version in OSX. And that’s not even for performance reasons!

“You do realize those LaunchPads are like, $10, right? :)”

It’s worse than that — they’re free to me b/c I have one sitting in the closet right now. Sadly, it’s the time. I’ll use an MSP on my next non-time-constrained project, ok?

“a 30 Hz system tick overflows in about an hour, which made me whack myself in the face when the uC started going crazy after I left it run for a bit.”

An 8-bit counter at 30Hz overflows before you’ve finished your coffee. If you started out on 32 bits, you wouldn’t notice the bug until it was in the customers’ hands. :)

Seriously, though, many of the embedded gotchas are the same no matter how many bits you’ve got. You just run into them all the time with an 8-bitter. (And I’ll claim that it’s good to hit them first in a simpler context.)

Re: debugging. If I could pick a super power: I wish I were a better debugger.

hey you know you can hook up gdb to an ARM, right? ;)

Don’t forget that starting out with the MSP430 will allow you to easily take different paths, depending on your needs. You could use Code Composer Studio and learn bare metal C and/or assembly if you want, or you can use Energia and take advantage of the Arduino community. Need more CPU power? Step up to the MSP432 (They are on sale at the TI store for $4.32 right now in celebration of Engineers Week). You get the easy to understand MSP peripherals and a full 32-bit ARM CPU under the hood. The best of both worlds. With that you can experiment with TI-RTOS if you like. The Launchpad ecosystem in combination with Code Composer Studio is probably the best debugging environment you can find out there, and their documentation and support is really quite good. You can’t go wrong.

I already mentioned the FRAM-based microcontrollers, but honestly, they’re so ridiculously awesome I have to mention them again, because *no one else* has anything close to them.

I mean, you can get an MSP430FR6989 LaunchPad for $15. Want to have a double-image buffer for a 32x32x32 LED cube, so you can run things at their max speed and not even worry about image tearing or anything? No problem! That’s only 64k. Still have 64k for the program, and a full 2K of RAM free. On a microcontroller level, it’s just unheard of.
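
The arithmetic behind that claim, as a C sketch: assuming one byte per LED, a 32x32x32 frame is 32,768 bytes and two frames are 65,536 bytes, which leaves half of the MSP430FR6989’s 128 KB of FRAM for code. The double-buffering logic below is generic and the names are hypothetical; on a real MSP430 you would also need your toolchain’s mechanism for placing the arrays in FRAM, which is not shown.

```c
#include <stdint.h>
#include <string.h>

/* Assumption: one byte (e.g. a brightness level) per LED. */
#define CUBE_DIM   32u
#define FRAME_SIZE (CUBE_DIM * CUBE_DIM * CUBE_DIM)   /* 32,768 bytes */

/* Two frames, 65,536 bytes in total. */
static uint8_t frames[2][FRAME_SIZE];
static uint8_t front = 0;   /* index of the frame being displayed */

static const uint8_t *front_buffer(void) { return frames[front]; }
static uint8_t *back_buffer(void) { return frames[front ^ 1u]; }

/* Drawing goes into the back buffer while the display reads the front. */
static void clear_back_buffer(uint8_t level)
{
    memset(back_buffer(), level, FRAME_SIZE);
}

/* Call once the back buffer is completely drawn. */
static void swap_buffers(void) { front ^= 1u; }
```

In a real display loop, the swap would typically happen between refresh passes (for example, with the display interrupt briefly disabled) so the refresh never reads a half-switched frame.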

I was recommended the MSP432 as a beginner a few months ago but only now realising what a good choice it is because of the things you’ve listed. There’s bare C and assembly, there’s a Driver Lib, there’s Energia to prototype and finally the RTOS. That’s a lot of bases covered for one little cheap launchpad.

8/16/32 bits is the width of the data bus, not the address bus.

The data bus typically determines the size of the registers. If the data bus is smaller than the address bus, you’ll need to use *some* sort of hoop-jumping to indirectly access data (via pointers), which is what I said. On AVR this is through X/Y/Z register pairs, on PIC this is through bank switching, etc. All of that costs performance (not that big a deal) but also makes it a fair amount harder to learn to debug what’s going on in the assembly.
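
To illustrate the register-pair point: on the AVR, the pointer registers X, Y, and Z are the pairs r27:r26, r29:r28, and r31:r30, each holding a 16-bit address as two 8-bit halves. The C model below only shows that split-and-join arithmetic; in real C code the compiler manages the pairs for you, and the function names are hypothetical.

```c
#include <stdint.h>

/* Join a high/low register pair (e.g. XH:XL) into one 16-bit address. */
static uint16_t pair_to_address(uint8_t high, uint8_t low)
{
    return (uint16_t)(((uint16_t)high << 8) | low);
}

/* Split a 16-bit address back into the two 8-bit registers of a pair. */
static void address_to_pair(uint16_t addr, uint8_t *high, uint8_t *low)
{
    *high = (uint8_t)(addr >> 8);
    *low  = (uint8_t)(addr & 0xFFu);
}
```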

That’s why I said 16 bits is kind of a sweet spot. You can have the address bus be equal to the data bus and still be useful, and the assembly is more approachable. There aren’t special register pairs, there isn’t a bank register you need to make sure didn’t get messed with, etc.

The bitness of a computer is its processing width at the assembly level.

Which is a bit of a mouthful.

For example, an 8088 is a 16-bit processor, but it has an 8-bit data bus. That’s because it fully supports 16-bit operations on its registers.

The Z80, 6809 and 6502 are all 8-bit CPUs, because they fully support ALU operations only on 8-bit values. This is true even though the Z80 and 6809 have some support (add/sub/inc/dec/load/store) for 16-bit data values.

The PowerPC G4 and Pentium CPUs were 32-bit processors. This was true despite their having 64-bit data buses, because they only fully supported up to 32-bit operations in their instruction sets.
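
A C sketch of what “fully support ALU operations only on 8-bit values” means in practice: on an 8-bit ALU like the 6502’s, a 16-bit add is done as a low-byte add followed by a high-byte add that includes the carry. The function below emulates that using 8-bit variables only; it is illustrative, not how you would write a 16-bit add in C.

```c
#include <stdint.h>

/* 16-bit addition built from 8-bit adds plus carry propagation. */
static uint16_t add16_via_8bit(uint16_t a, uint16_t b)
{
    uint8_t a_lo = (uint8_t)a, a_hi = (uint8_t)(a >> 8);
    uint8_t b_lo = (uint8_t)b, b_hi = (uint8_t)(b >> 8);

    uint8_t lo = (uint8_t)(a_lo + b_lo);      /* low-byte add */
    uint8_t carry = lo < a_lo;                /* result wrapped => carry out */
    uint8_t hi = (uint8_t)(a_hi + b_hi + carry);  /* add-with-carry */

    return (uint16_t)(((uint16_t)hi << 8) | lo);
}
```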

I hope this helps.

I wanted a dirt cheap micro controller for a simple project that required some clock timing (seconds/mins/days timing). Ended up with the bottom of the range MSP430G2201 as a perfect candidate. Very simple, no extra unnecessary hardware. Read through the documentation and knew the entire chip inside and out within a day. It’s also a battery powered project so I wanted the lowest power draw possible. Not having to write 30 lines of code to disable all the extra crap was great.
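
For that kind of seconds/minutes/days timekeeping, the software side can be as small as the following C sketch. It assumes a timer interrupt already fires once per second (for example, a timer clocked from a 32,768 Hz watch crystal and divided down), and the struct and function names are hypothetical.

```c
#include <stdint.h>

typedef struct {
    uint8_t  seconds;
    uint8_t  minutes;
    uint8_t  hours;
    uint16_t days;
} soft_clock_t;

/* Call once per second, e.g. from the timer ISR; each field rolls
   over into the next one up. */
static void clock_tick(volatile soft_clock_t *c)
{
    if (++c->seconds < 60) return;
    c->seconds = 0;
    if (++c->minutes < 60) return;
    c->minutes = 0;
    if (++c->hours < 24) return;
    c->hours = 0;
    c->days++;
}
```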

When you’re making a $10 product, even in small volumes, paying $2 for a 32bit ARM cpu when you don’t need it instead of a 30c 8/16bit micro is silly. Spending twice as long fitting your code onto a $2 PIC instead of a $5 ARM for a one-off project on the other hand, is equally silly. They all have their place.

About the MSP430s, I have to agree with you. I’ve never actually tried the Texas Instruments chip, but it’s true that memory tricks are just annoying on 8-bit, and writing addresses in assembly is annoying on 32-bit (0x103A5634 is just one number).

When I first heard about FRAM, I thought it would take the embedded world by storm. It looks and sounds awesome.

I’ll try it ASAP, but I’m still sitting on a stack of AVRs, so I’m still using those for now.

I’ve always wanted to learn how to program microcontrollers, but I procrastinated for the longest time. But I finally started teaching myself C, because I’m the type that likes to know what’s going on under the hood. It’s fun. I picked up a FRAM-based TI development board because it was pretty cheap. I stressed for a bit about which boards (AVR, TI, ARM, etc.) I should start with, but I ended up getting the TI because of the price. I’m super excited to start using it once I get a better understanding of C. Your comment got me even more pumped!

I’ve learned a ton about microcontrollers by focusing on the ATtiny platform over the last few years. 200 page datasheets are great! Seems like the STM32F0 is a good next step.

STM32F0 is an excellent next step! It comes with a slight learning curve, but the DMA alone is worth the effort.

STM32F0 are great, mainly because of the bang for the buck. It’s a full-fledged 32-bit controller at 50 cents in bulk. When I design products with some simple function, I’ll definitely use these (as a matter of fact: I already have).

However, I would say that the learning curve for the F0 is about the same as for the F1-series. The upside of F0 is, as mentioned, the price. Get an stm32-nucleo. They’re about 10 bucks for the cheaper ones.

Another plus is the “snippets” library for the F0/L0 processors. They have some nice bare metal examples utilizing just about every peripheral. Also, you get a free, unlimited Keil license.

F0 and L0 series are great. I got an L0 Nucleo board with a couple of add-ons from ST for free at one of their hands-on workshops. I do appreciate their effort, especially for enthusiasts like us. Here is the link; see if you can find a location near you: http://www.st.com/web/en/seminar/stm32l4

STM32s are awesome; you can use the free IDE (Atollic TrueSTUDIO). I think you will love the STs.

plus the Nucleo comes with the programmer on board :)

STM32’s are easily programmed using MBED and also the Arduino IDE (for some STM32 devices).

The bang/buck is hard to beat when the development boards cost less than $5 for a 72 MHz device with 64k flash and 20k RAM (F103). (Note: GD32s are even cheaper and also run faster.)

Asm used to scare me, but I have been learning on a chip with a nice register structure (and even so, some of the instructions feel like calling Arduino functions, like moving a byte by just telling it what and where). I want to know how the instruction executes, but that’s where I start reaching my limits… Porting asm is where it sucks, or fairly large projects, say when you have to maintain an older project and some dirty hacks aren’t documented. The embedded world is pretty sweet though; best not to think too much about where or what to do and just get into some chip(s). The toolchains are the real magic, too.

My current microprocessor course is based on a Freescale 68HC12 (Dragon12 dev kit). I think it’s a pretty okay platform for teaching computer architecture. It’s a hard von Neumann architecture, and the processor itself only has a handful of registers (the D register, two index registers, SP, PC, etc.). In my opinion it’s a good platform for learning assembly if you can get past the shit documentation, though to be fair I haven’t dug that deep into any other platform like I have the HC12.

I’d argue both, when it’s right. Getting something done is indeed different from learning the nitty-gritty. (Disclaimer: I cut my teeth in the days of the 8008… so YMMV.) I’d also argue that if you want to learn the assembly-level stuff, you can learn it in small bites with the lowly Arduino platform (and have a lot of help available, because it’s really just a dev board for the CPU that’s on the board). It’s not that difficult to write inline assembly in C/C++ (let’s remember that the Arduino IDE is really just a dev environment, for a particular board, for C/C++ that has access to a huge number of libraries). If I need to do synchronous PWM SCR light dimming, assembly; if I want to converse with an I2C temperature sensor, call the library. But don’t attempt the former on day one, or two, or maybe even three. ;)

You can also program an Arduino board with plain C (I’ve done it with PIC32- and AVR-based boards).

Yeah, and probably inline assembly. And lay out your own board and make a custom programmer, because only lazy noobs use a board safely designed with the necessary parts. You can make a project as hard or as easy as you want; the value in Arduino/Raspi is that it makes it easy for others to build and extend your project (or critique it, this is Hackaday after all ;p ) and lets you build other people’s projects real quick. It’s great.

That looks to me like the best way to learn stuff. First start with simple projects in Arduino, using somebody else’s libs and the built-in ones. Then you realize you need something more and start writing your own libs using datasheets and a logic analyzer. Later you need to solve some time-critical part, and you can use inline assembly. IMHO that path is the best because it starts with simpler things, and those who are willing to learn and explore will… learn and explore.

But if someone without any experience takes a bare microcontroller, a datasheet and assembly language, I believe he will give up very quickly.

Whatever you pick, make sure it’s supported by gcc, and do everything from either the commandline or a generic IDE (Eclipse?). Whether you start in assembler or C, a free toolchain that you can learn once for many chips is worth its weight in gold.

I don’t agree. As with programming languages, the more compilers and IDEs you get exposed to, the better you are able to make informed decisions about which tools are best for a particular job.

I do agree. When you’re out of the experimentation/learning stage and want to put your abilities to work, the best compiler and IDE is the one you know by heart, no matter what it is. So pick one that will translate to as many platforms as possible.

I enjoy programming on 8-bitters. Why? You can pretty much memorise how to set up all the peripherals and how they work. Of course they are limited, but most microcontroller projects are limited as well. For hobbyists, there are fewer and fewer reasons to pick a beefy microcontroller for your task: the market is becoming flooded with really inexpensive hardware for interfacing to the real world at various levels of power, realtime-ness and abstraction. Five years ago, you might have used a very large ATmega and an Ethernet networking chip for your home automation project, and written a ton of software for it: web server, display, control, etc. Today, you might just use a whole technology stack consisting of cheap microcontrollers for the realtime parts, an ESP8266 for communication and the web server, and a Raspberry Pi for display, logging, and control. It’s easier to program, since each platform’s abstractions are closer to what’s easy to achieve on that specific platform. It’s also more flexible. Cost-wise, if you’re not doing a commercial product, it makes almost no difference.

So, what’s the deal then? I’d recommend that beginners learn the most important stuff about microcontrollers first: interacting with the world outside the machine. That means bit-banging, UART, I2C, SPI, ADC (and, where applicable, DAC + filtering), PWM, timers. Storing data in EEPROM. Reading sensors. All this stuff. So for beginners, I’d recommend a microcontroller that’s easy to get and has a sensible infrastructure for handling these peripherals. I like the MSP430, but AVRs or PICs are also fine. The MSP430 has a really nice IDE if you can bear the limitations; the AVR has an established open-source toolchain with very inexpensive programmers and the advantage of having ONE datasheet for your chip of choice that explains everything. The PIC offers choice, and its IDE is nice as well, but many things are very awkward with PICs. Silicon Labs has some extremely nice and very inexpensive 8051s, but I don’t have any experience with them yet.
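Bit-banging heads that list, and a UART frame is a good first exercise. As a rough sketch (not tied to any particular chip; `uart_8n1_frame` is a hypothetical helper name), this builds the sequence of line levels for one 8N1 frame: a low start bit, eight data bits least-significant first, and a high stop bit. On real hardware each level would be written to a GPIO pin and held for one bit time, about 104 µs at 9600 baud.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Line levels (0 = low, 1 = high) for one 8N1 UART frame, in transmit order.
// Hypothetical helper: on a real MCU each entry would be written to a GPIO
// pin and held for one bit time (1 / baud seconds).
std::array<uint8_t, 10> uart_8n1_frame(uint8_t data) {
    std::array<uint8_t, 10> bits{};
    bits[0] = 0;                          // start bit: line pulled low
    for (int i = 0; i < 8; ++i) {
        bits[1 + i] = (data >> i) & 1u;   // data bits, LSB first
    }
    bits[9] = 1;                          // stop bit: line back to idle high
    return bits;
}
```

For `'A'` (0x41) the frame is 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, which is exactly what a logic analyzer on the TX pin should show.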

When you’ve learnt how to interface with external components, the choice of microcontroller for your project should mainly depend on what you want to accomplish, what you have around and what’s the best tradeoff between price and hassle. I’m not especially fond of Atmel’s ARMs, as I find their documentation and overall support quite lacking. Other manufacturers have done a much better job of supporting people who start out with their MCUs.

Totally different from all the rest, Cypress offers the PSoC, which is extremely flexible, very well-documented and a lot of fun to play around with. They have perfected abstraction: it almost doesn’t matter whether you use their 8-bit 8051s or their 32-bit ARMs for your project.

This. I like AVRs because they are so simple. I do complicated stuff at work all day; if I am going to be programming at home, I want it to be relaxing. Experience with bare metal programming is awesome! Pretty much the only thing that my 8 bit AVRs don’t do that I would like them to do is DMA.

Of course, it depends on what projects you are working on… and I have used beefier 32 bit MCUs when needed, but I just like the simplicity of 8 bit AVRs. The cost may not be better (and in some cases is actually worse), but the personal enjoyment makes up for it.

YMMV, of course.

> Pretty much the only thing that my 8 bit AVRs don’t do that I would like them to do is DMA.

I would like to introduce you to the XMega :) Sad that this microcontroller is overlooked; it would have been in everything if it had been introduced 2 years earlier.

Nice article, makes me want to learn ARM after years on 8-bit PIC. Thanks!

I started with the ATmega168 (before Arduino even existed) and used it for a couple of years, also going through some ATtinys. I’ve always wanted to know what goes on behind the scenes, so I never really liked Arduino (and yes, I have used it). Then I moved on to STM32 as preparation for a summer job, and I haven’t looked back since. I love their cheap dev boards (about 15 bucks for an STM32F4-Discovery? Including a full-fledged ARM programmer? Hell yeah!). The only thing I don’t like now is that their new HAL library is too messy. I prefer their older std-periph (Standard Peripheral Library).

I also tried out some other things in between, like some PIC and LPC1313 (which must be one of the worst chips I ever worked with).

Start small and get big? :^) …

That’s nice advice. It could be turned into a childish joke, but it’s very good advice for a lot of things.

Alternatively: learn to walk before you run.

Two frogs left their pond, because it was drying out. Wandering across meadows, they came upon a deep well with steep walls. First frog said: “That’s it! This is the solution for our problems – this pond is so deep and shadowy, it will never dry out. Let’s jump in!” “Wait!” – the other replied, “How will we get out of it if it DOES dry out?”

When you commit yourself to a certain architecture, it is hard to port your work to another one if you didn’t anticipate it in advance. But if you do think about it at the start, you’ll write suboptimal code and you will still have nasty surprises when you try to migrate, regardless. So, in the end, it means you’ll have to compensate for those performance losses by reserving more resources and using costlier chips. And usually, if your product succeeds, you will have to migrate, either because your initial chips get too expensive after a while, or because your product falls victim to creeping featurism, or because someone else decided that we are going to use another platform for whatever reason. So, essentially, even though you can write terse programs for your 8-bitters, you should always try to isolate and abstract your hardware and all your platform dependencies as much as you can. Use simple solutions only if they are one-offs, or if you don’t mind starting anew.
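A minimal sketch of that kind of isolation, assuming C++ and a made-up `OutputPin` interface (not any vendor’s API): the application logic depends only on the interface, so a port means writing one new adapter, and a host-side fake lets the logic be tested without hardware.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical hardware-abstraction interface: the application only sees this.
struct OutputPin {
    virtual void write(bool level) = 0;
    virtual ~OutputPin() = default;
};

// Application logic written against the interface, not a specific chip.
// Toggles the pin once per call, like a heartbeat LED.
class Heartbeat {
public:
    explicit Heartbeat(OutputPin& pin) : pin_(pin) {}
    void tick() {
        state_ = !state_;
        pin_.write(state_);
    }
private:
    OutputPin& pin_;
    bool state_ = false;
};

// Host-side fake that records writes. A real port would instead provide an
// adapter that sets or clears a bit in the target chip's GPIO output register.
struct FakePin : OutputPin {
    bool level = false;
    int writes = 0;
    void write(bool l) override { level = l; ++writes; }
};
```

The virtual call costs a few cycles and a little flash per access; that overhead, and the hardware features an interface like this cannot express, is exactly the trade-off being argued over here.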

Still, I must admit that using something tiny for something clever is so much fun! But if you are not doing it for fun, then it’s a cruel and unusual punishment …

You don’t need to touch assembly at all when programming 8-bit micros. Modern microcontrollers are made more C-friendly, and C compilers are smart enough to make efficient use of limited hardware. Use assembly only when you need to write a time-critical function. In recent months I used only four lines of assembly while programming in C, because the PIC I used has a locking mechanism that protects program memory from being overwritten in case you make a mistake in your code. And I only had to copy those lines from the datasheet anyway.

Those 8-bit micros come in so many flavors that you can find a part for just about anything. This morning I wrote code for a monophonic synth that emulates the Trautonium in its operation, with a chiptune-ish sound, on a PIC10F, using only 168 words of program memory, in the free version of XC8. It’s possible with proper selection of parts and careful reading of the datasheet. I will add more features to it just to see what is possible with limited hardware…

No. Isolating and abstracting hardware is setting yourself up to fail. It’s designing to the lowest common denominator; it’s throwing away 90% of what the hardware has to offer just because it’s specific. It’s what the Arduino does, and the main reason why it lacks any meaningful performance: sure, it does work with timers and such, but did you want a bit flipped in hardware automatically when a compare match occurs…? Oooops, sorry, no can do. It’s not a universal enough feature to show up in the Arduino API. So you’ll end up doing it in software by polling or in an uncertainly delayed interrupt handler, or you’ll get a much faster chip just to hide the inadequacy of the solution. It’s the Windows way, where endless bloat, perpetually growing hardware requirements just to achieve the same thing (badly), arbitrary processes freezing the entire UI for arbitrary amounts of time, and any number of crashes and blue screens (Yes! In 2016!) are perfectly acceptable and the normal way of doing things. I for one prefer the Space Shuttle way of programming, where you NEVER, EVER crash, precision is never less than absolute, and any jitter is within narrowly specified limits. Contrast that with the Arduino way, which can’t even tell you exactly how much free RAM (if any) you have left: the _official_ advice is “don’t go over 80% and pray”. Say WHAT?!? See, that’s why we can’t have nice things…

To weigh in a little.

Without wanting to continue with the AVR/PIC flame wars too much, I wouldn’t advocate 8-bit PIC for the simple reason that the architecture teaches you what a processor shouldn’t be. I’m sorry, but PIC is poor beyond words from an architectural viewpoint and they don’t support proper versions of ‘C’ (no proper stack frames, non-contiguous RAM).

AVRs have a mostly decent instruction set, but there are a lot of mnemonics to learn, though this isn’t an issue if you’re programming in C. AVRs have peripheral sets that are in general simpler than the MSP430’s, but better designed than 8-bit PIC’s. For example, 8-bit PICs have timers that automatically reset on comparator match, when they should offer a free-running timer mode with a comparator that merely triggers an interrupt (as AVR and MSP430 do).
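To make the free-running-timer point concrete, here is a host-side simulation of the usual technique (a hypothetical model, not any chip’s registers): the counter is never reset, and each compare channel re-arms itself by adding its period to its own compare value, with 16-bit wraparound taking care of the rollover. Because nothing resets the shared counter, two channels with different periods can run off one timer, which an auto-reset timer cannot do.

```cpp
#include <cassert>
#include <cstdint>

// Simulated compare channel on a free-running 16-bit timer (hypothetical
// model). The counter is never reset; each channel advances its own compare.
struct CompareChannel {
    uint16_t compare;  // next match value
    uint16_t period;   // ticks between events
    int fired;         // number of matches seen so far

    CompareChannel(uint16_t first, uint16_t p) : compare(first), period(p), fired(0) {}

    // Called on every timer tick with the current counter value; on real
    // hardware this check is done by the comparator and raises an interrupt.
    void on_tick(uint16_t counter) {
        if (counter == compare) {
            ++fired;
            compare = static_cast<uint16_t>(compare + period);  // wraps mod 65536
        }
    }
};

// Run the shared counter for `ticks` ticks from `start`, feeding both channels.
void run(CompareChannel& a, CompareChannel& b, uint16_t start, uint32_t ticks) {
    uint16_t counter = start;
    for (uint32_t t = 0; t < ticks; ++t) {
        a.on_tick(counter);
        b.on_tick(counter);
        counter = static_cast<uint16_t>(counter + 1);  // free-running, wraps
    }
}
```

Starting the counter at 65500 shows the wraparound: a channel armed at 65530 with a period of 100 next fires at 94, then 194, without any special-case code.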

The MSP430 is definitely easier to learn assembly for than AVRs and is well supported in ‘C’. Here, the 12-ish general-purpose registers are better for learning and the instruction set is simpler. Low-end MSP430 devices are not so deterministic and therefore not quite as good at bit-banging. But for learning I don’t think that’s a great problem.

ARM Cortex-M0 has a nice instruction set; it’s very easy to learn. There is nothing really that prevents a chip designer such as NXP from designing a Cortex-M0 with a super-simple hobby-level peripheral set. It wouldn’t be rocket science. Let’s say we call it the LPC10xx series, in narrow DIP, with 4KB to 32KB of Flash, no DMA, and:

1. Four simple 32-bit timers that have: a free-running counter, a comparator, a capture mode, a PWM mode and a pulse density mode* (TMR+=comparator with OC=Carry output). PDM is amazing, by the way ;-) A single control register for setup and prescaler. (12 I/O regs).

2. At least 4 SPI/USI ports with a 4-byte buffer that can be used for SPI/simple USART/I2C with a bit of help. At least two SPI ports should be able to operate in 4-bit wide SPI mode so that off-chip code can be run from SPI and off-chip RAM can be accessed as RAM. (8 I/O regs).

3. A/D, but not analog comp. (9 or 10 I/O regs).

4. Interrupt sources, 16 pins per reg at 2 bits per pin: 00=no int, 01=rise, 10=falling, 11=any edge. (2 I/O regs).

5. I would also support a pattern-match mode to make it easier to support I/O decoding on the MCU. Here we have a 32-bit mask, a 32-bit match register and also a 32-bit mismatch mask (the mismatch mask is ANDed with the DDR to generate an output set of signals, which output the pin contents for the corresponding mismatching pins. This allows, for example, a default value to be set up in an output latch, or a toggle value to be output from a pin, by picking an Output Compare match pin). What’s the point of all this? Well, if you have an MCU that responds like a memory-mapped parallel hardware device, then the MCU will generally either be able to respond to an input signal or will be background processing. It’s an alternative to the simple Configurable Logic Block implementations on the LPC810 and some recent PICs. (3 I/O regs).

6. Simple bit-banging support: DDR+OUT latch + IN bits. OUT latch functions as pull-up just like AVR. However, for advanced users we’d support bit banding too. (3 or 6 I/O regs).

7. EEPROM and self-flashing support using just 4 regs: Control, Status, Addr, Data. (4 I/O regs).

8. Rudimentary clock domains and watchdog, e.g. some kind of automatic PLL support, as well as RC oscillators of course, and 32kHz ultra-low-power support. (2 I/O regs?).
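The pulse density mode in item 1 is easy to model in software. This sketch assumes an 8-bit accumulator instead of the proposed 32-bit timers (the principle is the same), and `pdm_step`/`pdm_ones` are hypothetical names: adding the compare value every clock and outputting the carry yields exactly `value` high bits in every 256 clocks, spread out as evenly as possible rather than bunched into one pulse as with PWM.

```cpp
#include <cassert>
#include <cstdint>

// One clock of a pulse-density modulator: acc += value, output = carry out.
// Models "TMR += comparator, OC = carry" with an 8-bit accumulator.
bool pdm_step(uint8_t& acc, uint8_t value) {
    uint16_t sum = static_cast<uint16_t>(acc) + value;
    acc = static_cast<uint8_t>(sum);   // keep the low 8 bits
    return sum > 0xFF;                 // the carry becomes the output bit
}

// Count high output bits over `clocks` clocks, starting from acc = 0.
int pdm_ones(uint8_t value, int clocks) {
    uint8_t acc = 0;
    int ones = 0;
    for (int i = 0; i < clocks; ++i) {
        if (pdm_step(acc, value)) ++ones;
    }
    return ones;
}
```

With a value of 128 the output simply alternates 0, 1, 0, 1, so for mid-range values the energy sits at a much higher frequency than an equivalent PWM signal, which is why a simple RC filter smooths PDM so well.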

Anyway, that sums it up. Because we have 32-bit registers, the number of control ports is reduced. Here, I count: 43 to 47 I/O regs.
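Adding up the per-item counts from the list (items 1 to 8 in order, taking the low and high figures where a range is given, and counting item 8’s tentative "2?" as 2) reproduces that range:

```latex
\begin{aligned}
\text{min} &= 12 + 8 + 9 + 2 + 3 + 3 + 4 + 2 = 43,\\
\text{max} &= 12 + 8 + 10 + 2 + 3 + 6 + 4 + 2 = 47.
\end{aligned}
```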

-cheers from Julian Skidmore (MPhil Computer Architecture, Manchester University England, FIGnition designer).

*https://en.wikipedia.org/wiki/Pulse-density_modulation

They definitely could, but the fact is that things go hand in hand: those who want high processing power and memory would probably make use of more complex and feature rich peripherals.

Another reason is that all these complexities come at very little extra cost. ARMs are made on smaller, newer process technologies. All the complex drive and configuration circuitry they have for an I/O pin actually resides under the pad where the wire bonds. Whether you make it simple or complex, it’s going to take that space anyway.

Look at this die from STM32: most of the stuff that looks uniform is memories, the core is in the center and the peripherals are the tiny weird thing on bottom left. Take them out and most of the chip is still there in terms of cost, but not functionality.

https://en.wikipedia.org/wiki/STM32#/media/File:STM32F103VGT6-HD.jpg

So, not many reasons to make really simple arm chips.

Something’s wrong with the image link… Here’s the direct one: https://upload.wikimedia.org/wikipedia/commons/thumb/8/89/STM32F103VGT6-HD.jpg/1024px-STM32F103VGT6-HD.jpg

Well, the point of the article is that the simplicity of the peripherals still makes 8-bit devices appealing for learning. But really, that’s because of the peripherals themselves rather than the CPU architecture.

Simple peripheral sets on ARM are possible, but for a manufacturer, the question is whether there’s a market for hobby-oriented ARM MCUs with hobby peripherals. The LPC1114FN28 and LPC810FN8 show that NXP thinks there’s some potential in the DIP format, though why they gave the LPC1114 a wide DIP I’ll never know (especially as it’s possible to shave off the ends of the package and turn it into a narrow-DIP version!).

The hobby market really is an investment market: you’re trying to appeal to newbies and give them a low barrier to entry so that (a) you train up a future engineering base and (b) you get them familiar with your chips.

ARM Cortex M0s are an example of reducing the barrier to entry, because they do have simpler peripherals than most ARMs and because e.g. the interrupt controller is designed to be ‘C’ friendly. Also most ARM MCUs have a serial-based bootloader.

In conclusion I’d say that we’ve been expecting 32-bit MCUs to eliminate the 8-bit market for, well, decades now, but it hasn’t and 8-bit MCUs still sell about as well. This isn’t due simply to price, because Cortex M0s aren’t that much bigger than 8-bit MCUs, so it must be due to barrier to entry issues. Therefore although there aren’t a lot of reasons to build a simpler peripheral set for them, the investment in a new generation of engineers and lowering the barrier to entry would help and that might be worthwhile.

And don’t forget that in most cases you don’t really need all that power for a given task. [Dave Jones] @ EEVBlog has an episode about an electric toothbrush that uses a 4-bit micro with less than 1k words of program memory and a few half-bytes of RAM, IIRC. It was designed that way because you don’t need 8 bits to switch a motor or count time, and that makes the device cheaper to manufacture. Simpler micros are easier to program than ARMs, and that saves time in development. That’s why there are so many 8-bitters on the market, and new ones are developed all the time, with fancier peripherals, lower power consumption and faster clocks. 8-bits are here to stay, together with 4-bits, for at least another 10-30 years.

It’s all about total cost in the end. Something you know will reduce development time and the risk of mistakes… and this matters at the scale of how many units of one thing you are going to make.

At the hobby level we tend to forget the development time, which is a cost well accounted for when something becomes commercial.

“EEVBlog has an episode about electric toothbrush that uses 4-bit micro with less than 1kwords of program memory and few half-bytes of RAM,”

Cool. I’ve never really tried to program a proper 4-bit MCU, though I’ve read the TMS1000 series datasheets for fun ;-) For small programs where limited performance is needed, they can be the ideal solution.

The cortex M0 was mostly designed for low power, and one way to achieve this is to cut down on things, including the complexity of the core.

I don’t think 8 bit micros will go, we still need a lot of simple things to be done with micros. If both the 8 and 32 bit are made using the same tech node**, there is no reason for the simpler 8 bit not to be cheaper, and in a lot of cases this is all that matters.

**this is one of the reasons 32 bits appeared very great at some point: they were being compared with 8 bits done in older nodes that had similar price, but much lower features. Once the 8 bit ones were upgraded this advantage started to fade away.

XMega isn’t *priced* cheaper than the equivalent ATmega part. ARM parts also have much more competition between vendors to drive down prices, as there is little incentive to stick with a more expensive vendor if parts have similar specs.

An old 8-bit design on a new process node is not going to retain the same electrical properties; e.g. 5V operation or 5V tolerance would take a lot of chip area. There is also the increase in leakage (which you have pointed out) going down the process nodes.

@fpgacomputer, agreed, some things like 5V operation are lost when scaling down 8 bit micros.

I wasn’t saying the new 8 bits are cheaper than the old 8 bits** (XMega vs. mega is not a fair comparison, since the XMega has a lot more things; it is more like a low-end ARM) … but I was saying that, using the same technology, an 8-bit micro should be cheaper than a 32-bit one, everything else being constant.

And power consumption: no one has talked about that. Small micros like AVR/PIC (8-bit) and MSP430 (16-bit) are pretty good at burning very little on standby.

It is not that ARMs cannot; it is just that most low-cost ones are going to burn an order of magnitude more, and you are going to spend a lot more money to make that coin-cell-powered thermometer.

Actually, you don’t need that low power to have decent battery life.

A CR2032 is rated for 1200+ hours at 0.19mA drain. That’s 50 days at 190uA. If you drop the current by about a factor of 6, you can get to the magical 1-year mark, i.e. an average current of about 30uA.

The el cheapo STM32F030F4 draws about 5uA in standby. Let’s say you use an LCD with another 5uA of current drain.

The run current for the F0 part is listed as 5mA using 8MHz internal RC as clock. (I have measured it closer to the 2.4mA figure listed for the F050. You can get much lower current by running a divider.)

It is a matter of running at a very low duty cycle and spending lots of time in standby. For a duty cycle of 0.005, that works out to 25uA. So you are allowed to wake up and run for 5ms every second, which is way more than enough CPU cycles for such a simple device.
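Putting these figures into a quick calculator (a sketch; the function names are hypothetical and the inputs are this comment’s own numbers: about 228mAh from 1200h at 0.19mA, 5mA active, 5uA standby, 5uA for the LCD, duty cycle 0.005) shows that the ~25uA run-time average plus the standby and display currents gives roughly 35uA, or about 270 days. The always-on terms matter as much as the duty cycle, and per the reply further down, the datasheet’s maximum sleep current deserves a run through the same numbers.

```cpp
#include <cassert>
#include <cmath>

// Duty-cycled average current, in microamps.
// active_ua is drawn for a fraction `duty` of the time, sleep_ua the rest;
// always_on_ua (e.g. a display) is drawn continuously.
double average_current_ua(double active_ua, double sleep_ua,
                          double always_on_ua, double duty) {
    assert(duty >= 0.0 && duty <= 1.0);
    return duty * active_ua + (1.0 - duty) * sleep_ua + always_on_ua;
}

// Battery life in hours for a capacity in mAh and an average current in uA.
double battery_life_hours(double capacity_mah, double avg_ua) {
    return capacity_mah * 1000.0 / avg_ua;
}
```

For example, `average_current_ua(5000.0, 5.0, 5.0, 0.005)` gives 34.975uA, and `battery_life_hours(228.0, 34.975)` is roughly 6500 hours. This ignores battery self-discharge and the capacity loss at low temperature, both of which shorten real-world life.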

Now running 10 years on a smaller battery like my Casio watch on the other hand is a lot tougher without low power parts.

PSsst.

http://www.ti.com/product/tpl5010

You’re not going to get lower current than this for a wake-up, take-a-reading, report-it-and-go-back-to-sleep project.

Idle current becomes zilch.

Pssst, it costs as much as a PIC12F1501, which has a watchdog timer that draws as much as 260nA according to the datasheet, but on the other hand you don’t need any additional components in your design. And with 30uA/MHz @ 1.8V it’s hard to beat. Besides, the battery will probably have a higher self-discharge current over time than that watchdog anyway…

When designing a product it is more important to have lower costs than lower power consumption.

“When designing a product it is more important to have lower costs than lower power consumption.”

I wonder what the Smart Watch makers think about that statement.

Maybe you missed the whole point, namely that you don’t have to have ultra-low-power parts to get usable battery life.

One year or so is a good enough number, and you can get there with the cheapest parts, which the OP claims isn’t possible.

My numbers also include an LCD, because without one you miss the whole point of a thermometer. Nanowatts are meaningless if the display doesn’t stay on.

Getting that to run at 5uA is also not going to be cheap or free of work. The Sharp memory LCD built for low power (which costs a lot more than the uC, BTW) is specced at 15uA without updates and 50uA with 1 update per second. In general, temperature doesn’t change that much between samples, so you can get away with being smart in the LCD driver code and doing the minimal work.
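One way to do that minimal work, sketched with hypothetical names and an assumed 0.1°C display resolution: keep readings in tenths of a degree and redraw only when the reading moves far enough from what is already shown, so most wake-ups end without touching the LCD and noise around a digit boundary does not cause repeated updates.

```cpp
#include <cassert>
#include <cstdlib>

// Decides whether a low-power display needs a refresh (hypothetical helper,
// not a driver API). Temperatures are in tenths of a degree (215 = 21.5 C)
// to avoid floats. A redraw happens only when the reading moves at least
// `hysteresis` tenths away from the value currently shown.
class DisplayUpdater {
public:
    explicit DisplayUpdater(int hysteresis) : hysteresis_(hysteresis) {}

    // Returns true if the caller should redraw; records the new shown value.
    bool should_update(int reading) {
        if (!has_value_ || std::abs(reading - shown_) >= hysteresis_) {
            shown_ = reading;
            has_value_ = true;
            ++updates_;
            return true;
        }
        return false;
    }
    int shown() const { return shown_; }
    int updates() const { return updates_; }

private:
    int hysteresis_;
    int shown_ = 0;
    bool has_value_ = false;
    int updates_ = 0;
};
```

With a hysteresis of 2, readings of 215, 216, 214 and 218 cause only two redraws: the first reading and the jump to 218.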

So all that low current is only achievable if all the components in the system are indeed designed for low current. Someone without the experience could mess up the firmware design and drive the consumption up easily. Things have to be balanced too.

For such a device an e-ink display would be better. You only need to change it when the temperature changes, and you can get away with checking the temperature every 5-30 seconds.

And there is even better technology that draws so little power, it will be powered by the heat it measures. It works by exploiting the relationship between temperature, pressure and volume of various materials. It’s reliable, works over wide range of temperatures and it’s precise. And it’s really, really cheap…

I’m actually replying to Moryc.

Thermoelectricity is very inefficient, and bad when the temperature delta is low.

If there is a display, I suggest a solar cell: if there is a display, there is probably light as well.

(Backlights obviously exist, but the power is then in the mA range.)

That’s an awesome device.

Depends what you care about. I like the “stop” mode, where you still keep your RAM, which, if I add up the numbers, comes to about 10uA typical, with a max of about 10X that. Remember to look at the max too, or you will wonder why the battery unexpectedly dies after a month instead of a year.

Of course, we are looking at one chip, but the same holds for many more. It’s the technology behind it as well: it takes more area and more complicated circuitry to make them low-power in sleep.

Don’t forget the elephant in the room – embedded Linux platforms like R.Pi and BB. Adding Linux makes IoT and other connectivity downright trivial, and the costs have downright plummeted of late.

It’s not always the most appropriate choice, but then, neither is an ATTiny.

Embedded Linux is definitely A Thing, but IMHO it is currently way too overused. I don’t want a full blown OS (with all the security holes and upgrade requirements that it brings) to notify my phone that the toast is done.

Sure, they have their place, but the overlap of what is suitable for a Linux box vs. a microcontroller should be very small indeed.

Stop thinking so small.

Put a zigbee radio on a BBB and call it a bridge or concentrator. Or a WIFI USB dongle, or a 3G dongle…

The elephant in the room is the god awful development pace for the BBB regarding USB Babble errors, the Device Tree Overlay changes, etc. Periodically booting halts when the LAN IC can’t be found. If your board can’t boot due to even a test point and PCB trace running to an IO pin then why the hell isn’t each sensitive I/O pin behind a buffer IC on that damn board?

It doesn’t get as much love as AVRs, but I really like the Parallax Propeller for having an easy high level language that seems purpose built to make you give up and learn the assembly already. There are plentiful IOs, timers that are surprisingly easy to use, and you get to learn about the joys of concurrency with no safety net.

Came here to say this. The beautiful thing about the Propeller is you don’t have to learn or memorize a bunch of special I/O combinations because all the I/O is done in software, which gives you great control and a chance to learn about those interfaces if you want. Need eight serial ports or two VGA outputs from one chip? No problem. Yet it’s a very basic environment, easy to learn well enough to get stuff done.

I was so excited the first time I was able to change the blink speed of the default blink program on the Arduino. And I have learned countless things since then, including all the hate people seem to have toward Arduino projects. Nowadays I am embarrassed to share projects in which I have used one. I would love to learn the nitty-gritty details of programming PICs and AVRs without using Arduino, but I always seem to get discouraged and demotivated as soon as I hear about setting fuses. I have yet to find a good write-up that speaks to me in a way that makes me comfortable. I really enjoyed this article, and would take the red pill in a heartbeat if someone could show me the way. Thank you.

In AVR Studio you can set the fuses with a GUI…

Usually you only change them once in a project (for example, to switch from the internal oscillator to an external one).

It’s easy to brick your AVR, though.

Previous programming experience should be considered as well. I learned 6502 assembly 30 years ago, and found it only took a couple of days to pick up AVR assembler. I find it even simpler than 6502, without so many addressing modes, and with instruction timing that is much easier to remember.

I went from Z80 to 68k to ARM. Learning ARM enough to crack game protection with no toolchains took an afternoon. Lovely architecture! 68k is now just a distant nightmare…

68K is a very easy architecture to code assembly for. Not as orthogonal as a VAX’s but nice enough. And easy enough to grok for mere mortals as compared to a MIPS or ARM.

Old ARM32 is easy. It takes an afternoon, like Alphatek says. The new Cortex series are more complicated, especially if you also need the built in system peripherals and/or special instructions.

What about for those people with the standard Computer Science education who all learned basic assembly on MIPS? Is there anything similar with a load-store architecture and a luxurious overabundance of registers?

IIRC, PIC32 is MIPS.

And if you prefer Propellers, you get the fast-easy example code solution and the nitty-gritty tight packed assembly option, all in one chip! :-)

There’s very little justification to continue using 8-bit microcontrollers, even AVRs (my favourite 8-bit micros). Use STM32F0s, or even STM32F4s. The STM32F0s are cheaper and more performant than AVRs; if configured correctly, you can get power dissipation levels very similar to AVRs; they have free software dev tools; and the hardware is cheap. Nucleo boards contain a programmer, debugger, and USB-to-serial adapter as well as a target device, all for a measly $12. They also come in all shapes and sizes: Nucleo-32, Nucleo-64 and Nucleo-144.

So the STM32 peripherals are more complicated, sure I’ll give you that much. Well in that case you can learn about the STM32Cube libraries that make things a little easier. Not easy enough? use mbed libraries. Better than the Arduino libraries and very flexible for a high level HAL API.

Blinking an LED is as simple as:

```cpp
#include "mbed.h"

DigitalOut myled(LED1);

int main() {
    while(1) {
        printf("Hello World\n");
        myled = 1;
        wait(1);
        myled = 0;
        wait(1);
    }
}
```

It really doesn’t get much simpler than that.

mbed can easily be used in the cloud as well as offline. If anyone needs to get it going offline, ask me and I will write a blog entry about it.

“So the STM32 peripherals are more complicated, sure I’ll give you that much. Well in that case you can learn about the STM32Cube libraries that make things a little easier. Not easy enough? use mbed libraries. Better than the Arduino libraries and very flexible for a high level HAL API.”

This is precisely the mainstream approach — the get-stuff-working approach. And it’s right for a lot of people. And for them, I totally agree with you.

If instead you’re the type who wants to know what code does and how it works, this abstracted approach will frustrate. If you’ve ever dug into the STMCube libs, for instance, you’ll know what I mean. You can get to the bottom, but it’s like peeling onions, tears and all.

Agreed, the STMCube libs are not for beginners nor for the faint of heart. In addition to looking up the peripherals in the micro’s reference manual, you’ll also have to look at the STM32Cube manual as well as examples… lots of examples. In the end, however, it will be worth it, because once you get used to it, it makes these complicated peripherals much easier to manage. Also, STM32Cube is intended to be somewhat portable between the STM32F0, F1, F2, F3 and F4 families, so code base migration between these parts is fairly easy.

I actually built two little breakout boards based on the STM32f072 https://pbs.twimg.com/media/CJS6IVmUcAAtD3E.jpg:large and the stm32f042 https://pbs.twimg.com/media/CUeBfZPUwAAhE1e.jpg:large

In addition I developed my own small C library for setting the clock, gpio, external interrupts, raw and buffered serial and adc (SPI and I2C are incoming). I have similar libraries developed for the STM32F411 as well. It was and continues to be a very neat way to learn the innards of the microcontroller and its peripherals. It also got me thinking about the intricacies of API design.
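A buffered serial driver like that usually sits on a ring buffer: the receive interrupt pushes bytes in and the main loop pops them out. A minimal host-side sketch follows (the names are hypothetical and not taken from the library described above). It assumes a power-of-two size so the index wrap is a mask, and leaves one slot empty to tell full from empty; a version actually shared with an ISR would need more care, for example `volatile` or atomic indices.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Fixed-size ring buffer for serial bytes. N must be a power of two so the
// index wrap is a cheap mask. One slot is left empty to tell full from empty,
// so the usable capacity is N - 1 bytes.
template <std::size_t N>
class RingBuffer {
    static_assert(N >= 2 && (N & (N - 1)) == 0, "N must be a power of two");
public:
    // On real hardware this is called from the RX interrupt; false on overflow.
    bool push(uint8_t byte) {
        std::size_t next = (head_ + 1) & (N - 1);
        if (next == tail_) return false;   // full: drop the byte
        buf_[head_] = byte;
        head_ = next;
        return true;
    }
    // Called from the main loop; returns false if nothing is waiting.
    bool pop(uint8_t& byte) {
        if (head_ == tail_) return false;  // empty
        byte = buf_[tail_];
        tail_ = (tail_ + 1) & (N - 1);
        return true;
    }
    std::size_t size() const { return (head_ - tail_) & (N - 1); }

private:
    uint8_t buf_[N] = {};
    std::size_t head_ = 0;  // next slot to write
    std::size_t tail_ = 0;  // next slot to read
};
```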

After a while, I decided to rely mostly on STM32Cube, especially now that I have a relatively solid understanding of most of the peripherals at the register level. The reason is that it helps me manage the complexity of the core and peripherals with little effort, and it gives me 90-95% of the granularity that I had with my own library or pure register-based code (on which my libs were based).

Mbed is great for fast prototyping and those wanting to learn the basics. While being a high-level API, the mbed libs expose enough complexity that it makes it an excellent tool for newcomers to the embedded world. It abstracts which bit in which register does what, but still exposes enough details of say the SPI bus, I2C bus or external interrupts allowing students to initially focus on the bigger picture and then possibly ‘zoom in’ to a lower level of abstraction at a later date.

The biggest problem with mbed is that the binary for a simple UART loopback program can be about 20KB. So I wouldn’t recommend using it with microcontrollers having less than 64KB of flash. With my custom library, the same program (for the same part) uses about 4KB. The same program written with STM32Cube uses about 8-9KB.

I have a copy of your AVR programming book, by the way. It’s a true gem, and I highly recommend it to anyone interested in AVR micros.

The abstracted STM32Cube libs aren’t very efficient either. It sometimes takes ten lines to fill a struct and call a function that validates all the bits, then combines all the values and writes them to a single register, when you could just write that register yourself in one line of code.
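To illustrate the difference, here is a sketch in plain C that runs on a PC. It does not use the real STM32Cube API: `PinConfig` and `pin_init()` are hypothetical stand-ins for the “fill a struct, call an init function” pattern, and the register is an ordinary variable laid out like an STM32 GPIO mode register (two bits per pin, 01 = general-purpose output).

```c
#include <stdint.h>

/* Hypothetical config struct, modeled on the "fill a struct, then call
   an init function" style. Not the actual STM32Cube types. */
typedef struct {
    uint32_t pin;   /* pin number, 0..15 */
    uint32_t mode;  /* 2-bit mode: 0 = input, 1 = output, 2 = alternate, 3 = analog */
} PinConfig;

/* Struct-based style: validate every field, compute the mask,
   then do a read-modify-write on the mode register. */
int pin_init(volatile uint32_t *mode_reg, const PinConfig *cfg)
{
    if (cfg->pin > 15u || cfg->mode > 3u)
        return -1;                          /* reject invalid settings */
    uint32_t shift = cfg->pin * 2u;         /* two mode bits per pin */
    uint32_t value = *mode_reg;
    value &= ~(3u << shift);                /* clear the old mode bits */
    value |= cfg->mode << shift;            /* insert the new mode bits */
    *mode_reg = value;
    return 0;
}

/* Direct-register style: the same effect in one line, no checks.
   Pin 5 as output means bits 11:10 = 01. */
void pin5_output_direct(volatile uint32_t *mode_reg)
{
    *mode_reg = (*mode_reg & ~(3u << 10)) | (1u << 10);
}
```

Both paths leave the same value in the register; the struct-based one just spends extra code on validation and mask arithmetic at run time, which is where the flash and cycles go.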

STM32Cube is not efficient, and the mbed libs for the STM32s are built on top of it, so mbed is even more wasteful of flash. When you have 512KB of flash this is not an issue, but with only 32KB, or worse 16KB, it most definitely is.

CodeVisionAVR has one of my favorite approaches to GUI-based code configuration: you select what you want in the GUI, but in the end all the code it generates simply writes the proper values into the registers. It can’t get more efficient than that.
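As a rough idea of what “simply writing the proper values into the registers” looks like, here is a sketch of the kind of USART setup a code generator might emit for an ATmega328P at 16 MHz and 9600 baud. This is not actual CodeVisionAVR output. The clock, the baud rate and the `FakeUsart` struct (standing in for the real UBRR0H/UBRR0L/UCSR0B/UCSR0C registers so the code can run off-target) are assumptions. The divisor uses the standard AVR normal-speed formula UBRR = F_CPU / (16 * BAUD) - 1.

```c
#include <stdint.h>

/* Assumed settings; a generator would take these from the GUI. */
#define F_CPU_HZ   16000000UL
#define BAUD       9600UL
/* AVR asynchronous normal-speed mode: UBRR = F_CPU / (16 * BAUD) - 1 */
#define UBRR_VALUE ((F_CPU_HZ / (16UL * BAUD)) - 1UL)

/* Hypothetical stand-ins for the ATmega328P USART0 registers. */
typedef struct {
    volatile uint8_t ubrr_h, ubrr_l, ucsr_b, ucsr_c;
} FakeUsart;

void usart_init_generated(FakeUsart *u)
{
    /* Everything below is a compile-time constant: the "generated"
       code is nothing more than four register writes. */
    u->ubrr_h = (uint8_t)(UBRR_VALUE >> 8);
    u->ubrr_l = (uint8_t)(UBRR_VALUE & 0xFFu);
    u->ucsr_b = (uint8_t)((1u << 4) | (1u << 3));  /* RXEN0 (bit 4) | TXEN0 (bit 3) */
    u->ucsr_c = (uint8_t)((1u << 2) | (1u << 1));  /* UCSZ01 | UCSZ00: 8N1 */
}
```

Because the divisor is a constant expression, the compiler folds it to 103 and no division ever runs on the chip.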

“You can get to the bottom, but it’s like peeling onions, tears and all.” very well said!

This is primarily due to two things:

– The STM32Cube libs are still fairly new (2-3 years old) and perhaps not as stable as they should be (though they’re getting there, in my opinion)

– Because they’re new, there are very few tutorials out there.

BTW, in terms of intuitive API design, TI’s TivaWare libraries for the Tiva C series are probably the most intuitive and easiest to use. Freescale’s Kinetis SDK is also pretty good. I find that the STM32Cube libs trail behind these two. But I like the STM32’s hardware, low cost and availability so much that I’m willing to overlook the STM32Cube libs’ imperfections.

I still think that, with proper instruction, newcomers to the embedded world are better off starting with a higher-abstraction API (say, mbed), then moving to a lower-abstraction API (say, STM32Cube), and then to register-based code as they get more comfortable with the hardware. In my mind, this is a better approach than first learning register-based programming on an 8-bitter and then moving on to 32-bit, especially in this day and age, when rapid development is all the rage and more microcontroller families are released every year.

Of course, I could be completely wrong about this.

Where can I find an STM32F0 for less than 30c? I bought some F030F4P6s to play with, but the cheapest I can find them on AliExpress is $4.40 for 10. I can get 10 ATtiny13As for $3.

pssst.

STM8S003

Now compare it with a PIC10F and laugh…

STM8 is 8-bit. I was going to get one, but the price difference from the STM32F030 is minimal. You can get a hardware debugger for either with a $3 ST-Link clone, and you get more I/O and memory than with the ATtiny13.

Five Volt.

PIC24: 16-bit, DIP package, around a dollar from Digi-Key. It has a hardware multiplier, register-based instructions, simple assembly and no memory paging. Just curious why nobody recommends these.

A few years ago I read the opinion of a fellow PIC programmer that the 16-bit PIC family is poorly designed, and that some PICs have errata as big as the datasheet. I just checked a random PIC24F, and its errata was only 10 pages, less than similar documents for some 8-bit PICs I’ve used. So I’ll try one out…

+1

I found the PIC24F series very straightforward to use. I was able to fit a FAT filesystem for an SD card and a basic TCP stack on a chip costing about $3. The libraries are provided by Microchip and are easy to integrate.

http://www.puzzlemation.com

I’m a teacher at a local polytechnic, and I teach embedded systems.

Within the curriculum we start with the 8051 :D using SDCC. Keil boards, a treat to use.

Then we jump to Arduino (8-bit AVR: mostly Nano clones, local versions and bare ATmega328s) and mbed (the original LPC1768 and some Freescale Kinetis boards).

ARM Cortex-M4 and M7 boards (LPC-based, from Keil), PIC boards (from MikroElektronika, open to almost any classic PIC) and MSP430 LaunchPads are available for students who want to learn more. We even hack the Chinese STM32 boards to work with mbed for their projects.

To be honest, it’s really simple to explain all the major concepts on an 8051 with SDCC. Hands down, in projects it beats even digitalWrite(): working with timers and interrupts, explaining why 32-bit multiplication is fu*ked up in assembler, and so on. The only problem is the lack of nicely written libraries (for everything) like Arduino has.
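To see why 32-bit multiplication is painful on an 8051: its only multiply instruction, MUL AB, multiplies two 8-bit values into a 16-bit result. A 32-bit product (truncated to 32 bits, as C does) therefore has to be assembled from byte-by-byte partial products. The C sketch below mimics that process on any machine using only 8x8-bit multiplies; the function name is hypothetical, and a real compiler’s runtime routine will differ in detail.

```c
#include <stdint.h>

/* 32x32 -> 32-bit multiply built only from 8x8 -> 16-bit products,
   the way an 8-bit CPU with a single MUL AB instruction has to do it.
   Each byte of a is multiplied by each byte of b; the partial product
   is shifted to its byte position and added to the running result. */
uint32_t mul32_via_8bit(uint32_t a, uint32_t b)
{
    uint32_t result = 0;
    for (int i = 0; i < 4; i++) {
        uint8_t ai = (uint8_t)(a >> (8 * i));
        /* Partial products landing at byte 4 or above fall off the top
           of a 32-bit result, so they are skipped entirely. */
        for (int j = 0; i + j < 4; j++) {
            uint8_t bj = (uint8_t)(b >> (8 * j));
            uint16_t partial = (uint16_t)((uint16_t)ai * bj);  /* one "MUL AB" */
            result += (uint32_t)partial << (8 * (i + j));      /* wraps mod 2^32 */
        }
    }
    return result;
}
```

That is ten 8x8 multiplies, plus all the shifting, adding and carry propagation, for what is a single instruction on a 32-bit core.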

That sounds great. How many semesters was that?

Basic Embedded Systems (with the 8051) is in the 5th semester (of 6) of the bachelor’s degree, and Designing Embedded Systems (Arduino + mbed + everything else in their obligatory project) is in the last semester. The nice thing is that other teachers use those boards in their classes in the last semester, even if students are not taking my class on designing embedded systems: students learn PID on Arduino, or manipulate sound using mbed in DSP classes. If students understand how to get the timings, inputs and outputs right, then the platform is irrelevant.
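Since PID comes up as an example of platform-independent material, here is a minimal textbook discrete PID controller in C as an illustration; it is not taken from the course. The gains, the sample period and the `Pid` type are placeholders, and a real controller would usually add output limits and integral anti-windup.

```c
/* Minimal discrete PID controller (textbook form, no output clamping
   or anti-windup). All parameters are placeholders. */
typedef struct {
    float kp, ki, kd;    /* proportional, integral, derivative gains */
    float dt;            /* sample period in seconds */
    float integral;      /* running sum of error * dt */
    float prev_error;    /* error from the previous update */
} Pid;

void pid_init(Pid *p, float kp, float ki, float kd, float dt)
{
    p->kp = kp;
    p->ki = ki;
    p->kd = kd;
    p->dt = dt;
    p->integral = 0.0f;
    p->prev_error = 0.0f;
}

/* Call once per sample period with the latest measurement;
   returns the new control output. */
float pid_update(Pid *p, float setpoint, float measurement)
{
    float error = setpoint - measurement;
    p->integral += error * p->dt;                      /* I term accumulator */
    float derivative = (error - p->prev_error) / p->dt; /* D term slope */
    p->prev_error = error;
    return p->kp * error + p->ki * p->integral + p->kd * derivative;
}
```

Only pid_update() has to be called at a fixed rate with a fresh measurement; whether that rate comes from an Arduino timer, an mbed Ticker or an 8051 timer interrupt doesn’t change the controller.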

The 8051 is so much easier than the others to teach, and sometimes easier to program. We bought 8051 licences for Labcenter Proteus, and now we’re considering moving to Multisim (we already have the NI LabVIEW licence), as it supports the 8051 (and the PIC16F84), so the risk of unnecessary white smoke is minimal.

That’s really cool that you start them out on 8051 first so that they have a better grounding to understand the fancier/simpler-to-use libraries second. Folks who start out with Arduino, it seems to me, are getting that backwards.

I am architecturally agnostic, and make decisions based on manufacturing metrics.

We like 8/16-bit PICs because they are very reliable, inexpensive ($0.24), and have practical real-time features you will need eventually (counters, comparators, hardware UARTs, etc.). However, when you start getting into the $5+ 16-bit PIC24 series, an ATmega2560 (please ignore the Arduino fan-boy rhetoric) is better value for the designer in many ways. Note that if your C code is written to be cross-platform, the choice is almost arbitrary. Also note that most people who use the ATmega2560 are usually trying to poorly implement what ARM6/7 SoCs do with ease. The proof is trivial: go outside and count how many MP3 players you see compared to smartphone users.

I’ve done projects with the MSP series (this 1990s-style line will not endure, in my opinion), Cypress’s crap (the only brand I actively discourage, for safety reasons), Motorola’s solid stuff, TI’s many orphans (bad for long-term business), and countless MCS-51-based clones (as with Chinese CPU clones, a consistent source can be erratic given the number of variations). Intel and 68k holdouts are usually a joke about cults willing to give $80 to their master’s name, but at least the 68k you chose will still be around if your product takes years to get to market.

When I recommend an ARM7 SoC, it is because it is, and will remain, more appropriate for the intended application in the long term (they are getting cheaper and more powerful, and most sane manufacturers make a compatible chip). The mountains of errata that people like me have had to work through over the years, due to vendor arrogance, have become trivial for users, and one can now get stuff to work reliably with much less money, effort and time. These SoCs are usually meant to run an OS, though, and simply can’t do some types of tasks an 8-bit PIC does with ease.

Change for the sake of change is like building your house on shifting sands. You will fail, hopefully learn from your mistakes, and arrive at evidence-based reasoning like many before you. I can measure your counter-arguments by the pound, as e-waste and dead spools of buggy vanity chips rotting in stock.

A few people are too inexperienced to know why senior people won’t authorize using new toy silicon (or some silly software), but maybe they should stay in marketing rather than engineering.

https://www.youtube.com/watch?v=7G_zSos8w_I

Me too,

That’s why I use ST parts. For the money, it doesn’t get better. Low-end STM32s priced the same as a PIC16F?

Regarding the cypress parts, what do you mean by safety issues?

The STM32 would have been awesome in the ’90s, but Broadcom’s $5 700MHz/512MB ARMv6 SoC is functionally superior for this class of computing. The STM line just showed up about 15 years too late, but it is still good for educational purposes.

Cypress chips are a fire hazard, as the chip and SoC design can fail closed even from offline static discharge. Cypress itself is like corporate cancer, and is directly responsible for the fall of Fujitsu (cored Spansion too). I assume the only reason they are still around is their relationship with the feds making “American chips”. I can’t allow their crap on a production product, as they don’t seem to care that they have already indirectly strangled a few companies in “anomalous operation” lawsuits.

If you need FIR (finite impulse response) DSP filters, do yourself a favour and try a kit-based Xilinx FPGA with an Analog Devices front end. There are even MATLAB filter-design code-gen suites around for low-skill software people, but I’m not smart enough to get them to work faster than manually typing VHDL.
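For reference, the operation such an FPGA design implements is the direct-form FIR sum y[n] = h[0]x[n] + h[1]x[n-1] + … + h[N-1]x[n-N+1]. A minimal C sketch of it follows; the `Fir` type and the integer coefficients are illustrative assumptions, chosen to keep the arithmetic exact. A CPU performs the N multiply-accumulates one after another for every sample, whereas an FPGA can give each tap its own multiplier and do them all in parallel, which is why it pays off at high sample rates.

```c
#include <stddef.h>
#include <stdint.h>

/* Direct-form FIR filter: y[n] = sum over k of h[k] * x[n-k].
   Requires n_taps >= 1. */
typedef struct {
    const int32_t *taps;   /* coefficients h[0..n_taps-1] */
    int32_t *history;      /* last n_taps inputs, newest at index 0 */
    size_t n_taps;
} Fir;

/* Feed one input sample, get one output sample. */
int32_t fir_step(Fir *f, int32_t x)
{
    /* Shift the delay line by one and insert the new sample. */
    for (size_t k = f->n_taps - 1; k > 0; k--)
        f->history[k] = f->history[k - 1];
    f->history[0] = x;

    /* N multiply-accumulates; the wide accumulator avoids overflow. */
    int64_t acc = 0;
    for (size_t k = 0; k < f->n_taps; k++)
        acc += (int64_t)f->taps[k] * f->history[k];
    return (int32_t)acc;
}
```

Feeding in a single 1 followed by zeros (an impulse) returns the coefficients one by one, which is a handy sanity check for any FIR implementation.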

This is not the first time I’ve heard of Cypress ESD failures in MCUs. Cypress is actually the only case where I’ve ever seen this type of failure in an MCU.

Are you referring to the Raspberry Pi vs. the STM32?

The STM32F0 is under $0.50 in high volume. The Broadcom part is $5 on a dev board that must be soldered by a human being (no castellated connectors?). Is the Broadcom part even available loose?

I guess it depends on the applications you’re doing and how much of a budget you have to spend.

The STM32 would definitely have dominated on price-for-value before the rise of SoCs, but it just showed up too late in the game. TI and Microchip also still believe that a “new” mid-range MCU is relevant to general consumer products, and yet the Chinese A10 has probably shipped more units than all of them combined.

You are probably too young to remember that Fujitsu used to make hard drives until something started going horribly wrong… Cypress wrong… because it was their popular silicon… still in use… ;-)

http://www.theregister.co.uk/2003/10/22/fujitsu_hdd_fiasco_to_end/

Re Cypress and Fujitsu drives: Do you mean Cirrus Logic?

http://www.theregister.co.uk/2002/11/05/fujitsu_admits_4_9_million/

Not really. I was alluding to the internal crossbar-switching I/O bus layout of the analogue sections of Cypress hybrid SoCs. Most manufacturers of MCUs and FPGAs switch pin-mapping contexts with ease, but no other manufacturer I know of still offers a Halt and Catch Fire-enabled architecture.

I’m new to all this, and I like to jump from low level to high level. What I mean is, when I hear about, say, SPI, I start by interfacing something simple with Arduino to get a high-level feel for it. I then move over to my bare AVR and ARM microcontrollers and page through the more technical documents.

With Arduino, and many of the other libraries, that’s a great plan. Because the “language” is really just C/C++, you can freely mix and match most of the time. So get a working demo in “pure Arduino” and then tweak at the edges. If it still works: success! :)

Some of the libs are more tightly coupled, though, and this invites subtle bugs and much head-scratching, so it’s not perfect, but it’s a great way to go.
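As an example of “zooming in” on SPI, here is what the peripheral does in mode 0 (clock idles low, data sampled on the rising edge, MSB first), written as a bit-banged transfer in C. The `SpiPins` hooks are hypothetical: on an Arduino they could wrap digitalWrite()/digitalRead(), and on a bare AVR or ARM they would be direct port writes. The loopback harness (MISO wired straight to MOSI) is only there so the routine can be tried off-target.

```c
#include <stdint.h>

/* Hypothetical pin-access hooks supplied by the board code. */
typedef struct {
    void (*set_sck)(int level);
    void (*set_mosi)(int level);
    int  (*get_miso)(void);
} SpiPins;

/* SPI mode 0 (CPOL = 0, CPHA = 0), MSB first. Data is set up while SCK
   is low and sampled on the rising edge; returns the byte clocked in. */
uint8_t spi_transfer_bitbang(const SpiPins *p, uint8_t out)
{
    uint8_t in = 0;
    for (int bit = 7; bit >= 0; bit--) {
        p->set_mosi((out >> bit) & 1);   /* present the next bit */
        p->set_sck(1);                   /* rising edge: both sides sample */
        in = (uint8_t)((in << 1) | (p->get_miso() & 1));
        p->set_sck(0);                   /* falling edge: ready for next bit */
    }
    return in;
}

/* Off-target loopback harness: MISO reads back whatever MOSI drives,
   and rising SCK edges are counted. */
static int loop_mosi, loop_sck, loop_edges;
static void loop_set_sck(int level) { if (level && !loop_sck) loop_edges++; loop_sck = level; }
static void loop_set_mosi(int level) { loop_mosi = level; }
static int  loop_get_miso(void) { return loop_mosi; }
static const SpiPins loopback = { loop_set_sck, loop_set_mosi, loop_get_miso };
```

Comparing this routine against the SPI section of the datasheet (clock polarity, clock phase, bit order) is a good way to connect a one-line library call to what actually happens on the wires.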

… Can anyone explain the importance of DMA in a microcontroller to me, maybe with a practical example? I see everyone using ARM bragging about it, but I’m not sure why. I know it’s useful for offloading storage operations on a PC, but I have yet to understand what it could be used for in a microcontroller. Thanks.

Blinks LEDs faster.

One example is where you have a very high-speed analog-to-digital converter and you want to get the samples into memory (RAM) as fast as possible. The CPU may be too slow to do this, and even if it were fast enough, it would spend most of its time on that one task and have little time left for anything else.

With DMA (Direct Memory Access) the DMA controller can load the samples into RAM in the background without the CPU having to do anything more than set up the DMA parameters initially.

The above is a fairly ‘traditional’ use of DMA. In some modern chips, the DMA controller can link a far greater range of resources, NOT just memory.

So, for example, people have used DMA features to generate VGA signals and things like that: things the CPU can’t really do well, if at all.

Oh and one other thing – It can make the LED blink faster like [Benchoff] said lol.
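To make the ADC case concrete, a common pattern is circular DMA with “half-transfer” and “transfer-complete” events: the DMA controller keeps filling one half of a buffer while the CPU processes the other. Since real DMA needs real hardware, the C sketch below is only a software model. `dma_on_sample()` stands in for what the DMA controller does by itself on each conversion, and the names and the 8-sample buffer are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define BUF_LEN 8u   /* hypothetical buffer size; must be even */

/* Software model of a circular DMA channel feeding an ADC buffer. */
typedef struct {
    uint16_t buf[BUF_LEN];
    size_t   pos;          /* next write index, wraps at BUF_LEN */
    int      half_ready;   /* half-transfer event: buf[0..BUF_LEN/2-1] full */
    int      full_ready;   /* transfer-complete event: upper half full */
} CircularDma;

/* What the DMA hardware does on every conversion, with no CPU involved. */
void dma_on_sample(CircularDma *d, uint16_t sample)
{
    d->buf[d->pos++] = sample;
    if (d->pos == BUF_LEN / 2)
        d->half_ready = 1;              /* like a half-transfer interrupt */
    if (d->pos == BUF_LEN) {
        d->full_ready = 1;              /* like a transfer-complete interrupt */
        d->pos = 0;                     /* circular mode: wrap and keep going */
    }
}

/* CPU side: process whichever half has finished while the DMA fills the
   other. Returns 1 and writes the half's sum if a half was ready, else 0. */
int cpu_consume(CircularDma *d, uint32_t *sum_out)
{
    size_t start;
    if (d->half_ready) {
        d->half_ready = 0;
        start = 0;                      /* lower half is complete */
    } else if (d->full_ready) {
        d->full_ready = 0;
        start = BUF_LEN / 2;            /* upper half is complete */
    } else {
        return 0;                       /* nothing ready yet */
    }
    uint32_t sum = 0;
    for (size_t i = start; i < start + BUF_LEN / 2; i++)
        sum += d->buf[i];
    *sum_out = sum;
    return 1;
}
```

On a real part, the CPU’s only involvement is configuring the channel once and reacting to the two events, typically by setting a flag in the interrupt and doing the summing or filtering in the main loop.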

Interesting, thanks! Guess it’s time to start playing with that STM32 Discovery board sitting in the drawer.

If you want to have some fun, get one with an LCD and start working with STemWin.

I’ve used DMA to implement a high speed RS485 bus on a PIC32, using the hardware CRC feature. I’ve also used DMA on a LPC17xx chip to read values from a SPI based optical scanner. And of course, most Ethernet and USB peripherals use some kind of DMA as well.
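The comment doesn’t say which CRC the RS485 protocol used. Assuming the common CRC-16/MODBUS parameters (reflected polynomial 0xA001, initial value 0xFFFF, no final XOR), a bitwise software version in C looks like this; a hardware CRC unit fed by DMA produces the same kind of checksum without the CPU touching each byte.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-16/MODBUS: polynomial 0x8005 processed LSB-first
   (0xA001 reflected), initial value 0xFFFF, no final XOR. */
uint16_t crc16_modbus(const uint8_t *data, size_t len)
{
    uint16_t crc = 0xFFFF;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];                         /* fold in the next byte */
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 1u)
                crc = (uint16_t)((crc >> 1) ^ 0xA001u);
            else
                crc >>= 1;
        }
    }
    return crc;
}
```

The standard check value for the ASCII string "123456789" under these parameters is 0x4B37, which makes a convenient self-test when comparing a software CRC against a hardware one.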

Think of DMA as a peripheral that transfers data without requiring CPU cycles once you have set it up. It has much lower latency (a few clock cycles, versus easily 30-40 for an interrupt), so it is suited to high bandwidth and/or low jitter. The CPU can be running other code, or even be asleep, during the transfer. Once you have used it, you’ll want it.
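A quick back-of-the-envelope calculation shows why that latency matters. All figures here are assumptions for illustration: a 48 MHz core, an ADC running at 1 million samples per second, and 40 cycles of interrupt entry/exit overhead (the upper end of the 30-40 quoted above), ignoring the work done inside the handler. With one interrupt per sample, the overhead alone is 1,000,000 * 40 / 48,000,000, or about 83% of the CPU; if DMA instead raises one interrupt per 512-sample block, it drops to about 0.16%.

```c
/* Fraction of CPU time spent purely on interrupt entry/exit overhead.
   events_per_sec:   interrupts per second
   cycles_per_event: assumed overhead cycles per interrupt
   cpu_hz:           core clock frequency in Hz */
double isr_overhead_fraction(double events_per_sec,
                             double cycles_per_event,
                             double cpu_hz)
{
    return events_per_sec * cycles_per_event / cpu_hz;
}

/* Same overhead when DMA moves the samples and only interrupts the CPU
   once per block of block_len samples. */
double dma_block_overhead_fraction(double sample_rate,
                                   double block_len,
                                   double cycles_per_event,
                                   double cpu_hz)
{
    return isr_overhead_fraction(sample_rate / block_len,
                                 cycles_per_event, cpu_hz);
}
```

The block size is a trade-off: bigger blocks mean fewer interrupts but more RAM and more delay before the CPU sees the data.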