With cameras, robotics, VR-headsets, and wireless broadband becoming commodities, the ultimate, mobile telepresence system – “Surrogates” if you will – is just one footstep away. And this technology may one day solve a very severe problem for many disabled people: Mobility. [chris jones] sees great potential in remote experiences for disabled people who happen to not be able to just walk outside. His Hackaday Prize Entry Project Man-Cam, a clever implementation of “the second self”, is already indistinguishable from real humans.

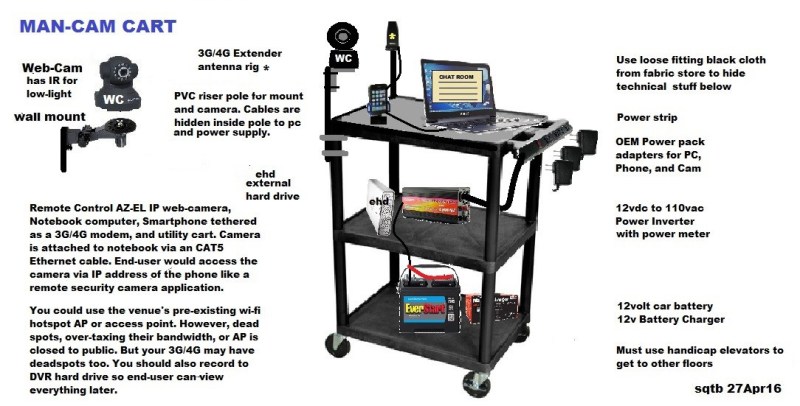

Instead of relying on Boston Dynamics’ wonky hydraulics or buzzing FPV drones, [chris] figured that he could just strap a pan-and-tilt camera to a real person’s chest or – for his prototyping setup shown above – onto a utility cart. This Man-Cam-Unit (MCU) then captures the live experience and sends it back home for the disabled person to enjoy through a VR headset in real time. A text-based chat would allow communication between the borrowed body’s owner and the borrower, while movements of the head are mapped onto the pan and tilt mechanism of the camera.
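To make the head-to-camera mapping concrete, here is a minimal sketch of one way it could work. It is not taken from [chris]'s build: the angle limits, the deadband value, and the names `PanTiltLimits` and `head_to_camera` are all assumptions made for illustration. The idea is simply to clamp the viewer's head yaw and pitch to whatever the camera mount can physically reach, and to ignore tiny movements so the camera does not jitter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PanTiltLimits:
    """Hypothetical mechanical limits of the camera mount, in degrees."""
    pan_min: float = -90.0
    pan_max: float = 90.0
    tilt_min: float = -30.0
    tilt_max: float = 30.0


def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def head_to_camera(yaw, pitch, last=(0.0, 0.0),
                   limits=PanTiltLimits(), deadband=2.0):
    """Map head yaw/pitch (degrees) to a pan/tilt target (degrees).

    `last` is the previously commanded (pan, tilt). Changes smaller than
    `deadband` degrees are ignored so small head tremors do not move the camera.
    """
    pan = clamp(yaw, limits.pan_min, limits.pan_max)
    tilt = clamp(pitch, limits.tilt_min, limits.tilt_max)
    if abs(pan - last[0]) < deadband:
        pan = last[0]
    if abs(tilt - last[1]) < deadband:
        tilt = last[1]
    return pan, tilt


if __name__ == "__main__":
    # Looking far to the right and down: both axes hit the assumed limits.
    print(head_to_camera(120.0, -45.0))
    # A tiny movement around the previous position is ignored on the pan axis.
    print(head_to_camera(10.0, 11.0, last=(9.0, 5.0)))
```

A real rig would still need to turn these angles into whatever commands its particular camera or servo controller expects, which this sketch deliberately leaves out.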

Right now, [chris] is still working on getting everything just right, and even if telepresence robots are already there, it’s charming to see how available technology lets one borrow the abilities of the other.

“A text-based chat” – Why not a speech-based chat?

They could just talk with each other by radio or something…

@Moritz Walter – Thank you Moritz! You captured my project eloquently!

Re: The chest mounted az-el (pan-tilt) camera… I realized that that would require a Frankenstein appearance with wires going off to a backpack which contained a battery, laptop, and other equipment. So I looked up backpack visitor policies at a local art museum here in Hartford CT (USA) and they said NO BACKPACKS! (BTW oldest art museum in USA) Then a cordial HaD guy from IRAN posted “interesting idea!”. At that point I realized OOPS! I must have screwed up somewhere… I evolved the chest mount to a SmartPhone with Skype which does not move (no az-el motor). I didn’t want my surrogate to get arrested by Dept of Homeland Security (LOL).

Then the joystick-style moving of the az-el camera with the disabled person’s eyes was not explained too well. I do have the technology to do that, though it was invented by someone else. It’s software that can be loaded in the background and control a program by detecting the client’s eye movement.

And SebiR, I did think of that, and I mentioned that the “surrogate” (BTW I like that word Moritz!) can wear an earphone or a Bluetooth earpiece to communicate with the disabled client via the data channel provided by 3G/4G or the venue’s wi-fi AP. I wanted to stay away from voice recognition or audio as many disabled people have speech impediments. The surrogate would be asking for clarifications every other command. A text-based chat room eliminates any confusion on verbal surrogate commands.

The TELEPRESENCE robots I am replacing with my idea have audio speakers and a video monitor so the venue’s other visitors can interact. I wanted to stay away from loudspeakers to hold down noise, like in a public library (shhhh!). The monitor is overkill, and a photo on paper can be supplied if desired. However, the microphone can pick up all sound and send questions from the visitors to the client. The utility cart eliminates over-weighing the surrogate and breaking his back :-)

https://www.youtube.com/watch?v=gB3YNLv0iE0

The head or eye movement software is called CAMERA MOUSE 2015. It can control the IP camera pan-tilt but is a little sensitive/tricky. I could also set up a voice synthesizer application in which CAMERA MOUSE controls big browser buttons to speak canned words, like how Dr. Stephen Hawking does.

Oh. It’s about communicating with a computer. I thought it is about giving commands to a human cam-bearer. Sorry ;)

The human cam-wearer option has much to say for itself. It could be a blind person who not only provides remote viewing and visitation to someone bedridden (or house bound), but gets visual translation from the person they are assisting. It’s a win/win arrangement!

The blind person can see which shelf the beans are on or what kind of beans. They can pester their link-partner to be their eyes.

The paraplegic hasn’t been out and about at all. His “pay” for helping the link-partner is fulfilled requests to go places and interact with people as a proxy. VR “hangouts” would only make it that much more appealing. The VR headsets of the people in the hangout could easily be overlain with a rendering of the upper half of their heads. Eye tracking could make it less spooky as that could be rendered too. The image of the proxy of course would be rendered with an image of the link-partner.

Being a link-partner could be a part time or full time job. I like the idea of people replacing robots for certain jobs.

Down the road I wouldn’t be too surprised to see some kinds of mutually beneficial link-partners opt for a permanent radio-neural connection. BORG here we come!

zenzeddmore – Good idea. What do you mean by “blind remote viewing”? The blind person could not “see” anything. The surrogate would not need my MCU to be a blind-descriptive service. All he would need would be a cell phone in his pocket and a Bluetooth ear-piece. He then could verbally describe everything he saw to the blind person. Yeah that’s a good idea too! Thanks for the brainstorming. It was helpful.

If you want to know about some really crazy new ideas with “radio neural connection”, Google Elon Musk (Tesla CEO) and neural LACING. They inject a microscopic neural lace into your brain and you have an external connection to the outside world directly from your brain! They’ve already done it to mice. The inventor wants to try it on himself. I don’t think they will let him do it yet. Too dangerous. For me, I’ll stick with my external EEG device for that (MindFlex device – 2.4 GHz EEG brain monitor toy).

I can already control my PC with my head movement with CAMERA MOUSE 2015. You just aim the webcam at either your nose, eyes, finger, etc. It will track every movement of the target and translate it into mouse input on your PC/laptop. However, you have to be able to move something. It cannot track your eyes yet. Well, maybe if you put the webcam real close it might work, but it would be problematic. It’s free software. You should download it. It’s fun to play with even if you are not disabled.

No SebiR you had it correct the first time! I’m just saying that sometimes disabled people might not be able to be understood due to something wrong with their speech patterns. You can’t expect a human surrogate who’s volunteering their time to have to guess at what someone is trying to tell them. It would be frustrating for the surrogate AND the client. My rig has a 2-way voice system but it would be noisy in a museum or library. A text chat session would eliminate any guesswork unless they were dyslexic. The nurse could do the typing or talking I guess. Good suggestion though!

A wheelie-boy!

(Gibson; The Peripheral)

Awesome! :-)

https://farm6.staticflickr.com/5610/15815355851_6b5a3ffbf5_c.jpg

It’s been done before.

http://vignette1.wikia.nocookie.net/arresteddevelopment/images/c/cc/3x05_Mr._F_(30).png/revision/latest?cb=20130321045439

Michael: What’s a Surrogate doing here?

Larry: We’re meeting with the lawyers.

George Sr.: So I hired this guy to be my eyes and ears.

Michael: You know, dad, this guy costs us a fortune.

Larry: He’s worth every penny.

George Sr.: Hey, I didn’t say that.

Hmm. Does this work:

Nope, this?

[img src=”http://vignette1.wikia.nocookie.net/arresteddevelopment/images/c/cc/3x05_Mr._F_(30).png/revision/latest?cb=20130321045439″]

Cool! Larry Sanders Show with the late Garry Shandling…

http://oi66.tinypic.com/2hi08yg.jpg

Okay, I fail. :)

Lol. WordPress makes fools of us all!

It’s normal HTML tags here. Here’s how you do it:

url_of_image.jpg

Actually WordPress automatically pulls up JPEGs if you include the FULL URL ending in .jpg on its own new line, NOT within a paragraph. Same thing applies to YouTube URLs.
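The rule described above can be sketched as a small check. This only illustrates the rule as stated in the comment and is not how WordPress itself implements embedding; the pattern `LONE_IMAGE_URL` and the function `lone_image_urls` are hypothetical names invented for this example.

```python
import re

# A line qualifies only if the whole line is one http(s) URL ending in .jpg/.jpeg.
LONE_IMAGE_URL = re.compile(r"^https?://\S+\.jpe?g$", re.IGNORECASE)


def lone_image_urls(comment_text):
    """Return the lines of a comment that consist solely of a .jpg URL."""
    matches = []
    for line in comment_text.splitlines():
        candidate = line.strip()
        if LONE_IMAGE_URL.match(candidate):
            matches.append(candidate)
    return matches


if __name__ == "__main__":
    comment = (
        "Look at this: http://example.com/inline.jpg in a sentence\n"
        "http://oi66.tinypic.com/2hi08yg.jpg\n"
        "https://example.com/picture.png\n"
    )
    print(lone_image_urls(comment))
```

Under this reading of the rule, a link that ends in a query string rather than in .jpg would not qualify, which may be why some of the image links in this thread stayed plain text.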

OOPS!

...<img>...</img> Boy, that was hard! https://codex.wordpress.org/Writing_Code_in_Your_Posts

<img>…</img>

ANYWAY!!! Use shift-comma to make a less-than sign, then img, then shift-period for a greater-than sign. The end tag is the same thing but with a slash (under the ? key) in front of img. The code tag is not working correctly.