I don’t think we’ll call virtual assistants done until we can say, “Make me a sandwich” (without adding “sudo”) and have a sandwich made and delivered to us while we sit in front of our televisions. Still, they aren’t completely useless in their current form: they can tell you the time, weather and traffic, schedule meetings or remind you of them, and order things from Amazon. [Pat AI] was interested in building an open source, extensible virtual assistant, so he built P-Brain.

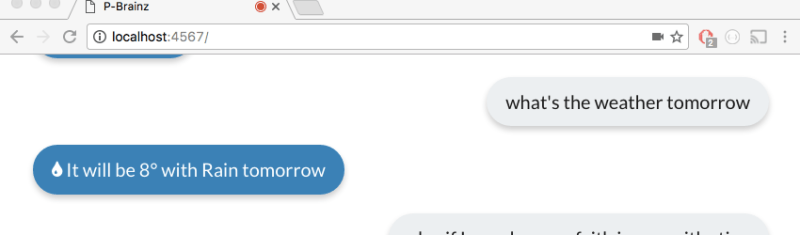

Think of P-Brain as the base for a more complex virtual assistant. It is designed from the beginning to have more skills added on in order to grow its complexity, that is, the number of things it can do. P-Brain is written in Node.js; using a Node package called Natural, it parses your request and matches it to a ‘skill.’ At the moment, P-Brain can tell you the time, date and weather, look up facts on the internet, find and play music, and flip a virtual coin for you. Currently, P-Brain only runs in Chrome, but [Pat AI] has plans to remove that as a dependency. After the break, [Pat AI] goes into some detail about P-Brain and shows off its capabilities. In an upcoming video, he’ll explain in more detail how to add new skills.
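To make the “parse a request and match it to a skill” idea concrete, here is a minimal sketch in JavaScript, the language P-Brain is written in. It is not P-Brain’s actual matcher and it doesn’t use Natural; it assumes each skill lists a few example phrases and scores the query against them with plain word-overlap (Jaccard) similarity. The skill table, the threshold and the `matchSkill` function are all hypothetical.

```javascript
// Hypothetical sketch, not P-Brain's real code: each skill declares example
// phrases, and a query is routed to the skill whose examples share the most
// words with it (Jaccard similarity of the two token sets).

// Lower-case the text and split it into word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9']+/g) || [];
}

// Jaccard similarity: |A ∩ B| / |A ∪ B| for two sets of tokens.
function jaccard(a, b) {
  let shared = 0;
  for (const token of a) {
    if (b.has(token)) shared++;
  }
  const union = a.size + b.size - shared;
  return union === 0 ? 0 : shared / union;
}

// Hypothetical skill table; the run() bodies are placeholders.
const skills = [
  {
    name: "time",
    examples: ["what time is it", "tell me the time"],
    run: () => new Date().toLocaleTimeString(),
  },
  {
    name: "coin",
    examples: ["flip a coin", "heads or tails"],
    run: () => (Math.random() < 0.5 ? "Heads" : "Tails"),
  },
  {
    name: "weather",
    examples: ["what is the weather like", "will it rain today"],
    run: () => "(a weather lookup would go here)",
  },
];

// Return the best-scoring skill, or undefined if no example beats the threshold.
function matchSkill(query, threshold = 0.2) {
  const queryTokens = new Set(tokenize(query));
  let best;
  let bestScore = threshold;
  for (const skill of skills) {
    for (const example of skill.examples) {
      const score = jaccard(queryTokens, new Set(tokenize(example)));
      if (score > bestScore) {
        best = skill;
        bestScore = score;
      }
    }
  }
  return best;
}

const chosen = matchSkill("can you flip a coin for me");
console.log(chosen ? `${chosen.name}: ${chosen.run()}` : "Sorry, I don't know how to do that.");
```

Word overlap is about the crudest matcher that still works for short commands; a real assistant would more likely add stemming, synonyms or a trained classifier (Natural does ship tokenizers, stemmers and classifiers), but which of those P-Brain uses isn’t covered here.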

[Pat AI]’s ideas for P-Brain seem similar to those of the Jasper project, i.e., to build an open source virtual assistant that runs on cheap hardware and rivals the virtual assistants developed by Amazon, Apple and Google, among others. It’s still early in P-Brain’s development, but it can already do plenty. Hopefully, as [Pat AI] and others develop new skills for P-Brain, it will give those commercial assistants some stiff competition.

[Update] – [Pat AI] has already put up an update video where he shows off P-Brain running outside of Chrome.

P-Brain, hire me a mechanical turk to do some mundane task?

You can simply build a Skill to do just that! About 30 lines of JavaScript would get you something along those lines :)
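As a rough idea of what such a skill might look like, here is a hypothetical sketch. The skill shape (`name`, `examples`, an async `run`) and the injected `submitTask` function are assumptions for illustration, not P-Brain’s real skill API and not any crowdsourcing service’s API; a real version would call an actual task-posting service inside `submitTask`.

```javascript
// Hypothetical "hire someone" skill. The skill shape and submitTask() are
// illustrative assumptions, not P-Brain's or any service's real API.

// Pull the task description out of requests like "hire someone to <task>".
function extractTask(query) {
  const match = query.match(/\bhire\b.*?\bto\s+(.+?)[.?!]*$/i);
  return match ? match[1].trim() : null;
}

// Build the skill around an injected submitTask(description) function, so the
// networking part can be swapped out (or stubbed in tests).
function makeHireSkill(submitTask) {
  return {
    name: "hire",
    examples: ["hire someone to", "hire me a worker to"],
    async run(query) {
      const task = extractTask(query);
      if (!task) return "What would you like me to hire someone to do?";
      const id = await submitTask(task);
      return `Posted the task "${task}" (id ${id}).`;
    },
  };
}

// Stand-in for a real task-posting call: it only logs and returns a fake id.
async function fakeSubmitTask(description) {
  console.log(`[stub] would post task: ${description}`);
  return "task-1";
}

const hireSkill = makeHireSkill(fakeSubmitTask);
hireSkill
  .run("P-Brain, hire me a mechanical turk to transcribe my receipts?")
  .then(console.log);
```

Injecting `submitTask` keeps the language handling testable on its own and leaves the choice of service (and its credentials) outside the skill.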

“P-Brain only runs in Chrome, but [Pat AI] has plans to remove that as a dependency”

Chrome is turning into what IE was back in the day. Does things differently from all the other browsers but has such a big market share that you have to make exceptions for it.

It’s less about Chrome and more about Google’s Cloud Speech API, which outside of Chrome (where it’s free) is very expensive to use. I’ve also linked the RasPi client in the video at the bottom of the article (it works on most Linux distros & macOS) if you don’t want to be stuck with Chrome :)

> Google’s Cloud Speech API, which outside of Chrome (where it’s free) is very expensive to use.

So, basically what you’re saying is, it ‘Does things differently from all the other browsers but has such a big market share that you have to make exceptions for it.’

Just like IE did, back in the day.

No, no, I agree that Chrome is quickly becoming the devil, but I’m just saying that my use of Chrome is only one example; the project can make use of several voice-to-text APIs :) Trust me, I wish there were a standard for this across all browsers; it would have made building this infinitely simpler.

so it’s a box that can google. wow.

No, it’s not.

“designed from the beginning to have more skills added on in order to grow its complexity”

For those who don’t get it, that means it can do whatever you want.

Well said, Gordon. @pff’s comment is like saying ‘It’s a box that can Google, wow’ about the Raspberry Pi. Make it control your smart appliances, notify you when a celeb dies, keep you company when you’re lonely… basically, use your imagination to make it do literally anything with your voice (including making you a sandwich, if you have a networked sandwich-making robot handy).

So it’s another worldly annoyance that won’t have sex with me. GREAT!

Well, with the rise of sex robots, I don’t see why it wouldn’t!

Don’t get me wrong, it’s nicely executed and kudos to the author for not just tinkering but also teaching others to tinker.

But if I understand correctly, this works only as long as someone is doing speech recognition for it.

And this someone seems to be Google (either through Chrome or through someone else’s Chrome).

It has lipstick on it, but it’s still the same old hog.

Not quite. Speech-to-text is also done using Wit.ai (as in my RasPi client), and it can also be done with open source speech recognition libraries like PocketSphinx or Julius, or with other online APIs like Houndify or the Microsoft Cognitive Services Speech API. This project is more about converting a natural language query into a contextual response or command and less about the speech-to-text conversion. The Raspberry Pi client, for instance, also has console input, so even without STT this project stands on its own (IMH/biasO).
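The point about swappable speech-to-text can be sketched as a small interface: the assistant core only needs something that turns input into text, so a cloud API, an offline engine or plain console input can sit behind the same method. This is a hypothetical illustration, not P-Brain’s or the RasPi client’s actual code; the `transcribe` method, the recognizer objects and `handleUtterance` are assumptions, and the speech engines are left as stubs rather than calling any real API.

```javascript
// Hypothetical sketch: any recognizer exposing async transcribe(input) -> text
// can be plugged in. None of these are real SDK calls.

// Console/text "recognizer": the input is already text, so pass it through.
const textRecognizer = {
  name: "console",
  async transcribe(input) {
    return String(input).trim();
  },
};

// Placeholder for an online or offline engine (Wit.ai, PocketSphinx, ...).
// A real version would send the audio to the engine and return its transcript.
function makeStubRecognizer(name, cannedTranscript) {
  return {
    name,
    async transcribe(_audio) {
      return cannedTranscript;
    },
  };
}

// The assistant core never needs to know which engine produced the text.
async function handleUtterance(recognizer, input, respond) {
  const text = await recognizer.transcribe(input);
  if (!text) return "Sorry, I didn't catch that.";
  return respond(text);
}

// Trivial responder standing in for the skill matcher.
const echo = (text) => `You said: ${text}`;

handleUtterance(textRecognizer, "  flip a coin  ", echo).then(console.log);
handleUtterance(makeStubRecognizer("offline-stub", "what time is it"), new Uint8Array(0), echo)
  .then(console.log);
```

Under this design, swapping Google for another service means passing a different recognizer object; the query-to-skill part of the pipeline stays unchanged.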

If it’s as modular as he says, then there’s no reason not to swap out Google and plug in another service as and when one becomes available, whether that voice recognition and AI element comes from another big company or from a future, more trusted open source project.

If that part isn’t swappable yet, why not try to allow for it later?

Interesting.

Very interesting! I’ve been keeping an eye out for a privacy-friendly FOSS Alexa/Siri substitute for a while, this is the most finished solution I’ve seen yet.

Great to hear that! Makes this endeavor all the more worthwhile :)

But an Echo Dot is cheap (especially on sale), and Google Home will get there. Both are becoming very extensible. P-Brain will never fly.

It doesn’t matter if it ‘flies’ or not; it’s not a business, it’s an open source project that allows people to dick about with voice assistants if they’re interested. (It’s also vastly less complex to build clients and extensions for P-Brain than for either of those two platforms, but that’s beside the point.)

It’ll fly for me. I want to glue speech recognition and virtual assistant functionality into my home automation. I am also completely against any of my raw data leaving my own subnet on anything other than my terms. I currently have an automation server running Home Assistant, and I’ve been fighting a bit with getting CMU Sphinx to work on it, with a view to having lightweight network nodes send the heavy lifting for speech recognition back to it. If this is modular, it could slot nicely into that framework.

An intelligent open source assistant is now one of the FSF’s high priority projects.

https://www.fsf.org/campaigns/priority-projects/personalassistant

A live demo (if you don’t mind the self-signed SSL cert) is available for Chrome users (I’m sorry, @flyinglotus1983, it’s the cleanest way to allow people to interact with it online) on the project’s GitHub web page: https://patrickjquinn.github.io/P-Brain.ai/

Perhaps it’s just me, but glancing at this article and seeing P-Brain in the title, I was instantly reminded of the p.Brain family of Micromagic boards for hexapods and was expecting some IK-driven hexapod assistant, not a dismal Alexa clone that could be replaced by Siri, Cortana, or the like.

Actually, a hexapod assistant sounds pretty neat. Why don’t you go off, build a finished project, and come back with your results so we can ‘critique’ your work in a similar fashion? I’ll ask P-Brain to set a timer while I wait for your return with the goods.

Wow that’s a lot of butthurt you’ve got there, you should look into getting an ointment for that.

It’s neat, though it’s merely a client for a virtual assistant. The real deal will be far more impressive but will need some real horsepower behind it.

I quite like the Festival speech synthesizer; it is reasonable for an open source project.

http://www.cstr.ed.ac.uk/projects/festival/onlinedemo.html

I like your shapes, friend.