We recently looked at the origins of the integrated circuit (IC) and the calculator, which was the IC’s first killer app, but a surprise twist is that the calculator played a big part in the invention of the next world-changing marvel, the microprocessor.

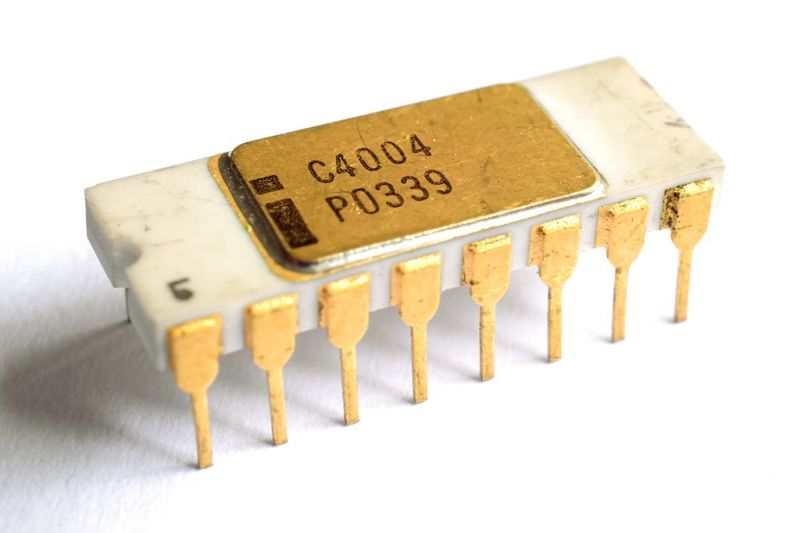

There is some dispute as to which company invented the microprocessor, and we’ll talk about that further down. But who invented the first commercially available microprocessor? That honor goes to Intel for the 4004.

Path To The 4004

We pick up the tale with Robert Noyce, who had co-invented the IC while at Fairchild Semiconductor. In July 1968 he left Fairchild to co-found Intel for the purpose of manufacturing semiconductor memory chips.

While Intel was still a new startup living off its initial $3 million in financing, and before it had a semiconductor memory product, it took on custom work, as many startups do to survive. In April 1969, Japanese company Busicom hired them to do LSI (Large-Scale Integration) work for a family of calculators.

Busicom’s design, consisting of twelve interlinked chips, was considered a complicated one. For example, it included shift-register memory, a serial type of memory which complicates the control logic. It also used Binary Coded Decimal (BCD) arithmetic. Marcian Edward Hoff Jr., known as “Ted” and head of Intel’s Application Research Department, felt that the design was even more complicated than a general purpose computer like the PDP-8, which had a fairly simple architecture. He felt they might not be able to meet the cost targets, and so Noyce gave Hoff the go-ahead to look for ways to simplify it.

Hoff realized that one major simplification would be to replace hard-wired logic with software. He also knew that scanning a shift register would take around 100 microseconds whereas the equivalent with DRAM would take one or two microseconds. In October 1969, Hoff came up with a formal proposal for a 4-bit machine which was agreed to by Busicom.

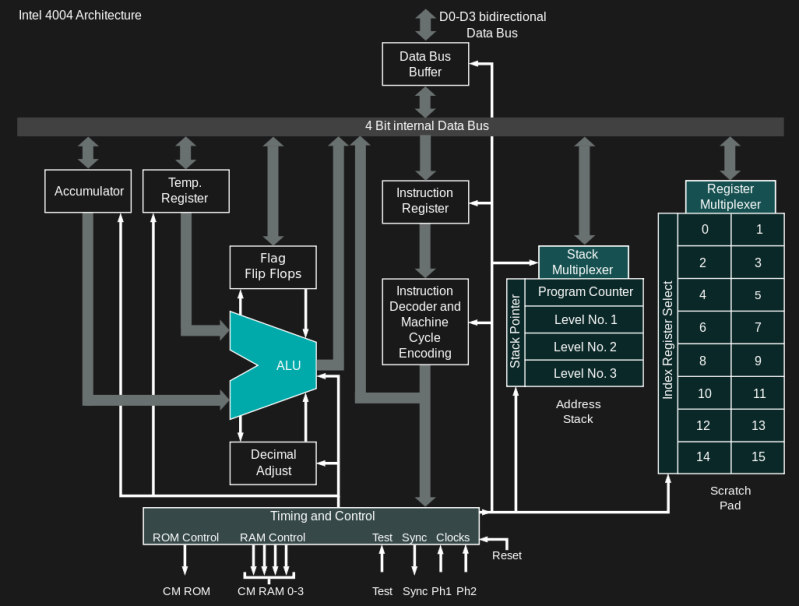

This became the MCS-4 (Micro Computer System) project. Hoff and Stanley Mazor, also of Intel, with help from Busicom’s Masatoshi Shima, came up with the architecture for the MCS-4 4-bit chipset, which consisted of four chips:

- 4001: 2048-bit ROM with a 4-bit programmable I/O port

- 4002: 320-bit DRAM with 4-bit output port

- 4003: I/O expansion that was a 10-bit static, serial-in, serial-out and parallel-out shift register

- 4004: 4-bit CPU

Making The 4004 Et Al

In April 1970, Noyce hired Federico Faggin from Fairchild in order to do the chip design. At that time the block diagram and basic specification were done and included the CPU architecture and instruction set. However, the chip’s logic design and layout were supposed to have started in October 1969 and samples for all four chips were due by July 1970. But by April, that work had yet to begin. To make matters worse, the day after Faggin started work at Intel, Shima arrived from Japan to check the non-existent chip design of the 4004. Busicom was understandably upset but Faggin came up with a new schedule which would result in chip samples by December 1970.

Faggin then proceeded to work 80 hour weeks to make up for lost time. Shima stayed on to help as an engineer until Intel could hire one to take his place.

Keeping to the schedule, the 4001 ROM was ready in October and worked the first time. The 4002 DRAM had a few simple mistakes, and the 4003 I/O chip also worked the first time. The first wafers for the 4004 were ready in December, but when tried, they failed to do anything. It turned out that the masking layer for the buried contacts had been left out of the processing, resulting in around 30% of the gates floating. New wafers in January 1971 passed all the tests that Faggin threw at them. A few minor mistakes were later found and in March 1971 the 4004 was fully functional.

In the meantime, in October 1970, Shima was able to return to Japan where he began work on the firmware for Busicom’s calculator, which was to be loaded into the 4001 ROM chip. By the end of March 1971, Busicom had a fully working engineering prototype for their calculator. The first commercial sale was made at that time to Busicom.

The Software Problem

Now that Intel had a microprocessor, they needed someone to write software. At the time, programmers saw prestige in working with a big computer. It was difficult enticing them to stay and work on a small microprocessor. One solution was to trade hardware, a sim board for example, to colleges in exchange for writing some support software. However, once the media started hyping the microprocessor, the college students came banging on Intel’s door.

To Sell Or Not To Sell

Intel’s market was big computer companies and there was concern within Intel that computer companies would see Intel as a competitor instead of a supplier of memory chips. There was also a question about how they would support the product. Some at Intel also wondered whether or not the 4004 could be used for more than just a calculator. But at one point Faggin used the 4004 itself to make a tester for the 4004, proving that there were more uses.

At the same time, cheap $150 handheld calculators were creating difficulties for Busicom’s more expensive $1000 desktop ones. They could no longer pay Intel the agreed contract price. But Busicom had exclusive rights to the MCS-4 chips. And so a fateful deal was made wherein Busicom would pay a lower price and Intel would have exclusive rights. The decision was made to sell it and a public announcement was made in November 1971.

By September 1972 you could buy a 4004 for $60 in quantities of 1 to 24. Overall, around a million were produced. To name just a few applications, it was used in: pinball machines, traffic light controllers, cash registers, bank teller terminals, blood analyzers, and gas station monitors.

Contenders For The Title

Most inventions come about when the circumstances are right. This usually means the inventors weren’t the only ones who thought of it or who were working on it.

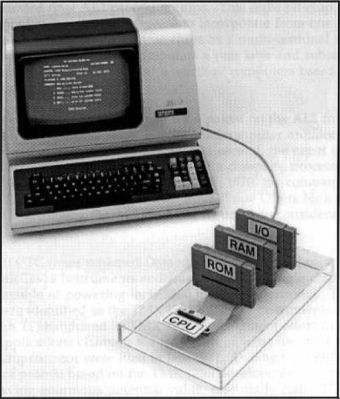

In October 1968, Lee Boysel and a few others left Fairchild Semiconductor to form Four-Phase Systems for the purpose of making computers. They showed their system at the Fall Joint Computer Conference in November 1970 and had four of them in use by customers by June 1971.

Their microprocessor, the AL1, was 8-bit, had eight registers and an arithmetic logic unit (ALU). However, instead of using it as a standalone microprocessor, they used it along with two other AL1s to make up a single 24-bit CPU. They weren’t using the AL1 as a microprocessor, they weren’t selling it as such, nor did they refer to it as a microprocessor. But as part of a 1990 patent dispute between Texas Instruments and another claimant, Lee Boysel assembled a system with an 8-bit AL1 as the sole microprocessor, proving that it could work.

Garrett AiResearch developed the MP944 which was completed in 1970 for use in the F-14 Tomcat fighter jet. It also didn’t quite fit the mold. The MP944 used multiple chips working together to perform as a microprocessor.

On September 17, 1971, Texas Instruments entered the scene by issuing a press release for the TMS1802NC calculator-on-a-chip, with a basic chip design designation of TMS0100. However, this could implement features only for 4-function calculators. They did also file a patent for the microprocessor in August 1971 and were granted US patent 3,757,306 Computing systems cpu in 1973.

Another company that contracted LSI work from Intel was the Computer Terminal Corporation (CTC) in 1970 for $50,000. This was to make a single-chip CPU for their Datapoint 2200 terminal. Intel came up with the 1201. Texas Instruments was hired as a second supplier and made samples of the 1201 but they were buggy.

Intel’s efforts continued but there were delays and as a result, the Datapoint 2200 shipped with discrete TTL logic instead. After a redesign by Intel, the 1201 was delivered to CTC in 1971 but by then CTC had moved on. They instead signed over all intellectual property rights to Intel in lieu of paying the $50,000. You’ve certainly heard of the 1201: it was renamed the 8008 but that’s another story.

Do you think the 4004 is ancient history? Not on Hackaday. After [Frank Buss] bought one on eBay he mounted it on a board and put together a 4001 ROM emulator to make use of it.

[Main image source: Intel C4004 by Thomas Nguyen CC BY-SA 4.0]

When did the RCA microprocessor enter the scene?

Early 1975, if talking about the early work, which required two ICs. But we never heard much about it at the time.

Early 1976 for the 1802, which means the COSMAC ELF in Popular Electronics that summer came quite soon after the CPU was released.

The MOS Technology 6502 got announced in the fall of 1975; apparently they had been selling samples that summer at a convention. But it’s confusing since the 6501 got the early promotion, and it was soon off the market since the 6501 was meant as a drop-in replacement for Motorola’s 6800. Internally, the 6501 and 6502 were the same.

The Motorola 6800 came out sometime in 1974.

So much happened in a short space, but living through it, there was more distinction.

Michael

Yes, the MOS Technology 6501 acted as a direct replacement for the Motorola 6800, so Motorola sued and MOS Technology pulled the 6501. A few years ago, one of the 6501 chips sold for over $600 to a private collector.

You know I never saw the Mark-8 issue of Radio Electronics till later? It was one magazine I didn’t buy, in retrospect I think because it was kept separately because it was larger. I can’t figure out any other reason. Popular Electronics switched to the larger size that fall, but I still overlooked it. I never sampled Electronic World either.

Michael

@Michael “I never … Radio Electronics … Popular Electronics … Electronic World either. ”

It’s not too late. All three are now archived on line. See http://www.americanradiohistory.com/index.htm

Not same internally, registers and instruction set different.

Pinout was the same. Was much less expensive.

“They did also file a patent for the microprocessor in August 1971 and were granted US patent 3,757,306 Computing systems cpu in 1973.”

And as Paul Harvey would say, “and now for the rest of the story”.

“Most inventions come about when the circumstances are right. ”

I would change that to “Most inventions ARE REMEMBERED if the circumstances are right”.

Many people think that certain individuals and companies were the first to invent technology that we use everyday, but the truth is that many of those inventions already existed but the well-known inventors/companies that we associate with them just happened to show up at the right place at the right time.

But that’s another article, I suppose :-)

===Jac

Most people would be *amazed* at how many things in the “science press release websites” are at most rehashes, and in tons of cases, poor copies of things done from the 30’s-’60s (for example). Not even good copies. Of course, these are not usually things “whose time has come” – and there’s a lot of deceptive reporting (evidently no editing, and scientists don’t seem to write very well…). Things like “new process doubles solar cell efficiency” on examination turn out to take some obscure tech from 1% to 2% – while I have ~ 15% efficient panels on my roof – bought last decade. Batteries, don’t get me started. I’ve written a lot of emails to then-disappointed “research groups” who publish this crap fishing for a grant – to tell them they’re not new, and they’re not even as advanced as 1946. Education system seems to have failed us – but we new that with peepul thikin looser was the opposite of winner. Blind, lead the deaf and the TL;DR, who needs to know the past?

I recall one especially disappointed research group who thought they’d invented the “plasma transistor” when I sent them a data sheet from an old Philips vacuum tube handbook with the high power version of that – to be used in 6v car radios that didn’t need much power out of the vibrator supply anymore, due to it being able to operate at low voltage and high current.

As you say, it’s another article, maybe a lot of them; the occurrences of this are rampant.

If nobody copied the research, it wasn’t even science. Maybe engineering or natural philosophy, science isn’t the only way to do things, but science is about what is repeatable, and you don’t know that unless you’re repeating things.

I always say that Thomas Edison was the Bill Gates of his day. Neither man was responsible for truly inventing anything. What both men did was find and develop what technology there was and find a market for it.

As an example, I present Édouard-Léon Scott de Martinville – inventor of the phonautograph. Today, he is remembered as the first human in history to preserve the sound of his voice in a fixed medium. He did so when Edison was a teenager. The reason he was not remembered for his work was because his invention did not include a mechanism for playback. It took more than a century and a half for his recordings to be heard.

Similarly, Edison did not invent the light bulb or the generating plant or anything else involved in the commercialization of electric lighting. What he did was improve the light bulb as it was so that it had a commercially useful lifespan, created standardized fittings (to this day we in North America screw our bulbs into “Edison” sockets), poured a ton of money into creating the first electric distribution network and so on.

If Bill Gates didn’t do anything, then no one before him has ever done anything. Sure, we stand on the shoulders of giants but then again THEY DID AS WELL. Get over yourself.

Bill Gates got his big break sale to IBM through his mom hooking him up with her contacts at IBM. Bill then bought DOS for a pittance from the author (no doubt failing to disclose the contract with IBM).

Gates was shrewd and had the ethics of a snake. I suppose there is a sort of person who admires that. But, accomplishments of his own doing? Pretty much zero.

No, Swiftwater Bill got his big break when he convinced MITS to buy his BASIC. That made a big splash when the “industry” was very small. He had that open letter to hobbyists, about the “stealing” of his BASIC, which probably guaranteed that we all knew his name.

Since he was there early, BASIC became associated with Microsoft. So as more companies came along, most sought out Microsoft to do BASIC for their computer. Relatively few bothered with their own version. When the all in ones hit in 1977, they had BASIC in ROM, and most came from Microsoft. OSI, Radio Shack (after the limited BASIC in the first TRS-80) were just some. I thought Applesoft was written by Microsoft, I can’t remember about Commodore. Microsoft was smart, not signing away everything to MITS, but other companies did well too, negotiating deals to get a good price. There was at least one bug in OSI’s version, but it remained because they had a large number of ROMs made with it and they had to use them up.

It was that history that sent IBM to Microsoft. They might have been confused about things since the Z-80 card, which allowed one to run CP/M on the Apple II came from Microsoft, their first hardware product. Yes, IBM made Microsoft a giant, but it had been big within the small computer field before that.

That said, the premise is correct. Bill Gates didn’t create/invent things, he adapted. So he coded an existing language, BASIC, in 8080 for the Altair 8800. I gather someone else coded the math or floating point subroutines. He may have done this first, but not by much. The whole People’s Computer Company/Dr. Dobbs circle was coming fast with Tiny BASIC, so it would have come anyway. PCC was one of the existing groups that were promoting the use of BASIC.

And once he’d made that first version of his BASIC, it was relatively easy to recode to the other popular CPUs. But he was willing to adapt it to each computer, to take advantage of any “different” hardware.

Michael

Indeed you are correct. Mary Maxwell Gates was chair of United Way, where she worked with John Opel, who was then President of IBM Corp, and soon to be chairman and CEO. Opel was helping Frank Cary start up Independent Business Units, including ESD in Boca, so when the one needed software, it was a shoo-in for Opel to ask why they didn’t give Mary’s boy, Bill, a call.

LOL, who wrote that Bill Gates didn’t do anything?

I once talked with a guy who authored what was generally thought of as one of Microsoft’s major contributions. I asked him what Bill had created that was totally original. His response— the PEEK and POKE commands.

What’s up with that AL1 photo from the 1969 courtroom photo..? Those ROM/RAM/IO carts undeniably look like Super Nintendo cartridges from 20 years in the future! They have the same number of side ribs (5) and everything. Did Nintendo steal their design aesthetic 20 years later??

Yet another theft of future technology through the use of a Time Machine.

Isn’t that a *1990* courtroom photo?

Ah, and here is the source link: http://corphist.computerhistory.org/corphist/documents/doc-4946dbc7a541f.pdf

Indeed it does say “The external memory, containing the Customer Record demonstration program, is enclosed in a rugged interchangeable cartridge similar to those used to store ROM game programs for Nintendo video game sets.”

That AL1 prototype uses Super Nintendo cart shells for the “RAM”, “ROM” and “I/O” blocks! I guess this was the 1995 model built for the purpose of winning a court case. Nintendo should take a cut of the settlement.

Yeah just finished reading the document and realized the litigation was actually in 1995. So yeah they used some SNES carts for the demo. Craziness!

I’m curious, which pinball machines have the 4004? I’ve repaired a respectable number of games and never seen a 4004. As far as I remember the Gottlieb sys1 games are the only ones with a 4 bit processor.

I’m no pinball expert but from http://www.ipdb.org/machine.cgi?id=5103 the “Bally Brain” used it.

I might be showing my ignorance here, but how did they plan to make a useful calculator out of a 4-bit processor that, presumably, would have been incapable of working with numbers larger than 15?

4 bits is enough for digits 0 through 9, which is what humans work with!

More sets of bits = more calculator digits (e.g. have your RAM as a set of 4-bit bytes)

Ah! So the calculator would be doing arithmetic using roughly the same methods we used in elementary school – carry the two, and so on. When they taught us binary arithmetic in school, it was just sort of taken as read that you’d have a suitable number of bits. The rollover flag was mentioned, but more in the sense that “if you see this, use more bits”.

Yes, Binary Coded Decimal was “a thing” or even “the thing”, then. There were questions about what to do with the *extra* bits! DEC used octal notation which was easy on humans (well, this one), there was no such thing as bytes, really (there was 5 bit Baudot and there was EBCDIC), no one used hex, obviously no unicode…and you just used more “digits” or whatever you called a nybble back then if you needed more. We never carried a 2, as we only ever added two sets of digits at a time (and one digit from each at a time) – so at most you carried a 1. “Decimal adjust” was fairly complex compared to the other instructions, in terms of what it took to implement and how long it took.

DEC used octal notation for documentation purposes because most PDP-11 instructions use three bit wide fields to specify what register to use in the source and destination addresses. When represented in octal you could see the source and/or destination addressing by inspection. DEC switched to hex for documentation on the VAX series, since it did not have 3 bit wide fields in the instructions, so there was no advantage to using octal.

BCD was still a thing in the 1990s. Think of the good old HP28, HP-48/49/SX/GX and predecessors and successors. They all use BCD arithmetic. And probably a ton of other pocket calculators.
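To make the octal point concrete, here is a minimal Python sketch that splits a PDP-11 double-operand instruction word into its fields. The function name `decode_double_operand` and the dictionary keys are made up for illustration; the example word is the commonly cited encoding of `MOV R1, R2`.

```python
def decode_double_operand(word):
    """Split a 16-bit PDP-11 double-operand instruction into its fields.

    Hypothetical helper for illustration only; field names are assumptions.
    """
    return {
        "opcode":   (word >> 12) & 0o17,  # top 4 bits (includes the byte flag)
        "src_mode": (word >> 9) & 0o7,    # 3-bit source addressing mode
        "src_reg":  (word >> 6) & 0o7,    # 3-bit source register
        "dst_mode": (word >> 3) & 0o7,    # 3-bit destination addressing mode
        "dst_reg":  word & 0o7,           # 3-bit destination register
    }


word = 0o010102  # MOV R1, R2

# In octal each of the last four digits is one 3-bit field.
print(f"{word:06o}")  # 010102
# In hex the same word hides the field boundaries.
print(f"{word:04x}")  # 1042
print(decode_double_operand(word))
```

Reading `010102` right to left gives destination register 2, destination mode 0, source register 1, source mode 0, which is exactly the "by inspection" property described above.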

I suspect they used BCD, as others have pointed out. However, most processors have a “carry” bit that can be used to do arithmetic on numbers larger than the native cpu word size when working in binary.

Basically, you add two 4 bit numbers. If the result overflows 4 bits, the carry bit is set. Next, you add the next most significant 4 bits, plus the carry bit. Keep going for as many 4 bit chunks as you need to add.

Subtraction is similar (using the carry bit as a borrow). Multiplication and division are derived from addition and subtraction, similar to the way it’s done by hand.
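The carry-chain addition described above can be sketched in Python. This is only an illustration of the idea, not 4004 code; the helper names `add_nibbles`, `to_nibbles` and `from_nibbles` are invented for the example.

```python
def add_nibbles(a, b):
    """Add two equal-length lists of 4-bit values, least significant first.

    Returns the list of result nibbles and the final carry (0 or 1).
    """
    result = []
    carry = 0
    for x, y in zip(a, b):
        total = x + y + carry        # at most 15 + 15 + 1 = 31
        result.append(total & 0xF)   # keep the low 4 bits
        carry = total >> 4           # 1 if the sum overflowed 4 bits
    return result, carry


def to_nibbles(value, count):
    """Break an integer into `count` 4-bit chunks, least significant first."""
    return [(value >> (4 * i)) & 0xF for i in range(count)]


def from_nibbles(nibbles):
    """Reassemble an integer from 4-bit chunks, least significant first."""
    return sum(n << (4 * i) for i, n in enumerate(nibbles))


total, carry = add_nibbles(to_nibbles(0x1234, 4), to_nibbles(0x0FFF, 4))
print(hex(from_nibbles(total)), carry)  # 0x2233 0
```

Each loop iteration stands in for one 4-bit add-with-carry, so a 16-bit sum takes four of them.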

>> how did they plan to make a useful calculator out of a 4-bit processor

Same way we humans do it: One digit at a time. Add the two lowest-order digits, carry a 1 to the next-lowest order.

All Intel chips (and, I presume, all others) have an ADD instruction and an ADC (add with carry). To add numbers with N digits, set pointers to the two low-order digits, add them, bump the pointers, ADC the rest.

This is why Intel chips used Little-Endian addressing. You store the numbers low-order digit first, and you set the pointers to point to the “beginning” of the number. Otherwise you have to start at the end of the digit array and go backwards.
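As a rough Python sketch of that digit-at-a-time loop: one decimal digit per 4-bit cell, stored low-order digit first, with the loop index playing the role of the pointers. The names `bcd_add`, `to_digits` and `from_digits` are hypothetical, and this models the arithmetic only, not any particular chip's instructions.

```python
def bcd_add(a, b):
    """Add two decimal numbers stored one digit per cell, low-order digit first."""
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        da = a[i] if i < len(a) else 0   # treat missing digits as zero
        db = b[i] if i < len(b) else 0
        total = da + db + carry          # at most 9 + 9 + 1 = 19
        if total > 9:
            total -= 10                  # keep a single decimal digit
            carry = 1                    # the carry is never more than 1
        else:
            carry = 0
        result.append(total)
    if carry:
        result.append(1)                 # the sum grew by one digit
    return result


def to_digits(n):
    """Integer to digit list, low-order digit first."""
    return [int(ch) for ch in reversed(str(n))]


def from_digits(digits):
    """Digit list (low-order first) back to an integer."""
    return int("".join(str(d) for d in reversed(digits)))


print(from_digits(bcd_add(to_digits(9999), to_digits(1))))  # 10000
```

Because the low-order digit sits at index 0, the loop walks forward through memory while the carry ripples toward the high-order end.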

Also, as others have said, 4-bit CPUs make it easy to do decimal instead of binary arithmetic, which is nice for calculators. Intel used the decimal-adjust instruction DAA to support adjusting the carry bit properly. Decimal is convenient for adding and subtracting– not so much for mult and div — and of course you don’t have to worry about dropping bits during the binary-to-decimal conversion.

The 8080 actually had TWO carry flags. The “auxiliary carry” was set for the low-order nybbles. So the DAA took care of both carries.
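Here is a simplified Python model of how a decimal adjust can use the two carries to fix up a binary sum of two packed-BCD bytes (two decimal digits per byte). It is a sketch assuming valid BCD inputs and is not flag-exact for a real 8080; `add8`, `decimal_adjust` and `bcd_byte` are names invented for the example.

```python
def add8(a, b):
    """Plain 8-bit binary add; returns (result, carry, aux_carry)."""
    total = a + b
    aux = ((a & 0x0F) + (b & 0x0F)) > 0x0F   # carry out of the low nibble
    return total & 0xFF, total > 0xFF, aux


def decimal_adjust(acc, carry, aux):
    """Correct a binary sum of two packed-BCD bytes back into packed BCD."""
    if (acc & 0x0F) > 9 or aux:
        acc += 0x06                 # skip the six unused codes in the low digit
    if (acc >> 4) > 9 or carry:     # acc may briefly exceed 8 bits here
        acc += 0x60                 # same correction for the high digit
        carry = True
    return acc & 0xFF, carry


def bcd_byte(n):
    """Pack a number 0..99 into one byte, one decimal digit per nibble."""
    return ((n // 10) << 4) | (n % 10)


acc, carry, aux = add8(bcd_byte(58), bcd_byte(47))
acc, carry = decimal_adjust(acc, carry, aux)
print(f"{int(carry)}{acc:02x}")  # 105
```

The auxiliary carry matters when the low digits sum to 16 or more: the low nibble then looks like a valid digit (0 to 2), so the adjust step needs the flag to know a correction is still required.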

How do 64-bit CPUs do it? Do they have 20 auxiliary carries? Nah, I don’t think so. We gave up trying to use base-10 arithmetic a long time ago.

I’ll just leave this here: https://www.thocp.net/biographies/pickette_wayne.html

No BS. I put a suit on this guy when I worked at mens warehouse in Illinois. He’s on my Facebook!

Is it just me or are those SNES cartridges in the photo of the AL1 as a microprocessor?

Not just you, but if it’s a demonstration piece I could see it being a thing similar to what happened here: https://youtu.be/nCAMMKsbEvw?t=13m57s

Check out “From Chips to Systems” by Rodnay Zak’s 1971 for excellent technical description of architecture and operation. Tops on my reading list anyway!

Had to look that up since the author sounded familiar. Had the 81 version of the book.

Sorry, had a brain cramp. It is “Zaks” and it was 1979 I believe

Yes, I was going to say 1971 was a bit early. His writing was from the microcomputer age. I don’t think I have that book, but I have one or two Zak books (he had a publishing company) related to the 6502.

It is interesting, various people self-published books about microprocessors, and for a while, they were the references. The traditional hobby electronic publishers like Sams and Tab arrived later with books on the subject.

So Scelbi started out with an 8008 machine in I think late 1974, I never saw the ad in the back of QST until I looked for it later. It didn’t make a wave, and soon he dropped the hardware. But he had a book about programming the 8008 and a book about useful subroutines. As other microprocessors arrived, he came out with similar books for them.

Adam Osborne came out with a 3 volume set, the first version might have been in a binder, an introduction then covering the existing microprocessors. That broadened to having books about each of the important microprocessors. A few years later he sold the company to McGraw-Hill, and started his own computer company.

Then Rodnay Zaks had his books and company, I recall the books being more hardware oriented (as in adding I/O).

Michael

Anyone have a book recommendation on technical computer history from the 60s, 70s and 80s era?

Not a book but an online timeline: http://www.computerhistory.org/timeline/1933/

Thanks!

My first job was at a company using two 4004 processors (and assorted 1702 EPROMS) to control a holographic read-only-memory system. I still have some of the holograms.

A PDP-11 (which I learned to program on and loved) was used to record the holograms.

At that time, I knew in my heart that this was going to be my best job. I’ve been right so far. I learned soooo much from a bright bunch of helpful guys.

I probably still have some 4004 data sheets, etc.

John P

Radio Electronics published an article in July of 1974 for the “Mark 8” computer (https://en.wikipedia.org/wiki/Mark-8). It used Intel’s first 8 bit processor, the PMOS 8008 with a blazing 125KHz clock (a NOP instruction took 4 clocks I think). I was in college. And working at an electronics/HiFi store at the time to put me through school and support my electronics “habit”. I ordered the CPU board via the magazine article and then a bunch of surplus and pulled parts from Poly Paks, California Digital, Jameco, and the like. I got my power supply transformer and bulk capacitors from John Meshna. While building the CPU board and power supply, I designed and built my own 2KB SRAM single-sided PC board (with Kepro film, boards, and developer) using sixteen 2102 NMOS parts (also surplus) and more “real” TTL parts (the whole thing used that). I got tired of the PDP-8 style paddle switches to enter addresses and data, so I designed and built a hex keypad plus LED display for data entry with “auto-increment” (I just exploited the Mark 8’s basic auto-increment for paddle switch entry). I could “jam” either a 14 bit address (the upper two bits showed the processor’s internal states) or 8 bit data. Then for my senior design project, I modified an Intel published design for a 1702A 256 x 8 bit PMOS UV-EPROM that required +5 and -9V supplies to run and required a -46V pulse for programming! My redesign raised the +5V supply by 46V to do that by bootstrapping the 7805 regulator’s “ground” pin! The only electronic tools I used were an EICO FET-DVM (state of the art JFET front end!) and a Heathkit 5MHz recurrent sweep oscilloscope (both built as kits).

My professor’s jaw dropped when I submitted my project (we never did anything that complicated in class and he said he learned a lot from my design and code). I got an A for it. The Mark 8 was designed by Jonathan Titus who later co-authored the BugBook III tutorial books on microprocessors on the updated Mark 80. He visited one of our EE classes at college. In March of 1975, Popular Electronics published the Altair 8800 article that most say was the first “real” PC (it used the newer and more powerful 8 bit 8080 and the now infamous S-100 bus (because they got a good deal on the 100 pin backplane connector!) which was mostly a buffered and demultiplexed version of the 8080 bus). I think that evolved into the PC-104 bus and its variants.

That was lots of fun and hard work. I seldom got much sleep then.

Look up, you’ll recognize the name of one of the repliers.

The Altair was on the cover of the January 1975 issue of Popular Electronics, which actually came out some time before January. The magazine had moved to the larger format in September or October, just in time, though in retrospect, maybe in anticipation of the computer articles. Though, you couldn’t do much with the articles. PE didn’t publish a schematic for the Altair, it was too big. I think, but I’m not certain, that Radio Electronics didn’t publish the Mark-8 schematic either. In both cases, you were expected to send a small fee to get an information package that had all the details.

Michael

The 100-pin bus in the Altair had the general name “S-100 Bus.” The IEEE approved a modified and revised version of the bus as the IEEE-696 standard in late 1983. The PC/104 bus was released as a standard in 1992 and has undergone extensions and modifications since then. As far as I know, the PC/104 bus never got adopted as an IEEE standard. Wikipedia has articles about the S-100 and PC/104 buses.

Wow, Art! I’m impressed. That project you did with the Mark-8 was something special.

One minor correction: The Altair 8800 article was in the Jan 1975 issue, and it was actually published in Dec ’74. MITS sold a _LOT_ of 8800’s on the basis of that article. One of them was to me.

Thanks for the correction Jack (I still have both sets of articles “somewhere” in my attic).

BTW: I’m a long-time “fan” of yours. I have incorporated some of your stuff into my ILS software that has been landing planes around the world since the ’90s as part of my work at Wilcox Electric, Airsys, Thales ATM (they are all the “same” company).

I also should have mentioned how my senior project got recycled: a friend at work wanted a Commodore 64 and they were offering a $100 trade-in, so I gave that project to him to use as his trade-in and they accepted it! He was real happy.

Thanks, Art. I’m planning to post a lot of my articles on my website, JackCrenshaw.com. I plan to do it Some Day Real Soon Now.

what was it used for

“Invention”.

The single chip microprocessor was not really an invention, an original idea no one else would have thought about.

It was just the consequence of higher chip integration. Apollo flight computers were made of 3 input NOR gates, later computers could use monolithic RAM and ROM, multiple flipflops and gates per chip, bit slice ALUs of various widths (as the 4bits 74181 or AM2901 family)… Early microprocessors came for low end applications, particularly calculators which were a very competitive and innovative market at that time (comparable to smartphones nowadays : Imagine having a calculator in your pocket, instead of a mechanical thing larger and heavier than a typewriter, or an electronic one, able to calculate logarithms but as large as a desk !)

In the 70’s, there were low performance monolithic CPUs, and “real” computers made of dozens of MSI chips, and these designs eventually merged into few high density chips, for example VAX and PDP11 microprocessors in the 80’s.

So you’re saying that the 4004 was just like the Apollo flight computer, only smaller????

That’s like saying the SR-71 Blackbird was like the Wright Brothers’ Flyer, only faster.

Fact is, I’m not quite sure what your point is. Yes, once you understand that a computer can be constructed from simple logic gates, you can build one. Surprise, surprise.

From an architectural standpoint the AGC was much more advanced than the 4004 despite being built from SSI technology.

The 4004 was close to as simple as you could make a cpu capable of common math functions while the AGC probably is closer to the 8086 or TMS9900 as far as complexity goes.

With respect, I think you’re way off on that. The AGC had 1 general-purpose register. The 4004 had 16. The AGC had no stack. The 4004’s wasn’t deep, but it did have three levels, good enough for nested subroutines.

You say the AGC was the equivalent of an 8086 or TMS9900? Most people in the know say it was about the same as that of a digital watch.

Don’t get me wrong: I was working on Apollo at the time, I knew Dick Battin, and have the greatest admiration of both the computer and his team’s implementation of it. But to compare it to the 8086, all the while belittling the 4004, is just plain ludicrous.

Sorry, it wasn’t clear.

Just like nowadays smartphones drive higher integration in chips (integrating RF and DRAM in stacked silicon), 40 years ago calculators (both simple and more advanced programmable ones) drove the innovation for low power, cheap (but slow) integrated microprocessors.

Early monolithic 4…8bits CPUs were designed for optimising existing products. It wasn’t a new invention in search of applications. The Datapoint 2200 story is similar to Busicom wanting to reduce the part count of their calculators.

One consideration was that the microprocessor seemed to be originally seen as a logic replacement. I suppose a calculator is borderline, but the terminal wasn’t. Replace a lot of logic ICs with a microprocessor and software, and the outcome is better. Decades later it’s hard to visualize, but it was a big leap at the time.

The 8008 wasn’t particularly good as a computer, since extra hardware was needed to add a stack, and the 4004 even less so. National Semiconductor had a book out in the early seventies about using software to replace logic.

But some saw a “computer” and that’s where the Mark-8 and Altair 8800 came from. For most of us at the time, the magic word was “computer”, not what it was capable of or how we might use it. So it shifted, they became limited “computers” which you could also use as controllers.

We see it today, “that 555 could be replaced by a microprocessor”. All those “makers” building things with Arduinos and Raspberry Pis don’t need much soldering skill, but their computers aren’t being used for general purposes.

You could use a PDP-8 as a controller, but it was expensive, so you had to replace a lot of logic to make it financially viable. But you could use that Intersil 6100 (a 12-bit microprocessor that ran PDP-8 instructions) to control record presses, because the cost and size were insignificant.

Michael

The PDP-5, parent of the PDP-8 (same instruction set), was designed as a controller for a company that hadn’t quite nailed down what it wanted the thing to do.

If I recall – it was a 4004 (or was it an 8008?) that drove the front panel controller in the PDP-11/05 (if fitted with the programmer’s front panel option – i.e. two toggle switches and a handful of LEDs).

Its role was to replace the rows of ‘expensive’ switches & LEDs with a semi-intelligent boot loader and hardware serial console monitor.

Aaah – good days!

I’m seeing a couple of disturbing trends in this thread. The first is that some folks seem to brush off the 4004 as a mere “calculator,” and not a very good one at that. One fellow even doubted that it could be of any use at all, because it could only count to 15.

Let me assure you that there were indeed people who saw the 4004 for what it was: a technological marvel and the beginning of a revolution that led directly to the powerhouse sitting on your desk.

What could it be used for? In 1975, our company was developing embedded systems _WAY_ before that term became popular. We started with the 4004, but switched to the 4040 when it became available.

Our first product was a controller for a cold-forge machine, a $500,000 monster that could forge precision products by pressures so intense (5000 psi) that steel flowed like water. Our system included spin-dials to set the anvil position and rate, 7-segment displays, the A/D and D/A converters to measure everything, and the digital I/O pins to open & close valves, etc.

Also the small matter of the real-time OS that managed the multiple tasks.

We were also working on a similar controller for a plastic injection molding machine.

Was this the work of a “real” computer? Gee, we certainly thought so.

In fact, we felt so strongly that the 4040 was a real computer, that we wrapped a computer kit around it: The Micro-440. http://www.oldcomputermuseum.com/micro-440.html

We also developed an 8080-based product, a controller for an az-el satellite tracking antenna built by Scientific Atlanta. The software included a 2-state Kalman filter and controller, and a predictive algorithm to proactively handle the “gimbal lock” problem. The math was all done in software floating point, and included all the usual trig functions, square root function, etc. I wrote it.

For the record, it’s true that Intel only saw the 4004 as something useful for controlling traffic lights. In fact, they saw the 8080 the same way. But just because their marketing people were short-sighted, doesn’t mean the rest of us were.

I’ve also seen here the notion that nobody was thinking about DIY computers before, say, Bill Gates and Steve Jobs came along. That’s complete bullhockey! Many dreamers were thinking — or actually BUILDING — their own computers from discrete parts, long before then.

How long before? Um .. can we say “Charles Babbage”?

Well, Ok, that’s a stretch. But even before the 4004 came along, the technology of digital computers was firmly established, and DEC’s minicomputers were everywhere. If the technology is there, there will always be people who want to use it in DIY projects.

My own conversion to digital logic came ca. 1964, in a GE transistor handbook. I had used it to build simple little gadgets like a regulated power supply. But in the back of the handbook, they had a fairly extensive explanation of digital logic, including Boolean algebra, AND and OR gates, even Karnaugh maps. I was hooked.

BTW, if anyone can give me a reference to that GE manual, I’d appreciate it.

I used to sit at my desk and work out logic problems, then solve them to design minimal-gate-count solutions. Example: the “Hello World” of logic problems, the 7-segment decoder.
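For anyone who hasn’t tried that exercise, here is a rough sketch of the starting point in Python: the truth table of a 7-segment decoder, plus a helper that lists the digits for which a given segment is lit. The names `SEGMENTS`, `decode` and `minterms` are made up for illustration, and the segment labels follow the conventional a-g layout. The minimization itself (Karnaugh maps and so on) is left out.

```python
# Segments lit for each decimal digit, using the conventional labels:
# a = top, b = upper right, c = lower right, d = bottom,
# e = lower left, f = upper left, g = middle.
SEGMENTS = {
    0: "abcdef",
    1: "bc",
    2: "abdeg",
    3: "abcdg",
    4: "bcfg",
    5: "acdfg",
    6: "acdefg",
    7: "abc",
    8: "abcdefg",
    9: "abcdfg",
}


def decode(digit):
    """Return the lit segments for one decimal digit (0-9)."""
    if digit not in SEGMENTS:
        raise ValueError("digit must be in the range 0-9")
    return SEGMENTS[digit]


def minterms(segment):
    """Digits for which one segment is lit: the on-set you would minimize."""
    return [d for d, lit in SEGMENTS.items() if segment in lit]


if __name__ == "__main__":
    for seg in "abcdefg":
        print(seg, minterms(seg))
```

Each of the seven on-sets is a separate four-input logic function, and inputs 10 to 15 are don’t-cares for a decimal-only display, which is where most of the gate savings come from.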

Not long after, Don Lancaster wrote his seminal article (in Radio-Electronics, I believe) on Fairchild’s line of low-cost RTL ICs. Packaged in plastic TO-5 format, they looked like 8-pin transistors.

I had seen logic units before — DEC was selling their “Flip-Chip” circuit boards for Space/Military applications, but the Fairchild chips were orders of magnitude cheaper: $1.50 for a J-K flip-flop, $0.80 for a dual NAND gate.

Four flip-flops make a 4-bit register. Two gates make a half-adder, and two half-adders make …um … an adder. Wrap the adders around the register, and you’ve got an accumulator.
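As a rough illustration of that recipe, here is a small Python sketch that builds a 4-bit accumulator out of nothing but single-bit operations. The function names are invented for this example. It assumes the two gates of the half-adder are an XOR and an AND, and it uses the usual extra OR gate to combine the carries of the two half-adders.

```python
def half_adder(a, b):
    """Two gates: XOR gives the sum bit, AND gives the carry bit."""
    return a ^ b, a & b


def full_adder(a, b, carry_in):
    """Two half-adders, plus an OR gate to merge their carries."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2


def accumulate(acc, value, width=4):
    """Add value into a width-bit register, rippling the carry bit by bit.

    Returns the new register contents and the carry out of the top bit.
    """
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((acc >> i) & 1, (value >> i) & 1, carry)
        result |= bit << i
    return result, carry


if __name__ == "__main__":
    print(accumulate(5, 6))   # fits in four bits
    print(accumulate(9, 8))   # overflows, so the carry out is set
```

In hardware all four full adders sit side by side and the register is the four flip-flops. The loop here only stands in for the carry rippling from one stage to the next.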

I was working on a design with a one-bit accumulator, and data stored in shift registers. Accumulators need a lot of pinouts, so they are costly, but shift registers, needing few pins, were cheap even in 1965.

Understand, I don’t claim to be the only person dreaming of a real, live, personal computer. In fact, I claim exactly the opposite. I’m claiming that MANY people, in fact anyone who understood the basic principles and knew electronics, were dreaming along the same lines.

My point is, the dream of a true personal computer was alive and well, even long before Intel made it easy for us.

I think I still have the 4004 I bought when I worked for HP back in the day. I know I still have the 8008 I also bought as an HP employee... I keep them for nostalgia.

I always thought that Intel would have been a better company if they had more engineers and fewer lawyers. They have spent decades fighting competitors with nuisance lawsuits largely without merit.

How about Zilog? They tried to copyright the letter ‘Z’.

The MOS-LSI chip set was part of the Central Air Data Computer (CADC), which had the function of controlling the moving surfaces of the aircraft and displaying pilot information. The CADC received input from five sources: a static pressure sensor, a dynamic pressure sensor, analog pilot information, a temperature probe, and digital switch pilot input. The output of the CADC controlled the moving surfaces of the aircraft. These were the wings, maneuver flaps, and the glove vane controls. The CADC also controlled four cockpit displays for Mach Speed, Altitude, Air Speed, and Vertical Speed. The CADC was a redundant system with real-time self-testing built in. Any single failure in one system would switch over to the other.