We all know how important it is to achieve balance in life, or at least so the self-help industry tells us. How exactly to achieve balance is generally left as an exercise to the individual, however, with varying results. But what about our machines? Will there come a day when artificial intelligences and their robotic bodies become so stressed that they too will search for an elusive and ill-defined sense of balance?

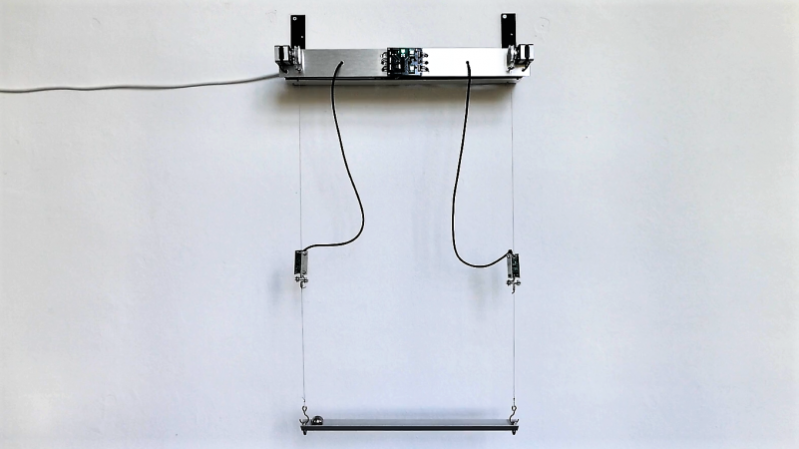

We kid, but only a little; who knows what the future field of machine psychology will discover? Until then, this kinetic sculpture that achieves literal balance might hold lessons for human and machine alike. Dubbed In Medio Stat Virtus, or “In the middle stands virtue,” [Astrid Kraniger]’s kinetic sculpture explores how a simple system can find a stable equilibrium with machine learning. The task seems easy: keep a ball centered on a track suspended by two cables. The lengths of the cables are varied by stepper motors, while the position of the ball is inferred from the difference in load on the two cables, measured with load cells scavenged from luggage scales. The motors raise and lower each side to even out the two forces, eventually achieving balance.
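Two load cells are enough to locate the ball because of a simple moment balance. The sketch below assumes an idealized setup that the article does not spell out: a rigid, (nearly) level track hung from its two ends by vertical cables, with the track's own weight split evenly between them. Under those assumptions the force difference is proportional to the ball's offset from the middle. The function name and parameters are hypothetical.

```python
def ball_offset_from_center(f_left, f_right, ball_weight, track_length):
    """Estimate how far the ball sits from the track's midpoint.

    Hypothetical idealization: the track is rigid and level, hung at its
    two ends by vertical cables, and its own weight splits evenly between
    them (so it cancels in the difference). Taking moments about one end:
        f_right - f_left = ball_weight * (2 * x - L) / L
    where x is the ball's distance from the left end. Solving for the
    offset x - L/2 gives the expression below. Any consistent force unit
    works, since it cancels.
    """
    return (f_right - f_left) * track_length / (2.0 * ball_weight)
```

For a 1 m track weighing 10 N and a 2 N ball sitting 0.75 m from the left end, the left and right cells would read 5.5 N and 6.5 N, and the function returns an offset of 0.25 m toward the right.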

The twist here is that rather than a simple PID loop or another classical control algorithm, [Astrid] chose to apply machine learning to the problem using the Q-Behave library. The system detects when the difference between the two weights is decreasing and “rewards” the algorithm so that it learns what is required of it. The result is a system that gently settles into equilibrium. Check out the video below; it’s strangely soothing.

We’ve seen self-balancing systems before, from ball-balancing Stewart platforms to Segway-like two-wheel balancers. One wonders if machine learning could be applied to these systems as well.
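The reward scheme described for the sculpture can be sketched with generic tabular Q-learning. This is not the Q-Behave library's API (its code isn't shown here); it only illustrates the same idea: reward the agent when the imbalance shrinks, penalize it when it grows. The toy environment is a loud assumption: the track is cut into 11 hypothetical position bins, each action rolls the ball one bin left or right or holds it still, and the ball has no momentum, which a real ball certainly does.

```python
import random

N_BINS = 11            # hypothetical: ball position discretized into bins 0..10
CENTER = N_BINS // 2   # bin 5 is the middle of the track
ACTIONS = (-1, 0, 1)   # roll one bin left, hold level, roll one bin right


def step(state, action):
    """Toy stand-in for the sculpture (no momentum): move the ball, score the move."""
    next_state = min(max(state + action, 0), N_BINS - 1)  # ball stops at the ends
    before, after = abs(state - CENTER), abs(next_state - CENTER)
    # Reward a *decrease* in imbalance, penalize an increase, else nothing.
    reward = 1 if after < before else (-1 if after > before else 0)
    return next_state, reward


def train(episodes=3000, steps=30, alpha=0.5, gamma=0.5, epsilon=0.3, seed=0):
    """Standard tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_BINS) for a in ACTIONS}
    for _ in range(episodes):
        state = rng.randrange(N_BINS)  # drop the ball somewhere random
        for _ in range(steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update toward reward + discounted best next value
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action for each position bin."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_BINS)}
```

After training, `greedy_policy(train())` should map every bin left of center to `1`, every bin right of center to `-1`, and the center bin to `0`: roll toward the middle, then hold. Because Q-learning is off-policy, the random exploratory moves don't prevent the greedy policy from converging in a small deterministic toy like this one.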

Great!

Doesn’t look like the machine actually finds that “stable equilibrium”?

> One wonders if machine learning could be applied to these systems as well.

Can’t those systems already be controlled optimally, without all the learning? What would be the point?

There will often be an “optimal” controller when you are modelling a perfect system, but as soon as you go into the real world it becomes much less clear. Control systems designed for “perfect sphere in a vacuum” physics can behave in unexpected ways when exposed to dust, air resistance, and a host of other nonidealities.

Robust control theory tries to work around this issue by designing an optimal controller for a set of systems. For example, a robust controller may be stable on a given system for a wide variety of friction and gravity values. The math for this is hard, and to an extent you still need to be able to predict in which ways the physical system will differ from the model.
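A full robust-control design is well beyond a comment-sized sketch, but its starting question, whether one fixed controller stays stable across a whole family of plants, is easy to illustrate. The model below is a hypothetical textbook linearization, not the sculpture: a solid ball rolling without slipping on a tilting beam, viscous friction, an actuator that responds instantly, and a PD controller commanding the tilt angle. It sweeps gravity and friction values and reports the worst closed-loop pole.

```python
import cmath
import itertools


def closed_loop_poles(kp, kd, g, friction):
    """Poles of a linearized ball-on-beam under PD control (hypothetical model).

    Solid ball rolling without slipping: x'' = (5/7) * g * theta - friction * x'
    PD controller sets the tilt:        theta = -(kp * x + kd * x')
    Closed loop: s^2 + (friction + a*kd) * s + a*kp = 0, with a = 5g/7.
    """
    a = 5.0 * g / 7.0
    b = friction + a * kd
    c = a * kp
    root = cmath.sqrt(b * b - 4.0 * c)  # complex sqrt handles oscillatory cases
    return (-b + root) / 2.0, (-b - root) / 2.0


def worst_case_real_part(kp, kd, gravities, frictions):
    """Largest pole real part over every (g, friction) pair; < 0 means all stable."""
    return max(
        pole.real
        for g, fr in itertools.product(gravities, frictions)
        for pole in closed_loop_poles(kp, kd, g, fr)
    )
```

For this idealized model any positive `kp` and `kd` pass the sweep, while `kd = 0` with zero friction sits right on the stability boundary. That is exactly the catch described above: the hard part is the behavior the model leaves out, such as actuator lag or backlash.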

Machine learning has the potential to achieve robustness in systems that are hard to model. Unfortunately, you can’t really prove an opaque neural net is stable or optimal; you just have to test it a bunch and hope you don’t discover any unpleasant edge cases in the field.

You could, however, feed it corner cases as test input and see how it fares. But of course, if you know your set of worrisome corner cases AND how it ought to respond, you probably have enough information to design that optimal control system after all.

That being said, your average ten-year-old can catch a ball or frisbee without needing a mathematical understanding of the aerodynamics involved. The appeal of giving robots a similar ability to develop experience-based heuristics is strong. Think about a robot that could maintain its effective accuracy by learning how best to compensate (given the task it has been set) for wear on gears, bearings, and whatnot.

It may not be practical to derive a general compensation mechanism for each type of wear across all sequences of movement and all load conditions. But if the robot learns that it can tighten the error distribution around the commanded position during one critical move, despite higher-than-spec backlash or a similar problem, you could get earlier warning of needed maintenance before quality slipped below a given threshold. You could also do less of the “just in case” maintenance usually specified for general-purpose machines that may be set to many different tasks with different wear profiles.

This was well illustrated in a class I took that had us design electromagnetic levitators. Nobody’s model worked, because there are all kinds of nonidealities that you can’t even measure. It took a lot of trial-and-error tuning to get it working.

Nice project. But … the first frame of the video already shows something better avoided – introducing nonlinearity by using the cable mount point to suspend the beam.

> The system detects when the difference between the two weights is decreasing …

That means the ball is rolling towards the center. It would be rewarding speed, not position.
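The distinction is easy to see with two hypothetical reward functions; the exact reward the sculpture uses isn't shown. Below, `decrease_reward` scores how much the load-cell imbalance shrank since the last sample (roughly the ball's speed toward the center), while `position_reward` scores the imbalance itself. The differences are in arbitrary units, such as grams.

```python
def decrease_reward(prev_diff, diff):
    """Hypothetical reward: how much the imbalance shrank this step (a rate)."""
    return abs(prev_diff) - abs(diff)


def position_reward(diff):
    """Hypothetical reward: penalize the imbalance itself (a position)."""
    return -abs(diff)


def rewards(diffs):
    """Per-step rewards under both schemes for a sequence of weight differences."""
    pairs = zip(diffs, diffs[1:])
    return ([decrease_reward(p, d) for p, d in pairs],
            [position_reward(d) for d in diffs[1:]])
```

A ball parked off-center is never penalized by `decrease_reward`: `rewards([40, 40, 40])` returns `[0, 0]` for it, against `[-40, -40]` from `position_reward`. A ball rolling straight through the middle, as in `rewards([40, 20, 0, -20, -40])`, is rewarded on the way in and penalized on the way out.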

Here’s a PID controller hard at work, trying to keep the error at zero (can someone suggest a better video?):

https://www.youtube.com/watch?v=Qqs0BF052EU
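For comparison, a textbook discrete PID loop fits in a few lines. This is a generic sketch, not code from the sculpture or the video: the gains, the time step, and the toy plant (whose output simply integrates the control signal) are arbitrary assumptions chosen only to show the loop driving the error to zero.

```python
class PID:
    """Textbook discrete PID controller (generic sketch, untuned)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None  # skip the derivative on the first call to avoid a "kick"

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid, setpoint, steps):
    """Hypothetical toy plant: its output integrates the control signal."""
    x = 0.0
    for _ in range(steps):
        x += pid.update(setpoint, x) * pid.dt
    return x
```

With `PID(2.0, 0.5, 0.05, 0.01)` and a setpoint of `1.0`, the simulated output settles at the setpoint within a few thousand steps. A real ball-on-beam needs gains tuned to its own dynamics.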

It’s dw/dt, isn’t it? I don’t see dx/dt anywhere… I’m guessing the person simply uses a moment balance, $M_L = m g l_1$ and $M_R = m g l_2$ with $M_L - M_R = 0$ at balance, and uses this as a way to always know the exact position of the ball. Conceivably they could be using the ratio $l_1/l_2$ as well, but then that would be unitless and just be a d/dt. If they only paid attention to one of the lengths, then yes, they’d be rewarding speed. Hard to know without seeing the program, I guess.

A PID would have been way more than enough :(