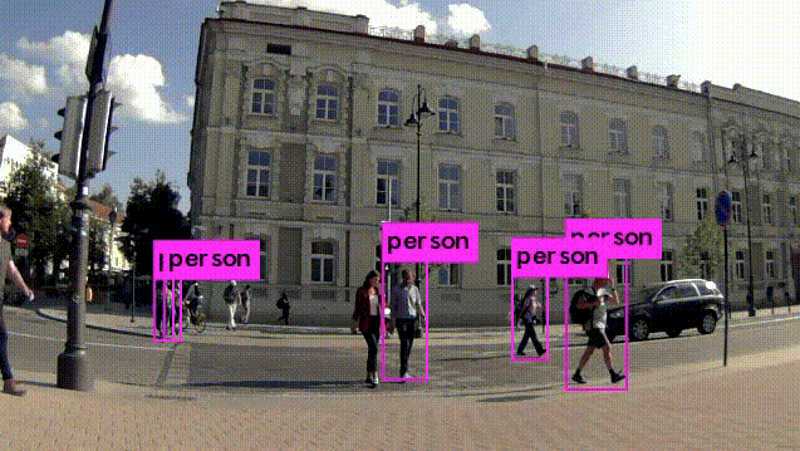

Most people are familiar with the idea that machine learning can be used to detect things like objects or people, but anyone who’s not clear on how that process actually works should check out [Kurokesu]’s example project for detecting pedestrians. It goes into detail on exactly what software is used, how it is configured, and how to train it with a dataset.

The application uses a USB camera and the back end work is done with Darknet, which is an open source framework for neural networks. Running on that framework is the YOLO (You Only Look Once) real-time object detection system. To get useful results, the system must be trained on large amounts of sample data. [Kurokesu] explains that while pre-trained networks can be used, it is still necessary to fine-tune the system by adding a dataset which more closely models the intended application. Training is itself a bit of a balancing act. A system that has been overly trained on a model dataset (or trained on too small of a dataset) will suffer from overfitting, a condition in which the system ends up being too picky and unable to usefully generalize. In terms of pedestrian detection, this results in false negatives — pedestrians that don’t get flagged because the system has too strict of an idea about what a pedestrian should look like.
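To make the idea of a false negative concrete, here is a minimal sketch of how missed pedestrians could be counted against hand-labelled boxes. It is not taken from [Kurokesu]’s project: the function names, the `(x_min, y_min, x_max, y_max)` box format, and the 0.5 overlap threshold are all assumptions made for illustration, and the matching is deliberately simple (it does not enforce one detection per labelled box).

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_false_negatives(ground_truth: List[Box],
                          detections: List[Box],
                          threshold: float = 0.5) -> int:
    """Count labelled boxes that no detection overlaps well enough."""
    missed = 0
    for gt in ground_truth:
        if not any(iou(gt, det) >= threshold for det in detections):
            missed += 1
    return missed


# Hypothetical frame: two labelled pedestrians, one detection.
labelled = [(0, 0, 10, 20), (50, 50, 60, 70)]
detected = [(1, 1, 10, 20)]
missed_count = count_false_negatives(labelled, detected)
print(f"missed pedestrians: {missed_count}")
```

Tracking this count on images that were held out of training is one way to notice overfitting: the count stays low on the training images but rises on the unseen ones.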

[Kurokesu]’s walkthrough on pedestrian detection is great, but for those interested in taking a step further back and rolling their own projects, this fork of Darknet contains YOLO for Linux and Windows and includes practical notes and guides on installing, using, and training from a more general perspective. Interested in learning more about machine learning basics? Don’t forget Google has a free online crash course to get you up to speed.

If you don’t own a high-end GPU and don’t want to spend hours of severe brain pain installing Yolo and its thousands of dependencies, as of December 2018 person tracking and more can now be done on Raspberry Pi + Intel Neural Compute Stick 2 : https://software.intel.com/en-us/articles/OpenVINO-Install-RaspberryPI . The instructions are very good and relatively easy to follow compared to Yolo etc. There’s a choice of 20 models to use including people, dogs, cats and even sheep! There’s also a very impressive facial recognition demo and number plate recognition ….. Check it out!

I also worked out how to make my own custom models for the Pi although it was a lot more tricky than just running the demos : https://hackaday.io/project/161581-wasp-and-asian-hornet-sentry-gun/log/158199-build-and-deploy-a-custom-mobile-ssd-model-for-raspberry-pi

Reference Yolo is implemented in Darknet and has basically no unreasonable dependencies because it’s plain C. The downside is that the advertised stellar performance is unattainable on any reasonable hardware. But you can build it easily on any system. It’s the other implementations that probably want you to install 40 various wtfflows and bbqtorches that are never the right version, never compile and your GPU is always too old for them anyway.

About RPI + Intel Neural Compute Stick 2 — could you give some ballpark FPS figures e.g. for people, or face detection demo? Thanks!

OK ….. Give me a few minutes to do it ….

Ok, here’s a video showing face detection and frame rates and also my own trained model for wasps:

https://www.youtube.com/watch?v=vXPTW2Zb2Ho

Thank you! That was very informative. I’m a bit confused about the FPS figures, the face detect demo shows around 23fps face detection rate, but the screen is updating at about 1fps. Is this because of other subsystems’ overhead? It seems you’re running it in a window on Pi so updating that screen alone probably takes 100% of its resources.

Yes it’s a bit of a mystery at the moment. I would need to disable rendering to the screen and look at the statistics in console.
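One way to separate the two numbers, as a rough sketch: time the inference step and the rendering step independently and print the result to the console. Nothing here comes from the demo in the video; `StageTimer`, `fake_inference` and `fake_render` are hypothetical stand-ins, and the sleep durations are made up purely so the example runs on its own.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time per named pipeline stage."""

    def __init__(self):
        self.totals = defaultdict(float)
        self.counts = defaultdict(int)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals[name] += time.perf_counter() - start
            self.counts[name] += 1

    def fps(self, name):
        """Frames per second this stage could sustain if it ran alone."""
        total = self.totals[name]
        return self.counts[name] / total if total > 0 else float("inf")


def fake_inference():
    time.sleep(0.002)  # placeholder for the real inference call


def fake_render():
    time.sleep(0.02)  # placeholder for drawing the frame in a window


timer = StageTimer()
for _ in range(5):
    with timer.stage("inference"):
        fake_inference()
    with timer.stage("render"):
        fake_render()

for name in ("inference", "render"):
    print(f"{name}: {timer.fps(name):.1f} fps if run alone")
```

If the real pipeline shows the same pattern, with a high inference rate and a low render rate, the on-screen update rate is being limited by drawing the window rather than by the detection itself.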

Sweet!

So here’s a cool github (that I’ve followed and it does work) for doing object detection and basic distance to the object using stereo vision disparity with the Intel RealSense D435:

https://github.com/PINTO0309/MobileNet-SSD-RealSense

It uses the Raspberry Pi + NCS1 or NCS2.
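For anyone wondering how distance falls out of stereo disparity, the usual pinhole relation is depth = focal length × baseline / disparity. The sketch below is only that formula, not code from the linked repository. The function name is hypothetical and the numbers are illustrative values, not the D435’s actual specifications.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth in metres for a rectified stereo pair.

    disparity_px:    horizontal pixel shift of a point between the two views
    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two cameras in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to give a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Illustrative (assumed) values, not real camera calibration data.
depth = depth_from_disparity(disparity_px=16.0,
                             focal_length_px=640.0,
                             baseline_m=0.05)
print(f"estimated depth: {depth:.2f} m")
```

Because depth is inversely proportional to disparity, far objects shift by only a pixel or two, so distance estimates get noticeably coarser as range increases.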

I’m working on a PCB that integrates the Movidius with an ARM processor, so that a single board can do real-time computer vision machine learning inference, including stereo vision.

Anyone interested in such a board?

Best,

Brandon

Great project. I’m likewise building a mobile detector for another invasive species – Burmese pythons. Do you see a huge benefit with the Intel Neural Compute Stick 2 vs. the Movidius 1 version that ships with the Google AIY Vision Kit?

I have not done an in-depth comparison. Some models do seem to be faster; for others it makes no difference. The thing to do next is to try multiple sticks and see how the model performs that way.

Actually, installing YOLO (YOLOv3 on Ubuntu 16.04, to be exact) only takes 3 or 4 steps: 1) cloning the repo, 2) editing (optional) and running the makefile, 3) downloading the weight files. If you have a GPU (an Nvidia 1050 Ti works OK), there is one extra step: installing CUDA (which has GPU drivers included). Not sure what thousands of dependencies you mean here.

Such a great comment thread, thanks!

https://xkcd.com/1015/

Sucks to be the other 5 people in this image who aren’t recognized!

At least nobody would ever think of doing safety-critical things with this stuff like driving cars. That would be really awful…

Yes, particularly the woman centre left in the image – a decent model should easily be able to infer her position.

How do I break a captcha? I don’t have a big computer.

https://github.com/aero2a/kape-

Just watched a story on 60 Minutes last night about how China has advanced this light-years ahead – https://www.cbsnews.com/video/60-minutes-ai-facial-and-emotional-recognition-how-one-man-is-advancing-artificial-intelligence-tried-to-block-60-minutes/

Excellent work on prototype targeting software