Thus far, the vast majority of human photographic output has been two-dimensional. 3D displays have come and gone in various forms over the years, but as technology progresses, we're beginning to see more and more immersive display technologies. Of course, using these displays requires content, and capturing that content in three dimensions requires special tools and techniques. Kim Pimmel came to the Hackaday Superconference to give us a talk on the current state of the art in AR and VR camera technologies.

Through his work on AR and VR displays, Kim became familiar with pairing the Ricoh Theta S 360-degree camera with the Oculus Rift headset. This let users essentially sit inside a photo sphere and see the image all around them. While this was compelling, Kim noted that a lot of 360-degree content has problems with framing: there's no way to guide the observer towards the part of the image you want them to see.

Moving the Camera to Match What Happens in the Virtual Environment

It was this idea that led Kim to his own build. Inspired by the bullet-time effects that John Gaeta and his team achieved in The Matrix (1999), he set out to create a moving-camera rig that would produce three-dimensional imagery. At Home Depot, he sourced some curved shower rails to serve as his motion platform. Kim faced a series of mechanical challenges along the way, from securely mounting the curved components to reducing shake in the motion platform. He also took some unconventional steps, like designing 3D-printed components in Cinema4D. With hard work and perseverance, the rig came together, using a GoPro Hero 4 triggered by an Arduino for image capture. The build was a success, but it still had a few issues, with the camera tending to dip during motion.

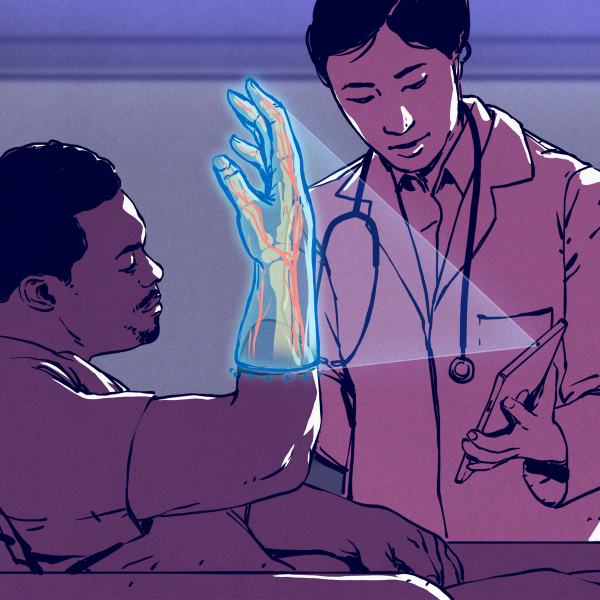

The promising results only whetted Kim's appetite for further experimentation. He started again with a clean-sheet build, this time selecting a Fujifilm camera capable of taking native 3D photos. A stepper motor replaced the simple brushed motor, improving the smoothness of the motion. Usability tweaks included a lock on the carriage for when it's not in use and a simple UI on an LCD screen. Packaged in a wooden frame, the rig let Kim shoot stereoscopic video on the move and could be conveniently mounted on a tripod. The results are impressive, with the captured video looking great when viewed in AR.

Smarter Motion

With smooth camera motion sorted, Kim is now experimenting with his Creative CNC build, designed to move a camera in two dimensions to capture more three-dimensional data. This leads into a discussion of commercial options for immersive image capture. Adobe, Samsung, Facebook, and RED all have products available, most of them geometric blobs studded with innumerable lenses. Kim also discusses light field cameras, including the work of the now-defunct company Lytro, as well as other problems in immersive experiences, such as locomotion and haptic feedback. If you want to geek out more on light field cameras, check out Alex Hornstein's talk from the previous Supercon.

While fully immersive AR and VR experiences are still some way off, Kim does a great job of explaining the developments in progress that will make them a reality. It's an exciting time, and it's clear there's plenty of awesome tech coming down the pipeline. We can't wait to see what's next!

“There’s no way to guide the observer towards the part of the image you want them to see.”

That seems like declaring Minecraft is Tetris, then getting mad because nobody stacks the blocks right.

It’s also wrong. Creating natural focus points in a space is a problem that’s been studied by architects for millennia. Galleries, theatres, and landscaped parks are designed to communicate “here’s where the interesting thing is” with just the shape and arrangement of the space.

Right, even Stonehenge says “The important stuff happens in the middle”

Eh, just do it like 90 percent of cell phone users.

Hold it sideways and wave it back and forth like you’re having a seizure.

Eventually you’ll accidentally sweep across the object for a moment.

The problem of directing the audience's attention was solved thousands of years ago in live plays, which were usually staged in outdoor amphitheaters. The solution was to use lighting and/or sound. Even before electronic sound, actors and singers were trained to speak louder according to the importance of the narrative, or to wherever the director wanted the audience's attention, and live musicians used their instruments to highlight relevant topics, emotions, and moments of importance. These techniques were refined over the years and applied to plays with real actors and concerts by real performers, eventually in enclosed indoor theaters, and later to recorded, projected, or otherwise electronically displayed images. Spotlights were aimed at the "star" actors and actions, and with the advent of amplified sound, microphones and loudspeakers took on the same role. These methods work just as well for modern recorded or live virtual reality and other simulated reality systems, including those whose field of view exceeds that of human eyesight, up to a full 360 degrees, although there will be less distraction at about half that. Without other cues, most people will instinctively look toward the center of a 180-degree image unless or until an "offstage" sound directs them to look elsewhere. Skillful use of stereoscopic imaging can also direct audience attention.

3D cinema didn't work well for many reasons.

But one of them was that the sides of the screen were visible.

I didn't try IMAX in 3D, but I did have the unfortunate experience of an old cinema in a repurposed theatre where the walls were painted white, which was quite a distraction.