At its annual Worldwide Developers Conference, Apple dropped many jaws by announcing that the Mac line will be switching away from Intel processors, with the first machines arriving before the year is out. Intel’s x86 architecture is the third to power the Mac, succeeding PowerPC and the Motorola 68000 family before it.

In its place will be Apple’s own custom silicon, based on the 64-bit ARM architecture. Apple are by no means the first to try to bring ARM chips to bear on general-purpose computing, but can they succeed where others have failed?

ARM – A Long Road To The Top

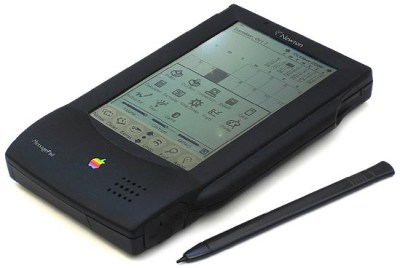

The ARM processor was created by Acorn Computers in the distant past of 1983, with the name originally standing for Acorn RISC Machine. Using Reduced Instruction Set Computing (RISC) techniques, the resulting chips used fewer transistors than classical CISC designs, and consumed less power as a result. Originally developed for Acorn’s own computer line, ARM chips later found a home in Apple’s Newton PDA, announced as far back as 1992. However, as Acorn’s computer business faltered, the technology faded from mainstream view.

Despite this, the underlying technology was sound. After spending most of the next two decades out of the limelight, the ARM architecture hit its stride when smartphones arrived on the scene. These devices needed plenty of processing power along with long battery life, and ARM was just the tool for the job. Fast forward to today, and ARM chips power 95% of the world’s smartphones.

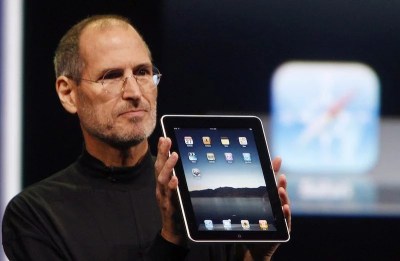

When Apple’s iPhone revolutionized the way we all thought about phones, it was packing a 32-bit ARM processor sourced from Samsung. As Apple continued to release new mobile hardware, it acquired companies and talent to expand its silicon design capabilities. In 2010, Apple took a major step forward with the A4. The first System-on-Chip designed in-house by Apple, it was built around an ARM Cortex-A8 core, manufactured by Samsung, and powered the iPad and iPhone 4. This was just the beginning, with Apple building on this success with each following generation of tablets and smartphones.

After years of being beholden to outside companies for its CPUs, Apple was finally in charge of its own destiny, on mobile platforms at least. Its desktop and laptop computers had benefited from the switch to Intel’s x86 chips in 2006. However, working with outside partners necessarily has drawbacks, and with over a decade of experience designing its own chips, Apple no longer considered the arrangement worthwhile. The announcement makes it clear that the official transition will take place over a two-year period, with Intel-based machines being supported for some time afterwards. But the writing is now on the wall at Apple: x86 is dead, long live ARM.

The Switch

Changing processor architecture is a major decision that can affect the entire viability of a platform. In bringing ARM to the desktop, Apple will be looking to succeed where others have failed. However, if past performance is any predictor of future results, they’re well placed to pull off the switch.

Historically, ARM has struggled to gain a foothold in personal computers: laptops, desktops and the like. A simplistic look might suggest this bodes poorly, but digging deeper, it’s clear that’s not the case. Acorn’s early failure had more to do with a minnow attempting to launch a new platform in the shadow of IBM’s dominance than with the chip itself. In modern times it’s the operating systems and software that have impeded ARM’s progress, but that’s beginning to change.

More recently, Microsoft has launched Windows on lightweight notebooks powered by ARM chips. In doing so, they have tried to create a second Windows ecosystem compiled for ARM instead of x86. The first of these versions, Windows RT, couldn’t run apps compiled for x86 at all, and Windows 10 on ARM can only emulate 32-bit x86 apps, so developers have to rebuild their software for the ARM architecture to get the most out of it. Because of the small install base, very few developers have bothered to build apps for the platform. At the same time, due to the lack of apps, it’s very difficult to increase the install base. The chicken and the egg.

Apple shouldn’t face the same problem, for the simple reason that they’re converting their entire ecosystem over just two short years. Developers won’t be stuck maintaining separate x86 and ARM versions of every app for the foreseeable future, hoping that the work done to create ARM versions pays off. Instead, they have the choice to switch over to ARM and go forward with a smile on their faces, or be locked out of Apple’s future desktop offerings. Casual users will barely notice, simply downloading the latest version of whatever software they already use, with Apple’s Rosetta 2 translation layer filling in legacy gaps here and there.

The hardest hit by this announcement will be developers of Mac software. Existing x86 software for macOS will need to be modified to run on ARM, or else make do with Apple’s emulation tools in the meantime. To ease this process, Apple are providing access to development hardware ahead of time for interested parties, similar to the path they took with the previous switch to x86. Long having held an iron grip on their platform, Apple have spent the last two decades investing heavily in development tools and their own programming language. This has allowed them to lay the groundwork to make the switch as painless as possible. While it’s unlikely the transition will be as simple as clicking a checkbox and hitting the compile button, the necessary tools are already in place.

Perhaps the most interesting part of the switch is that the Mac line will now run the same architecture as the iPad and iPhone. This means that iPhone and iPad apps will be able to run on the Mac, opening up new possibilities for developers. Formerly mobile-only apps will run natively on Mac, without requiring recompilation. Developers will no doubt still tweak interfaces and make other changes to suit the desktop environment. However, the broader problem of creating separate applications for the desktop and mobile realms will largely be a thing of the past in Apple’s world.

Conclusion

While such a major change can seem fraught, by all appearances, Apple couldn’t be better placed to make the switch. With a huge installed base already running Apple silicon, and with ARM computers mere months away, we suspect the transition should be fairly straightforward. Power users and those with complex edge cases will feel some friction, but for the vast majority of Mac users, the journey into the land of ARM will likely be smooth sailing.

“Long having held an iron grip on their platform, Apple have spent the last two decades investing heavily in development tools and their own programming language. This has allowed them to lay the groundwork to make the switch as painless as possible.”

Something to think about the next time someone brings up “walled gardens” as an argument. Just think of how much further along general software would be with that kind of flexibility to change.

That argument is orthogonal. The Mac has never been a walled garden: you can download and run any software you wish on it, and you always have been able to.

iPhone anyone?

No thanks

The iPhone is not a Mac.

More like a wallet vacuum cleaner: they’re not interested in taking just a big portion of your money, they’re interested in taking everything you have.

I can’t name a single company that takes extremely cheap hardware, slaps a logo on it and sells it for 10 to 100 times the original retail price.

Oh, wait… There are a massive number of companies that sell crappy, inefficient “open-source hardware” ARM-based products at 10 times their original cost…

Anyway, I think neither of them deserves money.

There’s a price for every customer, and a customer for every price. The trick is to sell the same product at all the different prices. Some sell with low margins, some with high margins, and the only thing that differentiates between them is the marketing and brand image.

>you can download any software for it that you wish to run

https://www.reddit.com/r/linux/comments/hfkdlg/craig_federighi_confirms_apple_silicon_macs_will/

>In an interview with John Gruber of Daring Fireball, we get confirmation that new Macs with ARM-based Apple Silicon coming later this year, will not be able to boot into an ARM Linux distro.

It might be as simple as just hitting compile. Very few apps use assembly or touch hardware directly. Before anyone chirps up with a counter-example, notice I said very few; I do work in the embedded world writing device drivers, so I am one of the people who do have to worry about hardware and sometimes even assembly. But we are talking about applications. Microsoft’s CLR kind of makes the move to ARM really simple. You could also use a translate-on-install system like IBM did with the System/38 and AS/400, where the compiler creates a binary for an “ideal” ISA, and when you install it, that binary is translated to the native ISA. It would be an install-time compiler rather than a just-in-time compiler like .NET and Java use. Microsoft and Apple could both create fat binaries that contain both ISAs.

I have long thought that such a system would work well for Linux and I have heard that someone is working on such a system.
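To make the install-time idea concrete, here is a toy sketch in Python, not IBM’s actual mechanism. A hypothetical “ideal ISA” (a tiny stack machine invented for this example) is either interpreted instruction by instruction on every run, or translated once, up front, into code the host runs directly. Python source compiled by the interpreter stands in for native machine code; a real system would write the translated binary to disk at install time.

```python
# Hypothetical "ideal ISA": each instruction is an (opcode, operand) tuple
# for a tiny stack machine. The format is invented for illustration only.
PROGRAM = [("push", 6), ("push", 7), ("mul", None), ("push", 2), ("add", None)]


def interpret(program):
    """Decode and execute every instruction on every run (no translation)."""
    stack = []
    for op, arg in program:
        if op == "push":
            stack.append(arg)
        elif op in ("add", "mul"):
            b, a = stack.pop(), stack.pop()  # top of stack is the right operand
            stack.append(a + b if op == "add" else a * b)
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return stack.pop()


def translate_on_install(program):
    """Translate the whole program once into host code and return a callable.

    Generated Python source stands in for native machine code here.
    """
    body = ["def run():", "    s = []"]
    for op, arg in program:
        if op == "push":
            body.append(f"    s.append({arg!r})")
        elif op == "add":
            body.append("    b = s.pop(); a = s.pop(); s.append(a + b)")
        elif op == "mul":
            body.append("    b = s.pop(); a = s.pop(); s.append(a * b)")
        else:
            raise ValueError(f"unknown opcode {op!r}")
    body.append("    return s.pop()")
    namespace = {}
    exec(compile("\n".join(body), "<translated>", "exec"), namespace)
    return namespace["run"]


run = translate_on_install(PROGRAM)  # translate once ("install time")
print(interpret(PROGRAM), run())     # prints: 44 44
```

The difference from a JIT is only *when* the translation happens: here it is done eagerly for the whole program before it ever runs, rather than lazily while it runs.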

But many applications use third-party libraries. Having to find or compile an ARM version of them all can be a major hassle.

It’s the Python 3 problem. Libraries depending on libraries depending on libraries, and your application can’t make the switch until all the changes work their way up.
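The blocking effect is easy to see with a small graph walk. This is a hypothetical sketch: the package names and the set of libraries that already have arm64 builds are made up, and a real tool would read both from a package manager. It reports every package in an application’s dependency tree that still has no ARM build, however deep it sits.

```python
# Hypothetical dependency graph: package -> packages it depends on directly.
DEPENDS_ON = {
    "my_app": ["imaging_lib", "net_lib"],
    "imaging_lib": ["codec_lib"],
    "net_lib": [],
    "codec_lib": ["legacy_simd_lib"],
    "legacy_simd_lib": [],
}
# Hypothetical set of packages that already ship an arm64 build.
HAS_ARM64_BUILD = {"my_app", "imaging_lib", "net_lib", "codec_lib"}


def blockers(package, graph=DEPENDS_ON, ready=HAS_ARM64_BUILD):
    """Return every package in `package`'s dependency tree (itself included)
    that still lacks an arm64 build."""
    seen, missing = set(), set()
    stack = [package]
    while stack:
        pkg = stack.pop()
        if pkg in seen:
            continue  # already visited; also stops infinite loops on cycles
        seen.add(pkg)
        if pkg not in ready:
            missing.add(pkg)
        stack.extend(graph.get(pkg, []))
    return missing


print(sorted(blockers("my_app")))  # ['legacy_simd_lib']
```

Even though `my_app` and its direct dependencies are ready, one library three levels down keeps the whole application on x86.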

You are wrong about python… It is more “Libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries depending on libraries “

The fun comes when you rebuild library A_2.0 which needs library B_1.9.

Then you find library B_1.9 depends on library A_1.8 and you wonder how deep the rabbit hole is…

Node.js would probably just accept that sort of nonsense…

Not if those libraries are also fat binaries or are translate on install. If you already have them installed on the system, you already have your ISA; if you need to get them, they will be translate-on-install or fat binaries. So it is possible.

“Not if those libraries are also fat binaries or are translate on install.”

I think that’s what the original post was implying: applications that use 3rd party libraries need to actually *have* ARM versions of those libraries in order to work, and if those libraries don’t have ARM-compiled versions yet (in whatever form) or don’t have source available (or don’t compile under ARM for whatever reason), the application’s stuck, even if their portion of the code just compiles fine.

It also probably means that the application will likely need to push forward to the newest version of the library, as it’s unlikely the library maintainers will compile older versions to provide as well, and *that* could cause a delay.

You’re not wrong that it’s likely just basically “hit compile” and it’ll work, but even that could still take some time.

I can’t believe “fat binaries” are an acceptable thing now. Compiling code to run on every possible CPU and dumping all of it into a bloated file that is maybe 3x or 4x as large as it needs to be just seems an obviously bad way to distribute software.

Why is this a problem again? Program code takes a few megabytes at most.

Because storage space is so constrained today. I would say that the idea of a fat binary is fine, and you could even do a “diet” on install, where you remove the unneeded code when you first run it, or uncompress the target code on first run. The reality is that the code in most programs is small; the data and images are what take up a lot of space. People love their pretty pictures, and that would all be common across ISAs. I am actually thinking more of a fat binary install, where you get both versions of the code and the installer decides which gets installed.
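A back-of-the-envelope calculation shows why duplicated code matters less than it sounds when assets dominate. The sizes below are hypothetical, and the sketch assumes only the machine code is duplicated per architecture while all data is shared.

```python
def fat_overhead(code_mb_per_arch, shared_data_mb, n_arches):
    """Fractional size increase of a fat bundle over a single-architecture build.

    Assumes only machine code is duplicated per architecture; images, sounds
    and other data are stored once and shared by every slice.
    """
    thin = code_mb_per_arch + shared_data_mb
    fat = code_mb_per_arch * n_arches + shared_data_mb
    return fat / thin - 1


# Hypothetical app: 10 MB of code per architecture, 490 MB of shared assets.
print(f"{fat_overhead(10, 490, 2):.1%}")  # 2.0%
# Pure code with no assets: four architectures really is 4x the size.
print(f"{fat_overhead(5, 0, 4):.1%}")     # 300.0%
```

The “3x or 4x” worry only holds for programs that are almost entirely code.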

LWATCDR: This is the correct answer. This, or a slight variation that can be left to the developers. They can provide either fat binaries and installers that discard the useless version(s), or they can provide separate binaries for each architecture and web installers that download the correct binaries.
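For the curious, a Mach-O fat binary is essentially a small big-endian header listing one slice per architecture, so a “diet” installer only needs to find the right slice and copy it out, roughly what `lipo -thin` does. The sketch below assumes the classic 32-bit fat header (`FAT_MAGIC`, 0xCAFEBABE) and ignores the 64-bit variant; `build_fat` and `extract_slice` are hypothetical helpers, and the demo packs slices back to back instead of page-aligning them as a real linker would.

```python
import struct

FAT_MAGIC = 0xCAFEBABE          # from <mach-o/fat.h>; fat headers are big-endian
CPU_TYPE_X86_64 = 0x01000007    # CPU_TYPE_X86 | CPU_ARCH_ABI64
CPU_TYPE_ARM64 = 0x0100000C     # CPU_TYPE_ARM | CPU_ARCH_ABI64
FAT_ARCH = struct.Struct(">iiIII")  # cputype, cpusubtype, offset, size, align


def build_fat(slices):
    """Pack {cputype: code_bytes} into a minimal fat container (demo only).

    Subtypes are left at 0 and align at 0 (2**0 bytes) because this sketch
    does not pad slices to page boundaries.
    """
    offset = 8 + FAT_ARCH.size * len(slices)  # slices start after the headers
    entries, payload = [], b""
    for cputype, code in slices.items():
        entries.append(FAT_ARCH.pack(cputype, 0, offset, len(code), 0))
        payload += code
        offset += len(code)
    return struct.pack(">II", FAT_MAGIC, len(slices)) + b"".join(entries) + payload


def extract_slice(blob, want_cputype):
    """Return only the bytes of the slice for `want_cputype`."""
    magic, count = struct.unpack_from(">II", blob, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a 32-bit fat binary")
    for i in range(count):
        cputype, _sub, offset, size, _align = FAT_ARCH.unpack_from(
            blob, 8 + i * FAT_ARCH.size)
        if cputype == want_cputype:
            return blob[offset:offset + size]
    raise LookupError(f"no slice for cputype {want_cputype:#x}")


# One NOP instruction's worth of "code" per architecture.
fat = build_fat({CPU_TYPE_X86_64: b"\x90" * 4, CPU_TYPE_ARM64: b"\x1f\x20\x03\xd5"})
print(extract_slice(fat, CPU_TYPE_ARM64).hex())  # 1f2003d5
```

An installer would write the extracted slice out as the thin executable and discard the rest.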

We’ve been dealing with multiple architectures on desktop platforms ever since 64-bit architectures became available. And while it hasn’t been smooth sailing all along, I think the ecosystem has adapted to this.

There’s a silver lining here: having to build and test on multiple platforms tends to flush out bugs that can stay undercover for a long time in a fixed environment. Don’t ask me how I know. I remember a time when Linus Torvalds said he doubted that Linux would ever be ported to anything other than i386, due to its architecture-specific low-level functions and optimizations.

Very true. I’ve been running an Ubuntu fileserver on an ARM ThunderX for the last four years. Everything works exactly like AMD64. The last two things I installed (Blender and Handbrake) compiled right out of the box. I was particularly surprised by x264 with all of its hand-coded assembly, but the author is very sharp in how he handles multiple platforms.

Is that a system based on the ARM server spec AMD put forward a number of years ago?

If so, that may be why it was pretty easy. That’s the thing that almost never comes into the conversation when people talk ISAs. A computer is much more than the processor or even the SoC at its heart. It’s a collection of other subsystems, software, and specifications that allow them all to work and allow varying amounts of things to be abstracted away.

For Apple, they only need to abstract at the user space level. As long as their OS interfaces remain consistent, then there isn’t too much work for app developers to do (their work on LLVM, and their own languages help with this a lot too).

But to have OS freedom, you need abstracted platform interfaces that allow for some common OS IPL discovery and load, along with a way to deliver information about the hardware to the OS. The problem gets more complex when you need to support somewhat arbitrary add-in hardware, and gets even worse if the platform firmware or OS IPL needs to use these peripherals.

Sometimes for a popular platform these things can be reverse engineered (provided there is either a lack of security measures or that they can be broken).

A big hit may be on open source developers, who often rely on Hackintosh or similar for testing Mac ports of their applications. It seems likely that Hackintosh on x86 hardware will be quite a bit more difficult once Mac OS X transitions to a custom ARM system-on-chip: just emulating the ARM CPU probably won’t be enough. At the same time, old Mac hardware will become useless for testing too.

I think the number of open source developers who actually use a real Mac exceeds those using a hackintosh by a minimum of three orders of magnitude.

If that is true, I wonder why? Using a hackintosh is as easy as downloading a virtualbox image, using a real mac is $500+ for a model recent enough to run current versions of Mac OS X. For me the decision was pretty easy, legal/moral considerations aside.

Because when OS X first came out, Linux on laptops was still fairly finicky: it was pretty common to have trouble with one or more of the on-board devices and things wouldn’t quite work well. So Macs were pretty much the only way to get an easy portable *nix environment where everything worked.

The struggles the Linux heads at work have with simple things like docking and connecting a mouse suggests this may still be the case.

You can’t sign apps with a Hackintosh, so you can only test your apps in the simulator. Can’t run and test those apps on a device.

You can absolutely sign apps with a Hackintosh. I have submitted two apps to the iOS App Store with my Hackintosh, and I have signed a couple of Mac apps too. Testing on device from my hackintosh works 100% no problem.

Not really. Macs are basically a dodo in the open source world.

Certainly not true in anything scientific and open source.

Not entirely…

https://opensource.apple.com/

Agree, and if you’re a real serious developer, just get a Mac.

Ah, so that’s why many software developers complain about wages…

*rofl* I’m sure that a computer without a docking port and with a shitty keyboard is exactly what I need as a real serious developer.

Maybe a real serious HTML developer…

Maybe you haven’t shopped around for a laptop lately? Because all serious laptops of the same form factor as the Macbooks these days only have a few USB-C ports and need a docking port.

I am not talking about the cheap, heavy, 1″ thick plastic laptops, but about laptops with the same form factor, weight and power efficiency as Macbooks.

I’ll give you that the keyboard of current Macbooks is shitty. Maybe better than the previous generation, but still shitty. But then again, any serious developer uses an external keyboard, so it’s not that much of a problem.

Get yourself a mac mini for a build / CI server. Easy.

For my daily runner, I have a 2 x CPU, 40-thread tower server (not much bigger than a PC) with 384 (not a typo: three hundred and eighty-four) GB of RAM, 1TB of NVMe and 6TB of spinny storage (it is silent BTW), and it houses a 1080 Ti graphics card, an AMD RX 580 and an ASUS STX II sound card, which is just fantastic. It runs Proxmox with PCIe passthrough. It is better than a Mac in every way (and can run Hackintosh as a VM) except portability, but that is not an issue because I can SSH into it. It can run x86 and ARM virtual machines too. It cost me about $1200.

BTW I do own an old 2009 iMac too. For a while, as an experiment, I customised Ubuntu to work and look exactly like Mac OS (because you can do that with Linux) and showed it to a Mac user (and long-time Linux user too), and they didn’t realise it wasn’t Mac OS until I clicked on About. It runs Fedora MATE now with a PC-layout keyboard, I get all the security updates and all the latest software on it, and it is perfectly performant (it is used for development rather than as a games machine, but it happily runs Portal 2 at 2560×1440, which it struggled to do on Mac OS).

Mac lovers are all about the logo and the perceived cachet of having an over priced laptop, because they think it makes it look like their company values them more and it is a badge to show off to others. At the end of the day, most servers are Linux so they are most likely working with Linux boxes anyway.

The only “advantage” to having a Mac as a developer is that when you pair program, you are familiar with your indoctrinated colleague’s OS and esoteric keyboard shortcuts, which seem to have been designed only to be different, and therefore difficult to switch away from.

If you are a “real serious developer” and happy with a Fisher Price computer, then your luck is in, because I don’t see developers moving away from Macs any time soon.

I use a virtual machine to run OSX. You need to count VMs as “hackintosh” as well, and yeah, there’s a lot of us out there honestly. It’s really simple to install OSX on Virtualbox or VMware, and a hell of a lot cheaper to run it on a decent windows machine to test software.

But that goes away now. Now I won’t really care about testing in Safari. If it works, it works; if it doesn’t, well, don’t buy Apple I guess. What would likely happen, though, is that a high-profile customer will complain about a bug, and only then will the company I work for put down $2000 for an even more overpriced Apple ARM computer.

This is yet another cash grab from Apple. Even if their ARM CPUs don’t really compete with AMD or Intel, they will still market them as “supercomputers” anyway, because most people that buy Apple gear don’t really know what a “supercomputer” is (and yes, that’s an actual marketing campaign Apple once used, which they will probably recycle).

If this is Apple’s big attempt at innovating post-Jobs, it’s just more of the same platform hopping we’ve seen from Apple in the past. They know in 2 years they will have people being forced to upgrade because OS X will stop being supported on x86 hardware, which they are banking on being a huge windfall. If the public gives them that, then just wait another 10 years and they will no doubt platform-hop again to whatever new, shiny CPU/platform comes up, if not for performance then for greed.

I won’t be spending a dime on their hardware. I simply don’t care enough about their 10% market share to bother paying their ridiculous hardware prices just so I can test software on their junk Safari browser.

Nick: I think your estimate is off by a couple orders of magnitude, probably biased by your own reasons for avoiding Apple hardware. When I started developing for multiple platforms (Win/Mac/Linux), I used a hackintosh, but that was a decade ago. By the time I needed to upgrade hardware, it just made more sense to switch to Apple. A PC will never be a 100% compatible Mac, but a Mac IS a 100% compatible Windows machine. I don’t think I’m in the majority, but I’m certainly not in the 0.1% here.

Because Hackintosh was a total unicorn, once in eternity, black swan…. said nobody who ever used Basilisk, ShapeShifter, SheepShaver, PearPC etc for running 68K and PowerPC Mac versions.

Damn, if only there were some ARM based platforms around, like maybe a notebook type thing, or maybe a little board with all the basics on that could be turned into a computer… nope, nothing like that around I guess… unless you’ve heard of ARM Chromebooks and Pis. Even complete emulation on a decent PC isn’t going to be as horrible as emulating x86 on ARM. (Theoretically, in the before times, Acorn had intended efficient PC emulation, and their Archimedes ran its emulator at somewhere around half clockspeed equivalent; IDK if that capability fell out of ARM, or whether ppl are just doing it stupid now.)

I just don’t think Apple can get their shit together to do this. In recent years they’ve become increasingly chaotic and I just don’t think they have the drive to do this.

They seem to do best when they buy things like their OS or procs from other people. This will definitely make servicing a throttled affair. One of the many reasons I avoid them as much as possible. But they will send their paid ad shills in here to keep us from doubting their abilities as they always do with a number of forums and websites.

Buying an OS from Steve Jobs isn’t exactly “other people”.

It’s basically an evolution of the iPad Pro, and they seem to have that together alright.

Glad I just bought a brand new iMac for my wife. It’ll probably be worth something if it’s the last of their x86 products.

Yes, in 3 decades, if you keep it nice, it might be back up to nearly a quarter of its sticker price, provided you hold on through the 10-to-25-year “worthless junk you can’t even give away” phase.

It won’t, because the software shifts to the ARM platform and you’ll be left without updates. Within 3-5 years any x86 mac will be worthless.

Oh no, of course they won’t be worthless – they’ll still be perfectly suitable Windows machines.

I have an iBook from the PowerPC era, maybe 20 years old… It’s worth nothing. I literally got it for free.

Never should have left Motorola :-)

But why the switch?

It seems like not many years ago Apple announced they were giving up RISC, the Motorola RISC, and moving to mainstream Intel. Now they are shifting yet again.

I think there are a few reasons:

– I suspect it’ll give them a huge advantage in laptop battery life. From my limited perspective, they’ve already got a leg up on any sort of Windows laptop in terms of battery life, and this will give them an even bigger lead.

– I imagine in the long run, it’ll also save them money, as they won’t be paying Intel.

– And as mentioned, it’ll make it easier to write something cross platform across their own ecosystem, while making it slightly more difficult to port that software to their competitor’s ecosystem.

Main reason: all the software switches to ARM because there won’t be any more x86 machines on the market, so all the people with x86 macs are forced to upgrade or lose support.

With one swoop they kill the entire second hand market for x86 macs as well. Bottom line: buy a new mac.

Why would all the software companies who aren’t Apple, but release software for Mac, dump all their existing and potential customers?

Don’t know – maybe for the same reason they did so in the previous Mac architecture shifts.

Because as people upgrade to ARM Macs, there will come a point where only a small portion of their customers are still on the Intel platform, and they can’t afford to lose money maintaining support for a dying platform. Apple switches to ARM, and when the existing machine base starts dying, the software vendors follow suit.

Apple laptops have a predicted mechanical half-life of around 15 years in use, but battery shelf life means the average Mac laptop will be replaced at year 6 to 8.
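Taking the quoted 15-year figure at face value, and assuming failures follow a simple exponential decay (an assumption, not a measured reliability model), the share of machines still mechanically sound when batteries force replacement works out as follows.

```python
def surviving_fraction(years, half_life_years=15.0):
    """Fraction of machines still mechanically sound after `years`,
    assuming exponential failure with the given half-life (an assumption)."""
    return 0.5 ** (years / half_life_years)


for year in (6, 8):
    print(year, f"{surviving_fraction(year):.0%}")
# prints:
# 6 76%
# 8 69%
```

Under these assumptions, roughly two thirds to three quarters of machines would still work mechanically when they are retired.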

It was the IBM/Apple/Motorola RISC. It ran too hot. Who knows, with 4 and 12nm processes today it might be great.

The people doing the PPC design just could not squeeze one bit more performance out of the PPC design, nor could they get the power consumption down. A dual G5 Mac tower also made a pretty decent room warmer.

When Apple abandoned 68k, it was because they were the biggest consumers of 68k chips, and therefore paying the bulk of development costs to (try to) keep 68k current. Maybe PowerPC wasn’t such a smart move, because it was just the same problem again, but the switch to Intel, even if it left a bad taste, meant that Apple wasn’t having to singlehandedly drag an architecture into the current decade. The switch to ARM was another inevitable move.

Where this ends up is basically up to the software giants. If, for instance, the Adobe lineup ran on Linux, a lot of [creative] people would ditch the Mac…

It would literally be easier for Adobe to create their own operating system than deal with that herd of cats.

You’d need a near-flawless full feature support for accelerated audio/video/graphics, color management, multi-monitor, printing, and universal binary software distribution, before something like Adobe could move to Linux.

Linux is lacking in all of those. The sad thing is that the Linux crowd considers things like, “it prints a page” as “printing support”, when it’s painfully obvious that the hardware is not properly supported and you can’t do shit with it.

It would also be easier for Adobe to create their own operating system based on BSD to allow easy porting, than deal with all the whining of how slow all their stuff runs on ARM unless rewritten from the ground up.

That said, Adobe lost the game when they started forcing cloud subscriptions. No more pirated Photoshop = no more users, because it takes substantial time to become proficient in the software and nobody’s gonna pay their prices just to try. Competitors like Affinity are catching up in features, so their days are numbered.

Adopting a Stadia-like approach would mean the platform matters little.

You mean streaming the entire program interface from the cloud?

Not gonna happen. Graphics programs need instant user response. Even a tiny bit of lag between your pen and the canvas totally ruins your style.

Screen streaming is a total killer for applications like Photoshop, because drawing demands no lag. If your pen input lags by just a few extra milliseconds, your lines turn from curves to squares.

I purchased the full CS6 suite as soon as I heard they were going cloud, and have been using it ever since. I have and will purchase every application Affinity makes. I still use Photoshop and Illustrator because all my clients are still on Adobe, but I support Affinity with my purchases in the hope they continue to make inroads in the creative industries.

> when it’s painfully obvious that the hardware is not properly supported and you can’t do shit with it.

Whose fault is that? Look to nvidia as an example of how that’s a flawed argument:

Rather than working to embrace widely established standards across multiple platforms, nvidia reinvents the wheel and forces their substandard proprietary stack down our throats. Hardware support relies on the manufacturer of said hardware caring enough to work with Linux kernel developers to make the hardware fully functional. AMD, on the other hand, had no problem with it. Their open-source stack (with the exception of loadable firmware) is included in the Linux kernel on nearly every distribution. Nearly every AMD GPU made in the past 8 years just works, out of box, without installing anything or having to perform any tweaking/adjustment. Even Intel pulled it off with their iGPUs. nvidia’s refusal plays no small part in companies like Adobe being unable (or unwilling) to support Linux ports of their software. The same applies to the gaming industry, solely because of nvidia and Microsoft.

Software availability is usually (not always) the catalyst for hardware support. Say Adobe has a really good year and decides to turn some of those profits into a Linux team, and they successfully port Indesign and Photoshop to Linux. Let’s be honest, porting the software itself would be trivial. What matters is acceleration, and the only disparity is the fact that nvidia can’t compete with AMD or Intel in that market, precisely because of their decisions. If ported, Adobe (and now countless other firms) would work to improve hardware support specifically because there’s now incentive (and serious money) to do so. Companies like nvidia would have incentive (and partnership opportunities) to make an AMD-esque stack so that Adobe products would have feature parity, regardless of GPU.

This problem is explicitly why I select my hardware from companies who appear to care about my choices or, at the very least, don’t stop me from making them. My audio interface (Peachtree Audio X1) works flawlessly. My AMD R9 Fury X works flawlessly. My AMD 5700 XT works flawlessly. My HP LaserJets and Epson Artisan work flawlessly.

Is the problem *really* Linux?

The problem is open source. Do you really want thousands of contributors working in their spare time and making guesses about hardware? And you depend on it to make deadlines? And you are really talking about the file system aren’t you? I use Linux based systems all the time. Mostly CentOS 7, Ubuntu and Debian – and Ubuntu Core/FriendlyCore on x86 hosts and a range of ARM boards. The hosts have a crashy tendency and chasing down dependencies and configurations to do something simple is incredibly frustrating and time consuming.

The only thing lacking from OS X for me is better ways to use tools developed for Linux. But a lot of good stuff, like KiCAD, has OS X as a target these days. There is a reason that video and music professionals pay full price for new Macs, like very nicely integrated reliable high performance and never crashing.

And really, what does whose fault it is have to do with any of this?

> Do you really want thousands of contributors working in their spare time and making guesses about hardware?

That’s not what they do. The only prominent example that comes to mind is nouveau, the reverse-engineered open source stack for nvidia GPUs that exists precisely because nvidia won’t work with the Linux community at large.

> And you depend on it to make deadlines?

Yes. Contrary to popular belief, there are development schedules for open source projects, and there *is* money in it.

> And you are really talking about the file system aren’t you?

What? No. Where did you get that from?

> The hosts have a crashy tendency and chasing down dependencies and configurations to do something simple is incredibly frustrating and time consuming.

Your gripe is with specific distributions behaving poorly. File a bug report. I cannot say I share your experiences.

> The only thing lacking from OS X for me is better ways to use tools developed for Linux.

Speaking of dependency hell…

> like very nicely integrated reliable high performance and never crashing.

My Linux systems don’t crash. What’s your point?

> And really, what does whose fault have to do with any of this?

I already explained that, in great detail.

> And you depend on it to make deadlines?

> Yes. Contrary to popular belief, there are development schedules for open source projects, and there *is* money in it.

I wasn’t clear. I meant meeting production deadlines in video, design, CAD, etc., as with Final Cut Pro, the Adobe suite, and all that. There is also the variation on the old “Nobody ever got fired for buying IBM”.

> And you are really talking about the file system aren’t you?

> What? No. Where did you get that from?

Linux is the kernel. Nearly everything you do depends on the “File System”: the desktop, utilities, and tools.

> My Linux systems don’t crash. What’s your point?

My Linux systems do crash. What’s your point?

> The problem is open source. Do you really want thousands of contributors working in their spare time and making guesses about hardware?

No, so we buy hardware from manufacturers that openly support said contributors, either by:

– providing documentation for writing drivers (you know, like how manufacturers _used_ to provide documentation)

– sponsoring or paying developers to spend time writing/improving the open-source drivers

If something is reverse-engineered, I avoid it like the plague. I will not knowingly buy hardware which requires reverse-engineered drivers to operate. I do my homework.

No different to how Windows is: “assuming” it will work will bite you some day, as you plug the printer you bought last week into your shiny new Windows 12 laptop and find that the company decided yesterday that they wouldn’t be supporting that printer on Windows 12.

This isn’t hypothetical, I’ve heard of sound card manufacturers that refused to support Windows NT-based OSes (Win2000, WinXP) in favour of Windows 98 and ME. (Yes, this was a while ago.)

There’s a growing junkpile of hardware out there, which doesn’t work on Windows 10, but works on Windows 7 and earlier.

Meanwhile, I can dig out a crusty old PCMCIA network card from 1995, slap it in my Core i5 laptop from 2013, and an “eth0” interface pops up, which was handy years ago when a colleague would hand me a 3G router to set up and I needed to access two Ethernet networks simultaneously.

If support for a piece of hardware is poor, it’s because its manufacturer has made the decision to make the support for that hardware poor.

>crusty old PCMCIA network card from 1995

Your priorities are totally different from what most people have, and you’re living in the past. If I need a network interface, I can just walk into the nearest SUPERMARKET to buy a generic USB-ethernet adapter off the shelf. Chances are it will work just by plugging in – at least on Windows and Mac.

> the company decided yesterday that they wouldn’t be supporting that printer

That’s the same problem for Linux. If the company doesn’t directly support the latest Ububu Nifty Nosebleed, then you’re SOL because you have to use the community provided generic driver and that’s going to kill all the features.

The difference is that the Linux ecosystem is actively rejecting direct support by the manufacturers, by refusing the binary-only drivers they write to protect their IP, and because the Linux ecosystem is so fragmented that trying to support everyone is a nightmare anyway. I get the idea, we want to maintain our own drivers so we can support them indefinitely, but for the regular user that means nobody maintains their drivers because:

1) only a handful of people are competent enough

2) they’re only interested in supporting the hardware they use, not what you use.

You get ample support for a PCMCIA card from the 90’s, but not for a printer from 2019. Which goes back to the original point. Linux as a platform for end user software and productivity is horrible, and it would be easier for Adobe to write their own OS from scratch and distribute that, than try to reach customers through Linux.

>If support for a piece of hardware is poor, it’s because its manufacturer has made the decision to make the support for that hardware poor.

Or, it’s because there is no universally accepted stable standard ABI for driver modules. There’s an ideological reason behind it: to try and extort hardware manufacturers into giving the source code to their drivers rather than simply the binaries. Of course that doesn’t work when Linux is such a small player that the OEMs can simply ignore it, but that doesn’t stop Linux fans from crying persecution and blaming everyone else.

There’s the Linux Standard Base, though, which is an attempt, but as usual the community is divided and some adhere to it while others won’t. As long as this in-fighting continues, the hardware manufacturers just say “no”.

> you’re living in the past

… says the one championing the bastard child of VMS, CP/M and Xenix.

>says the one…

Way to miss the point. It’s a complete red herring to support obscure and esoteric hardware that you can’t even buy anymore, when the Linux (eco)system fails to support everyday stuff that people have and want.

Insisting on using Linux is like having a voluntary gluten allergy. “Can’t eat that… or that… or that… or that…”.

From a hardware manufacturer’s point of view, there are two things that need to be in place:

1) a standard stable ABI that allows them to distribute binary blob drivers so they don’t have to give away their trade secrets and lose the market to copycats

2) a standard universal method of software distribution so they can deliver the user interface that goes with the driver and the hardware, plus other software that applies

The Linux ecosystem is horrible for the HW manufacturers, because they have to rely on third parties to provide the user experience for their product. If you say “my gadget does X” then you have to include the software that makes it do X, but that means supporting up to 400 different distributions. Even supporting the top five is too much effort for such a small market.

In Linux, the general assumption is that the hardware you buy is only components. You buy the chip, not the product. That has the side effect that there is no product differentiation – no new features that aren’t part of the generic description because someone else would have to write the software to use it. The OEMs are not interested in that.

Hmm…… I can smell some preferential bias here.

>The only thing lacking from OS X for me is better ways to use tools developed for Linux. But a lot of good stuff, like KiCAD, has OS X as a target these days.

A great deal of software only has Windows and Linux ports. For example:

• Every FPGA suite, like Intel Quartus and Xilinx Vivado

(On that note, Apple’s “Afterburner Card” was programmed using a Linux host)

• Speaking of ARM, every tool made by ARM, like ARM Development Studio

(And here, Apple is transitioning to ARM)

• OpenGL-, OpenCL- and CUDA-dependent applications like Blender and TensorFlow

(https://docs.blender.org/manual/en/latest/render/cycles/gpu_rendering.html

https://blender.stackexchange.com/q/128693

https://blender.stackexchange.com/q/129583)

(They can run without acceleration, but will lack decent GPU support unless support for Apple’s proprietary, Apple-only “Metal 2” is added to them)

• OpenGL- and Vulkan-based graphical applications and games

(There is lots and lots to say here, but I guess you won’t question it)

>Whose fault is that?

That’s beside the point. No matter who you blame, objectively speaking, it still does not work properly and is lacking in so many things that Linux is totally unsuited for software distributors like Adobe, which is why a crushing majority of computer users won’t touch desktop Linux with a ten-foot pole.

Objectively speaking, there’s a *reason* for that.

For what it’s worth, your information is about ten or twelve years out of date. Perhaps it’s time to catch up?

>your information is about ten or twelve years out of date

Just last month I had to return to Linux for the purpose of testing a Linux implementation of some stuff we’re working with. For starters, we had to install two different distros because stuff that would work on one didn’t work on the other, and vice versa, and my task was to figure out why and how to make it work at least in one if not the other. It was still the same general bullshit with patchy hardware support, patchy software support, broken this, broken that…

Nothing has really changed. Even simple UX things like, why can’t I just drag and drop a program shortcut icon into the programs menu, just aren’t there. When I have to install software that can’t be found in the repository, it doesn’t necessarily show up anywhere. I have to spend many working days just configuring Linux instead of getting anything done, which is ironic since the entire point of the exercise was to save money and time by using FOSS.

In the end the conclusion was, “Our clients are more familiar with Windows anyways… so let’s just not waste any more on this.”

Oh, and:

>Rather than working to embrace widely established standards across multiple platforms, nvidia reinvents the wheel and forces their substandard proprietary stack down our throats.

There was a very good reason for that. The standard X.org just wouldn’t support all the features, and the way it was structured and implemented meant horrible performance penalties for nVidia, so they made use of the modular nature of the system and replaced the bits that made it suck. Plain and simple: X.Org sucked really bad and they were being difficult to work with as an organization, so nVidia bypassed them to make their stuff work.

Had nVidia gone with the “standard” that Linux provided them, their hardware would not have worked properly. AMD’s stuff didn’t work properly – it sucked. In fact, nVidia was the only one that could provide proper hardware acceleration under Linux while the others were broken in various ways.

This is exactly what I’m talking about. You play nice with the Linux community and you’re mired in politics and herding cats, and your stuff won’t work. You bypass the community and provide proper support for your own hardware, and the community complains about it.

You just can’t win.

Your talking points might hold up to some scrutiny if they were even remotely true or in line with the subject at hand.

When I was talking about nvidia’s “re-implementation” I was talking specifically about Wayland. Anyone with even a cursory understanding of the subject matter already knows this. nvidia works *OK* in Xorg, as well as Xorg *can* work. nvidia on Xorg doesn’t have proper HiDPI support, proper tearing prevention or client isolation, but neither does anything else. Which, you know, is *why* Wayland is the default experience in most distributions… except where nvidia is involved, precisely *because* of nvidia’s politics. Which, you know, was my point.

Moreover…

“AMD’s stuff didn’t work properly”. According to *who*? They’ve worked properly for YEARS, far and away better than nvidia.

The only politics in play here are *nvidia’s*. Had nvidia gone with the *standard* supplied, and actually *cared* enough to adopt those standards, their hardware would work fine. They *explicitly* refuse to open source a stack, or even work to improve existing implementations. THAT’S why nobody takes them seriously.

Your entire premise relies on outmoded and outdated information that is woefully incorrect due – in no small part – to your confirmation biases.

You have to excuse Luke, he’s had a thing against linux since that time he flushed his RAM then thought it did a core dump in his cheerios.

The Linux community is a lot like a Presbyterian convention. They get together to plan a cohesive future and when the convention ends there are seven new versions of Presbyterianism who won’t talk to each other. I run CentOS 7 and Debian Server and Ubuntu. Getting things working on each one is like switching from right hand drive to left hand drive cars.

>I was talking specifically about Wayland.

I was talking about nVidia before Wayland. I haven’t kept up with what has happened afterwards because I can’t be bothered anymore.

> They *explicitly* refuse to open source a stack

Naturally. The only reason why AMD is playing ball is because they’re the underdog on the market in general and they have nothing to lose. AMD doesn’t have anything that nVidia doesn’t also have, so they can release the information and grab the brownie points. Meanwhile, nVidia has to protect its IP against competition, so they CAN’T do it.

The “standard” in Linux is a political tool that is designed to inconvenience manufacturers into giving up their IP to the community for free.

And as for the “standard”, there’s again Wayland, and then there’s Mir, so if you want to seamlessly support both what are you gonna do? Well, you do like nVidia and implement your own, so you don’t have to care what the other bozos are fighting about today.

I don’t think anyone who can install Linux would stand for Adobe’s licensing and pricing model. Mac users have been abandoning Adobe; Illustrator has no special value any more; Premiere has many alternatives, not least the vastly cheaper FCPX, etc.; Photoshop is nice, but not £1k better than the alternatives. It’s only really InDesign which lacks a good competitor. Why pay £1200/year for software when you can buy an equivalent for a few hundred pounds and get free upgrades?

> photoshop is nice, but not £1k better than the alternatives

Sure. You can do half the things in twice the time with GIMP or Krita, but it costs zero money so that’s got to be better value right there!

There’s no real alternatives for this kind of software in Linux for the exact same reasons why Adobe doesn’t release the software for Linux: it’s exceedingly difficult to distribute if you don’t release it as open source, it’s even more difficult to offer support for all the myriad configurations, and nobody wants to pay any money for it.

A little late to the party, are we not, HaD?

“These versions of Windows can’t run apps compiled for x86,”

Windows 10 on ARM can run x86 apps. They do binary translation at first run, so the result can be faster than emulation.

Apple can do it too if there is not a better solution.

Why is it always the marketing people that decide what hardware we should be able to buy?

Lately it seems most hardware is riddled with bugs, and their attitude is just “fix it in software”.

Back in the day there was something called hardware documentation, and you could write your own firmware.

Today it’s all marketing BS, and everything else has an NDA!

Steve Jobs was essentially just the marketing guy at Apple.

And yet he managed to proverbially sell sand to suckers in Sahara.

What do you mean “yet”? Jobs was a narcissist who took credit for other people’s work while his own escapades provided nothing but failures, because he didn’t understand the technology he worked with. For example, calling his engineers idiots for not being able to use dynamic software libraries with ROM substituting for more expensive RAM.

When he got back to Apple, the company was in trouble because they didn’t have a snake-oil salesman in the house anymore. They were pushing the same products using the same adverts and losing market share rapidly, so they hired Jobs back exactly because he could spin bullshit better than anyone else. That was his job.

Sounds like you read his biography.

This will flop if Apple doesn’t focus on software quality. The iPhone has turned into a software atrocity. I’m not sure if it is still more stable than Android, or has fewer UI quirks. Apple was positioned well to have some of the best x86 computers and run some top-notch software, but then, seemingly coinciding with the passing of Steve, they lost their focus on software quality, instead taking the running-away-from-the-bear analogy to heart. (You don’t have to be the fastest, just faster than the slowest.) If there were a non-Android alternative, I’d try it in a heartbeat. I suspect the desktops are suffering similarly, and porting some apps is not simply a matter of recompiling. So I wait for the bug reports, security holes, and general software mayhem out of Cupertino.

Your misinformed rant is ironic, because Apple is ditching Intel due to Intel’s poor quality control.

Likely the choice was made because Intel processors have been having lots of issues with security: side-channel attacks, listening in on caches, whatever; processes cannot be separated well enough on Intel. And the basic cause is that they add features which are great for Intel or for hardware manufacturers, but not really useful for end users.

So not quality control, but culture, is what has done Intel in.

Having said that, let’s see 10 years from now if Apple did any better.

They could have gone for AMD instead and kept using x86 – the fact that they didn’t means that it has nothing to do with Intel and everything to do with switching the ISA to make older Macs incompatible with newer software, thus boosting sales and re-igniting the old PPC vs. x86 war, where Apple turns its marketing towards claiming that the ARM platform is technologically superior to the PC.

Apple is ditching Intel because their goal is to turn all computers into iPad-type devices. Basically advertising delivery and user-tracking devices to monetize user data. The goal is to lock down the OS, remove the command prompt, and prevent the user from doing anything but input a credit card number, consume media, and surf the net in Apple’s sandbox. It’s what users really want because Apple says so.

HACKADAY>>> THESE PEOPLE HATE THE RIGHT TO REPAIR.

WHY ARE YOU ACTING LIKE THEY STARTED THE ANDROID REVOLUTION?

So, use your right to repair your caps lock. ;-)

If the performance is there, I think it probably is a good move. A potential problem is multi-sourcing CPU manufacturing. But I can see the logic in ARM across the whole line: simplification. I am sure there will be a few bumps in the process, but long-term it could be good (for them). On the other hand, there’s more potential for closed-system abuse…

We don’t use Mac or Windows at home, as Linux does it all for us.

They’re not going to get 2-day battery life AND performance; they’re not even gonna get 1-day battery life and performance. 12 hours and the performance of a Chromebook, maybe.

Now there are performance ARM CPUs, with 64 cores and plenty of GHz, but they’re gonna be as thirsty as a low-power x86, and they need four cores for every x86 core to match it.

Rubbish. iPad Pros already have more processing power than low-to-mid-end laptops, and better battery life on a smaller device.

“it was an ARM Cortex-A8 manufacturded by Samsung”

They say you could smell the production line for miles around…

I love the smell of silane in the morning.

“Intel’s x86 architecture is the third to grace Apple’s desktop computer products, succeeding PowerPC and the Motorola 68000 family before it.”

How could it be that we are forgetting the 6502 of Apple I and ][ here?

And the 65816 in the IIGS.

If the 65816 is separate from the 6502 architecture then AMD64/Intel64 is separate from the i386 architecture. While not directly comparable the Altivec versions of PPC are significantly different from the original too.

So 6.5 architectures or so?

I suspect that this will also come with a greater shift towards cloud based software as a service.

Ah, the old network computing one-two: thin client, and sell ’em the cycles. Apple compute cloud; hope the corner store has enough iTunes cards for that project you’re trying to do on the weekend.

On the last couple of releases of OS X, downloads and various docs from apps go to iCloud by default, which is why I had a hell of a time finding my latest work or downloads until I shut off freakin’ iCloud. Apple has been screwing with the interface, breaking my old habits and breaking their own carved-in-stone HMI rules. I have to assume this is an attempt to merge Finder with iOS. A truly terrible idea.

I hear you. My iPhone (my work-required phone; I’d rather have Android) keeps wanting to connect to iCloud, asking for a password. It’s a pain, as it pops up every day. I want nothing to do with my stuff in the ‘cloud’. That brings reliance on a service, which amounts to being ‘locked in’ to that service. That’s bad. I don’t keep anything in the cloud. Period. Applications run on the physical box, not cloud-based applications where companies can nickel and dime you to death. All backups/pictures are on external drives, with some stored off-site for added safety. Life is ‘simple’. I could go off grid with no loss of data.

Yes, I had the same problem – kept “losing” notes till I realized the default was iCloud :-(

Interesting to note a few nods to the Newton in the latest presentation: “Scribble”, the ARM architecture; they even showed the Newton light bulb logo!

Long live the Newton!

The most egregious crime I have found is the stone-age “rename” for files. You type text and if you don’t hit enter, your work is lost if you change focus. The Apple HMI NEVER loses work by the user – till now. I could not figure out what was wrong for quite a while. When I realized they broke the monotony rule I could not believe it. iPhone apps are like that with each having a different way to select or delete, etc. But OS X Finder? If they do this, and the impossible to decipher file listings that split them alphabetically grouped by days, weeks, months, and somewhere down at the very bottom are the ones you just added, then what is next? There must be a way to disable but I have not come up with the search key words to find it. I’m afraid we will soon have keyboards you hold like a phone and use your thumbs.

Yes stay alive my little Message Pad 100 until Apple on ARM fever hits the fanbois and I can unload you on eBay for $500

I have a box of Newton eMate 300 from a school system. I wonder if I can squeeze a modern ARM in there? I have a bunch of these https://www.friendlyarm.com/index.php?route=product/product&product_id=212 and a few of these discontinued ones https://andahammer.com/index.php?route=product/product&product_id=310

Or even a small RK3399 board (got some of those too). All I need is time :-)

What are you talking about? macOS has changed processors before and it all went quite smoothly.

You mean the 2-year support for hw that is “obsolete”?

On a new fruit machine:

ppc apps are a no go

32 bit apps are a no go

uncommon peripherals are a no go, which ran just fine 2 releases earlier

It’s easy to make a switch to anything if compatibility with the older hw/sw is not even on the list.

I wonder how the professional market will react to this. What about the accelerator cards for the high-end cameras, professional audio gear (e.g. 128-channel sound cards), etc.?

The two-version backward compatibility goes back to the “System” days on the PPC. The fact I can still use a 5-year-old MacBook Pro is exceptional.

I had the same PC from 2006 to 2018 with small upgrades. That’s not exceptional, unless you’re used to being screwed over by Apple forcing you to buy new hardware to keep up.

I have two 2010 macs running fine. Both i7s. We use them for proper work too, including video editing.

“Apple are by no means the first to try and bring ARM chips to bear for general purpose computing” I don’t know about “by no means”. The Newton was 1992 or so, and the eMate around 1997 (why does nobody hack these cool clamshells?).

There was an ARM Apple II prototype about the time of the Archimedes. I really wished they had run with that. Apple have been very involved with ARM from the beginning – not surprising given the ARM 6502 relation and very fast 6502 emulation.

Excellent article. And I wished I’d got a comment in early to optimise chances of a reply!

But I had hoped that Apple would have taken on RISC-V instead and run with it, rather than yet another ARM platform.

Any idea why RISC-V would be unsuitable as an alternative to ARM?

I suppose they didn’t want yet another architecture involved if they already had iOS on ARM anyways.

RISC-V is still pretty young, for example vector ISA spec has just been released.

On the other hand Apple got an ARMv8 full license (so no royalties) and a lot of experience on it.

now you’ve figured out their 2030 strategy when they’ve saturated the market with ARM based devices!

ARM.. Still remember Compaq PDAs with StrongARM and XScale..

They competed with MIPS R4000 and Hitachi SH3 on Win CE devices..

Especially handhelds and palm tops.

This is going to be the PowerPC -> Intel switch all over again, but for the worse. Yeah, ARM has great potential for low power consumption, and many of the chipsets are fantastic performers for what they are, but I don’t expect this to go over well with people who rely on a certain set of software professionally. An entire market segment will be glorified iPads with built-in keyboards, likely with the iOS App Store ecosystem. While that would bring a great deal of ready-made applications, a lot of important software is going to just stop working. The big players like Adobe will either port their software or create functionally similar counterparts… or just not support the platform at all.

That said, if these systems *are* essentially oversized iPads with keyboards, what’s the point? No, seriously… what’s the point? You can already get an iPad with a keyboard. Even if this new silicon reaches performance parity with a baseline dual-core i5, what about those who need MORE? Is Apple just going to stop catering to the high-end professional market, in segments like audio production or graphics/design/editing?

I’m no marketing genius, nor am I an expert on processor design and fabrication, but this seems a little shortsighted to me. Just sounds like a version of “640KB ought to be enough for anybody.”

This is why I’d hoped Apple would champion RISC-V; then we would have a hope of serious high-performance computing, including code sharing between cloud and desktop software.

I don’t want or need much in the way of power saving when it comes to a work PC.

If I do I’d use a thin client.

If I use a thin client I want the backend to run the same software as the desktop experience.

Which is not likely if desktop runs on ARM and servers don’t!

Imagine you bought a Mac Pro this year and now Apple tells you your Intel CPU is worth nothing… maybe buying a new motorcycle would’ve been a better idea…

“Apple has said that it still has plans to launch new Intel-based Macs that have not even been announced yet. The company also committed to some form of long-term support for Intel Macs.” – Ars Technica article

golly it must suck to be so uninformed

Excuse my thoughts, but how many updates did the Macs running on PowerPC get when Apple moved over to Intel CPUs? And still, no need to be rude. Work on your manners.

No they won’t – they’re not gonna compete with themselves across two systems. They’re either going to be using intel and ARM on two different categories like desktops vs. laptops, or they’re lying and they’ll ditch the x86 plans the moment the ARM gains any sales traction – because they have an incentive to switch the platform to force old users to upgrade.

Software developers might still end up having to support both x86 and ARM for a while if they don’t want to cut out their user base of people running older hardware.

At least to start, it seems they are not aiming this at their power users. Rather, they are targeting those who are looking for a laptop instead of an iPad Pro. If you believe the hype from various ARM SOC makers, the arch can get workstation performance with a big enough power budget so they may eventually get to ARM based Mac Pros. Apple probably hopes the software base and use cases will materialize before they need to create an SOC that has a triple digit wattage.

We have known for a few years that Apple was going to switch to in house ARM chips for the Mac. Certainly no surprise here!

Apple totally understand the ARM architecture, probably better than ARM themselves. They have high performance and low power in the iPad Pros. Maybe not an i9 yet, but at matched performance with Intel, the ARM beats it substantially on power.

And, Apple have successfully transitioned before, but this time they also have experience with ARM and have a huge current base using it. This transition will be even less painful than the previous transitions. The top-end Macs’ transition will take longer, but they will transition successfully too.

BTW I’m not a Mac user.

And for those that think that Apple have been gouging, take a close look at Intel!!!

I’m about to buy a replacement MacBook since mine is ~ 7 years old. I care as much about legacy apps as new ones. If I get an Intel one, should I expect that new apps will be delivered for it as well as ARM in the next 7 years ? The advantage of the Intel version is I can also run BootCamp (occasionally) and it’s available now. Any suggestions ?

Remember a company named Orange PC? From the early times of the Macintosh, they made PC cards to install inside various Macintosh computers. Initially they were a complete PC on a card, sharing only storage, monitor, and I/O devices.

When PPC CPUs got powerful enough, Orange PC released a simple Intel CPU on a PCI card, with most everything else emulated or using the Mac hardware. IIRC it even used a chunk of the host’s RAM instead of having its own.

By then things were getting a bit shaky for Orange PC. The PPC had become powerful enough to do a just tolerable job of emulation to run Windows, but for serious PC game play you wanted the Orange PC CPU board.

Then Apple switched to Intel CPUs and Orange PC was dead. Not even their line of other Macintosh accessories could save them. Shortly before the switch to Intel I asked if they were going to make OS 9 drivers for their USB 2.0 card. Nope, flat out not interested, despite there being at the time millions of Mac users still not running OS X, and all the OS X users also using Classic, with nothing running in Classic able to use USB 2.0. An OS 9 USB 2.0 driver for $5 could have brought them a good chunk of change for a couple of years. Identify a need, make a product, and market the hell out of it before the need goes away.

So with a switch to ARM architecture, how about an Orange PC revival, on PCI Express, to plug an Intel CPU into the next generation of Macintosh?

Macs not crashing is a myth. If your Mac doesn’t crash you’re not a power user.

Sorry to be “that guy”, but Apple ditched 32-bit x86 instruction set support years ago. Currently Apple computers exclusively run x64-based programs. I know people use x86 as shorthand for x86-64, but it is worth noting.

This is not new.

Apple has been slowly changing macOS and its developer tools for some time now. They did the same thing previously with other processor changes. Hiding the first half of the transition before a public announcement makes it seem smoother.

But will it still be possible to install software from sources other than the app store?

Already now, one has to set a flag in the system settings to allow such folly. The first time I want to open a program that is not from the app store I have to go to the application folder and right-click “open”, not just a simple click on the icon in the launchpad.

AFAIK the PowerPC to Intel switch was motivated by trends in power consumption vs performance esp. re. laptops. So much of this discussion has been about software issues with a few remarks about hardware. Clearly ARM has excelled in bang per Watt on the low side, but can anybody explain the tradeoffs at higher performance levels? Will it involve high performance cores or massive numbers of cores? Apple needs to stay competitive in performance as well as battery life.

Bit of column A, bit of column B. The iPad Pro, IIRC, has 4 high-performance cores and 4 low-power cores. Its big benchmark numbers seem to come in apps that look suspiciously like they are GPU-assisted. Intel’s on-die graphics have been lame since forever; Intel HD is roughly Nvidia 9400GT speed, while the on-die GPU in the iPad is more like GT 230 speed. You’ll probably see them try hard not to test against AMD’s APUs, especially anything Zen-based.

Wow! An old guy wakes to how much has changed. The A13 already looks performance competitive with Intel’s high end and there’s no comparison on power and price. Apple are making a historically similar bet on today’s hardware trends. And as you imply by quoting a tablet first, the whole laptop market is increasingly a sideline of mobile. AMD looks hot, but have they already missed the laptop boat?

Either say Apple “is” or say “AMD look”. The rules for singular and plural seem to have been thrown out lately. Or did Apple just declare itself to be plural?

Well, WE are not amused.

ARM SHMARM… Slaves will still make them in China, and you will still pay dearly for them in the U.S.

I have a MacBook Pro as my work-issued PC. I like it for the most part, but battery life is not its strong point. I can’t even get through a whole 8-hour work day without plugging in. My personal Lenovo X250 will still do over 10 hours; when it was new I could get over 12 hours of real-world use on a charge.

The Mac sacrifices battery life and keyboard quality to be thinner than it needs to be; it’s so thin that it’s uncomfortable to hold. I do like the build quality though: the screen is gorgeous, the audio quality is very good, and the aluminum housing is pretty slick. I wouldn’t spend my own money on one though.

I find this super annoying. I don’t often install software on my work laptop but when I do I have to deal with this. I have zero interest in the app store on a laptop and have never used it. I would like my iPhone 10000% more if I was not forced to rely on the ridiculous walled garden app store for that too.

That’s not exceptional at all; 5 years is what I’d consider the absolute bare minimum. I’ve been using my personal laptop for 5 years now and have no plans to upgrade in the foreseeable future. I used the PC it replaced for 10 years with just minor upgrades. On the Mac side, my friend just replaced his 2008 MBP with the latest model early this year.

Computer technology has plateaued. It’s not changing anywhere near as fast as it was up until the mid-2000s, upgrade cycles are longer than ever before, and most people today could use a 10-year-old PC and not know the difference. It’s not like back in the day, when a 2-year-old machine was hopelessly obsolete.

Gave Apple my money once. They bricked the tablet because I lost the email/password. Never ever will I voluntarily give them a single dime again.

To ease this process, Apple are providing access…

Wow, can’t even get plural vs. singular right. I see this so much lately, what gives? Maybe I are an idiot or maybe we am changing. Maybe the language is just changing. One final thought: welaj, lwou!

Appears to be a British fad, which some Americans are also affecting.

When it comes to the iPad and Macs, I’m not concerned about whatever Apple does with those products, because I can’t afford them. I can’t afford the latest iPhone either, so I’m four generations behind, but I see no need to dump the iPhone: as long as Apple continues to support the phone I have at hand, I’ll stick with the iPhone. Wanting a separate mobile hotspot option, I experimented with a prepaid Verizon Android phone, and man, its GUI was horrible compared to the Android Wi-Fi-only tablets I have. No use complaining, as the manufacturers are going to do whatever they believe will increase profits.

“apps will be able to run across all devices, opening up new possibilities for developers. Formerly mobile-only apps will run natively on Mac, without requiring recompilation. Obviously developers will make tweaks to interfaces and other such changes to suit the desktop”

Meanwhile, in the real world, I’m still waiting for a ton of developers to make tweaks to the interfaces of their iPhone apps to suit the iPad.

iPad has been around for 10 years and I’m still downloading apps that turn out to be crappy iPhone versions that don’t use the whole screen properly.

What a joke.