Maybe you heard about the anger surrounding Twitter’s automatic cropping of images. When users submit pictures that are too tall or too wide for the layout, Twitter automatically crops them to roughly a square. Instead of just picking, say, the largest square that’s closest to the center of the image, they use some “algorithm”, likely a neural network, trained to find people’s faces and make sure they’re cropped in.
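For comparison, the "largest square closest to the center" baseline is simple enough to sketch in a few lines. This is only an illustration, not Twitter's code; the function name `center_square_box` is made up here, and the box is returned in (left, top, right, bottom) order, which is the convention Pillow's `Image.crop` accepts.

```python
def center_square_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)          # the square can't exceed the short edge
    left = (width - side) // 2         # split the leftover width evenly
    top = (height - side) // 2         # split the leftover height evenly
    return (left, top, left + side, top + side)


# A too-tall 400x1000 image keeps its full width and loses the top and bottom.
print(center_square_box(400, 1000))
```

The point of the sketch is that this baseline never looks at the image content at all, so it cannot prefer one face over another; it can only miss both.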

The problem is that when a too-tall or too-wide image includes two or more people, and they’ve got different colored skin, the crop picks the lighter face. That’s really offensive, and something’s clearly wrong, but what?

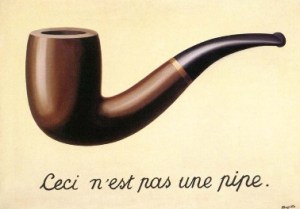

A neural network is really just a mathematical equation, with the input variables being, in this case, convolutions over the pixels in the image, and training it essentially consists of picking the values for all the coefficients. You do this by applying inputs, seeing how wrong the outputs are, and updating the coefficients to make the answer a little more right. Do this a bazillion times, with a big enough model and dataset, and you can make a machine recognize different breeds of cat.
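To make the "apply inputs, measure the error, nudge the coefficients" loop concrete, here is a deliberately tiny sketch: a model with just two coefficients, `w` and `b`, fitted to four made-up data points. A real image network has millions of coefficients and a fancier update rule, so treat the names, data and learning rate as assumptions chosen for illustration.

```python
def train(samples, epochs=2000, lr=0.01):
    """Fit y = w * x + b by repeatedly nudging w and b to shrink the error."""
    w, b = 0.0, 0.0                      # start with arbitrary coefficients
    for _ in range(epochs):
        for x, y in samples:
            error = (w * x + b) - y      # how wrong is the current output?
            w -= lr * error * x          # step each coefficient a little
            b -= lr * error              # in the direction that reduces it
    return w, b


# Made-up data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(samples)
print(round(w, 3), round(b, 3))
```

Nothing in the loop says how to get the answer, only how wrong the current answer is. Everything the model learns comes from the data and from how "wrong" is measured.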

What went wrong at Twitter? Right now it’s speculation, but my money says it lies with either the training dataset or the coefficient-update step. The problem of including people of all races in the training dataset is so blatantly obvious that we hope that’s not the problem; although getting a representative dataset is hard, it’s known to be hard, and they should be on top of that.

Which means that the issue might be coefficient fitting, and this is where math and culture collide. Imagine that your algorithm just misclassified a cat as an “airplane” or as a “lion”. You need to modify the coefficients so that they move the answer away from this result a bit, and more toward “cat”. Do you move them equally from “airplane” and “lion” or is “airplane” somehow more wrong? To capture this notion of different wrongnesses, you use a loss function that can numerically encapsulate just exactly what it is you want the network to learn, and then you take bigger or smaller steps in the right direction depending on how bad the result was.
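One common way to encode "different wrongnesses" is a cost matrix: every (true label, predicted label) pair gets its own penalty, and the loss is the expected penalty under the network's output probabilities. The sketch below assumes that approach; the labels and the cost values are invented for the example and say nothing about what Twitter or anyone else actually uses.

```python
CLASSES = ["cat", "lion", "airplane"]

# COST[true][predicted]: invented numbers. Calling a cat a lion is treated
# as a smaller mistake than calling it an airplane.
COST = {
    "cat":      {"cat": 0.0, "lion": 1.0, "airplane": 3.0},
    "lion":     {"cat": 1.0, "lion": 0.0, "airplane": 3.0},
    "airplane": {"cat": 3.0, "lion": 3.0, "airplane": 0.0},
}


def expected_cost(probs, true_label):
    """Average penalty when the network outputs `probs` over CLASSES."""
    return sum(p * COST[true_label][label] for label, p in zip(CLASSES, probs))


mostly_lion = expected_cost([0.2, 0.8, 0.0], "cat")
mostly_airplane = expected_cost([0.2, 0.0, 0.8], "cat")
print(mostly_lion < mostly_airplane)
```

The larger the expected cost, the bigger the corrective step during training. Someone has to pick every number in that table, which is exactly where the cultural judgment sneaks into the math.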

Let that sink in for a second. You need a mathematical equation that summarizes what you want the network to learn. (But not how you want it to learn it. That’s the revolutionary quality of applied neural networks.)

Now imagine, as happened to Google, your algorithm fits “gorilla” to the image of a black person. That’s wrong, but it’s categorically differently wrong from simply fitting “airplane” to the same person. How do you write the loss function that incorporates some penalty for racially offensive results? Ideally, you would want them to never happen, so you could imagine trying to identify all possible insults and assigning those outcomes an infinitely large loss. Which is essentially what Google did — their “workaround” was to stop classifying “gorilla” entirely because the loss incurred by misclassifying a person as a gorilla was so large.
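An infinite penalty on a label has a blunt practical equivalent: never let the classifier emit that label. The sketch below assumes that reading of the workaround. It uses neutral, made-up labels and probabilities, and the helper name `predict_without` is hypothetical.

```python
def predict_without(probs, banned):
    """Pick the most likely label after removing every banned label."""
    allowed = {label: p for label, p in probs.items() if label not in banned}
    if not allowed:
        return None                      # nothing left that we may say
    return max(allowed, key=allowed.get)


probs = {"cat": 0.35, "lion": 0.25, "airplane": 0.40}
print(predict_without(probs, banned=set()))
print(predict_without(probs, banned={"airplane"}))
```

The cost is visible in the second call: once a label is banned, inputs that really do belong to that class can never be recognized as such.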

This is a fundamental problem with neural networks — they’re only as good as the data and the loss function. These days, the data has become less of a problem, but getting the loss right is a multi-level game, as these neural network trainwrecks demonstrate. And it’s not as easy as writing an equation that isn’t “racist”, whatever that would mean. The loss function is being asked to encapsulate human sensitivities, navigate around them and quantify them, and eventually weigh the slight risk of making a particularly offensive misclassification against not recognizing certain animals at all.

I’m not sure this problem is solvable, even with tremendously large datasets. (There are mathematical proofs that with infinitely large datasets the model will classify everything correctly, so you needn’t worry. But how close are we to infinity? Are asymptotic proofs relevant?)

Anyway, this problem is bigger than algorithms, or even their writers, being “racist”. It may be a fundamental problem of machine learning, and we’re definitely going to see further permutations of the Twitter fiasco in the future as machine classification is being increasingly asked to respect human dignity.

Maybe they could simply scale images instead of cropping. Yes, that would mean ignorant users who didn’t know how to manage their photos would get relatively useless results, revealing who is good at this modern tech stuff (or old skool framing of photos to produce a desired result) and who stinks. Meritocracy and all that. Someone would probably call that racist as well, but it’s a harder argument to make stick.

I for one ignore posts with horrible spelling or grammar errors if the author is using their native language. If you are illiterate, why should I feel like I’m going to learn something from you?

Or have the AI provide a crop for each detected face and then deliberately select one at random. That way in the instance where there is no single good answer, at least it’s not biased towards one over another. Or provide a visual cue in the cropped image that it *is* actually cropped and in what directions. Then people aren’t misled into thinking that the framing was deliberately chosen by the poster.
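The random-selection idea can be sketched as follows, assuming some face detector has already returned one bounding box per face. The detector itself, the box values and the name `pick_crop` are placeholders for illustration.

```python
import random


def pick_crop(face_boxes, rng=random):
    """Choose one detected face uniformly at random, ignoring confidence."""
    if not face_boxes:
        return None                      # no faces: caller needs a fallback
    return rng.choice(face_boxes)


# Two hypothetical detections as (left, top, right, bottom) boxes.
boxes = [(10, 10, 110, 110), (10, 800, 110, 900)]
rng = random.Random(0)                   # seeded only to make the demo repeatable
picks = [pick_crop(boxes, rng) for _ in range(10_000)]
share_first = picks.count(boxes[0]) / len(picks)
print(round(share_first, 2))
```

Because the detector's confidence scores are never consulted, a systematically higher confidence for one kind of face cannot tilt the choice.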

I like this idea – as the problem isn’t that it doesn’t notice the other person. It seems to identify them perfectly if they are on their own, it just has higher confidence or some other reason to bias it towards the white face.

I don’t doubt you’d still get lots of complaints about racism with that, as there are still fewer ethnic minorities in many cases, so they would get selected less…

There would still be issues if it’s also spotting (with a lower confidence) dogs. Say it identifies a white face with confidence 1, black 0.8, and dog 0.6, and two mugs and a plate on the table with 0.2.

It needs to apply some cut-off point. So it’d be easy to imagine the scenario where a white guy and dog always crops to the guy, whereas a black guy and dog randomly shows the guy or the dog.
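One way that scenario could arise is with a cut-off that is relative to the best detection in the image. The sketch below assumes such a rule with a ratio of 0.7, which is a guess made purely to reproduce the example; the confidence values are the ones quoted above.

```python
def candidates(detections, rel_cutoff=0.7):
    """Keep every detection scoring at least rel_cutoff times the best one."""
    top = max(detections.values())
    return sorted(label for label, score in detections.items()
                  if score >= rel_cutoff * top)


# Confidence values taken from the comment above.
print(candidates({"lighter face": 1.0, "dog": 0.6}))
print(candidates({"darker face": 0.8, "dog": 0.6}))
```

With these numbers the dog is filtered out next to the higher-confidence face but survives next to the lower-confidence one, so a random pick among the candidates would sometimes crop to the dog in the second photo only.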

Whilst it might have gone unnoticed, that would now seem likely to cause offence also, especially now everyone is looking for offence in the algorithm.

Time to open source it?

“algorithm is best effort, don’t like it send me your improvement”

> If you are illiterate, why should I feel like I’m going to learn something from you?

Literacy does not reliably correlate with intelligence or ability to contribute things worth learning; to suggest otherwise is pretty messed up.

For example, there are disabilities that impede literacy but do not impede intelligence. I personally know multiple incredibly intelligent people who have extreme difficulty conveying their ideas through text. I would much rather have someone convey more of their intelligent thoughts and ideas with poorer quality writing than force them to spend an unreasonable amount of time correcting unavoidable errors and thereby contributing less to any particular conversation.

Another example is that illiteracy can be the result of a lack of formal education. Some of the greatest insights come from those without (or with limited) formal education.

Yet another example: many languages, including English, have several dialects that, when written or spoken, will appear to have horrible spelling or grammar to those unfamiliar with said dialect.

There is another issue, and that is that there is no reliable way to determine if someone is using their first language or not without asking or having them deliberately divulge that information. This issue can lead to one assuming that poor literacy is not the result of using a second language but some other issue which you find to be indicative of a lack of intelligence.

Disregarding the contributions of these people will limit the diversity of the information sources you avail yourself to. Which is exactly the kind of problem that leads to one having messed up ideas like “literacy reliably correlates with intelligence and worth of intellectual contribution”.

I find a more reliable way to determine if someone has something of value to contribute is whether or not they espouse messed up ideas like the one you just did. That said, you have contributed multiple things of value, which I accept, because I know that absolutely everyone has things of value to contribute. Though some make less effort and fail to act in good faith compared to others.

– Nicely done, very well said.

This. Forgive me opening up a bit, but… I have Asperger’s, and that has caused some… unpleasant misunderstandings. The most notable of these is unfortunately part of a relationship where it’s deliberate, and I won’t discuss that except to say that it’s a relationship I’m working my way out of right now.

The rest of it tho is me being odd in odd ways because sometimes I’m oblivious to things that everyone around me would perceive as being quite clearly communicated. The person I was interacting with was trying to tell me I was off the rails and did so with the intensity and fervency of Captain Picard yelling “RED ALERT” as the torpedoes begin to shake the bridge, but I didn’t understand so I just ignored it (I do that :-/ ) because I hate being interrupted that freaking much… fifteen minutes later I’m like, “wait, did I screw that up?” and then when they say “yep!” I’m awkwardly apologizing for quite a while after. Happens more than I’d like to admit TBH, even to myself.

Mind you this is after well over a decade’s worth of therapy.

On an entirely unrelated — although still *extremely* relevant — note, thank heavens for autocorrect. I don’t know who thought “oh gee typing on a touchscreen is freaking *awesome* and everyone should do it” but I kind of want to dope-slap them*. What comes out of mine, before editing, is only marginally classifiable as language. It’s not “fat finger syndrome” so much as “if there’s no tactile feedback, because it’s a flat bit of glass over a display and not an actual real keyboard, I’m not going to learn to hit the non-existent keys anywhere near as reliably as with anything that has at least some kind of buttons for that”. It’s not my brain being stubborn, we’re all wired that way.

Touchscreen typing is crap, and anyone who’s sent a text while a bit sleepy knows what I mean… and with the prevalence these days of phones and tablets it’s not a problem that’s going away any time soon :-/

*Clarity: not literally, I promise! It’s just an extremely frustrating experience, and anticipating that should’ve been easy if they weren’t ‘Morans Guy’ levels of stupid… while there are plenty of smart idiots out there, usually they’re *just* smart enough to avoid dropping a blooper nearly that bad. Usually!

” I don’t know who thought “oh gee typing on a touchscreen is freaking *awesome* and everyone should do it” but I kind of want to dope-slap them*.”

Yes! My feelings exactly. Some persons at the companies who make phones decided *they* didn’t like physical keyboards. So to “prove” that *nobody else* liked them they shifted their product lines to only having physical keyboards on models with lower display resolution, slower CPUs, less RAM, less built in storage, and other features not included that were on their touch screen only models.

Of course the “feature reduced plus keyboard” models didn’t sell as well. That “justified” eliminating keyboards entirely.

The last decent keyboard phone was the Droid 4, a Verizon exclusive. The last time a keyboard phone was anywhere close to a flagship model was the Sprint exclusive Photon Q, but despite being essentially a Droid 4 with a bit faster CPU, and more RAM and storage than the Droid 4, Motorola made the keyboard crappy. In contrast to the sharp and snappy Droid 4 keyboard the Photon Q one is dull, mushy, and suffers from *extreme* key bounce with up to 4, sometimes 5, copies of a letter being read with one key press. Couple that with autocorrect that cannot be overridden (like it can on later phones) so it has to be turned off, the Photon Q was a maddening phone to use for text messaging and email.

Other Sprint phones contemporary with the Photon Q had much higher resolution, faster CPUs, more RAM, storage etc. Putting a bad keyboard on it had to have been a calculated move to kill off keyboard phones. The key bounce could have been fixed in software but Motorola and Sprint refused to do anything about it.

One of the nicest implementations of typing on a touchscreen that I’ve seen was on a tablet audio editing system. The unit had a little solenoid that snapped when a ‘keypress’ was detected. Very satisfying and useful audible and tactile feedback.

Ah, make a strawman and knock it down. I never mentioned any reliability of correlation. It can be pretty safe to disregard quite a lot these days, since there’s so much stuff and so much is good – anything that cuts down the noise, even if you do lose a little, is worth it for many. Duplicate good stuff is duplicate anyway – I don’t need to sample every possible way of saying some information.

Think of putting out a request for job applications for a really good job. If you ever have, you’ll be swamped with zillions of responses. You can safely ditch quite a few of those and still have so many good qualified candidates that you still have to take a long time to decide between them. You _can’t_ hire all the good ones. Anything that gets you there…

The same is true in the information space. There’s a lot of vanity out there – sure, there are smart people who have issues communicating well – I’m sometimes one of them, and have some friends who are worse yet. And plenty that don’t have any issues being clear. If my object is to learn, I’ll go with the latter. If that’s messed up – well, at least I do learn and get it done quicker, which means I can learn more.

I’m also aware that no matter what you say and at what level of detail, someone adversarial can find a nit to pick.

Most of the illiteracy I encounter is due to laziness. Yes, there are other causes, and often one can tell.

How many times do you see “loose” when it’s obvious the writer means lose? To quote James Brown, it’s time to do the tighten it up.

I’ll forgive most misuse of affect vs effect for example, as even when English was well taught they didn’t often get that one driven home. But people who think any language is purely phonetically spelled are just lazy.

And you can tell most often if the author is using their milk tongue due to the types of grammar they use, at least if you’re literate enough to have a smattering of some other languages.

I think the problem is in thinking uneducated or less educated people have nothing to teach you. That’s just plain ignorant. Plenty of people do think someone who doesn’t have X level of education has nothing worth learning from, and I think such people are fools.

Personally, as long as you’re aware of what you’re cutting out, I say filter away. You should also be aware that you risk pigeonholing yourself if you don’t at least occasionally seek out information from some of the more difficult sources, though.

I do this. I have stopped seeing ANY racism, intolerance, or incorrect information on Twitter. All I had to do was NOT look at Twitter. So far, I have not felt like I’m missing anything.

Call me crazy, but maybe, just maybe, they should make a person crop it. Say, the person posting the tweet? It only takes a few seconds, and the human is aware of a little thing called context. You could even call this “crowdsourcing” to please the investors.

You are probably not crazy but investors will be far less pleased with this workload that is considerably higher than one might think. It’s night and day having an algorithm do this from a cost, time, workflow and theoretically even accuracy perspective. I don’t know that most people fully understand just how many posts are happening per second at this scale.

Wouldn’t that workload be happening on the client machine browser? I had thought most of the processing was client side. Compress before send and there’s probably a savings.

You’re not at all crazy. Why not just make a “crop it yourself” interface for the person who’s posting the image in the first place?

If they made it easier for the users to do right, maybe they would.

+1

Nah, they’d complain about having to do it themselves. It’s Twitter; they’re not going to want to put more effort into editing a picture than they put into writing the text.

Isn’t that something that ImageMagick does? If so, that makes things super easy, especially since the vast majority of corporate backends/etc are RHEL or SuSE Enterprise anyways, so they’ll likely already have it built in.

“if the author is using their native language” How do you know who is using their native language?

Phrasing and choice of words will usually give it away. Not many Americans would type “Oh bloody hell!” so you could infer that the writer is likely British in that instance.

I dunno, I’ve lived all my life in the USA, and my closest British relative was my great-grandmother who passed away something like 35 years ago when I was a child. But, because I’m a nerd who watched the old Doctor Who’s on PBS as a kid, and then binge watched Red Dwarf when I got access to BBCA, I tend to sound British when I type. I have had many people in MMORPGs think I was a Brit; though they were often mid-western USA rather than coastal. You know, the kinds of people who think a snog and a shag are the same thing.

“Meritocracy and all that.” Meritocracy is one of the most overused words, especially in the context of people having a confirmation bias that makes them believe they deserve what they get, even if it’s obviously out of proportion compared to the work they did. You could also call it survivorship bias.

Also, it doesn’t really apply here. What you mean is people who are too lazy to properly present their data. But then the next question is: are we making people lazy, or are we improving usability? It’s a fine line.

Twitter has too many posts, so maybe we should indeed focus on asking users to create more quality content over quantity. But that’s not what social media is about… unfortunately.

Agreed with the point of just scaling the photos. If I post a photo, it is up to me to decide what is in it or the proportions/quality/etc, not some out-of-control algorithm.

As for the rest, even we (as humans) are not that good at understanding and quantifying human sensitivities, so those neural network recognition errors should be treated as what they are: just bugs, either from a less-than-good dataset or from imperfect coefficients. Not as something to try to earn victim points from.

Let the user choose which part of the picture to show. Using AI for that is just a waste of resources.

Absolutely this!

+10. Most of the problems we create are just from trying to cater to people’s laziness, usually trying to gain market share while at it.

The problem for Twitter would be that fewer images a second would be uploaded, and they really do want to harvest the geotagging metadata from the EXIF in most smartphone photos left at default settings. They want people to turn off their brains, not force them to think.

“Think of how stupid the average person is, and realize half of them are stupider than that.” – George Carlin

Twitter is not doing anything just to be nice, they are just like google, amazon, facebook, yahoo, microsoft, apple – stockpiling metadata, because it is cheap to store and in 5 -100 years time will be indescribably valuable. Cradle to the grave metadata profiles, they will even track your death if they can.

But what if it gets combined? Let the algorithm do it first. Ask the user if they are happy with the crop, and if not, let the user do it. Then the algorithm can learn from the users what a good crop looks like.

But AI!!!1!

You are underestimating just how few resources AI takes once it is already trained.

My favorite example from a long time ago was the military training an AI to find tanks in pictures. They got really good at it until they gave the AI new pictures and then it was unpredictable.

Turns out that on the training set, all the pictures with tanks were taken on sunny days and the rest on cloudy days.
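The failure in that story is easy to reproduce with a toy learner that simply picks whichever single feature best predicts the label on the training set. Everything below is invented for illustration: the two binary features, the eight "photos", and the helper names `accuracy` and `best_feature`.

```python
def accuracy(rows, feature):
    """Fraction of rows where the feature value equals the label."""
    return sum(feats[feature] == label for feats, label in rows) / len(rows)


def best_feature(rows):
    """Pick the single feature that best predicts the label on `rows`."""
    return max(rows[0][0], key=lambda name: accuracy(rows, name))


# Training photos: every tank photo is sunny, and one tank is partly hidden.
train = [
    ({"sunny": 1, "tank_shape": 1}, 1),
    ({"sunny": 1, "tank_shape": 0}, 1),
    ({"sunny": 0, "tank_shape": 0}, 0),
    ({"sunny": 0, "tank_shape": 0}, 0),
]
# New photos: the weather no longer lines up with the tanks.
new = [
    ({"sunny": 0, "tank_shape": 1}, 1),
    ({"sunny": 1, "tank_shape": 0}, 0),
]

chosen = best_feature(train)
print(chosen, accuracy(train, chosen), accuracy(new, chosen))
```

The learner did exactly what it was asked: it found the most predictive signal in the data it was given. The data just happened to make the weather a better predictor than the tank.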

This was from a PBS documentary called “The Machine That Changed the World.”

I still watch that show from time to time just to marvel at the things that they got right 30 years ago (and some are now much, much worse than they suggest) and how primitive and backwards they were at the time (their episode on the global online community was about Minitel, and dial-up).

Overfitting. A similar story happened with tumor classification using neural networks. The training dataset came from multiple sources, but the vast majority of images of actually cancerous tumors came from a single source. So the network identified and ‘learnt’ the otherwise totally unrelated features of the image, like the markings on the edges and imaging artifacts.

It’s not overfitting; it’s obviously racism.

I’m not sure if that’s overfitting or bad data collection. Either way, that’s probably not what’s going on here. Twitter _should_ have tons of image data to work with.

If the users can crop their text to fit the Twitter character limits, they can learn to crop their pictures as well.

I like the idea of compressing users’ images down to 140 bytes… :)

Yeah, bring back ascii art!

And replace facebook with dialup BBSes while we’re at it ftw!

Well, I can speculate a few dozen hypotheses that do not attribute ‘racist’ attitudes to their owners, too. And frankly, that is not the issue. Twitter is a company made to make a profit; trying to somehow squeeze in some metric of ‘fairness’ or ‘equality’ and be confident in its worth is a challenge of its own.

For example, most users are not African American; the populations of the countries most active on Twitter illustrate this.

Twitter gains profit by engaging users, thus clicking on a (cropped) image is something the platform would like to maximize.

I assume the cropping algorithm is designed (through its loss function) to crop them in a way that maximizes the click rate. And who clicks the most? You guessed it: mostly white, non-black users. Hence we can conclude (assuming the above hypotheses hold to a good extent) that the problem is indeed far worse than the algorithm, and ingrained in the institution’s best interests (profit).

So the question is, is it really a technical problem or an institutional one? How is this institution likely to react? Maybe the trend of calling tech “racist” and such is one more way to distract from the fact that these issues exist and have always existed, reinforced either by non-racist objective conditions (the fact that African Americans are a minority and thus less cost effective to give equal grounding) or by a latent systemic issue that requires a lot more thought and reform than critiquing the seemingly racist behaviour of an algorithm.

Oh, that is actually a pretty good point! I wouldn’t be surprised at all if they were constantly running A/B testing on cropping, and training based on how many clicks each variant of a post received.

I read somewhere that pictures of women on twitter often get cropped to show just their chest – this could be a related effect, where it learned in an intermediate stage that ‘wide’ crops of the face and bust generated more clicks, and then went on to crop the face for yet more clicks (fuelled by teenage boys, I presume…)

I think your assumption that most users are white users is wrong.

There are plenty of east asian, south east asian and middle east users for twitter.

That said, their ad revenue sources and maybe investors might be predominately white.

Their work force might be predominately white.

Although the article dismissed this idea, I think their data set may be predominately white and the learning “learned” to accept the loss of not prioritizing a darker skin face if multiple faces are present.

They may have a “bad” verification set rather than a “bad” training set.

Am I surprised that HAD has focused on the technicalities of the algorithm … No

Am I surprised that HAD readers are focussed on the algorithm … No

However “racist” is mentioned in the title.

The racism here is not the algorithm, nor is it one institution.

The racism here is that people of color have to fight day in, day out, to attain the same level of respect and acceptable treatment that the rest of the community can take for granted.

The management of the development of AI can consider that people of color are a minority who have less public voice, less income to effect change and less access to processes of justice to seek change or compensation. Or in other words, they face equivalent racism in the other institution that could defend them.

The racism here is that this went live in the first place.

The ‘we didn’t know the algorithm would do this’ is not a defence. It can be interpreted as: we didn’t bother to check because we don’t care about racism.

And, incidentally, I am white.

That is not racism. You tested that it works on all colours of face (which it seems to), and just missed the fact that for some reason it prefers the white face (my bet is it’s more confident it identified a face on white than black). Human errors and machine errors happen and always will, even when you do check, as nobody can predict every single possible input or always catch the bias in the output.

It’s only racism if the bias was deliberate rather than an oversight, or if no effort is taken to figure out the hows and whys and then fix it.

If all bias is racism then the existence of populations that are not all a single homogenous colour or exactly even proportions for all colours is racism (so all those African, Middle Eastern and Asian nations just have to be racist by definition too!), rather than simple historical and evolutionary inertia. There is a reason darker folk predominate around the equator and whites the cooler northern climates… And I know you would never get me going anywhere hotter than here. I find it too damn hot to cope here far too often…

According to current supreme court ruling, intent doesn’t matter.

If the outcome is discriminatory, it is treated the same as if its intent was discriminatory.

I am not saying the supreme court is always right. I am just saying this is our current standard for discrimination.

– Exactly – ‘so what’ – the algorithm probably has a preference between long and short hair, hair color, male and female (“oops”), dogs vs cats, yada-yada. Even if you manage to somehow force the algorithm through exorbitant amount of time into it to seem balanced between white and black dataset, someone will throw an image set at it that will return otherwise. And you’ll probably still have a preference between who knows what – asian vs hispanic, etc… Unless there was blatant training to prefer white vs black, people need to seriously lighten up.

Or the algorithm has to do better.

What’s wrong with striving to become better?

What is wrong with demanding fair treatment?

They also didn’t know if the algorithm caused cancer. Should they have run randomised trials to check for that before they released it?

There’s any number of things they could have checked for, and the fact it’s taken so long for anyone to notice this suggests that no one had thought this was something that should be checked for.

Did anyone check that having an even character limit wasn’t racist? Perhaps 141 or 139 would be less racist? Of course not, that would seem absurd – as did the idea that cropping to faces could be racist until this story broke.

Don’t assume that failure to spot something means institutional racism.

“The racism here is that people of color have to fight day in, day out, to attain the same level of respect and acceptable treatment that the rest of the community can take for granted.”

That is a frequent claim that is simply incorrect. It’s just that many black people identify with their skin color, instead of other properties, that are less obviously visible.

In general, people who are a minority of any kind, and that includes white people who are “outsiders”, will not be catered for.

Have you seen ads recently? Tell me how close they are to average and normal people. Especially the “diverse” ads are so cringeworthy, as they try to depict fashionable people of all colors, but still close enough in general style and looks. It’s not real diversity and difference.

Compare that to a group of people that was mixed naturally, came from different background, and countries, and suddenly you will get a much more natural and very different looking picture.

People get discriminated against in all kinds of ways, frequently, and no, it’s not acceptable, but you have to stop thinking you are the only one, or that you are specially targeted. The mainstream (or the assumed mainstream) caters to its needs, and that’s it. This is not a conspiracy of white people against you. Those thoughts actually create racist thoughts against white people who are not racist at all, nor part of the mainstream.

So stop making these things a race question, and remember that it’s about in and out-groups, in general, and affects far more people than your preferred group.

Anything that deviates from the mean is affected. Period.

There’s a leap of logic in your hypothesis. What makes you think twitter users are more likely to click on images of white people?

I wonder if anyone has done testing on this. Would be hard to do correctly (i.e. in a way that represents reality).

Related question: could be my ignorance of Twitter’s business model, but how does clicking on the photo result in profit? Does it generate additional hits on ads?

More celebrities and politicians are white?

I’ll bet the rate at which people in the US clicked on “old men with fake tan and yellow hair” went up statistically over the last 4 years.

I find the automatic cropping of images upon upload very irritating; it often perfectly crops out the most important part of the image, or removes the context. You should always be presented with a crop box or a “choose thumbnail” style window when uploading an image. Why would you ever use AI for that when cropping in a web UI has been commonplace for over a decade?

I’m beginning to see how the Human vs Robot war begins. Our “Natural Intelligence” will always detect some imagined implicit bias in the “Artificial Intelligence”, no matter how unintentional it is.

I can imagine a world with educational AI devices cheap enough for every child to have one, but they’ll have to be smart enough to detect the natural differences in individual thought processes, as well as be able to handle irrational, or emotional responses effectively, and be able to determine causal differences like trauma or mental illness. But it must be able to imbue trust in the student, and be able to detect and explore any perceived flaw, whether real or imagined by the student, and explain itself. It will have to be friend, teacher, and psychologist all in one. Lofty goals.

You always do as Teddy says? I’d go terrifying not lofty. (A Harry Harrison short story I believe – damn scary premise, raise children with the machine in a Teddy Bear so they become incapable of acting in certain ways having been conditioned from birth)

I think I’ll stick with meat intelligence for the time being.. Pure logic has no place while humans still require belief/trust without proof to live (and if we ever reach the stage where we 100% know how everything works the universe will just have to be replaced by something stranger). And so far our AI’s aren’t even as smart as Ants – no adaptability.

I don’t know how you are quantifying “smart as ants”, but AI has beaten humans at many things (chess, Go) and is somewhat close on others. Also, neural network stuff doesn’t run on “pure logic”, just interaction of a bunch of simulated neurons.

Indeed quantifying intelligence is tricky – but computers beating us at games that are heavily logic, mathematics or lookup table based – what a surprise. That is the computer’s function. But the learning algorithms that interact with imperfect or unpredictable input don’t work that well, and solving puzzle-type interactions – like “I can’t cross this chasm, but I happen to have x and y and can make z” – requires a leap of logic to use something for a purpose it was not intended for. It’s not in the ‘rules’ the machine has.

So far no ‘AI’ is anything but very specifically designed and trained to process one very specific input and give one specific type of output. Throw enough of them together and eventually you might get there…

That’s the thing, though. Go is not logic based. Go is about feeling empty space and how a piece influences the balance of power in an area. And then you also have Starcraft 2. Another game where you cannot can the logic up.

All games are logic based. Anything that has a specific objective and a rule-set that must be observed in reaching that goal relies on logic. That’s how you win. You take the path most likely to lead to victory based on your opponent’s actions and constrained by the game rules. Some games just have more options and hence a larger possible space of actions at any given time and a larger set of possible future actions. You just end up beyond the realistic limit of the human ability to consciously pattern match in real time. But more and more powerful computers can search the tree of possible moves more completely and identify more complex input patterns faster.

Art is an example of something that is not logical, or at least less logical than most other things. Even the attractiveness of shape and colour are based on the underlying rules of the physiology of the viewer.

Exactly as Josh says – and the computers can cheat and not even look at the logic (beyond legal moves) – just look up the current board state and which moves have historically led to winning. Over the course of a relatively small number of games out of the potential total it can get rather good without even trying to comprehend the tactical play. The faster the computer gets, the nearer to real-time it can do this, and because the computer doesn’t ever forget (in theory), every wrong or great move that has ever been made from that game state can be saved.

I got bored of Chess quite early because it started to become so predictable and I’m just a dull old human – despite the huge number of potential chess games you can eliminate a huge percentage of them because they require one or both players to wilfully choose to not win the game when they have the chance, and then the same exact tactical situations will keep reoccurring too (even more so if you ignore piece colour and just consider which side plays next and the current board layout).

I do think Go is much more fun, but it’s still got a logic to it; it’s just complex enough that you end up playing on instinct more than calculation, as you can’t store and process it all in your head.

The Young Lady’s Illustrated Primer.

Super underrated book but also definitely not mainstream. The Drummers pretty much preclude it from any reputable institution’s reading lists. Didn’t Amazon’s Kindle team read the book as part of their team building?

” Our “Natural Intelligence” will always detect some imagined implicit bias in the “Artificial Intelligence”, no matter how unintentional it is.”

While I agree this is not racism, your view shows an overconfidence in the machine’s decision. Datasets are very hard to make unbiased, it doesn’t matter if intentional or not, they are not objective.

Assuming irrationality, trauma or mental illness are the reasons to detect this borders on arrogance.

When you communicate with people, misunderstandings are common, and you have to be willing to explain your perspective to do so successfully. Nothing surprising there, machines will have to do so as well.

There is a difference between being touchy and a bias, even if unintentional (but not inconsequential!), in an algorithm or system.

Automated driving has similar issues, and when it makes mistakes, your “irrational” or “trauma” explanation won’t work in court. You have to be able to explain the *rational* causes for a decision the car/machine took.

I like the idea of just letting the user be in charge of what is in the image, and scale it correctly. And if it isn’t scaled right, then twitter just scales it to fit.

Nevertheless:

AI and computer learning is still in its infancy and the only way for it to grow is for it to continue to be used and updated as time goes on. So, yes there are going to be errors as we go through this learning curve; and yes, in this case we need to make it better at recognizing people, animals, plants, and objects, but I also think that people should just get a life and stop applying human bias to a computer. Enjoy the ride and laugh at the mess-ups on the way.

Real problem is, it’s not really an AI at all. It would pick lemon instead of an avocado, because it’s simply a computer program using contrast.

Face recognition is a thing.

And yes, it may include using techniques for contrast. (finding edges)

And yes, the term AI is over used.

And yes, machine learning doesn’t need to be applied to every application.

Depending on the problem, there may be a simpler and more accurate solution than AI.

e.g. image processing/filtering kernels/masks, statistical models, etc.

Considering the fact that a few changes to pixel values can make a NN see a panda as a monkey I am pretty sure there will always be false classifications and if you don’t take the google route and stop your NN from ever producing outputs that might in some cases be racist I don’t see how this problem can be solved.

By improving classifiers and reducing false positives you can get fewer of those results, but as with any real-world process there will always be noise and randomness and sooner or later this randomness will get you and will ruin your day.

Maybe it is time to think about whether a computer calling a human a monkey should spark the amount of outrage that it did.

I am not saying the result shouldn’t be looked at to figure out what went wrong, but just in the same way a plane being called a human would be analysed. And calling a human a plane is objectively “much more wrong” than calling a human a monkey (we share a common ancestor after all). Just because one is much more offensive than the other should not make it worse (in terms of loss function).

Right you are, and making the function ‘worse’ on calling a human a monkey than calling them something stupid because that mistake is offensive to some doesn’t help in the long run. You have to feed these networks useful feedback so they tend towards better results effectively. It can’t possibly learn correctly if you keep futzing with it because that near-miss mistake is unacceptable.
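To make the disagreement concrete, here is a minimal sketch of what “more wrong” means numerically, using the cat / lion / airplane example from the article. The cost tables and probabilities are made-up illustrative numbers, and `expected_cost` is a hypothetical helper, not anything Twitter or Google is known to use.

```python
def expected_cost(probs, true_label, cost):
    """Average penalty for a prediction, given a table of per-mistake costs."""
    return sum(p * cost[true_label][label] for label, p in probs.items())

# Hypothetical cost tables for a picture whose true label is "cat".
uniform = {"cat": {"cat": 0.0, "lion": 1.0, "airplane": 1.0}}   # every mistake equal
weighted = {"cat": {"cat": 0.0, "lion": 1.0, "airplane": 3.0}}  # "airplane" is worse

# Two equally confident wrong answers: one leans "lion", one leans "airplane".
near_miss = {"cat": 0.2, "lion": 0.7, "airplane": 0.1}
far_miss = {"cat": 0.2, "lion": 0.1, "airplane": 0.7}

for name, table in (("uniform", uniform), ("weighted", weighted)):
    print(name,
          expected_cost(near_miss, "cat", table),
          expected_cost(far_miss, "cat", table))
```

With the uniform table both mistakes cost the same, so the network gets the same size of correction for either. With the weighted table the “airplane” mistake costs more, so training pushes harder against it. Which table is the right one is exactly the judgment call being argued about here.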

But this is the problem. You train the neural network to do the “right” thing, and it does something socially abhorrent. Train it to be social, and it can’t always be right. They’re stuck.

you can have 2 separate networks(or more), you know.

you can train one for its primary function and train the other for social function.

the social network does not have to feedback into the primary network.

Or just accept that the identifications will occasionally be wrong, and insulting. Issue the standard apology and wait for the ever increasing training data to mean it doesn’t make the mistake (in theory, with the sheer amount of data on a social network, it should get pretty damn good rather fast).

The socially abhorrent element is people being stupid – as long as it’s fixed when it’s flagged up as incorrect, there is nothing to get worked up about – some dumb machine buggered up in the process of getting smarter.

You don’t go round lobotomising dogs and children for screwing something up in a way that could be considered offensive, you tell them they got it wrong and what the correct answer is. And in the future they probably get it right.

As controversial as this question may be, i have to ask it: Would we be having this conversation if it was white faces that were being cut out?

If we do it this way, are we just shifting the “burden” onto minorities that are being impacted? which may add more stress to their potentially already stressful life.

I don’t know John, personally I don’t use social media type stuff willingly anyway – this website is the closest I get. So I really don’t know what it’s like to use, as it seems awful as soon as you look at it… (But maybe I just don’t understand it?)

I’m not sure if it’s fair to call it a burden. Particularly if you take that approach and make it clear that is what you are doing: when it goes and calls President Trump a potato or Tom Cruise a missile or other such stupidities, it’s the same fix methodology, and it should trend towards actually correct in the end.

As Cristi asks if it was white faces getting cut would it be noticed or matter? – I actually doubt it would be spotted for quite a while partly as most pictures of famous groupings are so heavily white or entirely white the odds of the one or two minority members being in enough photos to really be massively overrepresented is low. And when it does get noticed the White population being largely a rational comfortable majority wouldn’t kick up the fuss the more downtrodden feeling minorities do. At least not so globally.

I do think it’s the only ‘sane’ option though – much as it might bring critics, it’s the only way a dynamic learning model can learn correctly – to do and improve based on accurate feedback. Otherwise I don’t think the models will ever really trend to getting it right – too much false feedback and bad data.

I think you are making the assumption that they are training on an “unbiased” data set.

Also “false-positives” is not the issue here. The issue is a consistent network bias.

It is fine if the issue appears to happen “randomly” from random “noises”.

It is different if it is happening consistently with not so “random” features.

I mean do we expect people with different skin tone to take pictures together?

I would say the answer is yes.

Reminds me of the 35mm film industry: they said the same things about their biased films and how the science made it impossible to show dark skin tones correctly, until the chocolate companies complained that the photos of light chocolate were too dark and furniture companies had similar problems with different wood types. They listened to them, solved the color range issues, and released the more expensive dynamic range films. On the other, affordable films that work best with lighter skin color, they wrote “normal skin color” on the box.

Look it up.

I went to get some pictures colour copied on a trip and the skin tone came out wrong. I asked the person if there was some “processing” option. (I had read about how different countries have different adjustments to account for skin tones, and that popped up in my head.) Sure enough, he turned off the “correction” and fixed the problem.

Looked it up. http://www.openculture.com/2018/07/color-film-was-designed-to-take-pictures-of-white-people-not-people-of-color.html

Some links of interest there.

This is an unfortunate systemic failure.

I think this problem applies to many things, but people focus on skin color too much.

Many people get neglected, think of color vision differences. Not only is it wrongly called color blind (which would imply you don’t see colors at all, but in real it means you not only see some color shades less distinctively, but others more distinct).

How many modern designs completely ignore this, and make it hard to read and view graphs.

Again, it’s the majority of people deciding, and being unaware (or uncaring), and just picking what they like the most, “aesthetically”.

A real and consistent issue in education that gets little attention.

There are many other examples, where people who don’t fit the average or norm are seen as defective, and ignored, while they are just different from the norm and a minority.

But people get so touchy when it’s about race. I assume because this “minority” is not that small at all…

“Not only is it wrongly called color blind (which would imply you don’t see colors at all, but in real it means you not only see some color shades less distinctively, but others more distinct).”

What difference does it make? Would it make life easier for anyone if we renamed it to “involuntarily selective color perception” or “differently abled visualisers”? No, it doesn’t. All it does is complicate things needlessly. Perhaps we should be less worried about what we call things, and more worried about the thing itself.

I don’t think its the naming they are really objecting to – but the ignorance of what it is, and lack of care in creating so folks with it can still find a way to see it normally.

How many folks out there can’t get an electrician’s license because the wiring colour schemes happen to use two colours that look similar to them, for example? Some forethought, and perhaps adding other identifying patterns on the insulation, and suddenly they could legally do the job, and everyone would probably find it easier too.

Same thing with wheelchair access – because nobody is taught to think about it and asked to figure out what it’s like to live with, there are lots of places where that access is clearly an afterthought (if it exists at all) to meet the legal requirements.

I think the “much worse” part of this problem is actually if they are using click-through rates to train their neural network. That is, you select the top two (say) crops, and randomly A/B test them against users. The one with the higher ‘engagement’ (probably not ‘click on image’ because that probably correlates to a *bad* crop, but replies/retweets/amount of time looking at that particular tweet before scrolling past) gets fed back into the network as the ‘better’ crop.

If that sort of algorithm is at work, twitter’s crop is bad precisely because it is reflecting an underlying unconscious racial bias in its readership. That’s pretty hard to correct, and as the youtube recommendation algorithm problems demonstrate, that sort of bias tends to be self-reinforcing.
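A toy model of that self-reinforcing loop, purely as a sketch: assume two candidate crops, A and B, that the system shows in proportion to how well each has performed so far. The engagement rates (0.55 vs 0.45) and the update rule are assumptions made up for illustration; nothing here is known about Twitter’s actual pipeline.

```python
def update_share(share_a, engagement_a, engagement_b):
    """New share of crop A after one round of engagement-weighted feedback."""
    won_a = share_a * engagement_a            # engagement earned by crop A
    won_b = (1.0 - share_a) * engagement_b    # engagement earned by crop B
    return won_a / (won_a + won_b)

def simulate(rounds, engagement_a=0.55, engagement_b=0.45, share_a=0.5):
    """Track how often crop A is shown, starting from an even split."""
    history = [share_a]
    for _ in range(rounds):
        share_a = update_share(share_a, engagement_a, engagement_b)
        history.append(share_a)
    return history

history = simulate(20)
print([round(share, 3) for share in history[::5]])
```

Even though the audience in this toy only leans slightly towards crop A, each round feeds the previous round’s advantage back in, so the share of A climbs steadily towards 100%. The small initial preference is not averaged out; it is amplified.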

So in the scale of badness, I ‘hope’ that this is simply inadequate training data on non-white faces. That’s the easiest problem to fix.

If they are using that sort of feedback from the users to suggest which is the better crop, ‘racism’ is a certainty, as the population you are receiving feedback from isn’t even close to an even split. And people tend to naturally lean towards things that look more familiar – which is perfectly understandable and not at all racist in itself, but will always bias the results towards whichever face looks most like most of the users….

I’m not even sure if I think that is wrong… As its not at all racist as an algorithm in that case, nor is it bad that user feedback promotes ‘better’ images.. But on the other hand its hard to be different and feel ignored, and doesn’t help create the appearance of balance or ‘correct’ the users subconscious into finding all skin types etc familiar (Which I am not sure is a good idea either – the idea of computers deciding and pushing automatically somehow seems worse than if a human is doing it to me).

Maybe the crop they show you should be selected based on your own profile – which images do you linger over.. So the computer shows you what you apparently want to see even if you are a minority… All that user tracking and preference data that can never be misused… right?…

“But on the other hand its hard to be different and feel ignored,”

It’s more than that. If you just choose what most people would pick, how do you ever grow and create excellence? Excellence is rare, and would consequently be averaged out, unless some people recognize its value.

But as you know from science and how it gets its grants, quality of the work is not the deciding factor. “Impact” or in other words how impressive it is, matters a lot more.

It would be equally wrong to just hype uncommon things.

The truth is that you cannot solve this statistically; it needs domain knowledge and expertise and time spent in a certain domain.

And statistical solutions just lead to creating a *mono culture*.

This is a big issue, completely independent from discrimination.

The fake diversity that is often presented, with average “pretty” people of slightly different skin color, is just another form of mono culture.

If they’re using click through rates, that might explain cases where the crop algorithm focused on women’s chests…

I’d bet $ that this is exactly what happened ^. Remember: engagement => money. Twitter’s goal is to make money, therefore the process(es) that increase(s) engagement will be the ‘naturally fittest’.

Asking people to self-crop is too high friction; this will lead to less engagement. Adding crop tools will cost time and money. Adding methods to the API so Twitter can inform/ask the API client to crop is also not feasible.

Paying somebody to suggest how a bunch of images should be cropped is expensive. Paying somebody to crop every image is more expensive.

So far, we’re not optimizing the $ earned/kept or the primary resource that generates $.

However, it’s ‘free’ to pick $n different ways to crop an image and keep track of how the variants perform across different twitter demographics. Large portions of the technology required to do this already exist inside Twitter so re-purposing some existing components for this new task is relatively easy.

Once you have clear results that show that variant $n.8 is the one that has the highest engagement among some population, you start using the algorithm behind variant $n.8 for everything that a given population will see. If you’re good, you’ll occasionally re-perform the experiment to make sure that $n.8 is still the best for that population.

Twitter’s user base is probably majority heterosexual and white and male, so if you’re not sure what population a user falls into (perhaps they’re new or not signed in), then you presume they’re a member of the most populous population. You show them the thumbnail with the white guy or the woman’s chest.

Turns out, stats/AI/ML is just a new type of mirror.

Anybody remember Microsoft’s Tay? Yeah, similar concept here… except people intentionally tried to ruin that.

“I’d bet $ that this is exactly what happened ^. Remember: engagement => money. Twitter’s goal is to make money, therefore the process(es) that increase(s) engagement will be the ‘naturally fittest’.”

Ironically, what makes the most money (short term), is not at all the fittest. It is the most matching to the average person, but that’s not a quality in itself.

“I think the “much worse” part of this problem is actually if they are using click-through rates to train their neural network.”

Google has “optimized” their search results by popularity for several years now. Since then it has been much harder to find relevant content, because more mainstream concepts or “likes” are preferred.

It’s another way to average out everything in a race towards the bottom.

Nice title in terms of click bait coefficients. But the article actually invalidates it. All the things mentioned are part of the algorithm. A more accurate title could be “it’s not the algorithm, it’s much worse … It’s the algorithm”.

We can agree to disagree, then, about whether the specific loss function used is part of “the algorithm”.

I would claim that the whole system consists of the data that it’s trained on, the algorithm used, the loss function chosen, and the training method.

Of these four components, I made the case in the article that it wasn’t the data, or the algorithm, but rather the loss function that could plausibly be to blame. And while different ML algorithms are well studied, how to square predictive loss with some kind of socially-aware loss function isn’t.

Get it?

(We can argue about whether or not this is true. Heck, it’s all basically conjecture at this point.)

“I would claim that the whole system consists of the data that it’s trained on, the algorithm used, the loss function chosen, and the training method”

See that’s where the confusion comes from. The part you separate as the algorithm does not make any sense. Do you mean the inference?

Image related tasks predominantly are handled by neural networks, which are defined by the network topology (number of layers, types of layers, sizes of each layer etc.) and the activation function(s), which determine how each neuron’s output is calculated. Then there’s the back propagation part, where the coefficients of the neurons are updated according to a bunch of meta parameters to decrease the error. This is the NN AI algorithm.

Hope this makes sense.

I understand where you’re coming from. To you, “the algorithm” is everything involved in the entire process. To me, it’s just the network — your topology and activation functions.

The rest is data and fitting practice. To me, these are separate from “the algorithm” b/c they involve further human involvement: selection of the dataset, oversight of training, picking metaparameters, etc.

Meh. Potato, potato.

But I really don’t like to be accused of sensationalism.

Whatever the AI design, its training must always fit its design; inverting image colors in processing, where the AI is trained for this, would have been just as easy. Poor design and implementation, combined with greedy leadership! How many symptoms will we find subsequently?

Yeah….something like this or modify all training images to balance shades to some nominal color and intensity.

“Which is essentially what Google did — their “workaround” was to stop classifying “gorilla” entirely because the loss incurred by misclassifying a person as a gorilla was so large.”

So instead of figuring out why a person is misclassified as a gorilla and finding a solution, let’s just throw out that data set and move on. Yeah… I see great things coming from applications of AI in the future. “I, Robot” anyone?

I think it could just be a photographic issue, as lighter-skinned people often have more contrast visually. Why are they cropping them for people’s faces at all? What if you wore a shirt with a realistic photo of a person on it? Would it zoom in on the shirt? You can’t trust an algorithm to do a human’s job. Like others have said, just scale the picture, and if the people in it are indistinct, it is the photographer’s fault.

Talking about racism and offence in context of some minor software bug is the biggest problem here.

Thank you. Exactly this.

So…

Google discriminates Gorillas?

I thank that non existing entity that I don’t use twitter.

This opinion piece doesn’t belong on HackaDay. Not because it’s not a hack, but because it’s just fire for the flame war. It’s not intentional, it’s a bug. Mistakes happen, they’re working on it, and pieces like this only do harm for the situation.

I disagree, and I don’t think you read the piece.

Of course it’s not intentional — my whole point is that it’s almost necessarily baked in.

Simple solution – shut down Twitter! Imagine what could be done with all the collective energy people waste shouting at each other on that platform.

you are ignoring human nature.

people like to use it. that’s why it still exists.

No, there’s a simpler solution. Repeal Section 230.

That would basically kill every website with user submitted content. No.

Good. Bring back web 1.0.

Section 230 is badly written. Whilst its purpose is to protect “owners of interactive computer services” from liability towards 3rd party material on their websites, what it ends up doing is protecting said owners from any liability whatsoever. There has to be liability for breaking your own ToS. There has to be liability for election interference. There has to be liability for political and ideological discrimination on a world wide scale.

The problem is that these platforms are no longer the tiny isolated forums that Section 230 was aimed at. Twitter and Facebook have millions of users. They are used as communication platforms for the entire world. And as we’ve seen, they hold immense power over public opinion. They can manipulate votes on a large scale. You cannot just let so much power go unchecked. And it is Section 230 that enables this abuse of power.

Section 230 doesn’t exist on a worldwide scale. So “worldwide” is a bit of a stretch.

Europeans, Russians, Indians, Chinese, Africans, and a whole lot of other people get to determine how liability works for their citizens.

I never said Section 230 has worldwide scale. I said Twitter has worldwide scale, which it does. And rather than having the US Army rolling into foreign countries, delivering freedom, i’d much rather that they would deliver freedom of speech to countries that don’t have it.

No, it would kill censorship of user submitted content.

Yes.

This always happens: after I finished writing this, I thought of another way that the Twitter algo could in particular be failing.

What if they trained the NNet on “find me the 400 x 400 pixels with the highest likelihood of containing a face in the center”? If one person scores an 85% confidence, and the other an 84%, it picks the 85% every time. Train the NNet on more white faces than black ones, and it’ll have extra confidence in the former. Bam.

IOW: it could just be this implicit maximum (pick the highest probability of face) that’s putting the NNet in a knife-edge situation, where they perform notoriously badly.

Quick solution: randomize the choice across the classified areas, weighted by their classification probabilities. Then if the white guy scores 85% and the black guy 84%, they’ll show up in the crop almost equally.
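A minimal sketch of the difference between the two selection rules, using the 85% / 84% figures from above. The detection labels and the helper names `pick_argmax` and `pick_weighted` are hypothetical; this only illustrates the idea and says nothing about how Twitter’s cropper is actually built.

```python
import random

def pick_argmax(detections):
    """Always return the detection with the highest confidence."""
    return max(detections, key=lambda d: d[1])

def pick_weighted(detections, rng):
    """Sample one detection with probability proportional to its confidence."""
    weights = [confidence for _, confidence in detections]
    return rng.choices(detections, weights=weights, k=1)[0]

detections = [("face_a", 0.85), ("face_b", 0.84)]

rng = random.Random(0)  # seeded only so the run is repeatable
counts = {"face_a": 0, "face_b": 0}
for _ in range(10_000):
    label, _confidence = pick_weighted(detections, rng)
    counts[label] += 1

print("argmax always picks:", pick_argmax(detections)[0])
print("weighted picks:", counts)
```

The argmax rule turns a one-point confidence gap into a 100% / 0% outcome, while the weighted draw gives each face a share of roughly 0.85 / 1.69 versus 0.84 / 1.69, which is close to an even split.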

So it could be that their algorithm was dumb. It may not be the loss function, necessarily. Still, that goes for the Google example…

If this is the case, Twitter owes me a beer.

It doesn’t just look for faces though, it’s taught on eye tracking data, as is publicly published and easily findable.

I find it odd you didn’t see this during research for the article:

https://blog.twitter.com/engineering/en_us/topics/infrastructure/2018/Smart-Auto-Cropping-of-Images.html

I didn’t look too deeply into it, honestly, but I would have guessed that they abandoned saliency after the “cropping to breasts” fiasco.

A week ago they promised to open source the algorithm so I guess we’ll see:

https://thenextweb.com/neural/2020/09/21/why-twitters-image-cropping-algorithm-appears-to-have-white-bias/

Careful. What I read is that they’ve agreed to open source “the results” of their inquiry.

Which I think means that they’re just going to publish a report, with the extra add-in PR buzzword “open source” to make it sound like they’re going to be super transparent.

But if we all get shown the code, I’ll gladly eat my pessimistic words. Wait and see.

Indeed I expect that is the cause – they say they tested it and it cropped to black faces fine. And that seems to be true when there are only black faces to look at.

Increasing the training dataset might not help much though: under most lighting conditions the white faces have lots of contrast, so features are more easily distinct. With the really, really black skin tones, seeing any depth in the image is not certain; they can end up looking more silhouette than portrait. Getting over that requires more than just more images, I’d suggest – it probably also needs better cameras (as many of those have lots of postprocessing to skew the images to look ‘right’ despite a rubbish lens). IFF every photo was taken by a pro photographer in the right light, such that the darker skin tones don’t disguise the contrast badly, then just a larger dataset would work. Otherwise the learning algorithm isn’t really going to be able to classify from the bad photos as clearly – it might well look at the artefacts the phone camera created as its cue, for example – which only works on that style of camera, so it picks up blacks shot on the iPhone 6 but not the later models, etc.

Hopefully the random choice of x% confidence faces is rolled out, as it should help. Though I expect that will still look racist, as the population in photographs tends to be short on ethnic minorities when it’s a mixed bunch. Statistically that isn’t a surprise at all: if 20% of the population are black, then in a perfectly fair world with no bias they would still only get 1/5, and we know this isn’t true – many photos will have much less than that percentage of any ethnicity, or be almost entirely one group (a family photo, perhaps).

I worked on a project in the early 10’s using the opencv haar cascade to detect faces in a webcam feed. We definitely had trouble with darker skin and would always get lower confidence values. We also had trouble with people who had a lot of hair that was close (in grayscale) to their skin color, for example a white woman with curly blonde hair and a white man with lots of gray hair and a big gray beard. The worst detection happened with a very dark skinned Indian man on our team with a big beard. We were able to improve our results quite a bit by 1) detecting all ROI in our image with a low threshold 2) equalizing the histogram of those ROIs and 3) seeing if the confidence of ROIs increased when you equalized the histograms.
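For anyone who hasn’t met histogram equalization (step 2 above), here is a rough pure-Python sketch of what it does to a low-contrast region. It works on a flat list of 8-bit gray values rather than a real image, the sample values are made up, and `equalize_histogram` is a hypothetical stand-in written for illustration, not the OpenCV routine the project used.

```python
def equalize_histogram(pixels, levels=256):
    """Spread the gray values of a region across the full 0..levels-1 range."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution: how many pixels are at or below each level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:          # region has a single gray level; nothing to stretch
        return list(pixels)

    scale = (levels - 1) / (total - cdf_min)
    lookup = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [lookup[p] for p in pixels]

# A dark, low-contrast region: every value sits between 20 and 31.
dark_roi = [20, 22, 22, 25, 25, 25, 30, 31]
equalized = equalize_histogram(dark_roi)
print(min(dark_roi), max(dark_roi), "->", min(equalized), max(equalized))
```

The values that were squeezed into a narrow dark band end up stretched over the whole range, which is why a detector that keys on edges and contrast can report higher confidence on the equalized region than on the original.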

This was before woke culture really became a thing and we were able to have a rational discussion about how a dark skinned person’s face reflects fewer photons into a camera than a light skinned person’s face. Our product never worked out, but we also implemented a low light cutoff where our product would refuse to work under poor lighting conditions, even though hypothetically it would work anyway with a light-skinned only group.

This seems like the most sensible explanation by far. I’m no expert on AI, but programming specifically for a case where you have two hits and one has a higher confidence, and anticipating that the difference in confidence levels will fall along lines of skin tone, seems nearly unforeseeable.

In other words, why would the AI have lower confidence on dark faces vs light? People talk about contrast and things, but that’s speculation. We don’t know what the nnet is keying on, so who could anticipate that the differences in skin tone would cause a confidence differential?

I’ll also suggest a variation: if the nnet is trained on individual images, and the issues are only coming up on a strip or matrix of images, like the test cases, it could be that the presence of multiple images affects its confidence as well. Also important to consider the question being asked of the machine: not only “is there a face?” but also “where is it?” Confidence of whether it’s a face or where the face is could go down dramatically with a strip of images (how does the AI perceive that? I’d be very surprised if it was smart enough to see it as multiple distinct images). So if they tested it on a bunch of individual images of varying skin tones but not with strips, they could miss it. I would venture to guess that the cropping AI was designed for long images, not a strip like a photo booth.

A variation on that same theme, is the JPG algorithm as processed in the source software stack.

Perhaps like in the catastrophic failure of the chemical film industry, they trained to “Normal” http://www.openculture.com/2018/07/color-film-was-designed-to-take-pictures-of-white-people-not-people-of-color.html .

In fact, evidence that this happened at a United States federal level is here: https://www.nist.gov/system/files/documents/2016/12/12/griffin-face-comp.pdf

http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf

I have seen in the comments in a few locations a reference to how there is more data required to capture dark complexions. Perhaps in compressing that data to JPG, facial features are lost to a greater degree than when a lighter tone is compressed, leaving us with a 1 or 2% difference in quality of facial features after compression.

In fact, I would question whether the standards developed for JPG compression were tuned to light complexions, and whether all that is really required is to adjust the JPG compression algorithms to make larger file sizes and capture more detail, so that the data exists at capture.

This is based on a presumption of raw data captured for darker complexions being higher than light complexions. If this is a faulty position I am happy to toss the postulation, and I was unable to find a reliable source for this.

I would like to be a part of the solution, and I hope I have not added to the problem.

If your population is predominantly white, “normal” is technically the correct term.

What people don’t realize is that “normal” or “healthy” is used a lot in medicine too, to describe things that deviate from the norm (therefore normal) as not normal or not healthy, even though it’s just differences, like in color vision, for example.

But only where the lobby is strong do people notice how it could be perceived and are shocked, when in reality classifying people as normal and not is a weird concept in general, but very common.

Another common issue is training dataset bias. Getting good labeled data for training is not an easy task. Whatever biases are in it will be embedded into the NN, so it will act based on the training dataset. This is a really common issue, as it is very hard to find a dataset that covers the majority of the possible inputs. Whatever is outside of this is essentially treated as noise, as the networks have no cognitive capabilities.

Another issue could be the application of transfer learning, where the input and output parts are stripped from a pretrained network and replaced with untrained ones, then the new training data is fed through it. This can greatly reduce the time required for training the network, compared to, say, randomly initialized weights, but it can carry some hidden biases that are not uncovered during the training.
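A toy sketch of the transfer-learning idea in plain Python: the “pretrained” layer here is just a made-up fixed weight matrix (`FROZEN_W` is hypothetical, not taken from any real network), and only a new logistic output layer is trained on top of it. For simplicity only the output side is replaced. The point is that the new layer can only work with whatever features the frozen part exposes, so anything the frozen part ignores or distorts stays hidden from the new training.

```python
import math

# Hypothetical "pretrained" weights: frozen, never updated during training.
FROZEN_W = [[0.8, -0.3], [0.1, 0.9]]

def features(x):
    """Frozen layer: fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=500, lr=0.5):
    """Fit only the new logistic output layer on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(head, x):
    w, b = head
    f = features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

# Tiny made-up dataset: label 1 when the first input dominates.
DATA = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 0.2], 1), ([0.1, 1.0], 0)]

if __name__ == "__main__":
    head = train_head(DATA)
    print(predict(head, [1.0, 0.0]), predict(head, [0.0, 1.0]))
```

Note that `features([0.0, 1.0])` and `features([0.1, 1.0])` both have a first component clipped to zero by the ReLU: whatever distinguished those inputs in that direction is gone before the new layer ever sees it.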

Mostly we don’t really understand what actually happens inside a NN.

Twitter does not stop people from starting their own platform and perform the kind of speech they like there.

So on that count, I would like to disagree.

I don’t think our 1st amendment rights apply to platforms.

That said, I do hope they agree with that spirit and allow as much speech as possible.

On the issue of disinformation, I think it is tough.

It is so easy to create disinformation and so much more needs to be done to disprove it.

I think accredited information sources might be a solution.

Where publications/individuals need to earn a reputation to be classified as a credible source.

It doesn’t mean other sources are not credible.

It just means some institutions or individuals have spent the effort in establishing themselves as credible.

Of course, the accreditation process needs to be transparent and so do the results.

Something like a credit score, but with more details.

“Twitter does not stop people from starting their own platform and perform the kind of speech they like there.”

That is true, but what Twitter does is use their influence to defame any platform others might create. Still, Parler seems to be gaining traction.

“I don’t think our 1st amendment rights apply to platforms.”

I think they should, especially when we’re talking about something as big as Twitter and Facebook. This isn’t a tiny forum with 20 users. Government bodies and officials are using these platforms to communicate with people. It has virtually replaced snail-mail, telephones, and speaker’s corner. It’s the new marketplace of ideas. If you’re not allowed to communicate with your elected officials, is that not a violation of free speech? If you’re not allowed to communicate openly in the same space as the rest of the world, is that not a violation of free speech?

“I think accredited information sources might be a solution.”

You’d think that, but as we’ve seen, it’s not. Because who accredits said sources? Facebook uses far left companies to fact check their posts, which means a lot of factual and accurate conservative content gets wrongly flagged as false. Zuckerberg, in his silence, pretty much admitted to this. We end up in a vicious circle of “who watches the watchmen?”. Who accredits the accreditors? There are huge companies that claim to fact check posts, but anyone with eyes to see would find them rotten to the core.

The true solution is to cultivate a mentality of skepticism and fact checking, where the default position is to see for yourself what was said, rather than digest a pre-canned opinion piece.

It doesn’t matter how big the platforms are.

Our 1st amendment rights in almost all contexts only protect us from the government and not private entities.

“Congress shall make no law…abridging freedom of speech.”

You can still reach your senator or house member by snail mail or email or they might have their own website/forum.

I am not saying social media is not powerful. They are.

I am not saying they don’t have a huge audience. They do.

I am just saying you have a choice to start your own platform that is “better” or a different platform that is “better” made by other people.

However, I am also fairly certain “they” “care” about business/ad revenues from all sides and there are both left leaning and right leaning people working in these firms.

As for accreditation, that is why I said it needs to be accompanied by relative transparency.

We need to see detailed report cards for each accreditation.

But yes, at some point, the public needs to be the judge on which sources are credible.

That said, there should be a mechanism that keeps score and corroborates stories.

“Our 1st amendment rights in almost all contexts only protect us from the government and not private entities.”

Okay, so let’s change that. Tyranny shouldn’t get a pass just because it comes from a private company.

@Anonymous

You think we should overturn the 1st amendment and let the government dictate free speech for private entities and individuals?

Are you sure that is what you want?

@John Pak

Yeah sure.

@anonymous @John Pak

How about a third option: if everyone had the same level of outrage for the other issues at Twitter as they did for an AI bug, maybe it would effect change.

The thing people often misunderstand about the 1st amendment is that it protects Twitter too. And that’s the way it should be. It’s there to limit the power of the state, not to protect individuals from corrupt individuals or corporations. But that doesn’t make Twitter’s actions right either. The right to free speech and free press is universal, and censorship is wrong, just not illegal as long as it’s not the state doing the censoring. In the same way, offensive speech is wrong but not illegal.

YMMV outside the USA.

@Grey Pilgrim

So if the government called itself a private company, suddenly censorship would be okay? I’m not sure I follow the logic here. All censorship is bad and should be resisted, full stop.

@Grey Pilgrim

Unfortunately, some issues are not as “black and white” to some people. (no pun intended)

I mean, even on this issue, there are people still saying: “chill out. they didn’t do it on purpose.”

Or “We cannot expect these kinds of algorithms to be “perfect”.”

I am not saying those are not valid points, but I don’t think they are acceptable excuses for intrinsic bias on such a service.

I think that’s right. The problem is that the image cropping is doing something unintended and unexpected, and that it’s also offensive b/c it seems to be doing it according to skin color.

I’m very interested to hear how the Twitter folks fix it, but my guess is that this problem is deep enough that it’s not going to be easy. And worse, it’s going to be a band-aid, fixing up this one problem, but not the source of similar problems.

@anonymous

Not sure what you mean, but I think you’re trying to make the point that censorship is bad no matter who is doing the censoring, and I agree with that.

I am just trying to bring some perspective on the Bill of Rights and what they really mean, and who they apply to. The distinctions are not trivial. Take a look at this recent case: https://law.justia.com/cases/federal/appellate-courts/ca9/18-15712/18-15712-2020-02-26.html

The case is between PragerU (educational media company) and YouTube. YouTube had restricted some of PragerU’s videos because (PragerU alleges) of political differences, and in doing so violated PragerU’s First Amendment rights. There’s a lot going on there, but the highlights are:

– Plaintiff’s argument fails because the defendant is not a state actor.

– Defendant’s argument is based partly on application of First Amendment protection to itself: if the state were to intervene, it would be a violation of YouTube’s rights.

This case was framed by PragerU as crucial to the future of the free world (see the video about it https://www.prageru.com/video/prageru-v-youtube/), but it puts it in perspective when you consider that the worst thing that happened to PragerU is that it has to either put up with the restricted mode limitations (videos are all still available to my knowledge) or host the restricted videos on its own servers, which it already does—and going on right now there is real censorship by governments that will torture and kill their own citizens to further a political agenda.

The point is, as long as the internet itself isn’t censored, there will always be means of getting information out there regardless of whether big tech is trying to suppress it. You can get your daily dose of non-politically-correct news and opinions from email groups, internet forums, news/media sites, and talk radio—and be happy that you’re not going to disappear or be sent to a re-education camp (assuming you live in the US or another generally free state).

I’ll also note that these freedoms are central to some important issues for hackers, many of which have been written about here on HaD, like right to repair/hack on my tractor/ventilator/everything else. Freedom of Press protects HaD, and I for one am glad that it at least has not become simply one more outlet for people to vent their political frustrations.

Perhaps I’m a simpleton, but why can’t the learning phase just ignore skin tone and vary/normalize contrast or use gray scaling, to create an abstract face devoid of color? There’s a lot more to detecting faces than skin tone.

I am not sure if I am right, but from my understanding:

Skin tone will still exist with gray scaling…

If you think about it, color is just 3 sets of gray scaling…
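A quick way to check that in Python: convert two colors to gray with the common Rec. 601 luma weights and compare. The two RGB triples are made-up stand-ins for a lighter and a darker skin tone, not measured values.

```python
def luma(rgb):
    """Rec. 601 luma: a weighted sum of the R, G and B channels."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

# Hypothetical example colors, chosen only for illustration.
LIGHTER = (224, 172, 105)
DARKER = (141, 85, 36)

if __name__ == "__main__":
    print("lighter tone as gray:", luma(LIGHTER))
    print("darker tone as gray: ", luma(DARKER))
```

The two grays come out clearly different, so the tone difference survives the conversion; gray scaling drops hue, not brightness.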

I remember Twitter.

So you don’t actually know, eh? As for the Google gorilla incident (which may have been confected like the webcam one was), if you had children and participated in their development (rather than leaving most of the work to your partner) you would notice that if they are exposed to images of apes they will learn to label them “monkey!” every time they see one, but if you then show them an image of a dark or obscured face they will also say “monkey!”; similarly all old and ugly people can get labeled “monster!”. It is simply because the shortest path from the input to a known category results in those errors/simplifications, and that may suck if you are on the wrong side of that differentiation, but until AI learns to operate more symbolically as well as considering context you will continue to see such infantile phenomena. If you don’t like it, avoid Twitter and join the hordes of other people who have grown tired of that lunatic asylum. So how do you train a NN to deal with exceptions? You have to feed it a large enough training set of exceptions and have the network fine grained enough, but even then you will have edge (or ridge) cases between minima/maxima that will fall one way or the other depending on something as subtle as the rounding error in your maths library. They probably need to randomise that rounding function, and pay the huge computational cost that implies. I.e. it is a question of maths and money, or the price we pay to save the planet from that climate catastrophe your mates keep going on about (the one that does not exist), because computation consumes energy and we all know how energy is produced… I for one look forward to our near future fusion powered AGI overlords and the endless nanofabricated trinkets they make for us.
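The rounding-error point is easy to demonstrate in Python with ordinary floating point. In this sketch, two hypothetical class scores are built from exactly the same terms, just added in a different order; a classifier that picks the larger score would break this tie purely on summation order.

```python
def score(terms):
    """Sum the terms left to right, as a naive accumulation loop would."""
    total = 0.0
    for t in terms:
        total += t
    return total

# Same three contributions, different order of accumulation.
score_a = score([0.1, 0.2, 0.3])
score_b = score([0.3, 0.2, 0.1])

if __name__ == "__main__":
    print(score_a, score_b)
    print("winner:", "A" if score_a > score_b else "B" if score_b > score_a else "tie")
```

Mathematically the two scores are equal, yet they differ in the last bit, so an input sitting exactly on the boundary falls one way or the other depending on an implementation detail.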

“similarly all old and ugly people can get labeled “monster!”.”

That’s definitely a culture/parental bias that is shown here, not natural at all for kids to think that way. Kids are mostly a reflection of their environment and influences.

You already equate old with ugly, many people don’t. That your kid reflects your thoughts is completely natural, but shows more about you than your kid…

Especially Asians seem to love to talk about (yellow, white, black) monkeys, I heard that frequently…

So, an algo that will center on a face that expresses multiple points of definition in contrast to shadows and city lights. Hmmm.

Didn’t Tesla have a problem with images of fake road signs and pedestrians?

Can it also be said that “pale skins” are more noticeable on camera in poor or low light conditions, and thus can be discriminated against more easily, because cameras can see their facial features more easily?

Let’s just put this stupidity in the urn where it belongs.

To quote Morgan Freeman speaking to “the worst journalist of the year” (ding) Don Lemon..

“The more you talk about it, the more it will exist..”

“Just stop talking about it and you won’t be thinking it!!!” (Aka mental patterns..)

Twitter is cancer btw. Pure and simple.