If you watch many espionage or terrorism movies set in the present day, there’s usually a scene where some government employee enhances a satellite image to show a clear picture of the main villain’s face. Do modern spy satellites have that kind of resolution? We don’t know, and if we did we couldn’t tell you anyway. But we do know that even with unclassified resolution, scientists are using satellite imagery and machine learning to count things like elephant populations.

When you think about it, counting wildlife in its habitat is a hard problem. First, if you go in person, you disturb the animals you want to count. Even a drone is probably going to upset timid wildlife. Then there is the problem of covering a large area and figuring out whether the elephant you see today is the same one you saw yesterday. Guess wrong and you will either undercount or overcount.

The Oxford scientists counting elephants used the Worldview-3 satellite, which can collect imagery of up to 680,000 square kilometers every day. You aren’t disturbing any of the observed creatures, and since each shot covers a huge swath of territory, the problem of double counting all but vanishes.

Not Unique

Apparently, counting animals from space is nothing new. The brute-force approach is to have a grad student count the animals in a picture, but automated methods work in certain circumstances. Everything from whales to penguins has been counted from orbit, but typically against a background of water or ice.
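Why does a plain background help so much? Against uniform water or ice, anything bright enough can be treated as an animal, and counting reduces to counting connected blobs of pixels. Here is a toy sketch of that idea using a simple threshold plus flood fill. It is not necessarily the method any of these studies used, and the pixel values are made up:

```python
from collections import deque


def count_blobs(image: list[list[int]], threshold: int) -> int:
    """Count 4-connected regions of pixels brighter than `threshold`.

    This only works when the background is uniform (e.g. dark water),
    so that every above-threshold region is an object of interest.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold and not seen[r][c]:
                count += 1  # found the first pixel of a new blob
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:  # flood-fill the rest of this blob
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] > threshold and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Toy "ocean" tile: 0 = water, 9 = bright animal pixels (made-up values).
tile = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 0, 0, 9, 0],
    [0, 0, 0, 0, 9, 0],
    [9, 0, 0, 0, 0, 0],
]
print(count_blobs(tile, threshold=5))  # 3
```

On a forested, rain-soaked landscape, a fixed threshold like this would also pick up trees, shadows, and mud, which is where a trained model comes in.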

There have even been efforts to deduce animal populations from secondary data. For example, penguin counts can be estimated by the stains they leave on the ice. Yeah, those stains.

However, when it came to counting elephants at Addo Elephant National Park in South Africa, there was no clear background. The terrain is forested, and it frequently rains. The other challenge is that the elephants don’t always look the same; for example, they cover themselves in mud to cool down. Can a machine learn to pick out individual elephants in high-resolution images from space?

How High?

The Worldview satellites have the highest resolution currently available to commercial users. The resolution is down to 31 cm. For Americans, that’s enough to pick out something about 1 foot long. That may not sound too impressive until you realize the satellite is about 383 miles above the Earth’s surface. That’s roughly like taking a picture from New York City and seeing things in Newport News, Virginia.
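For a sense of scale, the angle involved is tiny. A minimal sketch of the arithmetic, using only the figures above, the small-angle approximation, and the assumption that the satellite is looking straight down:

```python
# Figures quoted in the article.
GSD_M = 0.31            # 31 cm per pixel on the ground
ALTITUDE_MILES = 383    # approximate altitude above the surface
METERS_PER_MILE = 1609.344


def angular_pixel_size(gsd_m: float, altitude_m: float) -> float:
    """Angle in radians subtended by one pixel (small-angle approximation, nadir view)."""
    return gsd_m / altitude_m


altitude_m = ALTITUDE_MILES * METERS_PER_MILE
theta = angular_pixel_size(GSD_M, altitude_m)
print(f"altitude ≈ {altitude_m / 1000:.0f} km")         # altitude ≈ 616 km
print(f"one pixel ≈ {theta * 1e9:.0f} nanoradians")     # one pixel ≈ 503 nanoradians
```

That is about half a microradian, roughly the angle a 5 mm object subtends from 10 km away.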

The researchers didn’t specifically task the satellite to look at the park. Instead, they pulled historical images from passes over the park. You can find out what data the satellite has, although you might not get the best or most current data without a subscription. But even the data you can get is pretty impressive.

According to the paper, the archive images they used cost $17.50 per square kilometer. Ordering fresh images pushes the price to $27.50 per square kilometer, with a minimum purchase of 100 square kilometers, so satellite data isn’t cheap.
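A quick cost estimator makes the pricing concrete. This is a sketch assuming the 100 km² minimum applies only to fresh (newly tasked) orders, which is one reading of the sentence above; the example areas are hypothetical:

```python
ARCHIVE_USD_PER_KM2 = 17.50
FRESH_USD_PER_KM2 = 27.50
MIN_FRESH_KM2 = 100  # minimum order quoted for fresh imagery


def imagery_cost(area_km2: float, fresh: bool) -> float:
    """Estimated imagery bill in USD.

    Assumption: the 100 km² minimum applies only to fresh orders.
    """
    if fresh:
        billable_km2 = max(area_km2, MIN_FRESH_KM2)
        return billable_km2 * FRESH_USD_PER_KM2
    return area_km2 * ARCHIVE_USD_PER_KM2


print(imagery_cost(100, fresh=True))   # 2750.0
print(imagery_cost(40, fresh=True))    # 2750.0 (billed at the minimum)
print(imagery_cost(40, fresh=False))   # 700.0
```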

Training

Of course, a necessary part of machine learning is training, and humans did the counting to provide the reference answers. A test dataset had 164 elephants across seven different satellite images. Using a scoring algorithm, humans averaged about 78% and the machine learning algorithm about 75%, not much difference. Just like humans, the algorithm did better in some situations than others and could sometimes hit 80% for certain kinds of matches.
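The article doesn't say which scoring algorithm was used. For detection tasks, a common choice is an F-score built from true positives (detections matched to a labeled elephant), false positives (detections with no elephant), and false negatives (elephants nobody found). A minimal sketch, assuming the matching step has already been done; the counts below are hypothetical, not the paper's:

```python
def f_beta(true_pos: int, false_pos: int, false_neg: int, beta: float = 1.0) -> float:
    """F-beta score: beta > 1 weights recall (missed animals) more than precision."""
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical result on a 164-elephant test set:
# 130 correct detections, 20 false alarms, 34 elephants missed.
print(round(f_beta(130, 20, 34), 3))            # F1
print(round(f_beta(130, 20, 34, beta=2.0), 3))  # F2, favoring recall
```

Choosing beta is a design decision: for a census, missing an elephant may matter more than a false alarm a human can reject, which argues for beta above 1.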

Free Data

Want to experiment with your own eye in the sky? Not all satellite data costs money, although the resolution may not suit you. Obviously, Google Earth and Google Maps can show you some satellite images. USGS also has about 40 years’ worth of data online, and NASA and NOAA have quite a bit too, including NASA’s high-resolution Worldview. Landviewer gives you some free images, although you’ll have to pay for the highest-resolution data. ESA runs Copernicus, which has several types of imagery from the Sentinel satellites, and you can also get Sentinel data from EO Browser or Sentinel Playground. If you don’t mind Portuguese, the Brazilians have a nice portal for images of the Southern Hemisphere. JAXA, the Japanese analog to NASA, has its own site with 30-meter resolution data, too. Then there’s one from the Indian equivalent, ISRO.

If you don’t want to log in, [Vincent Sarago’s] Remote Pixel site lets you access data from Landsat 8, Sentinel-2, and CBERS-4 with no registration. There are others too: UNAVCO, UoM, Zoom, and VITO. Of course, some of these images are fairly low-resolution (as coarse as 1 km per pixel), so depending on what you want to do with the data, you may have to look to paid sources.
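Counting pixels shows why resolution matters so much: at 1 km per pixel, an entire square kilometer is a single pixel, while at 31 cm it is over ten million. A small sketch using resolutions mentioned in this article (square pixels assumed):

```python
# Ground sample distances mentioned in the article, in meters per pixel.
RESOLUTIONS_M = {
    "Worldview-3 (31 cm)": 0.31,
    "JAXA (30 m)": 30.0,
    "coarse products (1 km)": 1000.0,
}


def pixels_per_km2(gsd_m: float) -> float:
    """Number of square pixels needed to cover one square kilometer."""
    return (1000.0 / gsd_m) ** 2


for name, gsd in RESOLUTIONS_M.items():
    print(f"{name:>24}: {pixels_per_km2(gsd):,.0f} pixels per km²")
```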

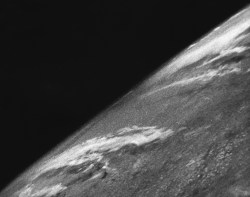

There’s a wide array of resolutions as well as types of data, such as visible light, IR, or radar. Still, it all beats the state of the art in 1946, when the V-2 photo above was taken from 65 miles up. Things have come a long way.

Sky High

We imagine that these same techniques would work with airborne photography, such as you might get from a drone or even a camera on a pole. That might be more cost-effective than buying satellite images. It made us wonder what other computer vision projects have yet to burst onto the scene.

Maybe our 3D printers will one day compare their output in real time to the input model to detect printing problems. That would be the ultimate “out of filament” sensor and could also detect loss of bed adhesion and other abnormalities.
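One way such a check might work, sketched under heavy assumptions: render the expected footprint of the current layer from the model as a binary mask, segment a camera image of the print into a similar mask, and compare the two with intersection over union (IoU). The masks, the 0.9 threshold, and the (omitted) segmentation step are all hypothetical:

```python
def iou(expected: list[list[int]], observed: list[list[int]]) -> float:
    """Intersection over union of two same-sized binary masks (1 = plastic present)."""
    inter = union = 0
    for row_e, row_o in zip(expected, observed):
        for e, o in zip(row_e, row_o):
            if e and o:
                inter += 1
            if e or o:
                union += 1
    return inter / union if union else 1.0  # two empty masks agree perfectly


def layer_ok(expected, observed, min_iou: float = 0.9) -> bool:
    """Flag the layer as bad when the observed print diverges too far from the model."""
    return iou(expected, observed) >= min_iou


expected = [[0, 1, 1, 0],
            [0, 1, 1, 0],
            [0, 1, 1, 0]]
# Filament ran out partway through: the bottom row never got printed.
observed = [[0, 1, 1, 0],
            [0, 1, 1, 0],
            [0, 0, 0, 0]]
print(iou(expected, observed), layer_ok(expected, observed))
```

A lifted corner from lost bed adhesion would show up the same way, as pixels that shift out of the expected mask.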

Electronic data from thermal imaging or electron beam stroboscopy could also deliver pseudo-images as input to an algorithm like this one. Imagine training a computer on what a good board looks like and then having it identify bad boards.
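A very simple version of “learn what a good board looks like” is per-pixel statistics: average thermal images of known-good boards, then flag pixels on a new board that sit many standard deviations from that average. This is a plain statistical baseline, not the neural network used for the elephants, and the temperatures below are made up:

```python
from statistics import mean, pstdev


def fit_good_boards(images: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-pixel mean and standard deviation over flattened images of good boards."""
    pixels = list(zip(*images))  # regroup values by pixel position
    return [mean(p) for p in pixels], [pstdev(p) for p in pixels]


def anomalous_pixels(image: list[float], means: list[float], stds: list[float],
                     z_max: float = 4.0, floor: float = 0.5) -> list[int]:
    """Indices of pixels more than z_max standard deviations from the good-board mean.

    `floor` keeps a pixel with near-zero variance from tripping on tiny noise.
    """
    return [i for i, (v, m, s) in enumerate(zip(image, means, stds))
            if abs(v - m) > z_max * max(s, floor)]


# Flattened 4-pixel "thermal images" (°C) of three known-good boards.
good = [[30.0, 41.0, 29.5, 35.0],
        [30.5, 40.0, 30.0, 35.5],
        [29.5, 40.5, 30.5, 34.5]]
means, stds = fit_good_boards(good)
suspect = [30.2, 40.4, 55.0, 35.1]   # pixel 2 is running hot
print(anomalous_pixels(suspect, means, stds))  # [2]
```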

Of course, you can always grab your own satellite images. We’ve seen that done many times.

It would be interesting if Planet Labs could implement that or provide a dataset.

I love their idea of “queryable earth”. Imagine being able to query “SELECT COUNT(elephant) FROM earth”

ERROR 1054 (42S22): Unknown column ‘elephant’ in ‘field list’
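For what it’s worth, that error is exactly what you’d expect: `COUNT(elephant)` asks for a column named `elephant`. If each detection were stored as a row, the count would filter on a label instead. A toy sketch using Python’s built-in sqlite3 module, with a hypothetical `detections` table and made-up rows:

```python
import sqlite3

# Hypothetical schema: one row per detected object, not one column per species.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE detections (image_id TEXT, label TEXT, lat REAL, lon REAL)")
conn.executemany(
    "INSERT INTO detections VALUES (?, ?, ?, ?)",
    [
        ("img_001", "elephant", -33.45, 25.75),  # made-up coordinates
        ("img_001", "elephant", -33.46, 25.76),
        ("img_002", "buffalo", -33.50, 25.70),
    ],
)

# "elephant" is a value to filter on, so it belongs in WHERE, not inside COUNT().
(count,) = conn.execute(
    "SELECT COUNT(*) FROM detections WHERE label = ?", ("elephant",)
).fetchone()
print(count)  # 2
conn.close()
```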

DROP TABLE humans FROM DATABASE earth

(Yes, that’s not valid SQL; I don’t know it.)

Congratulations, the new word “Officer…Scan” has been indexed by all major search engines. :P

Yep, probably should have been a … but to most human eyes those do look the same.

“If you watch many espionage or terrorism movies set in the present day, there’s usually a scene where some government employee enhances a satellite image to show a clear picture of the main villain’s face. ”

More often, they manage to instantly get a live feed from such a satellite and track the bad guys as they drive through a major metropolitan area without a problem.

And sometimes they will instantly access the appropriate high-resolution traffic cams, as well as get pinpoint locations from the bad guys’ cellphones.

Movies are a bit ridiculous, but around 2000 I heard they had resolution good enough to pick out a baseball; then, about 10 years ago, they could theoretically see a dime on the sidewalk. So I guess by now they should be able to tell if your kid is bringing head lice home from school.

He’s not your kid…😏

Considering how connected everything is (and it’s only getting more so), along with advances in computing and storage, we may not be that far from that dream.

Yes, I doubt it’s just one snapshot from one camera; it needs a whole mess of connectivity and computing. For example, air stability data from the entire GPS constellation could be processed into a distortion model of the air column over the target, which is probably a supercomputer job for the level of detail needed. Even then, they probably don’t get many days a year when the atmosphere is stable enough; a tropical depression on one side or the other of the target probably messes up the slices from the GPS satellites on the far side of it. Then they probably have to do some kind of frame stacking. They probably still have rooms full of analysts, too, screening the pictures for the particular “Where’s Waldo” of the minute. For a high-value target who was “aware,” as Bin Laden was, they probably need to watch lower-level associates, like the target’s doctor, lawyer, and accountant, and find the center of the pattern. All that makes for mountains of data to thrash through.

I don’t think GPS error data would help, because that’s caused by the ionosphere, not the lower levels of the atmosphere. The ionosphere is pretty rarefied and probably wouldn’t have much effect on optical seeing. Also, the problem is less severe than seeing in ground-based astronomy, because the distorting atmosphere is close to the target rather than close to the camera, resulting in less angular error. If it does need compensation, I think a good way to do it might be to record many frames from different angles as the satellite flies over the target and watch how the ripples move in parallax against the background of the ground. Doing that would also allow applying superresolution algorithms to the multiple ground images, regardless of compensation for atmospheric distortion.
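The superresolution idea can be illustrated in one dimension: if several coarse frames sample the same scene at known sub-pixel offsets, interleaving them recovers a finer sampling grid. This is a bare-bones form of shift-and-add that assumes ideal point sampling, exactly known offsets, and no blur or noise, none of which hold for a real satellite:

```python
def capture(scene: list[float], factor: int, offset: int) -> list[float]:
    """Simulate a coarse sensor: keep every `factor`-th fine-grid sample, starting at `offset`."""
    return scene[offset::factor]


def shift_and_add(frames: list[list[float]], factor: int) -> list[float]:
    """Interleave frames whose offsets are 0, 1, ..., factor-1 fine-grid samples.

    Assumes frames are ordered by offset and all have the same length.
    """
    out = [0.0] * (len(frames[0]) * factor)
    for offset, frame in enumerate(frames):
        out[offset::factor] = frame  # drop each frame's samples into its own slots
    return out


scene = [0, 1, 4, 9, 16, 25, 36, 49]   # fine-grid "ground truth"
factor = 2
frames = [capture(scene, factor, k) for k in range(factor)]
print(frames)                          # two coarse frames, 4 samples each
print(shift_and_add(frames, factor))   # recovers the 8-sample scene
```

Real pipelines have to estimate the offsets from the images themselves and deconvolve the sensor blur, which is where most of the difficulty lies.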

The Rayleigh criterion sets a limit on resolution, although it may be possible to cheat it a bit with heavy computer analysis. Using visible light and assuming I haven’t made any errors in my math, a 2-meter diameter mirror in a satellite at 200 km above the Earth’s surface has a limiting resolution of about 60 mm = 2.5 inches. If you put a dime on a large black area you could probably see a very dim fuzzy spot where the dime is, but there wouldn’t be enough contrast to detect a dime on a concrete sidewalk.
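The figure checks out. Assuming green light at about 500 nm (the comment only says “visible light”), the Rayleigh criterion θ = 1.22 λ / D, projected onto the ground with the small-angle approximation for a straight-down view, gives:

```python
def rayleigh_ground_resolution(wavelength_m: float, aperture_m: float, altitude_m: float) -> float:
    """Smallest resolvable ground separation in meters from the Rayleigh criterion.

    theta = 1.22 * wavelength / aperture (radians), times altitude (small-angle, nadir view).
    """
    theta = 1.22 * wavelength_m / aperture_m
    return theta * altitude_m


res = rayleigh_ground_resolution(wavelength_m=500e-9, aperture_m=2.0, altitude_m=200e3)
print(f"{res * 1000:.0f} mm ≈ {res / 0.0254:.1f} in")  # 61 mm ≈ 2.4 in
```

At 550 nm the same mirror gives about 67 mm, so “about 60 mm” depends on which part of the visible band you pick.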

Higher resolution requires smaller wavelengths or bigger mirrors, and it’s inconvenient to put big things into Earth orbit. Aperture synthesis could do better, but it may never be practical for this application.

I could see aperture synthesis being easier in space than on the ground, because you could use direct optical interferometric methods without having to pull a vacuum on a whole apparatus containing the separated mirrors.

This is Doctor Who nonsense-speak

Remodulate the confinement beam!

Speaking from my experience as a photographer, the lens system on the satellite would have to be pretty impressive to get that close. Also, you straight up can’t get good video at the same definition as the photos. Turbulence in the atmosphere makes light rays wiggle, and that severely blurs fine details, so the images are stacked to average out the effect. They’re capable of something like 5 fps or thereabouts.
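Why stacking helps: if each frame carries independent random error, averaging N co-registered frames cuts that error by roughly √N. This sketch models the turbulence only as random per-pixel noise on a made-up 1-D scene; real pipelines also have to register the frames (the “wiggle” moves features around) and often keep only the sharpest ones:

```python
import random
from statistics import mean


def stack(frames: list[list[float]]) -> list[float]:
    """Average co-registered frames pixel by pixel (mean stacking)."""
    return [mean(values) for values in zip(*frames)]


def rms_error(image: list[float], truth: list[float]) -> float:
    """Root-mean-square difference between an image and the true scene."""
    return mean((a - b) ** 2 for a, b in zip(image, truth)) ** 0.5


random.seed(1)
truth = [10.0, 50.0, 90.0, 50.0, 10.0] * 20   # toy 1-D "scene", 100 pixels
noise = 8.0                                   # per-frame random error (std dev)
frames = [[v + random.gauss(0, noise) for v in truth] for _ in range(16)]

single = rms_error(frames[0], truth)
stacked = rms_error(stack(frames), truth)
print(f"one frame: {single:.2f}, 16 stacked: {stacked:.2f}")
```

With 16 frames, the stacked error should come out near a quarter of the single-frame error.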

I may have worked on a long lens for an unnamed three-letter group, and I too am a photographer. However, the custom part I saw/imaged would surprise you: compact, yet amazing specs.

Strange that no-one has mentioned the elephant in the room…

+1 to the count?

If there are around 1.2 billion houses in the world, with an average of 4 rooms per house, that’s 4.8 billion elephants.

“According to the paper, the archive images they used cost $17.50 per square kilometer. Asking for fresh images pushes the price to $27.50 and you had to buy at least 100 square kilometers, so satellite data isn’t cheap”.

Seems pretty cheap to me. If you count whales from an aeroplane or a boat, it’s going to be considerably more expensive per km² covered. Even an underpaid grad-student researcher on the ground using a Land Cruiser and a drone is going to cost a lot more than that per counted elephant.

Not to mention, if you only need to order a minimum of 100 square kilometers for fresh satellite photos, that means you only need $2,750. That’s, all things considered, really cheap for what you’re getting—high-quality photos from space!

Agreed. $2750 is cheap compared to every other way to do this. Travel costs to & from the preserve alone…. Sure, it’s not pocket change but compared to any alternative? Heck, if they don’t need 100 square kilometers, they should get together with other groups to do a lump buy.

Thank you for this article. It’s a fascinating example of what can be achieved with space technology.

With respect to monitoring animal populations, there is (in my opinion) something even cooler, the Icarus project of the German Max-Planck-Gesellschaft: https://www.youtube.com/watch?v=e_KNyhQMjOY.

Small battery-powered radio beacons are attached to animals down to the size of birds or bats and send position information and other data to an antenna attached to the International Space Station (ISS). With this data, it is possible to observe the movement patterns of animal populations. There is even a smartphone app anyone can install to track individual tagged animals. The MPG guys call it “the internet of animals”.

More antennas are planned in space to also cover high-latitude regions, which currently cannot be reached because of the limited inclination of the ISS orbit.

“The resolution is down to 31 cm. For Americans, that’s enough to pick out something about 1 foot long”

Hmm. Harry Nyquist might have something to say about that.
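Nyquist’s point, roughly: with samples every 31 cm, the finest repeating pattern the image can represent has a period of two pixels, about 62 cm, and anything finer aliases into a coarser false pattern. (Detecting that a lone bright object is present is generally an easier question than resolving detail at that scale.) A small sketch of the aliasing arithmetic, using a made-up stripe pattern that repeats every 0.5 m:

```python
def alias_frequency(signal_freq: float, sample_rate: float) -> float:
    """Apparent frequency after sampling, folded into [0, sample_rate / 2].

    Units here are cycles per meter and samples per meter.
    """
    return abs(signal_freq - sample_rate * round(signal_freq / sample_rate))


GSD_M = 0.31                  # sample spacing on the ground, meters
fs = 1 / GSD_M                # about 3.2 samples per meter
nyquist_period = 2 * GSD_M    # finest representable pattern period: 0.62 m

# Hypothetical stripes repeating every 0.5 m, finer than the Nyquist limit.
true_period = 0.5
apparent_period = 1 / alias_frequency(1 / true_period, fs)
print(f"limit {nyquist_period:.2f} m; 0.50 m stripes look like {apparent_period:.2f} m stripes")
```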

Remote detection and identification of wildlife is definitely a necessary step forward in terms of effective and efficient conservation.

However, one important issue with satellite imagery is also mentioned in the referenced ZSL publication, though many readers may overlook it. The study required images with a low cloud cover percentage and a small off-nadir angle for the elephant count to succeed. While the latter might not be that significant, the weather definitely is: over a period of 5 years, they could select only 11 images that met the specification…
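The selection step described here amounts to a filter over the archive catalog. A sketch with a hypothetical `Scene` record; the field names, thresholds, and catalog entries are invented for illustration, since the comment doesn’t give the study’s cutoffs:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scene:
    acquired: date
    cloud_cover_pct: float
    off_nadir_deg: float


def usable(scenes: list[Scene], max_cloud_pct: float, max_off_nadir_deg: float) -> list[Scene]:
    """Keep scenes that are both clear enough and viewed close enough to straight down."""
    return [s for s in scenes
            if s.cloud_cover_pct <= max_cloud_pct and s.off_nadir_deg <= max_off_nadir_deg]


# Made-up catalog entries; thresholds below are hypothetical, not the study's.
catalog = [
    Scene(date(2015, 3, 2), 45.0, 12.0),
    Scene(date(2016, 7, 19), 2.0, 8.5),
    Scene(date(2017, 1, 5), 0.5, 31.0),
    Scene(date(2018, 6, 11), 4.0, 14.0),
]
picked = usable(catalog, max_cloud_pct=5.0, max_off_nadir_deg=20.0)
print([s.acquired.isoformat() for s in picked])  # ['2016-07-19', '2018-06-11']
```

Tightening either threshold shrinks the usable set quickly, which is the commenter’s point about cloudy regions.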

In our research, we often face this exact problem with satellite images over Africa: the period of cloud-free days in the African savannah is short, yet the management of endangered animal species needs to run all year round. Where can they get the data from?

Synthetic aperture radar satellites can see through clouds—that’s their main advantage as far as I know. (SAR may also give more distinctive signatures of objects on the ground due to its inherent sensitivity to distance, but I don’t know if that’s helpful in practice.) Commercial SAR constellations are starting to be launched, and there will probably be lots of them in a few years.

Good point. SAR, however, does not (yet) have the high resolution required. Current satellite SAR data has (please correct me if I’m mistaken) resolutions of around 2 to 8 m.

What’s that “electron beam stroboscopy” mentioned at the end? I did a Google search and see lots of people using what sounds like it, but no introduction to the technology.

Also, I see my reply to Pascal just above is “awaiting moderation”. Does having one of my comments caught by the spam filter (for containing a couple of URLs and not much else—understandable) mean that my next few comments are also caught, even if they only contain plain text and no URLs?