Have you ever tried waving your hand around like a magic wand and summoning a calculator? We would guess not since you’d probably look a little silly doing so. That is unless you had [Andrei’s] cool gesture-controlled calculator. [Andrei] thought it would be helpful to use a calculator in his research lab without having to take his gloves off and the results are pretty cool.

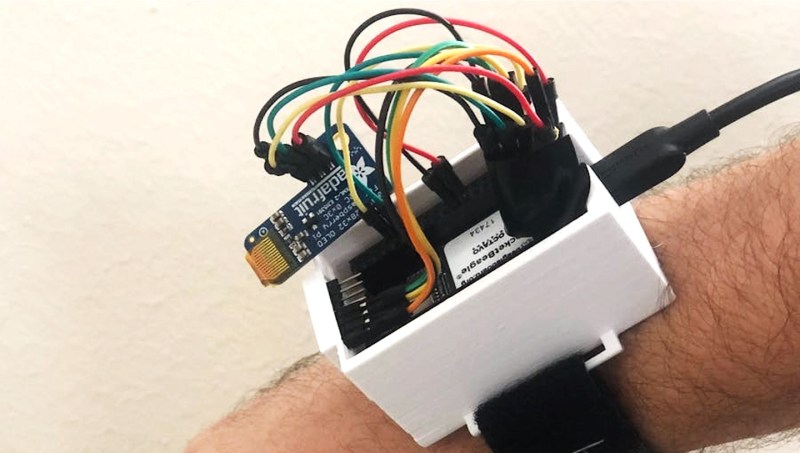

His hardware consists of a PocketBeagle, an OLED, and an MPU6050 inertial measurement unit for capturing his hand motions using an accelerometer and gyroscope. The hardware is pretty straightforward, so the beauty of this project lies in its machine learning implementation.

[Andrei] first captured a few example datasets to train his algorithm by recreating the hand gestures for each number, 0-9, and recording the resulting accelerometer and gyroscope outputs. He processed the data first with a wavelet transform. The intent of the transform was two-fold. First, the transform allowed him to reduce the number of samples in his datasets while preserving the shape of the accelerometer and gyroscope signals, the key features in the machine learning classification. Secondly, he was able to increase the number of features for the classification since the wavelet transform resulted in both approximation and detailed coefficients which can both be fed into the algorithm.

Because he had a small dataset, he used the Stratified Shuffle Split technique instead of the test train split method which is generally more suited for larger datasets. The Stratified Shuffle Split ensured approximately the same number of train and test samples for each gesture. He was also very conscious of optimizing his model for running on a portable processing unit like the PocketBeagle. He spent some time optimizing the parameters of his algorithm and ultimately converted his model to a TensorFlowLite model using the built-in “TFLiteConverter” function within TensorFlow.

Finally, in true open-source fashion, all his code is available on GitHub, so feel free to give it a go yourself. Calculatorium Leviosa!

I wonder if you could use this to guess the emotions an Italian tries to express with their hand gestures while telling a story. :D

Good one.

A number of years ago, I volunteered to watch a street barrier for a judged High School Marching Band parade.

One of the judges was taking “notes” into a portable cassette recorder (e.g. Walkman) as he walked along the route.

It amused me that he used so many (unrecorded) hand gestures while talking into the recorder.

Neat, but as a practical solution it seems like “hey Siri/Alexa” would be a more convenient… and safer if not to be waving his hands around if he’s holding stuff which requires gloves.

A wise man once said ‘Never spend 6 minutes doing something by hand when you can spend 6 hours automating it’