Some of you may remember that the ship’s computer on Star Trek: Voyager contained bioneural gel packs. Researchers have taken us one step closer to a biocomputing future with a study on the potential of ecological systems for computing.

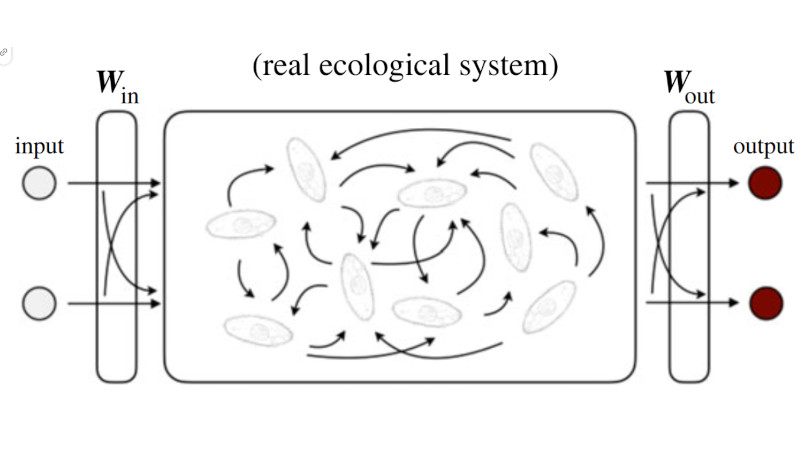

Neural networks are a big deal in the world of machine learning, and it turns out that ecological dynamics exhibit many of the same properties. Reservoir Computing (RC) is a special type of Recurrent Neural Network (RNN) that feeds inputs into a black-box reservoir with fixed dynamics and trains only the output layer, drastically reducing the computational requirements of the system. With some research now embodying these reservoirs in physical objects like robot arms, the researchers wanted to see if biological systems could be used as computing resources.
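To make the "train only the outputs" idea concrete, here is a minimal sketch of a software reservoir (an echo state network) in Python with NumPy. It is not the researchers' method: the reservoir size, spectral radius, ridge penalty, sine-wave task, and the helper names `run_reservoir` and `train_readout` are all assumptions chosen for illustration.

```python
import numpy as np

def run_reservoir(inputs, n_nodes=100, spectral_radius=0.9, seed=0):
    """Drive a fixed random reservoir with a 1-D input series and record its states."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)        # fixed input weights (never trained)
    w = rng.normal(size=(n_nodes, n_nodes))            # fixed recurrent weights (never trained)
    # Rescale so the largest eigenvalue magnitude equals spectral_radius (assumed value).
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    states = np.zeros((len(inputs), n_nodes))
    x = np.zeros(n_nodes)
    for t, u in enumerate(inputs):
        x = np.tanh(w @ x + w_in * u)                  # reservoir update
        states[t] = x
    return states

def train_readout(states, targets, ridge=1e-4):
    """Fit the only trained part: a linear readout, via ridge regression."""
    n = states.shape[1]
    return np.linalg.solve(states.T @ states + ridge * np.eye(n), states.T @ targets)

# Toy task (an assumption): predict the next sample of a sine wave.
signal = np.sin(np.arange(600) * 0.1)
states = run_reservoir(signal[:-1])
targets = signal[1:]

washout, split = 50, 400                               # skip the initial transient, then train/test split
w_out = train_readout(states[washout:split], targets[washout:split])
prediction = states[split:] @ w_out
mse = float(np.mean((prediction - targets[split:]) ** 2))
print(f"test MSE: {mse:.2e}")
```

The recurrent weights are drawn once and left untouched; all of the learning is a single linear solve for `w_out`. That is what makes it plausible to swap the simulated reservoir for a physical or biological system whose internals cannot be adjusted.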

Using both simulated and real populations of the ciliate Tetrahymena thermophila responding to temperature stimuli, the researchers showed that ecological system dynamics have the “necessary conditions for computing (e.g. synchronized dynamics in response to the same input sequences) and can make near-future predictions of empirical time series.” Performance is currently lower than other forms of RC, but the researchers believe this will open up an exciting new area of research.
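The "synchronized dynamics" condition can be illustrated with a simulated reservoir: two copies that start in different states but receive the same input sequence should end up in nearly the same state. The sketch below is a toy illustration under assumed parameters (50 nodes, spectral radius 0.8, random inputs), not a model of the Tetrahymena experiments.

```python
import numpy as np

rng = np.random.default_rng(1)
n_nodes = 50

# Fixed random reservoir, rescaled to an assumed spectral radius of 0.8.
w = rng.normal(size=(n_nodes, n_nodes))
w *= 0.8 / np.max(np.abs(np.linalg.eigvals(w)))
w_in = rng.uniform(-0.5, 0.5, size=n_nodes)

# One shared input sequence, two different starting states.
inputs = rng.uniform(-1.0, 1.0, size=300)
x_a = rng.uniform(-1.0, 1.0, size=n_nodes)
x_b = rng.uniform(-1.0, 1.0, size=n_nodes)

initial_gap = float(np.linalg.norm(x_a - x_b))
for u in inputs:
    x_a = np.tanh(w @ x_a + w_in * u)
    x_b = np.tanh(w @ x_b + w_in * u)
final_gap = float(np.linalg.norm(x_a - x_b))

print(f"gap before: {initial_gap:.3f}, gap after: {final_gap:.2e}")
```

If the gap did not shrink, the reservoir's state would depend on its starting conditions rather than on the input, and a readout trained on one run would not transfer to the next.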

If you’re interested in some other experiments in biocomputing, check out these RNA-based logic gates, this DNA-based calculator, or this fourteen-legged state machine.

Maybe debugging this would give insights towards debugging similar black-box, chaotic systems. Other than that I don’t know nothin’

There was a really interesting paper published recently about applying Fourier Transforms to LLMs in order to far better understand how the algorithms reached the output they did, if it wasn’t obvious how that was obtained from the inputs.

Could you please give me the paper title?

I am working on Fourier Transforms and Attention.