We always thought the older console games looked way better back in the day on old CRTs than now on a modern digital display. [Stephen Walters] thinks so too, and goes into extensive detail in a lengthy YouTube video about the pros and cons of CRT vs digital, which was totally worth an hour of our time. But are CRTs necessary for retro gaming?

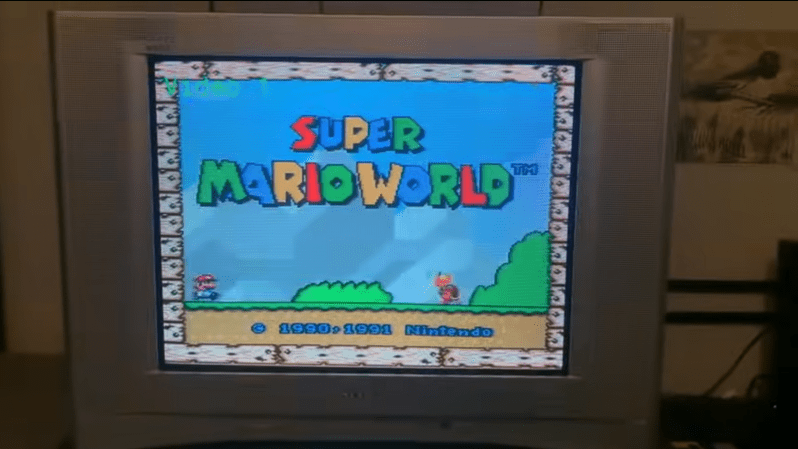

The story starts with [Stephen] trying to score a decent CRT from the usual avenue and failing to find anything worth looking at. The first taste of a CRT display came for free: [Stephen] spotted it whilst driving home, left looking lonely at the roadside. This was a tiny 13″ Sanyo DS13320, which, when tested, looked disappointing, with a blurry image and missing edges. Later, they acquired a few more displays: a Panasonic PV-C2060, an Emerson EWF2004A and a splendid-looking Sony KV24FS120. Some were inadequate in various ways, lacking stereo sound and component input options.

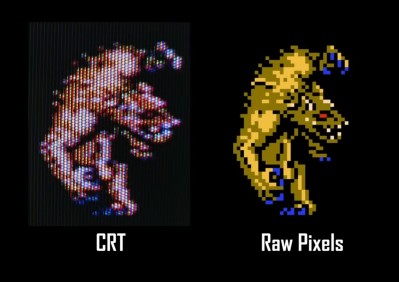

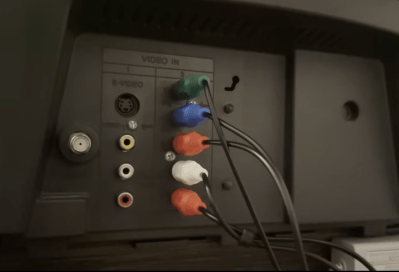

A large video section discusses the reasons for the early TV standards. US displays (and many others using NTSC) were designed for 525 scan lines, of which 480 were generally visible. These displays were interlaced, drawing alternating fields of odd and even line numbers, and early TV programs and NTSC DVDs were formatted in this fashion. Early gaming consoles such as the NES and SNES, however, were intended for 240p (‘p’ for progressive) content, which means they do not interlace and send out a blank line every other scan line. [Stephen] goes into extensive detail about how 240p content was never intended to be viewed on a modern, sharp display but was intended to be filtered by the analogue nature of the CRT, or at least its less-than-ideal connectivity. Specific titles even used dithering to create the illusion of smooth gradients, which honestly look terrible on a pixel-sharp digital display. We know the differences in signal bandwidth and distortion of the various analog connection standards affect the visuals. Though RGB and component video may be the top two standards for quality, games were likely intended to be viewed via the cheaper and more common composite cable route.

One debatable point is the effect of modern digital image processing on display lag. Modern TVs may have analog inputs, but these are sampled into the digital domain before being filtered and upscaled to fit the screen. This takes some time to process. We reckon that with games needing pixel-perfect timing reflexes, this could affect gameplay. It is also a perfect excuse to bust out some old games, you know, for science.

It’s an interesting discussion about whether newer tech has a detrimental effect on the gameplay experience. Emulators such as RetroArch have some excellent video shader plugins that can accurately render some of these effects, so even if you can’t find a physical CRT that works, you can still at least experience the joy that these things once brought.

We touched on this subject a couple of months back with a somewhat opposite opinion, but all this stuff is subjective anyway. Want to know what makes CRTs tick? Then, take them to bits with a hammer. And did we mention how light guns are no longer just for CRTs?

This is a perennial topic, and it always fails on one point: CRT TVs evolved over time, so the games would look “right” on one kind of TV and the effect would fail on another. Different phosphor masks, RF modulators, composite, S-Video, RGB SCART: they all had a different look to them.

While I agree, they are also all very much of the same family: different, but still very much the same when you compare them to an entirely different display technology. So something meant for a CRT of any era will generally look pretty close to intended on any other CRT and almost entirely wrong on an LCD…

The main effects were NTSC color glitches and the low bandwidth of composite video that blurred out the blocky pixels. The former didn’t even work on PAL televisions, or on SECAM sets.

As for the phosphor masks, there were at least four different versions: the delta dot mask, rectangular holes, Trinitron stripes, and a kind of staggered chevron pattern with rectangular holes. Each of these would produce a different kind of “subpixel rendering” effect on the image, so things like dithering would create a different effect depending on which television you were using.

I never knew about the chevron masks.

Were they European?

It’s just a slot mask, but the electron guns are placed in a V configuration instead of in a line so the sub-pixels they project come out staggered in a chevron. It’s the delta gun pattern, but instead of round holes you have rectangular holes for more brightness.

It’s the same idea as staggering every other color triad half the mask pitch up and down to blend the scan lines into each other and reduce the screen door effect. After all, you don’t know whether the beam will hit a line of pixels exactly or whether it lands between two rows.

100% true, and the typical CRT was god-awful. It’s pretty rare for a game to truly benefit from CRT over modern displays objectively (the article mentions some dithering).

Not an accurate take. One look at the examples and you can easily see that the artist was working with the medium to deliver a product that looks like it has 4x the detail of the raw pixels.

I have argued in the past that for retro CRT games there should be filters applied globally and if shown on a screen with enough pixels (4k, 8k etc) the effect would be a return of the art as intended.

Now I’m not so sure, maybe we need the sprites and backgrounds AI upscaled to regain the perceived detail and add sharpness.

I might argue that the Dreamcast and Xbox 360 were the last consoles that benefited most from CRT, as they had VGA cables available natively. (At least in North America, where we did not get SCART :'( )

“…4x the detail…”

That’s wishful thinking. It would require a specific type of CRT in perfect condition to reliably hit the expected phosphor spots. No CRT TV was ever made with such precision, not even studio monitors. The designer had to make something that would look good on CRTs with delta and in-line shadow masks and aperture grilles. He had to account for convergence, geometry and color purity being off, and it had to be acceptable on B/W sets. So the design goal must be to create recognizable shapes to make a game playable even under less than optimal conditions. Customers buy entertainment, not fancy pixels.

Well.. The C64 and Amiga folks had a standard monitor, the Commodore 1084. It was hugely popular.

Similarly, in broadcast environments, the Commodore 1701/1702 was popular.

It originally was meant as a C64/C128 monitor, but the 1084 took over quickly there.

99% of CRT TVs didn’t support VGA cables. And most Xbox 360 games were in 16:9 format, so not ideal at all for CRTs. Maybe you meant the original Xbox? Regarding Dreamcast, not all games supported 480p through VGA, so the best connection you can get which supports all games in NA and can be played on more recent CRTs is a component cable, which only became available recently.

Fun fact, all Dreamcast games did support VGA. Oddly though some games would check the cable ID and refuse to boot. I bypassed this with a switch added to my VGA cable on the ID pins. I would boot on A/V, then after the check (but before game load) simply switch it to VGA. All games worked perfectly.

Xbox360 at least initially was very respectful of 4:3 ratios, so it isn’t an issue. Remember that back when the 360 launched a VGA computer monitor had much better picture quality than most people’s LCD TV screens.

Let’s not forget the dot pitch, either. It really makes a difference.

The Commodore 1702 had 0.64 mm, while the Commodore 1084 had 0.42 mm.

If you play games on a CAD-grade monitor with 0.21 mm to 0.28 mm dot pitch, then the experience shifts from CRT to LCD.

(That’s because high-quality CRTs were meant to look razor sharp, with little geometric distortion. They weren’t meant for retro gaming.)

Same happened with analog VGA PC monitors in the DOS era, I think.

Old MCGA games in 320×200 res were meant for 0.4x mm dot pitch.

The early 12″/13″/14″ VGA monitors were closer to a C64 video monitor in terms of fidelity than an average VGA monitor from the mid-late 90s.
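To put rough numbers on the dot pitch argument, the sketch below estimates how many phosphor triads span the width of a small 4:3 tube and how many that leaves per pixel of a 320-wide image. It is only a back-of-envelope model: the 13″ viewable diagonal is an assumption, dot pitch is treated as a plain horizontal spacing (real masks are measured differently depending on type), and the function names are made up for illustration.

```python
MM_PER_INCH = 25.4

def screen_width_mm(viewable_diagonal_in: float) -> float:
    """Width of a 4:3 screen: a 4:3 rectangle has a diagonal of 5 units."""
    return viewable_diagonal_in * MM_PER_INCH * 4.0 / 5.0

def triads_across(dot_pitch_mm: float, viewable_diagonal_in: float = 13.0) -> float:
    """Approximate number of phosphor triads across the screen width.

    Assumes the dot pitch can be treated as a horizontal spacing,
    which is a simplification.
    """
    return screen_width_mm(viewable_diagonal_in) / dot_pitch_mm

# Dot pitches mentioned in the comment above; 320 is the MCGA image width.
for pitch in (0.64, 0.42, 0.28, 0.21):
    triads = triads_across(pitch)
    print(f"{pitch:.2f} mm: ~{triads:.0f} triads, "
          f"{triads / 320:.1f} per pixel at 320 wide")
```

Under these assumptions a 0.64 mm tube has only a little over one triad per 320-wide pixel, while a 0.21 mm tube has close to four, which fits the claim that fine-pitch monitors resolve individual pixels much as an LCD does.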

_

And what about TVs? An analog TV was opposite to a PC monitor, rather.

A PAL or NTSC TV programme should never have looked pixelated on a big screen.

Softening and de-noising the image was welcomed, rather.

That’s when DSPs in the CRT TVs of the 90s aimed to replace analog comb filters and whatnot.

Televisions were made for broadcast signals, so they had no reason to have extremely fine dot sizes, and the image was intentionally blurred to account for the screen-door effect (Kell factor).

That applies to common video game consoles, but then arcade machines could have whatever tube they decided to have – and they would pick smaller dot sizes because the display was viewed from just a couple feet away, so the whole thing depends on which game you’re playing, on which machine, on which tube, with which kind of phosphor mask, in what condition and tune…

There’s also a difference in the presentation of video between regular dot matrix and trinitron-like vertical bars of color alongside each other. Sony tubes always looked worse on games (IMHO).

Well, they looked similar enough to throw them all into the same category. The general signal processing being the same is the important point here.

“they do not interlace and send out a blank line every other scan line”

Erm, no. Interlaced video appends half a scanline each frame (btw. this explanation is also not quite right) so that on the next frame, the scanlines land IN BETWEEN the scanlines from the last frame, giving the appearance of a higher resolution (480i at 30Hz).

240p doesn’t do that; it sends the exact same pixels in the exact same position every frame.

The way the article explains it you either have a blank half-frame (240p at 30Hz with massive flickering) or every other scan line is blank (120p at 60Hz).
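One way to picture the difference is to track which raster positions get lit by successive fields. The sketch below is a deliberately simplified model, not a signal-accurate one: it assumes 480 visible raster positions, treats every field as 240 lines, and the function names are hypothetical.

```python
VISIBLE_POSITIONS = 480  # assumed number of visible raster positions on the tube

def field_positions(field_index: int, interlaced: bool) -> list[int]:
    """Raster positions lit by one 240-line field.

    Interlaced: odd fields are shifted down by one position.
    240p: every field lands on the same positions.
    """
    offset = field_index % 2 if interlaced else 0
    return list(range(offset, VISIBLE_POSITIONS, 2))

def lit_positions(num_fields: int, interlaced: bool) -> set[int]:
    """All raster positions lit after a number of consecutive fields."""
    lit: set[int] = set()
    for field_index in range(num_fields):
        lit.update(field_positions(field_index, interlaced))
    return lit

print(len(lit_positions(2, interlaced=True)))   # two fields fill every position
print(len(lit_positions(2, interlaced=False)))  # two fields retrace the same half
```

In this model nothing blank is ever transmitted in the 240p case; the dark gaps are simply positions the beam never visits.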

As if anyone cares about some minor detail on how an ancient TV works that only a few nut cases care about who are so old they don’t hear these painful 15kHz squeals anymore anyway.

(Note – I’m one of these nut cases and I can hear the squeals and I like being able to. It tells me the TV is on, and that’s a good thing because back in my day cough TV programming used to be good. Now get off my lawn!)

This is highly individual, I think, though.

Some have difficulties hearing the squeals in their 20s already, for example.

Others hear them fine at age 30, still. It really depends.

And I wouldn’t call someone old at age 20, except if we’re going by medieval standards.

In the medieval age, people died at age 30 and sometimes looked like 90.

They also got married at age 12-13. In aristocratic families, a boy aged 6-8 could have been head of the family at the time.

Or they had to work. It was normal for kids at age 6 to go to work.

By comparison, in the US of today, people have to be 21 before they’re fully adult and allowed to drink.

By contrast, in the early 20th century, young kids still had to work there. Selling newspapers, cleaning shoes. All at a young age.

In Europe of the late 20th century, little kids still had to work hard in the fields, as well.

It’s not that I find this good or anything, it’s just how it was.

Sir, this is a Wendy’s.

Average lifespan doesn’t mean maximum lifespan. The average might’ve been 30 but that’s only because of infant mortality rates.

Two words: light guns!

“Early gaming consoles such as the NES and SNES, however, were intended for 240p (‘p’ for progressive) content, which means they do not interlace and send out a blank line every other scan line” Err… No. They are displayed with blank scan lines between them because the TV is sent a non-interlaced signal. That means the 60 images per second, each with 240 active lines, are drawn in the same place on the CRT (leaving black lines between them), whereas with an interlaced signal alternate images have a vertical offset. No black lines are ‘sent out’. That’s a fundamental misunderstanding of what’s going on.

Lemme guess – you typed your comment at the same time as I typed mine – and the similarities are hilarious :-D

Virtual High Five!

Honestly it’s kinda dumb. When you go into the rabbit hole of CRT video everyone wants a PVM looking display. If that’s the case then just use a modern LCD. I love retro games as much as the next guy and I have an old 32-inch Emerson LCD TV that has legacy RF Modulator, RCA, Component, VGA and modern HDMI so I can play my old consoles without adapters, downscalers and upscalers. I honestly haven’t had any refresh lag with this TV. However I have noticeable lag when it comes to emulating hardware. Like in Super Mario Bros. in world 4-1 I can run and jump over the Piranhas when they’re fully out. On real hardware I have it timed when to jump but when I use an emulated system with a USB gamepad it’s a 50/50 shot because of the delay.

There is FPGA emulation, and you don’t need to use USB. I’m curious what would happen if you used an original controller on a MiSTer. Is that emulation? Not sure.

I have been wanting to try Super Mario on an original NES on a CRT vs in emulation, side by side, because the timing does feel off from what I remember, but it’s hard to know if it’s my memory, my lack of recent practice, or the emulated experience.

That’s a bit tricky to answer. Super Mario 1 on NES comes in two versions on European NES, at least.

One is the unmodified US version (?) while the other one is the over-optimized EU version that runs too fast (noticeable by the music).

Playing the Japanese or US version in 60 Hz is perhaps the most accurate way of playing the game.

Unfortunately, it may feel weird to those who grew up with the EU version.

I’m open to correction on this.

this is the point everyone fails to address: adapters, upscalers, downscalers, processors whatever you want. a lot of people talk about quality and latency buttttt

they all cost money. and they are niche products and sell at a premium. $400 is not unheard of. meanwhile, if you look around in fb marketplace or similar places, you will find CRTs for free or less than $20.

if you are serious about emulation, enough to justify blowing $400 on one upscaler (not to mention the consoles and games themselves) I’m sure you can find a place for a CRT at home.

Last couple of upscalers I’ve bought were under $20 from Amazon and seem to do a pretty good job, quality seems limited by the source not the device.

“Honestly it’s kinda dumb. When you go into the rabbit hole of CRT video everyone wants a PVM looking display. ”

No, not me. I’ve always been of the opinion that RGB video on an 8/16-bit game console is a bad idea, because it shows what was meant to be hidden.

RGB as such is fine, though. It can be useful where it’s needed, say on a home computer with text applications (programming, word processing etc) or on an arcade cab.

Arcades are the place where RGB belongs.

That being said, exceptions prove the rule. Game developers back in the day did of course have PVMs or other RGB monitors at hand.

They needed them for creating the pixel art. But the final game had to look good on an ordinary TV set of the day. That’s what really mattered.

Unfortunately, all those RGB fana.. fans do seem to forget this.

For all those RGB CRT aficionados who have never seen the gross imperfection of a real color TV set from the 1970/80s: €1 will buy you instant RF/CVBS-like Blur-O-Vision, three resistors and three caps are all you need. You might also want to throw in a 555 or two to add some jitter to the sync signals.

Not quite, old man. While being younger, I have seen higher end 1970s/1980s PAL TVs that had an AFC, automatic frequency control.

Video via RF connection can be near FBAS, err, I mean composite quality with the right video equipment.

VCRs, for example, used to have such AFC-capable tuners built-in and provided a rock solid picture on any TV.

The normal VCR models with an electronic display, I mean.

Not the stone age Telefunken/Blaupunkt/Saba era things with BCD encoder knobs. ;)

But that’s just my point of view here, of course.

And I’m not getting younger, either. So I have to be careful not to make the same mistakes as other old men.

Let’s remember, together, “Alter schützt vor Thorheit nicht” (age is no protection against folly).

Practically all TVs with Varicap tuners have AFC; these tuners replaced mechanical pushbutton station “memories” somewhere in the 1960s. Do you mean switchable time constants in the horizontal PLL feedback path to accommodate wobbly VCR playback signals? That became a necessity after home video recorders hit the market in the early 1970s.

Admittedly, just putting low-pass filters in the RGB lines is only a coarse approximation of RF/CVBS frequency response. The correct way would be to limit luma to 5 MHz and chroma to 1.5 MHz, but that requires somewhat more complex circuitry, which €1 won’t buy.
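For anyone picking parts for the simple version, the corner frequency of a single first-order RC low-pass is f_c = 1/(2πRC). The sketch below assumes a 75 Ω series resistance (a common video impedance, but an assumption here, as are the helper names) and ignores source and load loading. A first-order filter rolls off gently, so it only approximates the bandwidth limits mentioned above.

```python
import math

def cutoff_hz(resistance_ohm: float, capacitance_f: float) -> float:
    """-3 dB corner frequency of a first-order RC low-pass filter."""
    return 1.0 / (2.0 * math.pi * resistance_ohm * capacitance_f)

def capacitor_for_cutoff(resistance_ohm: float, cutoff: float) -> float:
    """Capacitance (farads) that gives the requested corner frequency."""
    return 1.0 / (2.0 * math.pi * resistance_ohm * cutoff)

R_SERIES = 75.0  # assumed series resistance in ohms

# Bandwidth targets taken from the comment above.
for label, f_c in (("luma", 5.0e6), ("chroma", 1.5e6)):
    c = capacitor_for_cutoff(R_SERIES, f_c)
    print(f"{label}: {f_c / 1e6:.1f} MHz -> {c * 1e12:.0f} pF")
```

With 75 Ω this lands on the order of 420 pF for a 5 MHz corner and 1.4 nF for 1.5 MHz, so the values are in ordinary ceramic capacitor territory.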

Oh look, another purported retro fanatic who doesn’t actually understand how or why a lot of this stuff works.

CRTs were not responsible for a lot of what people think of when they think of how older console titles worked. That would be the video signal itself responsible for that: RF-modulated or composite NTSC in the case of the NES, SNES, and Genesis.

The visible color-fringing in NES (and to a lesser extent, SNES) games comes from the inversion of the color-carrier’s phase on every line and every field. The inversion per-line is why there’s a fringe at all, and the inversion per-field is why the fringe appears to alternate up and down.

The additional colors on Genesis/Megadrive games – Sonic the Hedgehog’s waterfalls are most-often cited, but Vectorman used the same technique, and there are checkerboards of same-chroma/different-luma used across countless titles on the same platform – take advantage of Y, I, and Q having different bandwidth allotments in an RF-modulated or composite NTSC signal.

The Apple II was one of the most famous platforms to take advantage of that little quirk in composite NTSC, outputting patterns of black and white pixels and having just the right pixel clock that groups of two pixels would combine to produce colors on 0/1 or 1/0 transitions. This is also why early-model Apple II machines would sometimes power up in orange/blue mode and sometimes power up in green/purple mode: It comes down to whether it powered up with the color carrier in-phase or out-of-phase. It’s also-ALSO why later Apple II models that supported double-hi-res mode could push it to 16 colors: There were up to 4 black/white transitions in the same time as 2 in standard hi-res.

Purportedly, at its lowest level, the NES also relies on artifact color as the Apple II did, although it can emit 128 black/white transitions in the same time frame.

Yes. This is precisely why there’s an emphasis on accurate hardware for composite and RF connections in the Checkmate monitor, and why you’ll see a lot of reviewers check those as the climax of their retro hardware reviews. This article is getting the whole thing reversed by suggesting component is the standard.

No.

Not just retro gaming, honestly. (Quality) CRTs are important for broader use in vintage/abandoned/antiquated formats to “complete the experience”. LaserDisc comes to mind. As an avid LaserDisc collector who actually watches his discs, you really can’t do better than a once-high-end CRT projector. Since they’re practically unobtainium and replacement parts are obscene, a good CRT TV is often the best bet. LCDs just don’t look right, even on late release discs due to all the digital domain aberrations and “de-noise” mechanisms. Many of the higher end CRTs that came out during the transition period — when LCDs were still relatively new technology and carried hefty price tags — are excellent, and carry a more modern feature set like 16:9 aspect ratio (which is important if you’re doing upscaling/reframing), excellent comb filters and a wide array of input options. They were the culmination of everything learned up to that point, and usually look vastly superior to their LCD counterparts especially when composite inputs are involved.

Yeah, modern LCDs will look better when using modern content, but almost all of the accessible ones that still carry composite inputs have abysmal comb filters because it’s an afterthought. The Snell & Wilcox Zone Plate is my standard benchmark, and I’ve yet to see a single LCD pull it off without massive rainbowing and a bunch of digital aberrations, without using an external (and expensive) comb filter.

That aside, I’d argue against component being the “sweet spot” for a lot of stuff. Some stuff just doesn’t look “right” without composite, especially with a quality comb filter. I suppose that’s entirely dependent on the source, format and display however.

+1

I think this also applies to VCDs and DVDs to some degree.

Those media still used the same film transfer technology as VHS, Betamax, Video2000 or Laserdisc.

The source material was often magnetic media in broadcast quality.

It’s important if you enjoy the vibe of it. Sometimes I like to kick back in a 70s car that smells like an oil spill and listen to an 8-track and chainsmoke. Is it the only or best way to listen to music? No, but it’s a nice way to get into a mood if you’re into that sort of thing.

That’s why zoomers play silent hill in their attic on an old thrifted CRT I think.

ive been running console emulation for longer than ive been running consoles, and i have to say no. crt enthusiasts are somewhat like audiophiles who buy $1000 cables and think its worth it for gains so infinitesimal that they are hard to quantify at all. ok maybe tube fans arent that bad.

not to say crts arent cool tech mind you. i just dont think the so called “crt effect” is really worth the trouble of connecting a 20 year old screen to a 2 year old computer.

I think it really depends. An NES or Sega Genesis game really looks most “natural” on a normal 14″ CRT TV with an RF jack.

The typical 1970s, 1980s kind of portable TV, essentially. Your typical hotel or motel TV.

This type of TV was in common use when these consoles were still in production.

It had no on screen display, but knobs on the front panel.

The CRT tube was a normal, TV grade model with an average 0.6 mm dot pitch and a shadow mask. Nothing fancy, at all.

In East Europe, Russia, China and other eastern countries such traditional TVs were in common use until the 2000s, I heard.

Sure, there had been people who had higher end TVs with 20″ screens with SCART input already. But not the children.

They usually got the old TVs from their family members. Ordinary TVs, in most cases.

I mean, just look at old family photos from the 80s and 90s.

The internet has them. You see kids at age 8 sitting in front of a 14″ TV playing the NES.

Or in case of Europe, tinkering with the C64.

Personally, I was no different back in the 90s.

– Except that I bypassed the RF tuner situation, because I had a video monitor with AV input (equivalent to a SCART TV with RCA adapter).

Our family TV in the 90s was a ~14″ portable in a black/silverish plastic case, with the VCR attached to it as a tuner.

And when my family got sick of my NES sessions on the family TV, they gave me a used Commodore monitor with AV jacks.

That video monitor was my Nintendo monitor. I’ve also used it to play back VHS cassettes, via a cheap VHS player (no VCR, no recording).

At a friend’s house, it was similar. The kids had a small TV for SNES in the bedroom, while the parents had a big ass TV in the living room.

With a Sega Genesis (MD) connected to it. The father played sports games on it, I believe.

But to be fair, the TV was almost 3m apart from the couch, which got rid of the pixelation (if there was any).

My apologies for this long comment, but I felt that I should make things clear.

Because to my knowledge and in my experience, the argument that a 15 kHz CRT monitor or CRT TV is no different from an LCD is just plain wrong.

It’s not a matter of taste, I think, but right and wrong.

Old consoles were made with normal household TVs in mind, simply.

And game developers took advantage of everything they could do to make the graphics look good, to look natural.

Pixel art as such didn’t exist: there was no nostalgia for blocky graphics at the time yet.

I think that’s something that modern audiences often forget or don’t realize.

i was poor growing up so the tvs we had were small and cheap. i had a 13 inch magnavox in my room and the tv in the living room was an old console set with a really dark phosphor layer that someone left on the side of the road. later on we got a big projection tv, but that was well into the 32 bit era, and frankly it had scanlines the size of texas. none of my games looked exceptional on any of these screens. emulated mario games look pretty good on my steam deck though thanks to the oled.

Not that it hasn’t already been said, here and in previous comments on this topic, but it bears repeating.

The simplest argument, and one which the author of the video briefly muses over, is that games were made to look the way they look on CRTs specifically because that’s what developers had to work with at the time. This is the simplest and therefore most plausible explanation. It wasn’t some nefarious consortium of developers making a shadow pact; we have to look at history from the perspective of the era: 60fps progressive video was not a thing in the 80’s unless you were either 1) Dabbling in expensive broadcast video equipment or 2) Using an Apple II, which was the only consumer hardware that rendered a 60fps progressive scan, and why you saw it used in some early 80’s media for titles or films since it wouldn’t flicker horribly.

So to enjoy a game the way it was originally designed, you should at least consider comparing how it appears on a CRT if it was produced before, say, 1997 or so. Even 6th gen consoles were around at the time when people had high-quality CRTs with component connections, and some not too different in quality from the LCD displays at the time — but keep in mind in the late 90’s, LCD televisions were both expensive and not great quality/resolution for the price compared to the $300 4k TVs that flood the racks of your local big box store. But the argument of “there is only one way to do it properly” is a silly fallacy: If you like sharp pixels, nobody can take that away from you. It’s all a matter of opinion and preference, and there are no right or wrong answers.

Back to the point: Analog TV displays do two things to the video signal, in modern parlance: 1) They provide a “free” bilinear filter because of the nature of the domain of the signal (which is why PS1 games look less crap on old TVs), and 2) They provide a bloom filter where neighboring pixels smear a little bit. Not every game was designed with this in mind. A lot of times, at least in 8-bit games, the developer might simply design things on graph paper and translate those sketches to raw data; ergo the game is designed with the idea of “pixels” in mind to an extent. But there were also plenty of games that used alternating colors like greens, browns and grays which look awful in raw pixel form and on a CRT (or NTSC-emulating filter) look like awesome mossy rock walls (Castlevania 3) or golden gradients (level 2 of Micro Mages). In those circumstances, hands down the better experience is NTSC emulation or an old CRT.
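The effect on alternating-color dithering can be shown with a toy example. The sketch below is not an NTSC or CRT simulation: it swaps in a plain two-sample horizontal box blur as a crude stand-in for the limited bandwidth of the analog path, applied to one row of made-up brightness values, and the function name is hypothetical.

```python
def box_blur(row: list[float], width: int = 2) -> list[float]:
    """Average each sample with the samples just before it.

    A crude stand-in for analog horizontal smearing, not a real
    composite video model.
    """
    blurred = []
    for i in range(len(row)):
        window = row[max(0, i - width + 1): i + 1]
        blurred.append(sum(window) / len(window))
    return blurred

# One row of a two-tone dither pattern: dark and bright pixels alternating.
dithered_row = [0.0, 255.0] * 8

smeared = box_blur(dithered_row)
print(dithered_row[:6])  # raw pixels: harsh alternating stripes
print(smeared[:6])       # after smearing: settles to a flat mid-tone
```

After the first sample every value sits at the midpoint of the two tones, which is the blended shade the artist was counting on; on a pixel-sharp display the raw stripes stay visible instead.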

Myself? I have a MiSTer and I set the 80’s era arcades and 8/16 bit consoles to use an “Old TV” filter which has a more pronounced shadow mask, slightly visible scanlines, and a healthy helping of NTSC artifacts. For anything early/mid 90’s or newer, I use a Sony Trinitron filter which still has some NTSC artifacts but a sharper and slightly less pronounced shadow mask, narrower scanline effects. That makes those consoles and arcades match the experience of what would have been more ideal in the early 90’s: Sony Trinitron was the bee’s knees. As for other things like GBA, I never owned one so I have fewer opinions, some games arguably look better (to me) with HQ2X or similar filters as long as it doesn’t mess the artwork up too much. If I had a more nostalgic feeling about GBA games, I would likely have a different, but still to you, mostly irrelevant opinion about it. ;)

i am surprised about the focus on crt aesthetics. to me, the thing that gets under my skin is how everything these days is delayed…but not just delayed, variably delayed…asynchronous. every single layer goes through asynchronous queues now. they stack up and it becomes perceptible. it upsets me not so much as a user but rather as a developer…because i understand it’s caused by things i think of as progress, so i struggle to imagine how it should be managed. but obviously people who are genuinely skilled at retro games find it a real challenge to play.

as far as aesthetics go, for me it always comes back to star trek the next generation. :) i watched it on broadcast TV, and it was good. i watched relatively low quality mpeg encodings of the original DVD release, on my SVGA CRT, and it was great. and now i watch those same digital encodings on my 720p 32″ LCD and it also looks great, but perhaps only because i sit so far from the TV. but there are new “re-mastered” digital versions of the show, and i find them a little off-putting…the show was designed for broadcast TV and it doesn’t benefit from higher ‘fidelity’, and in fact it suffers some. at some level of quality, you can see the make up and the paint and colored duct tape and the illusion is lost.

and on the other side, forget retro…look at new star trek. enterprise was the first star trek where they knew it would be digitally encoded before they put the sets together. so they aren’t as reliant on tight close ups of faces. and they are no longer afraid of low-contrast scenes, like a dark scene that is different textures of black-on-black. no matter how high the quality is, i don’t actually find that super watchable. i think to some extent the old limitations forced good artistic decisions. reducing a scene with several actors to a series of facial close-ups really makes it a struggle just to represent the events of a scene, but it has big advantages too in terms of comprehensibility and emotional expression.

but the place where i really feel it matters is retro game music! sometimes i listen to the doom songs in timidity. it has a high quality ‘wavetable’ style instrument bank which i know is probably closer to what Robert Prince used when he composed it. but it’s just so incredibly different from the OPL FM synthesis! luckily we can emulate that pretty convincingly but it is a real aesthetic conundrum that i can’t come to any conclusion on. is it better when it sounds good or when it sounds bad?? i can’t even answer the question.

Thumbs up for Star Trek! 😃👍

As for video quality and media..

Long story short I think that there’s a relationship between the source medium and the playback device.

If you playback a lo-fi medium on a hi-fi device, all the imperfections become very apparent.

This applies to both audio and video.

Cassette tapes recorded at home using a cassette recorder can sound okay on an average walkman w/ walkman headphones,

but at home on a hi-fi deck with your Sennheiser headphones it likely sounds not so good anymore.

The other way round can be less of a problem, I do admit.

A higher end medium played back on a lower end device may sound as good as the playback device allows it to be. Ideally.

However, if there are problems involved in down-conversions, it may happen that the outcome will be worse.

It’s like down-sizing high-resolution images to a low resolution vs. taking pictures natively with a low-resolution camera/scanner.

The argument you’re making is that there’s esoteric artistic value in the limitations older media had to work with, and that’s a simple logical fallacy. Close-ups didn’t show emotion or comprehensibility. They were weak methods of “hiding the crimes”.

The BEST movies and shows from that era didn’t rely on such tools and they never failed to provide a cohesive story with believable characters and great emotions. 99.9% of the time, close-ups back then were just extreme overacting that was both ridiculous and to a point almost comical.

Modern cinema/TV is better in every way. Even the worst today is often light-years ahead of anything from the CRT age. There are always exceptions, but they’re extremely rare. And if you can’t follow what is happening in modern cinema/TV… you are absolutely the issue, not the medium. I say this as a guy just old enough to have thoroughly experienced what things were like back then, in real time.

This was fascinating and I learned a whole bunch about the graphics of older systems. I’ve not played a console since the NES… or before the NES, really (Glorious PC Master Race, etc etc)… and honestly, it was enlightening to see techniques I’d never been aware of being used so effectively. Some of the visual art upgrades are damned impressive. I’d have loved to have seen more discussion of how the phosphor’s dwell time impacted display behaviour (a la old CROs).

A lot of the comments, and a lot of the articles, ignore the fact that the monitors the game designers used would also dictate the way the pixel art would look.

If you are designing on a crt display, it probably is more of a desktop setup, with a small crt monitor, and the choice of pixel colour and placement would be according to how that art would look on a crt. People leapfrogged technology in the home. Until we had two televisions we had to play games on the main television. When my parents got a new one, we were playing games on the old one.

When I got my own television after moving out, it was a 9-11 inch Baycrest CRT. The graphics were fine. When I got an Amiga with a better monitor, and hooked up the older computer (Commodore 64), the pixelization became yuckier than I remembered because now you could see the designs without the shadow mask. This was over twelve years of ownership.

Newer games, designed with newer monitors, had a different feel… they also had higher resolution on the computers, and more colours. The designs reflected that.

Please don’t be a bunch of parsi’s: the zoroastric peak of CRT TVs is Sony PVMs and BVMs, or other production monitors. Yet only one post here mentions them. As I say, don’t be a bunch of parsi’s, buy a PVM, 9 inch to 14 inch. They’re designed to last decades and have far superior displays and more scope for fine tuning than regular CRTs.

I just remembered, I had an Apple II monitor that I used as my TV through the late 90s, which probably colored (pardon the pun) my memories of CRT. That monitor was really good quality.

I’m divided and have mixed feelings on this. On the one hand, let me say I LOVE the things you can do with emulators nowadays with the different shader options, etc. I’ve replayed some classics like FF6 with them, and it’s almost like the graphics were remastered with some of the different shader and filter options, not to mention the ease of use of having a modern TV and emulation. But I hate to say it, latency is still somewhat of an issue, and I’m not going out and dropping $500 on a Framemeister either. Even though I have tried, I have not been able to beat Ninja Gaiden 2 or knock out Mike Tyson using a modern LCD display. The exception to that would be an old 50″ Sony WEGA rear-projection TV from 2004. Strangely enough, there was no latency running that TV for some reason, and it was technically an LCD display. That TV was my main TV for playing LaserDisc movies. Other than my 47″ LG OLED, it was the biggest TV I could use for watching LaserDisc without it looking like washed-out crap.

Lot of comments here that assume the creators were aiming solely at NTSC and nothing else – what about the rest of the world with different standards where some of the “effects” mentioned here just weren’t possible?

I can’t remember what standard Japan were on, but the UK were on PAL and I’m fairly sure at least some of Europe were SECAM. We all had the same or very similar games and machines, so were we all seeing a worse version or something the creators didn’t intend?

Given how many creators and even companies weren’t US based it feels presumptive that NTSC would be assumed to be the “right” representation of these games.