[Geoffrey Litt] shows that building an effective digital assistant tailored to one’s own needs takes just a little DIY, and thanks to the kinds of tools that are available today, it doesn’t even have to be particularly complex. Meet Stevens, the AI assistant who provides the family with useful daily briefs. The back end? Little more than one SQLite table and a few cron jobs.

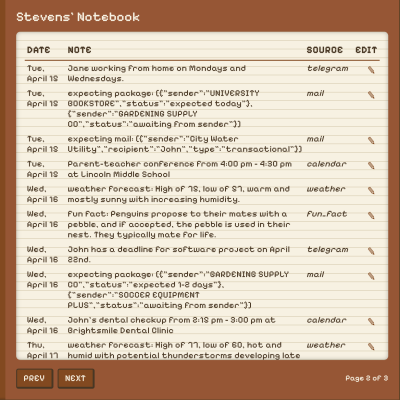

Every day, Stevens sends a brief via Telegram that includes calendar events, appointments, weather notes, reminders, and even a fun fact for the day. Stevens isn’t just send-only, either. Users can add new entries or ask questions about items through Telegram.

It’s rudimentary, but [Geoffrey] already finds it far more useful than Siri. This is unsurprising, as it has been astutely observed that big tech’s digital assistants are designed to serve their makers rather than their users. Besides, it’s also fun to have the freedom to give an assistant its own personality, something existing offerings sorely lack.

Architecture-wise, the assistant has a notebook (the single SQLite table) that gets populated with entries. These entries come from things like reading family members’ Google calendars, pulling data from a public weather API, processing delivery notices from the post office, and Telegram conversations. With a notebook of such entries (each stored along with the date on which it is expected to be relevant), generating a daily brief is simple. After all, LLMs (Large Language Models) are amazingly good at handling and formatting natural language. That’s something even a locally installed LLM can do with ease.
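To make the notebook idea concrete, here is a minimal sketch in Python using the standard `sqlite3` module. It is not [Geoffrey]’s actual code: the table name `notebook`, the columns `source`, `relevant_on`, and `text`, and the helper functions are all hypothetical, chosen only to illustrate one table of dated entries being turned into the text an LLM would be asked to summarize. The LLM call and the Telegram delivery are deliberately left out.

```python
import sqlite3
from datetime import date


def open_notebook(path=":memory:"):
    """Open (or create) the single-table notebook. The schema is an assumption."""
    conn = sqlite3.connect(path)
    # relevant_on holds an ISO date string, or NULL for undated entries.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS notebook (
               id INTEGER PRIMARY KEY,
               source TEXT NOT NULL,
               relevant_on TEXT,
               text TEXT NOT NULL
           )"""
    )
    return conn


def add_entry(conn, source, text, relevant_on=None):
    """Record one entry, e.g. from a calendar import or a chat message."""
    iso_day = relevant_on.isoformat() if relevant_on else None
    conn.execute(
        "INSERT INTO notebook (source, relevant_on, text) VALUES (?, ?, ?)",
        (source, iso_day, text),
    )
    conn.commit()


def entries_for(conn, day):
    """Return (source, text) pairs whose relevant date matches the given day."""
    cursor = conn.execute(
        "SELECT source, text FROM notebook WHERE relevant_on = ? ORDER BY id",
        (day.isoformat(),),
    )
    return cursor.fetchall()


def build_brief_prompt(conn, day):
    """Assemble the plain-text prompt that would be handed to an LLM."""
    notes = [f"- [{source}] {text}" for source, text in entries_for(conn, day)]
    header = f"Write a short daily brief for {day.isoformat()} from these notes:"
    return "\n".join([header, *notes])


if __name__ == "__main__":
    notebook = open_notebook()
    today = date.today()
    add_entry(notebook, "calendar", "Dentist appointment at 3pm", today)
    add_entry(notebook, "weather", "Light rain expected in the afternoon", today)
    print(build_brief_prompt(notebook, today))
```

In a setup like the one described, a scheduled job would run something along the lines of `build_brief_prompt` once a day and pass the result to whichever model is in use, while other jobs keep calling `add_entry` as new calendar, weather, or mail data arrives.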

[Geoffrey] says that even this simple architecture is super useful. He encourages anyone who’s interested to check out his project and see for themselves how useful even a minimally informed assistant can be when it’s designed with one’s own needs in mind.