Are you writing your code for humans or computers? I wasn’t there, but my guess is that at the dawn of computing, people thought that they were writing for the machines. After all, they were writing in machine language, and whatever bits they flipped into the electronic brain stayed in the electronic brain, unless punched out on paper tape. And the commands made the machine do things, not other people. Code was written strictly for computers.

Modern programming practice, on the other hand, is aimed firmly at people. Variable and function names are chosen to be long and to describe what they contain or do. “Readability” of code is a prized attribute. Indeed, sometimes the fact that it does the right thing at all almost seems to be an afterthought. (I kid!)

Somewhere along this path, there was an important evolutionary step, like the first fish using its flippers to walk on land. Comments were integrated into programming languages, formalizing the notes that coders of old surely wrote by hand in the margins of the paper first-drafts before keying it in. So I went looking for the missing link: the first computer language, and ideally the first program, with comments. I came up empty handed.

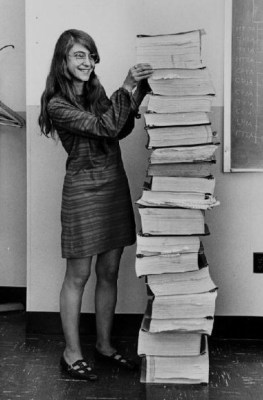

Or rather full handed. Every computer language that I could find had comments from the beginning. FORTRAN had comments, marked by a “C” as the first character in a line. APL had comments, marked by the bizarro rune ⍝. Even the custom language written for the Apollo 11 guidance computers had comments — the now-commonplace “#”. I couldn’t find an early programming language without comments.

Or rather full handed. Every computer language that I could find had comments from the beginning. FORTRAN had comments, marked by a “C” as the first character in a line. APL had comments, marked by the bizarro rune ⍝. Even the custom language written for the Apollo 11 guidance computers had comments — the now-commonplace “#”. I couldn’t find an early programming language without comments.

My guess is that the first language with a comment must have been an assembly language, because I don’t know of any machines with a native comment instruction. (How cool and frivolous would that be?)

Assemblers simply translate mnemonic names to their machine instruction counterparts, but this gives them the important freedom to ignore anything starting with, traditionally, a semicolon. Even though you’re just transferring the contents of register X to the memory location pointed to in register Y, you can write that you’re “storing the height above ground (meters)” in the comments.

The crucial evolutionary step, though, is saving the comments along with the code. Simply ignoring everything that comes after the semicolon and throwing it away doesn’t count. Does anyone know? What was the first code to include comments as part of the code itself, and not simply as marginalia?