[EXO Labs] demonstrated something pretty striking: a modified version of Llama 2 (a large language model) that runs on Windows 98. Why? Because when it comes to personal computing, if something can run on Windows 98, it can run on anything. More to the point: if something can run on Windows 98 then it’s something no tech company can control how you use, no matter how large or influential they may be. More on that in a minute.

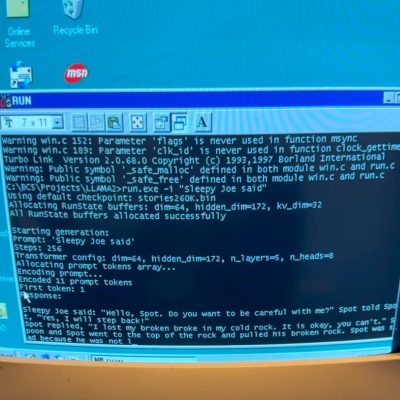

What’s it like to run an LLM on Windows 98? Aside from struggles like finding compatible peripherals (back to PS/2 hardware!), transferring the required files (FTP over Ethernet to the rescue), and even compilation (some porting required), it works perhaps better than one might expect.

A Windows 98 machine with a Pentium II processor and 128 MB of RAM generates a speedy 39.31 tokens per second with a 260K-parameter Llama 2 model. A much larger 15M-parameter model generates 1.03 tokens per second. Slow, but it works. Going even larger will also work, just ever more slowly. There’s a video on X that shows it all in action.
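To put those throughput numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It only does arithmetic on the two figures quoted above; the 256-token output length and the helper name `seconds_to_generate` are assumptions picked for illustration, not anything taken from the EXO Labs project.

```python
# Throughput figures quoted in the article (tokens per second on the
# Pentium II / Windows 98 machine), keyed by model size.
MEASURED_TOKENS_PER_SECOND = {
    "260K": 39.31,
    "15M": 1.03,
}


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate wall-clock seconds to generate num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical output length, chosen only for illustration.
    output_tokens = 256
    for model, rate in MEASURED_TOKENS_PER_SECOND.items():
        seconds = seconds_to_generate(output_tokens, rate)
        print(f"{model} model: about {seconds:.1f} s for {output_tokens} tokens")
```

By this estimate the 260K model finishes a 256-token reply in under seven seconds, while the 15M model needs a little over four minutes. The calculation assumes a constant generation rate, which real runs may not hold to.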

It’s true that modern LLMs have billions of parameters, so these models are tiny in comparison. But that doesn’t mean they can’t be useful. Models can be shockingly small and still be perfectly coherent and deliver surprisingly strong performance if their training and “job” are narrow enough, and the tools to do that for oneself are all on GitHub.

This is a good time to mention that this particular project (and its ongoing efforts) is part of a set of twelve projects by EXO Labs focused on ensuring that things like AI models can be run anywhere, by anyone, independent of tech giants aiming to hold all the strings.

And hey, if local AI and the command line are up your alley, did you know LLMs already exist as single-file, multi-platform, command-line executables?