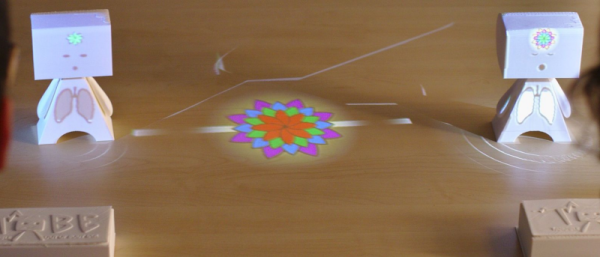

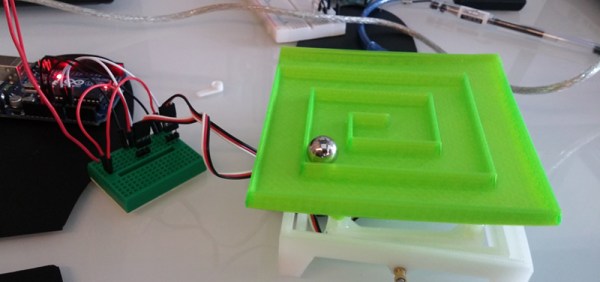

TOBE is a toolkit for creating Tangible Out-of-Body Experiences, developed by [Renaud Gervais] and others and presented at TEI ’16: Tenth International Conference on Tangible, Embedded, and Embodied Interaction. The goal is to expose users’ inner states through physiological signals such as heart rate or brain activity. The toolkit proposal covers three parts: a 3D printed avatar that displays visual representations of the signals from physiological sensors (ECG, EDA, EEG, EOG and a breathing monitor), the construction and use of these sensors on open hardware platforms such as Bitalino or OpenBCI, and signal processing software using OpenViBE.

In their research paper, the team identified signals and mental states, which they organized into three types:

- States perceived by self and others, e.g. eye blinks. These signals may seem redundant, since one can simply look at the person to see them, but they are crucial for associating the feedback with a user.

- States perceived only by self, e.g. heart rate or breathing. Mirroring these signals gives the feedback a sense of presence.

- States hidden to both self and others, e.g. mental states such as cognitive workload. These metrics hold the most promising applications since they are mostly unexplored.

By visualising their own inner states and with the ability to share them, users can develop a better understanding of themselves as well as others. Analysing their avatar in different contexts allows a user to see how they react in different situations, such as under stress, while working or while playing. When several users are brought together, they can see, for example, how each of them responds to the same stimuli.