[Nathan] is a mobile application developer. He was recently debugging one of his new applications when he stumbled into an interesting security vulnerability while running a program called Charles. Charles is a web proxy that allows you to monitor and analyze the web traffic between your computer and the Internet. The program essentially acts as a man in the middle, allowing you to view all of the request and response data and usually giving you the ability to manipulate it.

While debugging his app, [Nathan] realized he was going to need a ride soon. After opening up the Uber app, it occurred to him that he was still inspecting his traffic. He decided to poke around and see if he could find anything interesting. Communication from the Uber app to the Uber data center is done via HTTPS. This means that it’s encrypted to protect your information. However, if you are trying to inspect your own traffic you can use Charles to sign your own SSL certificate and decrypt all the information. That’s exactly what [Nathan] did. He doesn’t mention it in his blog post, but we have to wonder if the Uber app warned him of the invalid SSL certificate. If not, this could pose a privacy issue for other users if someone were to perform a man-in-the-middle attack on an unsuspecting victim.

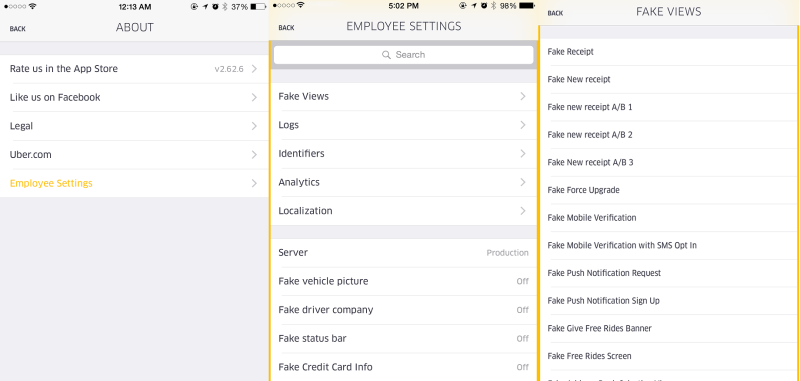

[Nathan] poked around the various requests until he saw something intriguing. There was one repeated request that is used by Uber to “receive and communicate rider location, driver availability, application configurations settings and more”. He noticed that within this request, there is a variable called “isAdmin” and it was set to false. [Nathan] used Charles to intercept this request and change the value to true. He wasn’t sure that it would do anything, but sure enough this unlocked some new features normally only accessible to Uber employees. We’re not exactly sure what these features are good for, but obviously they aren’t meant to be used by just anybody.

You have to install a new SSL cert on the device to make it work. Same if you use mitmproxy or other similar software.

Has Uber fixed this? Perhaps we can all start screwing around with this?

Not sure why you say you have to install a new cert. You only need to install the Charles CA cert to avoid certificate warnings.

The real flaw here is that they seem to think HTTPS is application security. They need to at least use public/private key signature hashes for each API call/response. Information could still be seen by a man-in-the-middle attack, but the signatures would prevent tampering. You hash all the data in the call/response, encrypt with your half of the key, then add it to the call/response. Super simple to implement, and input verification is fast. If you add a timestamp in the call/response, then you even get simple replay attack prevention by checking that the timestamp is within some short window of time. This would of course be on TOP of HTTPS.
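As a rough illustration of the signing-plus-timestamp idea in this comment, here is a minimal Python sketch. It swaps in an HMAC with a shared secret instead of a public/private key signature, because the standard library has no asymmetric signing; the names `SHARED_SECRET`, `MAX_SKEW_SECONDS`, `sign_request` and `verify_request` are all hypothetical and have nothing to do with Uber's real API. A secret shipped inside an app can be extracted, so treat this as a demonstration of the mechanism only.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

SHARED_SECRET = b"demo-secret-not-for-production"  # hypothetical per-client key
MAX_SKEW_SECONDS = 30  # hypothetical freshness window


def _canonical(body: dict) -> bytes:
    # Serialize deterministically so both sides hash identical bytes.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def sign_request(payload: dict, secret: bytes, now: Optional[float] = None) -> dict:
    # Add a timestamp to the payload, then sign the whole body.
    stamp = int(time.time() if now is None else now)
    body = dict(payload, timestamp=stamp)
    signature = hmac.new(secret, _canonical(body), hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_request(message: dict, secret: bytes, now: Optional[float] = None) -> bool:
    body = message["body"]
    expected = hmac.new(secret, _canonical(body), hashlib.sha256).hexdigest()
    # Constant-time comparison; any change to the body changes the digest.
    if not hmac.compare_digest(expected, message["signature"]):
        return False
    current = time.time() if now is None else now
    # Reject messages whose timestamp falls outside the allowed window.
    return abs(current - body["timestamp"]) <= MAX_SKEW_SECONDS
```

Flipping `isAdmin` in the body after signing makes `verify_request` return `False`, as does presenting the same message after the window has passed.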

All of that could be proxied by the MITM. All they need to do is decompile your app and get your keys.

The server key would never be in the compiled app. It’s the basis of public/private key encryption. You could also generate a different key set for each client, so decompiling won’t get you the client key either. Many public APIs work on this mechanism.

What you are suggesting would add absolutely nothing to the security already provided by SSL. Once the security of the network layer has been broken in the manner described by the article, the MITM could simply replace both parties’ public keys with its own and re-sign every message with its own private keys.

Installing the Charles CA cert is a privileged operation that (if implemented correctly by the mobile device) requires some sort of trusted access to the “unsuspecting victim’s” device for the purpose of installing a new trusted root. Without installing the CA cert on the victim’s device, communication flowing through the proxy would be denied by the app, or subject to a security warning.

Certificate pinning within the mobile app would provide a further barrier to attack by requiring the attacker to install a custom version of the app before the MITM would go unnoticed. Of course once the attacker is to the point of installing a custom app, they have as much power as any man in the middle.
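For readers unfamiliar with pinning, a minimal Python sketch of the idea follows. It pins the SHA-256 hash of the whole DER-encoded certificate, which is a simplification (many real deployments pin the public key instead), and `PINNED_FINGERPRINTS`, `is_pinned` and `peer_certificate_is_pinned` are hypothetical names. The pinned value here is a placeholder, not any real service's certificate.

```python
import hashlib
import socket
import ssl

# Hypothetical pin set baked into the app at build time (placeholder value).
PINNED_FINGERPRINTS = frozenset(
    {hashlib.sha256(b"placeholder certificate bytes").hexdigest()}
)


def fingerprint(der_cert: bytes) -> str:
    # SHA-256 over the DER-encoded certificate, as a hex string.
    return hashlib.sha256(der_cert).hexdigest()


def is_pinned(der_cert: bytes, pins=PINNED_FINGERPRINTS) -> bool:
    # Accept only certificates whose fingerprint is in the pin set.
    return fingerprint(der_cert) in pins


def peer_certificate_is_pinned(host: str, port: int = 443,
                               pins=PINNED_FINGERPRINTS,
                               timeout: float = 5.0) -> bool:
    # Normal CA validation still runs; the pin is an additional check.
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            der_cert = tls.getpeercert(binary_form=True)
    return is_pinned(der_cert, pins)
```

With a check like this, a proxy certificate signed by a freshly installed root would pass CA validation but fail the pin comparison, since its bytes differ from the pinned certificate.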

Also, please do not use timestamps as a means to prevent replay attacks. A lot of mischief can happen in a “short window of time”, or even between the ticks of your system clock.
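One common alternative to a timestamp window is a server-issued, single-use nonce. The Python sketch below is only an illustration under that assumption; `NonceRegistry` is a hypothetical name, and a real service would also need expiry and shared storage across servers.

```python
import secrets


class NonceRegistry:
    """Tracks nonces the server has issued but not yet seen used."""

    def __init__(self) -> None:
        self._outstanding = set()

    def issue(self) -> str:
        # Hand the client an unpredictable value to include in its next request.
        nonce = secrets.token_hex(16)
        self._outstanding.add(nonce)
        return nonce

    def redeem(self, nonce: str) -> bool:
        # A nonce is valid exactly once; a replayed request fails here.
        if nonce in self._outstanding:
            self._outstanding.remove(nonce)
            return True
        return False
```

Because each nonce is removed on first use, a captured request cannot be replayed at all, regardless of how quickly the attacker resends it.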

There may be no flaw at all. This attribute tells the iPhone client to display certain extra options, but it doesn’t say whether or not the Uber server accepts those values from a non-admin account.

I hate to admit it, but I was confused as heck until I figured out that Uber is an application and not an adjective in this article.

I’m definitely not a city guy.

One of the problems with ALL CAPS headlines!

I wonder how many other apps have such a vulnerability?

same here

“isAdmin” what the literal fuck?

I have made a lot of silly mistakes when programming before, but I have never asked the client nicely if the user has admin privileges.

Surely there’s an XKCD comic to be had here somewhere.

It’s not quite as stupid as it seems; it’s a flag sent from the server to the client, instructing the client if it should display the admin/debug menu. Most of the admin/debug commands allow messing with what parts of the UI are exposed, etc.

I would HOPE that any subsequent messing with things clients shouldn’t be able to modify, such as changing the cost of a ride, would be rejected by the server as invalid.

All I’d have to do is sit in the coffee shop around the corner from their office running a man-in-the-middle attack against the wifi. Once a worker with the right privileges accidentally runs their app on the wifi, I now have their session information. With not even an encrypted fingerprint on each request/response to verify authenticity, nothing could stop me.

If anyone claims this seems far-fetched, then you’ve never met a hacker on a mission. Actually, if the company doesn’t have good security on their wired network, then a hacker can talk their way into the building (i.e. pest control) and hide a small network device to intercept traffic. Google the Hak5 Pineapple for reference.

If you’re a 3rd party intercepting SSL traffic between client and server, you’re just going to see encrypted data. That’s the whole point of SSL. The Hak5 Pineapple doesn’t change that at all.

It’s nice that they left the name nice and descriptive though. If it had been called something nondescript someone would have had to go through and try everything, and who knows what else that would have thrown up :)

Security through obscurity only makes future improvements by other developers harder, not safer.

They have it probably for debugging reasons but they’ve actually made their app look like it was programmed by amateurs (with the bad meaning of the word).

It is also possible that the isAdmin flag is used by the client to display options. The server should still refuse to accept them of course, so it may not be such a big goof.

You have always been able to do this sort of thing with a self-signed cert and a proxy, à la café access point, collecting people’s Facebook data etc. :)

The real vulnerability here is that Über’s account access level security is just a flag that is sent from the _client_. Like, what the fucking shit Über?

My position in my workplace is writing back-end server software. Before starting work at the place I’m at, I had never written a back-end before, but even inexperienced, I still developed access control rules and permission sets covering both access to routes and per-row database permissions. The client doesn’t get to dictate anything; the server dictates, and the client can’t do anything unless they really are logged in as the administrator.
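The "server dictates" rule can be sketched in a few lines of Python. Everything here is hypothetical (`ROLES_BY_SESSION`, `ADMIN_ACTIONS`, `handle_request`, the action names) and says nothing about how Uber's back end actually works; the point is only that the role comes from server-side session state and any `isAdmin` value in the request is ignored.

```python
# Hypothetical server-side session store: token -> role.
ROLES_BY_SESSION = {"token-rider": "rider", "token-staff": "admin"}

# Hypothetical actions that only administrators may perform.
ADMIN_ACTIONS = {"set_fare", "toggle_debug_menu"}


def handle_request(session_token: str, request: dict) -> dict:
    # The role is looked up on the server; request["isAdmin"] is never read.
    role = ROLES_BY_SESSION.get(session_token)
    if role is None:
        return {"status": 401}  # unknown or expired session
    if request.get("action") in ADMIN_ACTIONS and role != "admin":
        return {"status": 403}  # authenticated, but not allowed
    # The flag sent back is purely a display hint for the client UI.
    return {"status": 200, "isAdmin": role == "admin"}
```

A rider who sends `{"action": "set_fare", "isAdmin": True}` still gets a 403, because the forged flag never enters the decision.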

So if I’m a whippersnapper, just which breed of old cod is shacked up at Über to do something as stupid as this? Of course as somebody else already mentioned, it could just be the client displaying the extra options, which is not a hack or a discovery of any kind, it’s just how it’s done and the app developer who wrote the original post would surely have realised this, so I’m assuming that the server did actually give him access…

Another person who doesn’t care enough to learn. I have seen circumvention by environmental values quite frequently in large commercial products under test.

Seems they don’t always teach you how to think like a bad person in official courses, and nowadays security auditing is just a cost centre to be cut out of the budget to save money.

Most corporate networks MITM SSL like this with a default cert pushed out to company machines as part of their DPI filtering, so the users don’t even get a warning or know it’s happening.

We only know this is in Uber because it’s the sort of tool geeks play with, and many eyes means sooner or later someone has noticed it and had a tinker. Out in the real world, it’s endemic.

The isAdmin flag is sent from the _server_ to the _client_ to enable a debug menu in the client – not vice-versa.

“So if I’m a whippersnapper, just which breed of old cod is shacked up at Über to do something as stupid as this? Of course as somebody else already mentioned, it could just be the client displaying the extra options, which is not a hack or a discovery of any kind, it’s just how it’s done and the app developer who wrote the original post would surely have realised this, so I’m assuming that the server did actually give him access…”

Even if that is the case, why isn’t the request properly challenged with some kind of two-factor authentication? I would think at the very least they would set up the server to issue a challenge of the request upon failing a CRC as the “isAdmin” flag was altered. Or are they not even concerned with MITM attacks anymore?

“but we have to wonder if the Uber app warned him of the invalid SSL certificate.”

This.

So who is accepting the invalid certificate, iOS or the Uber app? This is why secure apps scare me; at least in a web browser you can see that the cert is invalid. When going through an API, so many developers go out of their way to make sure the validations are disabled to get through testing.

Consider this recent web search I did while working on a credit card project: “httpwebrequest certificate validation”. Notice that 9/10 results are “How to disable”.

The SSL session was encrypted with a valid cert. The cert was signed by a trusted root certificate authority. It just so happened the signing cert was created by Charles, and the trust was created only after the proxy’s cert was installed as a trusted root authority by the proxy’s owner, but that doesn’t make it any less a valid cert.

Why do mobile apps use the CA system at all?

They can pin their trusted cert when they are compiled!

The local proxy negotiates the connection and then passes the traffic as https. The proxy signs its own snakeoil root cert that you explicitly trust by installing the cert onto your browser or keystore/CA store. If you don’t take that step, the browser will indeed report a MITM or untrusted cert. The browser trusting the fake cert does not indicate an explicit vulnerability. The parameter passing, however, is much wow. Also, if you pin a cert at compile time you’re assuming that the cert won’t be revoked or expire before your user updates the app. If it’s a local app install, you’ll either leave your users vulnerable, or you’ll lose user base.

Feel free to flame if I’m a moron.

The point of the article is that you can manipulate the client to enable built-in admin/employee access. The problem is not in SSL or faked certificates, but that the functionality is there and is not stripped from the shipped client application.

I experienced a MITM on my Android; they intercepted my ability to receive trips. I did a workaround but am still not sure how it was done.