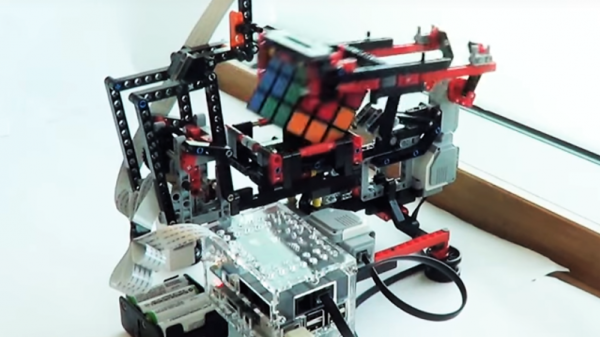

We’ve all seen videos of Rubik’s cube champions who can solve the puzzle in less than 5 seconds. And there are cube-twisting robots that can solve the cube even faster, often in under a second. This Rubik’s cube solver is not one of those robots, but it’s still pretty cool.

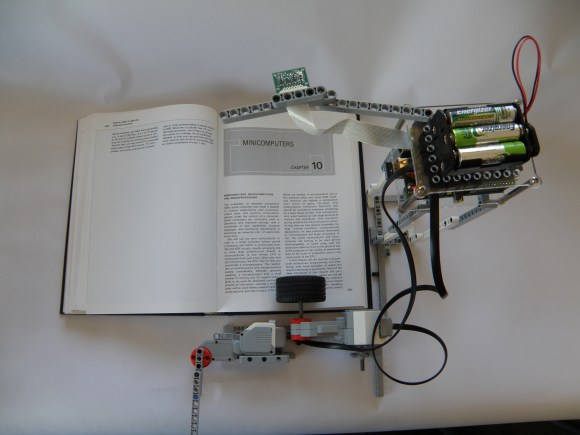

The reason we like Dexter Industries’ “BricKuber” is not its lightning speed; it takes a minute or two to solve the puzzle. What we like is the simplicity of its approach to manipulating the cube. Built from LEGO parts, including Mindstorms motors and a BrickPi controller, the BricKuber uses only two motors to work the cube. One motor powers a square turntable on which the cube sits. The other powers an arm that does double duty: it either clamps the cube so the turntable can rotate a layer, or rakes the cube to flip it 90° on the turntable. With a Pi Cam overhead, the rig images all six faces, calculates a solution, and then flips and twists the cube to solve it. It’s simultaneously mind-boggling and strangely relaxing to watch.
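To make the two-motor trick concrete, here is a minimal Python sketch of how a solver's output (face turns such as R or U) might be translated into the only three things this kind of rig can do: spin the whole cube on the turntable, flip it with the arm, or clamp and twist the bottom layer. The flip direction, the position names, and the functions `spin`, `flip` and `plan` are our own hypothetical choices for illustration, not code from the BricKuber repository.

```python
from typing import Dict, List, Tuple

# Hypothetical model of a two-motor cube robot. The cube sits on a turntable;
# the arm either clamps the upper layers or rakes the cube over.
Orientation = Dict[str, str]  # robot position -> cube face label (U, D, F, B, L, R)
Op = Tuple[str, int]          # ("spin", quarters) | ("flip", 1) | ("twist", quarters)

# Quarter turns (positive = clockwise seen from above) that bring a side face
# to the back, where one flip puts it on the turntable.
SPINS_TO_BACK = {"back": 0, "left": 1, "front": 2, "right": -1}


def spin(o: Orientation, quarters: int) -> Orientation:
    """Turn the whole cube on the turntable (arm not clamping).
    One clockwise quarter moves front->left, left->back, back->right, right->front."""
    for _ in range(quarters % 4):
        o = {**o, "left": o["front"], "back": o["left"], "right": o["back"], "front": o["right"]}
    return o


def flip(o: Orientation) -> Orientation:
    """Rake the cube over 90 degrees. Assumed direction: back->bottom->front->top->back."""
    return {**o, "bottom": o["back"], "front": o["bottom"], "top": o["front"], "back": o["top"]}


def plan(moves: List[Tuple[str, int]], o: Orientation) -> Tuple[List[Op], Orientation]:
    """Translate face turns such as ("R", 1) or ("U", -1) into robot operations.

    Each face is first brought to the bottom; then the arm clamps the upper two
    layers and the turntable twists the bottom one. The quarter count is in cube
    notation (seen from outside the face, i.e. from below), so the motor turns
    the opposite way as seen from above.
    """
    ops: List[Op] = []
    for face, quarters in moves:
        pos = next(p for p, f in o.items() if f == face)  # where is that face now?
        if pos == "top":
            o = flip(flip(o))
            ops += [("flip", 1), ("flip", 1)]
        elif pos != "bottom":
            k = SPINS_TO_BACK[pos]
            if k:
                o = spin(o, k)
                ops.append(("spin", k))
            o = flip(o)
            ops.append(("flip", 1))
        ops.append(("twist", quarters))  # clamped: only the bottom layer turns
    return ops, o


start = {"top": "U", "bottom": "D", "front": "F", "back": "B", "left": "L", "right": "R"}
ops, now = plan([("R", 1), ("U", 1)], start)
print(ops)  # [('spin', -1), ('flip', 1), ('twist', 1), ('flip', 1), ('twist', 1)]
```

Tracking orientation is what makes a scheme like this work: after every flip the faces have moved, so the planner has to look up where the next face ended up rather than assume the starting layout. Spinning a right-hand face by -1 quarter instead of +3 simply saves turntable travel.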

All the code is open source, and we strongly suspect a similar and possibly faster robot could be built without the LEGO parts. You might even be able to build one with popsicle sticks and an Arduino.


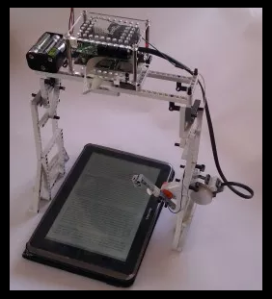

This is not an application that runs on the tablet; it is a completely separate device that ‘reads’ the tablet screen. As you might guess from the BrickPi name, the brains behind the operation is a Raspberry Pi. A camera photographs the displayed text, and the Raspberry Pi converts that image to text using optical character recognition (OCR). A text-to-speech engine then speaks the text in a robotic-sounding voice. To turn the page, the Raspberry Pi drives a Lego Mindstorms arm that swipes across the tablet screen, and the whole process repeats.
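The photograph, recognize, speak, swipe cycle is essentially a loop with four pluggable stages. The sketch below assumes hypothetical callables for each stage and an assumed stopping rule (no text, or the same text twice, meaning the swipe did not advance the page). On real hardware the stages might be backed by a camera library, an OCR engine such as Tesseract and a speech synthesizer such as eSpeak, but we don't know what the project itself uses.

```python
from typing import Callable, Optional


def read_book(
    capture: Callable[[], bytes],     # hypothetical: photograph the tablet screen
    ocr: Callable[[bytes], str],      # hypothetical: image -> text
    speak: Callable[[str], None],     # hypothetical: text -> audio
    turn_page: Callable[[], None],    # hypothetical: swipe the Mindstorms arm across the screen
    max_pages: int = 1000,
) -> int:
    """Photograph, recognize, speak, swipe, repeat. Returns the number of pages read.

    Assumption: stop when OCR yields no text or the same text as the previous
    page (e.g. the swipe had no effect at the end of the book).
    """
    previous: Optional[str] = None
    pages = 0
    while pages < max_pages:
        text = ocr(capture()).strip()
        if not text or text == previous:
            break
        speak(text)
        pages += 1
        previous = text
        turn_page()
    return pages
```

Passing the stages in as functions keeps the loop testable on a desktop with fakes, and lets each piece (camera, OCR, voice, arm) be swapped independently.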