When our new computer overlord arrives, it’ll likely give orders using an electromagnetic speaker (or, more likely, by texting instead of talking). But for a merely artificial human being, shouldn’t we use an artificial mouth with vocal cords, nasal cavity, tongue, teeth and lips? Work on such a thing is scarce these days, but [Martin Riches] developed a delightful one called MotorMouth between 1996 and 1999.

It’s delightful for its use of a Z80 processor and assembly language, things many of us remember fondly, as well as its transparent side panel, allowing us to see the workings in action. As you’ll see and hear in the video below, it works quite well given the extreme difficulty of the task.

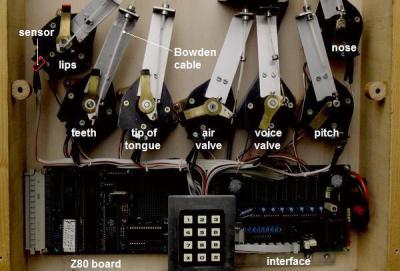

The various parts of the mouth are moved by eight stepper motors, seven of which sit below the mouth along with the electronics. They use Bowden cables to transfer their movements up to the mouth parts. One of these motors opens and closes a valve that lets some air flow through a nasal cavity located just above the mouth cavity. The eighth motor rotates the tongue from the back to the front of the mouth cavity and isn’t visible. At the very front of the mouth are two block-like pieces that move up and down: one representing the lips and, behind it, one for the teeth.

A blower supplies the air. Unlike in humans, there are two air paths from the blower. One passes through a reed for pitch control, and the other can be opened to allow more airflow, bypassing the reed. That extra airflow is needed for unvoiced sounds such as F, S and T.

[Martin’s] webpage includes early drawings as well as an explanation of how he represents the eight motors in the assembly code as eight bits. For each sound that can be made, the corresponding bits for the motors that need to be turned on are set, along with data for where each stepper motor should be turned to and how fast. He also includes a sample of the assembly code, though not all of it is there.
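The Z80 code itself isn’t reproduced here, so what follows is only a Python sketch of the idea, assuming a sound table in which each entry stores an 8-bit motor mask plus one (target, speed) pair per set bit. The `Sound` record, `motors_in_mask`, `tick` and the “steps per tick” speed model are all hypothetical, not taken from [Martin’s] code.

```python
from dataclasses import dataclass

NUM_MOTORS = 8  # one bit per stepper motor


@dataclass(frozen=True)
class Sound:
    """Hypothetical sound-table entry: which motors move, where to, and how fast."""
    mask: int                           # bit n set -> motor n takes part in this sound
    moves: tuple[tuple[int, int], ...]  # (target_step, steps_per_tick), lowest set bit first


def motors_in_mask(mask: int) -> list[int]:
    """Return the indices of the motors whose bits are set in an 8-bit mask."""
    if not 0 <= mask <= 0xFF:
        raise ValueError("mask must fit in 8 bits")
    return [n for n in range(NUM_MOTORS) if mask & (1 << n)]


def tick(positions: list[int], sound: Sound) -> list[int]:
    """Advance each active motor at most steps_per_tick steps toward its target."""
    active = motors_in_mask(sound.mask)
    if len(active) != len(sound.moves):
        raise ValueError("need exactly one (target, speed) pair per set bit")
    new = list(positions)
    for motor, (target, speed) in zip(active, sound.moves):
        delta = target - new[motor]
        # Clamp the move to this motor's speed while keeping its direction.
        new[motor] += max(-speed, min(speed, delta))
    return new


# Motors 0 and 2 take part (mask 0b00000101); motor 0 moves faster.
s = Sound(mask=0b00000101, moves=((10, 4), (3, 2)))
positions = tick([0] * NUM_MOTORS, s)
```

Calling `tick` repeatedly with the same `Sound` walks each selected motor to its own target at its own speed while the unselected motors stay put, which is one plausible reading of “where each stepper motor should be turned to and how fast.”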

And while we’re talking about doing voice from scratch, how about making the Z80 computer from scratch too, like [Lumir Vanek] did with his Rum 80 PC? Or you can go one step further, as [Scott Baker] did, and make not only the Z80 computer but also a speech synthesizer for it.

Extremely racist comment. I have no words for your bigotry.

It’s called misogyny sweetheart/ cupcake/ doll face

Nothing was said about race whatsoever… Sexist yes, but not racist.

Racist? I think the word we are looking for is sexist.

For me, the prime advantage is that they don’t get jealous when you have more than one. Girlfriends don’t like being part of a harem in this part of the world.

[citation needed]

Should be “vocal cords”; chords are what you play on a guitar.

Thanks. Fixed. That one’s a bad habit I can’t seem to break.

All this trouble, and still feeding it with a sawtooth or some other simple 8-bit synthesized waveform. Next up should be some artificial vocal cords.

It just sounds like an 8-bit synthesized waveform. It’s actually fed by a blower, which is hidden behind so you don’t see it, and there’s a reed in the main airflow path. The pitch is controlled by one of the servos manipulating the tension of the reed. You can see it better on his webpage.

That’s what I like about this one: it tries to reproduce so many of the features the way we humans do.

Comments got off to a rocky start on this one. I’ve deleted a couple that were offensive and contacted the people who left them. (This explains the mismatch in the comment count.) Let’s make Hackaday a welcoming environment for all by acting that way in our discussions. Thank you.

Yikes. They must have been pretty bad…

Yeah. https://hackaday.io/project/11551-hackadaybashorg/log/53041-mansplaining

Aww come on, the adult toy turned vocal bot was not even NSFW.

Those comments were just “FACEPALM”.

Fascinating device. It has always been amazing to me how complex things we take for granted are. Things like speech and hearing (along with the interpretation of what is heard) are so complex that our best attempts to imitate them fall quite short. That being said, this is a great attempt at imitating speech.

99% Invisible did a great episode on speech reproduction machines:

http://99percentinvisible.org/episode/vox-ex-machina/

Amazing! It speaks wookie!!!

https://www.sideshowtoy.com/photo_902759_thumb.jpg

Speech synthesis is maddening.

I worked on a talking scientific calculator prototype about 40 years ago.

Part of the trick was creating a new vocabulary from only the words of an existing talking 4-function calculator (Speech Plus).

Splicing together “si” from “six”, and the “ine” from “nine” yielded “sin”.

Stealing the “k” from “equals”, and the “oh” from “zero” gave “co” and hence “cosine”.

You get the picture.
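The splicing trick boils down to cutting sample ranges out of recorded words and concatenating them. Here is a minimal Python sketch, assuming each word is stored as a plain list of PCM samples; the `splice` helper, the toy “recordings” and the cut points are all made up for illustration and are not values from the Speech Plus calculator.

```python
def splice(library: dict[str, list[int]], pieces: list[tuple[str, int, int]]) -> list[int]:
    """Build a new word by concatenating slices of recorded words.

    Each piece is (source_word, start_sample, end_sample), end exclusive.
    """
    out: list[int] = []
    for word, start, end in pieces:
        samples = library[word]
        if not 0 <= start < end <= len(samples):
            raise ValueError(f"bad cut {start}:{end} in {word!r}")
        out.extend(samples[start:end])
    return out


# Hypothetical stand-in recordings; real ones would be thousands of samples long.
library = {
    "six": [1, 2, 3, 4, 5, 6],
    "nine": [7, 8, 9, 10, 11, 12],
}

# "si" from the start of "six" plus "ine" from the end of "nine" -> "sin"
sin = splice(library, [("six", 0, 3), ("nine", 2, 6)])
```

The hard part, as the comment goes on to say, isn’t the concatenation but finding cut points whose joins listeners actually hear as the intended word.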

The maddening part is that you become insensitive to the words after you’ve heard them a few dozen times, so you’re constantly dragging in your office mate or secretary for a “test”.

You’ve convinced yourself you hear “cosine-pi”; your friends hear “go and die” or “gossiping”.

Oh well.

What is that phenomenon called? It’s like confirmation bias of the senses.

When Mattel’s “Blue Sky Rangers” were doing voice digitizing for the Intellivoice games for the Intellivision, in the game “TRON Solar Sailer” they needed the word “can’t”. Some on the team insisted it sounded like the word spelled with a u instead of an a. The game shipped and there weren’t any complaints from customers.

They weren’t doing voice synthesis. The cartridges had digitized audio and the Intellivoice was mostly a passthrough, but with a DAC to take the audio data and feed it into the analog audio input line that had been put on the cartridge port, well before anyone had thought of having voice in the games.

To cram as much voice data as possible into the limited space, they used manually optimized variable bit rate encoding so that different parts of each word had a different rate, saving the highest rate for the areas requiring the best fidelity.
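To see why varying the rate within a word saves space, compare the bytes needed at one constant rate with per-segment rates. This sketch models the rate change as a change in sample rate at a fixed bit depth; the segment durations, rates and the 4-bit depth are invented for illustration, since the actual Intellivoice encoding parameters aren’t given here.

```python
def segment_bytes(duration_ms: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bytes needed to store one stretch of audio at a fixed rate and bit depth."""
    samples = duration_ms * sample_rate_hz // 1000
    return (samples * bits_per_sample + 7) // 8  # round up to whole bytes


BITS = 4  # assumed bits per sample

# Hypothetical word split into (duration in ms, sample rate in Hz):
# the consonant gets the highest rate, the vowel and tail get lower ones.
segments = [(50, 8000), (200, 4000), (100, 2000)]

variable = sum(segment_bytes(d, r, BITS) for d, r in segments)
constant = sum(segment_bytes(d, 8000, BITS) for d, _ in segments)
print(variable, constant)
```

With these made-up numbers the variable-rate word needs half the storage of the constant-rate one, and the saving comes entirely from not spending the top rate on stretches that don’t need it.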

As for the mis-heard “can’t”, the programmers used it in an “adult” version of Astrosmash that of course was not released on a cartridge.

Related to that, “TRON Solar Sailer” gave out access codes. The programmer’s boss accused him of deliberately making the game produce codes ending in 69 a high percentage of the time. The programmer said, “Look, if I was going to put a ’69’ in the game, I’d put it right on the title screen!” Then he proceeded to do exactly that: the string of 1’s and 0’s on the title screen is 69 in binary. When someone finally pointed it out, he said, “But that’s 45.” It is, in hexadecimal: 69 decimal is 45 hex (4 × 16 + 5 = 69), so both readings are the same number.

http://www.intellivisionlives.com/bluesky/games/credits/voice2.shtml
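The arithmetic behind that title-screen joke is easy to check in Python. The bit pattern below is simply 69 written in binary, not a transcription of the actual title screen, which isn’t shown here.

```python
value = 0b1000101        # 69 written out in binary
print(value)             # 69
print(hex(value))        # 0x45
print(int("45", 16))     # 69: hex 45 read back as decimal
```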

Anybody else here reminded of the cloisonne and platinum head terminal from Gibson’s Neuromancer?

So nobody has taken this and attempted a smaller, faster and clearer-sounding device using an Arduino?

A way to vary the frequency of the reed would make it possible to sound more natural.

Those electrically activated polymers would make for a compact design.

Isn’t that what they’re doing with one of the servos by using it to change the tension on the reed?

It sounds like one of these trying to talk :D

It’s pretty impressive, actually.

IMG tags are a no-go, I guess… http://img0086.popscreencdn.com/99351322_vintage-pocket-noise-maker-mooing-cow-farm-childs-toy-.jpg