If you want to troll your colleagues, you can always put a sticker on the office coffee maker that says ‘voice activated’. Now [edholmes2232] has made that actually come true. With Speech2Touch, he grafts voice control onto a Franke A600 coffee machine using an STM32WB55 USB dongle and some clever firmware hacking.

The office coffee machine has been a target for hacking for years and years. Nearly 35 years ago, at Cambridge University, a webcam served a live view of the office coffee pot, so that nobody made the trip to the pot for nothing. The funny but practically useless HTTP status 418 was brought to life so that a server could state it is in fact a teapot, and therefore refuses to brew coffee. Enter this hack, which could get you your coffee by shouting from your desk, if only your arms were long enough to hold your cup in place.

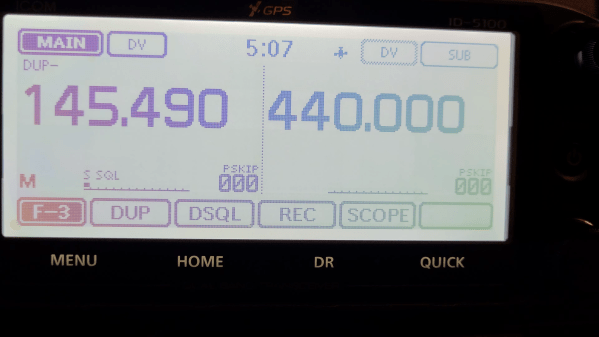

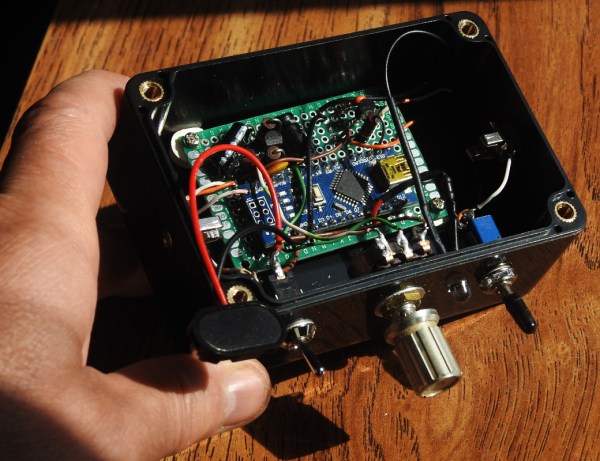

Back to the details. The machine itself doesn’t support USB keyboards, but does accept a USB mouse, most likely as a fallback in case the touchscreen becomes unresponsive. That loophole is enough: by emulating touchscreen HID packets instead of mouse movement, the hack avoids clunky cursors and delivers a slick ‘sci-fi’ experience. The STM32 listens through an INMP441 MEMS mic, hands speech recognition to Picovoice, and then translates voice commands straight into touch inputs. From then on, simply speaking to the machine taps the buttons for you.
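To make the voice-to-touch step a bit more concrete, here is a minimal sketch in C of how a recognized command might be turned into a single-contact touch report. This is not the Speech2Touch source: the intent names, the button coordinates, the 6-byte report layout, and the helpers `intent_to_touch` and `pack_touch_report` are all assumptions made up for illustration. A real device's report layout is defined by whatever HID report descriptor it presents to the host.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical logical range and report size; a real HID report
 * descriptor would define these, and they may differ. */
#define TOUCH_LOGICAL_MAX 4095u
#define TOUCH_REPORT_LEN  6u

/* Hypothetical single-contact touch report. */
typedef struct {
    uint8_t  tip_switch;  /* 1 = finger down, 0 = finger lifted */
    uint8_t  contact_id;
    uint16_t x;           /* 0..TOUCH_LOGICAL_MAX */
    uint16_t y;           /* 0..TOUCH_LOGICAL_MAX */
} touch_report_t;

/* Made-up mapping from a voice intent to a button position,
 * given in thousandths of the screen width and height. */
typedef struct {
    const char *intent;
    uint16_t    x_permille;
    uint16_t    y_permille;
} button_map_t;

static const button_map_t BUTTONS[] = {
    { "espresso",   250, 400 },
    { "cappuccino", 500, 400 },
    { "hot_water",  750, 400 },
};

/* Scale a per-mille screen position to the logical coordinate range. */
static uint16_t scale(uint16_t permille)
{
    return (uint16_t)(((uint32_t)permille * TOUCH_LOGICAL_MAX) / 1000u);
}

/* Fill *out with a "finger down" report for the given intent.
 * Returns 0 on success, -1 if the intent is unknown. */
int intent_to_touch(const char *intent, touch_report_t *out)
{
    assert(intent != NULL && out != NULL);
    for (size_t i = 0; i < sizeof BUTTONS / sizeof BUTTONS[0]; i++) {
        if (strcmp(intent, BUTTONS[i].intent) == 0) {
            out->tip_switch = 1;
            out->contact_id = 0;
            out->x = scale(BUTTONS[i].x_permille);
            out->y = scale(BUTTONS[i].y_permille);
            return 0;
        }
    }
    return -1;
}

/* Serialize a report into bytes, little-endian as is usual for HID.
 * Returns the number of bytes written. */
size_t pack_touch_report(const touch_report_t *r,
                         uint8_t buf[TOUCH_REPORT_LEN])
{
    assert(r != NULL && buf != NULL);
    buf[0] = (uint8_t)(r->tip_switch & 0x01u);
    buf[1] = r->contact_id;
    buf[2] = (uint8_t)(r->x & 0xFFu);
    buf[3] = (uint8_t)(r->x >> 8);
    buf[4] = (uint8_t)(r->y & 0xFFu);
    buf[5] = (uint8_t)(r->y >> 8);
    return TOUCH_REPORT_LEN;
}
```

A tap would then be two reports in a row: one with `tip_switch` set to 1 at the button's position, followed by the same report with `tip_switch` cleared to 0, each handed to whatever USB stack call sends an interrupt IN report. Keeping the mapping in a table means supporting a different machine would mostly come down to swapping the coordinates.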

It’s a neat example of sidestepping SDK lock-in. No reverse-engineering of the machine’s firmware, no shady soldering inside. Instead, it’s USB-level mischief, modular enough that the same trick could power voice control on other touchscreen-only appliances.