Deep Neural Networks can be pretty good at identifying images — almost as good as they are at attracting Silicon Valley venture capital. But they can also be fairly brittle, and a slew of research projects over the last few years have been working on making the networks’ image classification less likely to be deliberately fooled.

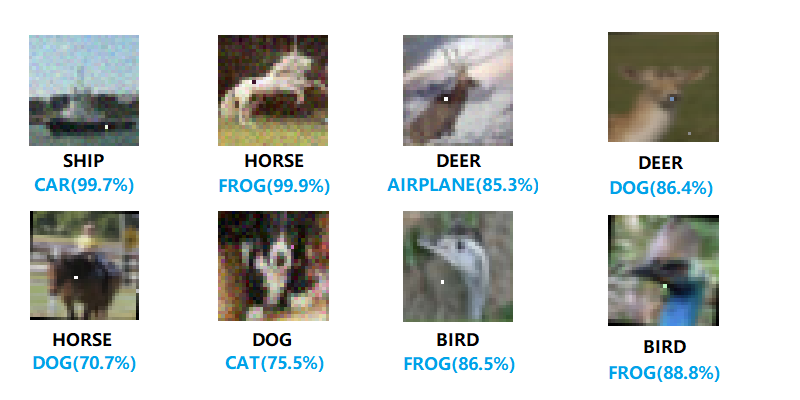

One particular line of attack involves adding particularly-crafted noise to an image that flips some bits in the deep dark heart of the network, and makes it see something else where no human would notice the difference. We got tipped with a YouTube video of a one-pixel attack, embedded below, where changing a single pixel in the image would fool the network. Take that robot overlords!

Or not so fast. Reading the fine-print in the cited paper paints a significantly less gloomy picture for Deep Neural Nets. First, the images in question were 32 pixels by 32 pixels to begin with, so each pixel matters, especially after it’s run through a convolution step with a few-pixel window. The networks they attacked weren’t the sharpest tools in the shed either, with somewhere around a 68% classification success rate. What this means is that the network was unsure to begin with for many of the test images — making it flip from its marginally best (correct) first choice to a second choice shouldn’t be all that hard.

This isn’t to say that this line of research, adversarial training of the networks, is bogus. The idea that making neural nets robust to small changes is important. You don’t want turtles to be misclassified as guns, for instance, or Hackaday’s own Steven Dufresne misclassified as a tobacconist. And you certainly don’t want speech recognition software to be fooled by carefully crafted background noise. But if a claim of “astonishing results” on YouTube seems too good to be true, well, maybe it is.

Thanks [kamathin] for the tip!

What? Steven isn’t a tobacconist?

Ren – I think it was because they may have used this picture of Steven :-D

https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcRCnx3P-u_tVjg64kp4B9LnsBW-La6mUke7oCmCVJUraw08DfF24Q

I think you have to have a seperate model, data for visual GIMP like contrast, color adjustents. Maybe the model takes input data from the image as *any function it can.

You can’t see the Catapiller dump truck (the huge ones used in open pit mines) in those low resolution pictures?

Clear as day.

The neural network did get “Cat” right.

The picture of the Dog/Cat actually looks like it resembles both and either a dog and a cat at the same time…. Is this the Schrodinger’s pet?

Additionally:

the second to last picture looks like both a frog climbing on a rock and a head of a generic large bird.

The deer in the top right corner looks like a dog saying “wasn’t me”… i.e. ears in the depressed/guilt emotion position.

Oh, P.s. I’m a human (generically worded, I ain’t gonna start a gender war BTW) who can usually spot many things other people usually just don’t seem to see… even when they blankly stare at what they can’t see.

Train the model again with the problematic images, problem solved :-)

After a few Carmagedon events no one will care any more.

It’s not quite that simple.

These networks are brittle because they’re once-through networks.

How recognition in actual neural networks like the brain works is, upon repeatedly recieving input A, the brain forms a memory of this signal pattern. When the brain recieves something resembling input A, these neural pathways are activated and a weak copy of A is replicated. This signal back-propagates through the network like an echo and mixes with the input signal. If the input continues to be A then the two amplify each other, but if it turns out something else, nothing much happens – the echo dies out.

In this way, whenever something recognizeable is seen, the incoming experience activates all the memories, and the memory that most closely matches the signal gets amplified and starts playing over and over. Therefore, for input A, a thought-object A appears in the brain, and the brain does all its logic and thinking direclty on this thought-object. When object A is present, and object B is present, the combined pattern activates object C… and so-on.

What the artificial neural network is doing is a fair bit simpler. It merely maps from a set of inputs to a smaller set of outputs, like a complex transfer function whose coefficients are found by random trial and error (machine learning). That’s why these disturbances can throw it off the mark. The input is more or less directly mapped to the output, so if you disturb the input in a strategic way, the output changes dramatically.

The reason why they’re this way is because we outsiders can’t see the thought-objects inside the network – the network has to say out loud (output a specific signal) “cat, cat, cat, cat..” whenever there’s a cat in its view, and as we are only requiring the network to do that, the training methods result in a simple transfer function instead of a whole double-guessing feedback network. It’s less complicated to do it that way, so the network passes the training by doing the simple thing rather than doing the right thing.

If we start training the network with these disturbances in place, then the more complex system might emerge, if there’s enough capacity in the network for it to form, and the test is demanding enough that the network can’t “cheat”.

How the network might cheat – suppose it learns to detects the high-contrast error of the single pixel and blocks it by averaging the surrounding pixels in its place – and then it can pass the information through the same transfer function. This way it hasn’t actually learned anything new, except how to deal with this particular error.

So you change the error slightly, and the network fails again.

Remember. These networks are trained by artificial selection/evolution and evolution always cheats. Evolution is lazy, and will only add complexity if it’s absolutely necessary.

That is why it is important to train the model with a large number of images. Also, there is no need to stop training it. Apple photos for example recognizes the photos of you and for those that the model has a confidence below a threshold (but above the lower threshold) it asks for your confirmation. The more images of you you feed it, the better it is recognizing you in the future.

It’s funny how some people make AI seem like it’s perfect in it’s current state and other present it as if it were rubbish…

>”Also, there is no need to stop training it.”

The current neural network models suffer from what’s called catastrophic interference, where the previously trained behaviour is suddenly and completely lost by further training:

https://en.wikipedia.org/wiki/Catastrophic_interference

Apple photos isn’t based on neural networks, but traditional statistical machine learning.

Still you could append the training set with the misclassified pictures and retrain.

Btw thank you for adding to this discussion. It is always interesting to learn new things!

You could, but very quickly your training set is growing to infinity with all sorts of different fudge factors you need to throw in.

One of the models to reduce catastrophic interference that was suggested in the article is to employ two networks, where one is learning the new training set, and the other is tickled with random inputs so it generates “pseudopatterns” which are essentially previously encoded memories, so the new information is being injected into the stream of old information. The network trains itself with new information by repeating stuff it already knows, so it doesn’t lose it – kinda like dreaming.

But this only works in networks where the output is the same as the input, i.e. networks that are self-training to repeat what they hear. This resembles how humans operate, but it’s useless for things like self-driving cars or other practical applications because it doesn’t solve the problem. The network knows what it sees because it remembers the input pattern – YOU don’t, because you still have to interpret the pattern.

It seems like a very simple way to verify the confidence of the result would be to take the original image and transform it slightly in a number of ways and to reclassify it; if the network gives a consistent result, then you can be more confident in the original classification. Eg, slight blur, or sharpen, or scale up or down, or rotate, or mirror, or add noise.

If one pixel or a few can change the meaning – it must be a pretty bad algorithm or a bad classification system, not robust at all – or translated to our brains – we would all be dead by now as we would have judged badly.

A poorly trained one, most likely.

These are 2 different things. Feature extraction comes before training and hasto be adapted to the application.

“we would all be dead by now as we would have judged badly.”

Have you seen the average member of society? #MyBadHumor

Youtube is full of epic-fail compilations where one wonders how we survive as a species.

Then the number of memes made from them… and failblog wouldn’t exist if brains made bad judgements at least once in a while.

I accidentally proved my own point…

the last line should end with:

if brains *never* made bad judgements at least once in a while.

have a look at the examples at Cognimem for example – http://www.cognimem.com/

Use neural networks to determine how many pixels need to be changed to fool neural networks

And use neural networks to trick those neural networks to….

WAIT!

halting problem?

I just have an image of hat veils/netting with pixelated patterns coming into fashion.

https://i.pinimg.com/736x/50/f1/e6/50f1e63667d423bcdf0f8648f0d2f59d–winter-accessories-hair-accessories.jpg

Fake neo dots on the face!

https://pbs.twimg.com/media/Bku_vKvCcAIY8gu.jpg

If your neural network is fooled by changing a single pixel means that your training sucks. When training neural networks, you need to be VERY careful when picking the training data. Otherwise you might train it to distinguish between a cloudy sky and a clear sky while your original plan was to make it able to distinguish between your tanks and the tanks of your enemy.

The problem is, your network is not really able to tell you how it reaches its conclusion and you can’t really tell it what to look for in a given image. If it learned wrong, all you can do is dump it and start from scratch with different training data.

I wonder what it would make of this image. Just stare at it for about a minute or longer. What do you see? It’s an example of a phenomenon known as Troxler’s fading.

https://goo.gl/e5HXoD

Perhaps not so far fetched how Pelant got the Angelatron to get a virus from a pattern he inscribed onto a bone from one of his victims in an episode of “Bones”. If the computer was running a system with self modifying code, designed to enhance recognition of specific things like which sorts of implements made marks on bones… Exploit specific weaknesses by feeding it a pattern that would cause the code to create a modification cascade.

Such an attack could easily be defeated through strict compartmentalizing of functions so that sub-functions that can self modify have un-modifiable boundaries and ports for passing their results to other sections – with sanity checks so bad/malicious data can’t get through.

So easy to fix, just add noise to the image yourself and check the difference in the output to another where you smooth it. So that is a majority output value over 3 inputs. Now you can stop running around in circles waving your hands in the air, mmmkay?

This article is actually an airplane

why would anyone every want to sabotage image detection tech?

unless they want to harden the google picture recaptchas to prevent them from getting cracked