There seems to be a universal truth on the Internet: if you open up a service to the world, eventually somebody will come in and try to mess it up. If you have a comment section, trolls will come in and fill it with pedantic complaints (so we’ve heard anyway, naturally we have no experience with such matters). If you have a service where people can upload files, then it’s a guarantee that something unsavory is eventually going to take up residence on your server.

Unfortunately, that’s exactly what [Christian Haschek] found while developing his open source image hosting platform, PictShare. He was alerted to some unsavory pictures on PictShare, and after he dealt with them he realized these could be the proverbial tip of the iceberg. But there were far too many pictures on the system to check manually. He decided to build a system that could search for NSFW images using a trained neural network.

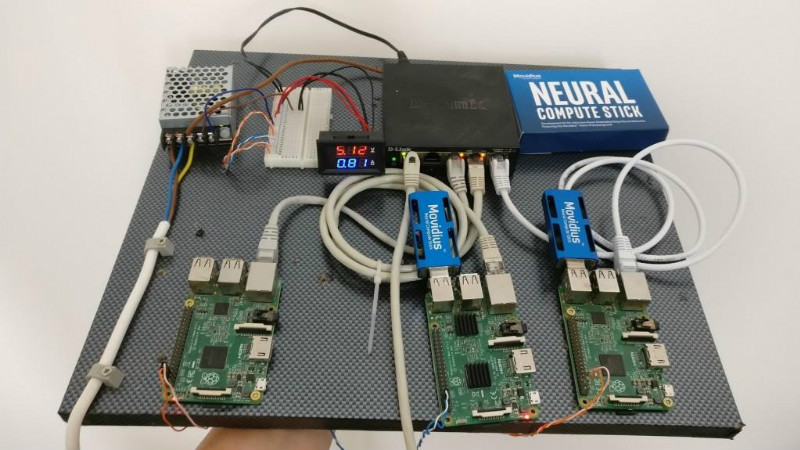

The nude-sniffing cluster is made up of a trio of Raspberry Pi computers, each with its own Movidius neural compute stick to perform the heavy lifting. [Christian] explains how he installed the compute stick SDK and Yahoo’s open source learning module for identifying questionable images, the aptly named open_nsfw. The system can be scaled up by adding more Pis, and since it’s all ARM processors and compute sticks, it’s energy efficient enough that the whole system can run off a 10 watt solar panel.
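The “scale by adding more Pis” idea boils down to splitting the image list into shards and giving one shard to each Pi-plus-stick worker. The sketch below is not [Christian]’s code. It assumes a hypothetical `score_fn(path)` that returns a 0-to-1 NSFW score (a stand-in for running open_nsfw on a compute stick), and it uses local threads in place of separate machines.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def shard(paths: List[str], n_workers: int) -> List[List[str]]:
    """Split the image list round-robin so each worker gets a near-equal share."""
    if n_workers < 1:
        raise ValueError("need at least one worker")
    return [paths[i::n_workers] for i in range(n_workers)]


def scan_cluster(
    paths: List[str],
    n_workers: int,
    score_fn: Callable[[str], float],  # hypothetical per-image scorer
) -> Dict[str, float]:
    """Score every image, one shard per worker, and merge the results."""

    def run_shard(chunk: List[str]) -> Dict[str, float]:
        # On a real cluster this would be a request to one Pi; here it is a local call.
        return {path: score_fn(path) for path in chunk}

    results: Dict[str, float] = {}
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        for partial in pool.map(run_shard, shard(paths, n_workers)):
            results.update(partial)
    return results


def fake_score(path: str) -> float:
    """Stand-in for open_nsfw: pretend anything named 'beach' is suspicious."""
    return 0.9 if "beach" in path else 0.1


if __name__ == "__main__":
    images = ["cat.jpg", "beach1.jpg", "dog.png", "beach2.jpg", "tree.jpg"]
    print(scan_cluster(images, n_workers=3, score_fn=fake_score))
```

Adding a fourth Pi in this model just means passing `n_workers=4`; the shards shrink and the per-worker load drops accordingly.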

After opening up the system with a public web interface where users can scan their own images, he offered his system’s services to a large image hosting provider to see what it would find. Shockingly, the system was able to find over 3,000 images that contained suspected child pornography. The appropriate authorities were notified, and [Christian] encourages anyone else looking to search their servers for this kind of content to drop him a line. Truly hacking for good.

This isn’t the first time we’ve seen Intel’s Movidius compute stick in the wild, and of course we’ve seen our fair share of Raspberry Pi clusters, from 750-node monsters down to builds which are far more show than go.

Impressive

Well done!!

I have a question. How did he *train* the network to recognize child pornography? Because, unless there was some progress in the field I don’t know about, you still need, at the very least, a validation data set.

One would imagine that if you asked the proper authorities, you could send your network to them and they could run it through their databases for you.

That way it’s properly trained, and at no point do you have such a horrible dataset in your possession.

My understanding is that he trained it to look for images containing nudity and pornography. I believe he still has to identify the child porn by looking through the total results manually, although you could probably train a second pass to look for age characteristics.

Because there’s a trained network available.

https://github.com/yahoo/open_nsfw

Pat, yes, that’s the model he used for pulling NSFW images. However, it is only trained for pornography (read the disclaimer); on its own it will not distinguish whether a minor is involved, and retraining would be difficult since Yahoo will not release the training materials.

I don’t think you get cognition at all; it is a process of abstraction, with layers. You can have as many networks as you need, with the final “meaning” being how they are wired up to feed into each other. NSFW(TRUE) + Age(<16) = …

You get the idea now? It is about determining context.

You’re right that I am not very good at this yet, but I am learning. So how would you add the + Age(<16) without access to the original materials that trained the model, and without having access to child porn? The article (and the linked blog post) says that this system only searches for NSFW (porn) content and that child porn was found within the results. It is not optimized for child porn, and as far as I can see it would be very difficult to "fine-tune" it to do so reliably. If I am wrong, please point me to a source so I can understand it better, either here in a post or to my name at gmail.

You have a separate pass that checks if there are children in the picture

seeep, I understand that you would use a second pass to determine whether there are children in the photo, but I am saying that you cannot use the Yahoo NSFW model for that, as it was never trained for age differentiation; you would need a new model. The reason I say it would be difficult even with a new model is that the second pass will be looking only at what has already been determined to be porn. If I train my model with 100k pictures of adults, a percentage of them porn, but all of my 100k pictures of children are porn-free, then it will be difficult to train given the context of the pictures.

Your Age(<16) process doesn't need porn in its dataset. It finds (or doesn't find) children in ANY picture you show it. If you show it the porn from the NSFW output, it can flag any images that have children in them.

You're right that NSFW doesn't have age differentiation. That doesn't matter. It is (presumably) basing its judgement on the presence of, for example, certain skin tones, shapes, and curves. Even trained on a large set of legal porn, the network is unlikely to learn to ignore something that looks like porn just because there's a child in it.
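The two-pass chaining described in this thread (NSFW(TRUE) + Age(<16)) can be sketched as below. This is an illustration of the commenters’ idea, not what PictShare does. `nsfw_score` and `estimate_youngest_age` are hypothetical callables (open_nsfw provides nothing like the second one), and the 0.8 threshold is an arbitrary assumption. The point it shows: the age model only runs on images the first pass already flagged, and it only needs to recognise people, not porn.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Verdict:
    path: str
    nsfw_score: float
    estimated_age: Optional[float]  # None when the second pass was skipped
    needs_review: bool              # True means "send to a human", not a final ruling


def two_pass(
    paths: List[str],
    nsfw_score: Callable[[str], float],             # hypothetical first-pass model
    estimate_youngest_age: Callable[[str], float],  # hypothetical second-pass model
    nsfw_threshold: float = 0.8,                    # illustrative cut-off
    age_limit: float = 16.0,                        # the "Age(<16)" from the thread
) -> List[Verdict]:
    verdicts = []
    for path in paths:
        score = nsfw_score(path)
        if score < nsfw_threshold:
            # First pass says "not NSFW": the age model never runs on this image.
            verdicts.append(Verdict(path, score, None, False))
            continue
        # Second pass, only on NSFW output: estimate the youngest person's age.
        age = estimate_youngest_age(path)
        verdicts.append(Verdict(path, score, age, age < age_limit))
    return verdicts
```

Because the second model is trained on ordinary (non-explicit) photos of people of known ages, nobody needs illegal material to build it; the NSFW pass supplies the context.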

There are some police investigators and network administrators out there with very depressing work. I remember an article I found a while back about a guy who had to pore through mountains of evidence to catch and prosecute a particularly violent pedophile ring. Harrowing stuff. I bet he ran up a very serious bar tab during that investigation.

The authorities have no shortage of data sets in evidence, unfortunately. I’m sure it could be used to train an AI at some point to take a bit of the burden off the poor investigators who have to handle this stuff in the course of prosecution, and hopefully even put a stop to it more quickly in a way that helps some of the victims.

Any AI advanced enough to detect all child porn is advanced enough to put an end to the practice. What will most likely happen is the same way spam is dealt with: the worst is filtered, and the “I need a human” cases go into a folder for inspection.
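The spam-style handling suggested above amounts to two thresholds on the classifier score: act automatically on the clear cases and route the uncertain middle band to a human. A minimal sketch, assuming a 0-to-1 score per image; the 0.95 and 0.5 cut-offs are made up for illustration and would have to be tuned against real review results.

```python
from typing import Dict, List


def triage(score: float, block_at: float = 0.95, review_at: float = 0.5) -> str:
    """Map one classifier score to an action (thresholds are illustrative)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if review_at > block_at:
        raise ValueError("review_at must not exceed block_at")
    if score >= block_at:
        return "block"   # confident enough to act on automatically
    if score >= review_at:
        return "review"  # the "I need a human" folder
    return "allow"


def sort_into_folders(scores: Dict[str, float]) -> Dict[str, List[str]]:
    """Group image paths by the action triage() assigns to their scores."""
    folders: Dict[str, List[str]] = {"block": [], "review": [], "allow": []}
    for path, score in scores.items():
        folders[triage(score)].append(path)
    return folders
```

Widening the gap between the two thresholds sends more images to human reviewers and fewer to automatic decisions, which is the same trade-off spam filters make.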

Bet he’s fun at parties.

You don’t end up worrying about NSFW images of yourself being posted on the internet without being fun at parties.

Parties? As in, Republican party?

https://youtube.com/watch?v=kBOEInQJHww

Unless I’m mistaken, in order for a neural net to recognize, for example, child pornography, it needs a training set containing child pornography?

So I don’t know for sure, and the linked article doesn’t explain it very clearly, but my assumption would be that a net trained to detect nudity would be cross-referenced with a net trained to detect juvenile faces. The end result would be (there’s surely room for error) nude images of children.

It’s entirely possible that the system detected the nude images. Then either moved them to another directory or flagged them for visual verification which led to the realization of their content.

Yes, and the FBI has such a database and neural net, but they use it mostly to ignore the bulk of what is already out there and look for new production rings to take down, rather than go after scans from a 1950s “Naturalist Magazine”.

I think you meant “naturist” rather than naturalist. You will find profoundly different images if you do a search on each type.

Zootopia made the same mistake.

FBI? You mean Google. I mean, in terms of numbers, I bet Google goes through many orders of magnitude more stuff with their scans and AI.

Yahoo provides a trained neural net (which is mentioned in the linked article).

https://github.com/yahoo/open_nsfw

And Amazon has protesters demanding they quit helping the cops with facial-scan AI.

For every image, there is a victim.

I fear that continued exposure to extreme images might cause some viewers to ‘cross to the other side of the screen’.

If you’re an alcoholic, you don’t want to surround yourself with opportunities to ‘fall off the wagon’; you admit you have a problem and seek help.

Hot dog / not hot dog?

Wiener / not wiener.

Lolz

Couldn’t somebody use this to find child porn?

It’s in the article.

Probably not in the way that you mean. This doesn’t search the internet; it searches an individual hosting service’s drives. If the host polices its content, either with this tool or some other, then there won’t be anything for someone to find with this. If the host does not police its content, then this tool wouldn’t be necessary to find it.

That’s not how the human mind works.

It’s just like, “letting off steam” by punching something, or yelling. It reinforces the behavior, emboldens the individual, and encourages them to seek ever more of it. It’s never just one and done, there will always need to be more, and more.

Besides, where the hell do you think CP comes from? Kids, not unlike your daughter, who are abused and exploited. Maybe consider that next time, before declaring them sacrificial lambs.

Everyone stay right where you are… I’m going to get popcorn.

LOL!

This is a great way to keep a site visibly clean and protect the image of a business’s hosting, but it still does nothing against those using file-trick camouflage. And how far does this go before it becomes a privacy issue?

I would say that, once you’ve uploaded your image to an “anonymous” image host, any expectation of privacy is gone.

And in the end, skynet decided it was “for the children”

What does it do about images that contain nudity but are not pornography?

E.g., the Napalm Girl scandal, where Facebook took down a famous and historically important photograph because it contained nudity.

Actually, I think that issue was it got classed as the child pornography, but your point is valid.

If somebody finds that photo pornographic, they have even more serious problems than pedophilia. Yikes.

But teaching an algorithm or neural net to recognize that context is certainly non-trivial. I wonder if we’ll ever have AI that lifelike.

“if you open up a service to the world, eventually somebody will come in and try to mess it up. ”

Usually by being bought up by some American company that then fucks it up with insane censorship and ‘monetization’. Or so I’ve heard…

“nude-sniffing cluster” .. Would never have expected that phrase to crop up in an anti-porn context. Points!

Check the Programmable Web, they have several APIs listed that would do exactly that.

(I hope this gets past the Moderator, ’cause it is on topic…)

https://www.programmableweb.com/api/sensitive-image-detection

https://www.programmableweb.com/api/vrate-nudity-detection

https://www.programmableweb.com/api/picpurify-realtime-porn-image-detection

https://www.programmableweb.com/api/nullnude

https://www.programmableweb.com/api/clearsite-content-filtering

https://www.programmableweb.com/api/imagevision-nuditysearch

https://www.programmableweb.com/api/nsureio

Additionally, some time ago I read that PornHub has something that can automatically detect and flag illegal images.

… “over 3,000 images that contained suspected child pornography”,

And over 500 billion of the normal kind…

So it has Raspberry Pis, solar power, a neural network (does he actually use these?), and he runs someone else’s porn detector. The ultimate clickbait: “buy me, I’m good”… Ta very much!

What’s the point of using Raspberry Pis instead of just, like… a regular computer?

There isn’t any, just as there is no reason to have a cluster or to solar-power it, since the compute is all done on the £80+ each compute stick thingies. It’s about how many Hackaday keywords you can get into a story.